- Understanding JSON Structure

- Tools for Converting JSON to Excel

- Using Excel’s Built-in Features for JSON Import

- Converting JSON to CSV as an Intermediate Step

- Handling Nested JSON Data

- Automating JSON to Excel Conversion

- Data Cleaning and Transformation Post-Conversion

- Validating Data Integrity After Conversion

- Dealing with Large JSON Files

- Common Errors and Troubleshooting

- Best Practices for Data Conversion

- Case Studies and Real-World Applications

Understanding JSON Structure

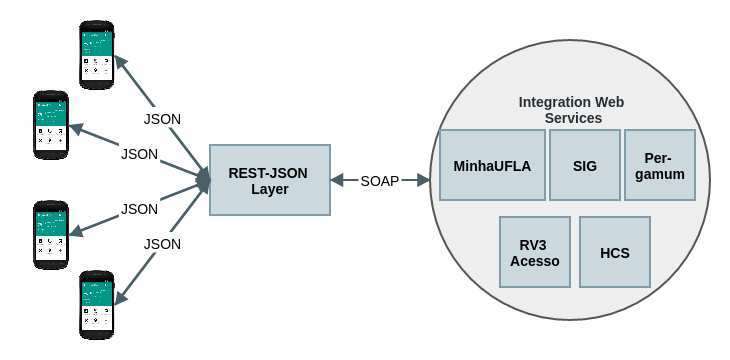

JSON (JavaScript Object Notation) is a lightweight data-interchange format that is easy for humans to read and write and easy for machines to parse and generate. It is commonly used to transmit data between a server and a web application. JSON is built from key-value pairs organized into objects and arrays. An object is an unordered collection of key-value pairs enclosed in curly braces {}, where each key is a string followed by a colon and a value. Values can be strings, numbers, booleans, null, arrays, or other objects. Arrays are ordered lists of values enclosed in square brackets [], and they can mix data types, including nested arrays or objects. This hierarchical structure makes JSON flexible and well suited to representing complex data models. JSON syntax is strict: keys and string values must use double quotes, trailing commas are not allowed, and values must follow the data type rules exactly. Its simplicity, readability, and support in most programming languages have made JSON a widely adopted standard for APIs, configuration, and data exchange. Understanding its structure is essential for developers working with modern web services, because it carries much of the communication between clients and servers in web-based applications.
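The following minimal Python sketch uses the standard json module to show how each JSON type maps to a Python type after parsing. It also shows that the strict syntax rules are enforced: single-quoted keys are rejected. The sample record and its field names are invented for illustration.

```python
import json

# A small JSON document: an object holding a string, a number, a boolean,
# null, an array, and a nested object.
raw = """
{
  "id": 101,
  "name": "Widget",
  "in_stock": true,
  "discontinued_on": null,
  "tags": ["tools", "hardware"],
  "dimensions": {"width_cm": 12.5, "height_cm": 4}
}
"""

record = json.loads(raw)

# json maps JSON types onto built-in Python types:
# object -> dict, array -> list, string -> str, number -> int/float,
# true/false -> bool, null -> None
for key, value in record.items():
    print(f"{key}: {type(value).__name__}")

# Nested values are reached by chaining key lookups.
print(record["dimensions"]["width_cm"])

# Strict syntax: single-quoted keys are not valid JSON.
try:
    json.loads("{'id': 1}")
except json.JSONDecodeError as exc:
    print("Invalid JSON:", exc.msg)
```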

Tools for Converting JSON to Excel

There are several tools available for converting JSON files into Excel spreadsheets, both online and offline. Some are dedicated converters, while others are built into larger data processing suites.

- Online Tools: Websites like JSON to Excel and ConvertCSV offer free converters that let users upload a JSON file and download an Excel file directly. These tools are straightforward but may struggle with large files or complex nested structures.

- Microsoft Power Query (Excel): This tool is built into Excel 2016 and later and imports and transforms data from many sources, including JSON. Power Query reads the JSON file and presents it in tabular form. Its transformation steps are highly customizable, which helps when dealing with complex data structures.

- Third-Party Software: Programs like Altair Monarch or Tableau Prep offer more advanced features, including data transformation and integration with other databases or data sources.

- Python Libraries: For those comfortable with scripting, libraries such as pandas can convert JSON into Excel programmatically, offering more flexibility, particularly for large or nested datasets.
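The following sketch illustrates the Python route by turning a small JSON array into a worksheet with pandas. It assumes pandas and openpyxl are installed; openpyxl is the engine pandas uses for .xlsx files. The order records and the file name orders.xlsx are hypothetical.

```python
import json

import pandas as pd  # assumes pandas and openpyxl are installed

# Hypothetical input: a JSON array of flat records.
orders_json = """
[
  {"order_id": 1, "customer": "Ana", "total": 19.99},
  {"order_id": 2, "customer": "Ben", "total": 5.50},
  {"order_id": 3, "customer": "Chen", "total": 42.00}
]
"""

records = json.loads(orders_json)

# Each JSON object becomes one row; its keys become the column headers.
df = pd.DataFrame(records)

# index=False keeps pandas' row index out of the worksheet.
df.to_excel("orders.xlsx", sheet_name="Orders", index=False)
```

For a JSON file on disk that holds a flat array of objects, `pd.read_json("orders.json")` can replace the `json.loads` and `DataFrame` steps. Nested data needs flattening first.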


Using Excel’s Built-in Features for JSON Import

Excel offers built-in features for importing JSON data, making it easier to analyze and manipulate structured information without advanced programming skills. In Excel 2016 and later, JSON files can be imported directly through Power Query. Go to the “Data” tab, select “Get Data,” choose “From File,” and then “From JSON.” Excel parses the file and opens it in the Power Query Editor, where you can navigate the nested structure, transform data, and expand records or lists as needed. This lets users flatten complex JSON into a tabular format suitable for analysis. Power Query also provides options to filter, pivot, merge, or reshape data before loading it into a worksheet.

Power Query saves each transformation as a step, so the query can be refreshed when the source JSON file changes. You can refresh manually, or set the query to refresh automatically when the workbook opens or at a set interval, which keeps reports up to date. This built-in integration reduces the need for third-party tools or manual conversion. By using Excel’s JSON import features, professionals can streamline workflows and improve productivity when handling structured data from APIs, databases, or external files.

Converting JSON to CSV as an Intermediate Step

Converting JSON to CSV is often used as an intermediate step when structured data needs to be analyzed, imported into spreadsheets, or shared across platforms. JSON is well suited to storing nested and complex data, but CSV offers a flat, tabular format that is more compatible with tools like Excel, databases, and data visualization software. Here are six key benefits of this conversion:

- Simplifies Data Structure: JSON often contains nested objects or arrays, which can be difficult to analyze. Converting to CSV flattens the data into rows and columns, with nested fields mapped to their own columns.

- Enhances Compatibility: CSV files are widely supported across different tools, including Excel, Google Sheets, and many data analytics platforms.

- Eases Data Import: Many systems and software applications accept CSV files for bulk data import, making them ideal for integration tasks.

- Improves Human Readability: CSV’s plain rows and columns are easier to scan than JSON’s braces and brackets, especially for users unfamiliar with JSON.

- Supports Batch Processing: Large volumes of data are easy to process row by row in CSV format using scripts or spreadsheet tools.

- Facilitates Data Sharing: CSV files are lightweight and easy to share via email or cloud storage, and they open without special software.
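The following minimal standard-library sketch shows the flattening that CSV requires:

- Nested objects become dotted column names such as address.city.
- Lists are kept as JSON text so nothing is silently dropped.
- Records with missing fields get empty cells.

The sample users and the users.csv path are hypothetical. Storing lists as text is one design choice among several; another is to write one row per list item.

```python
import csv
import json


def flatten(obj, parent_key="", sep="."):
    """Flatten nested dicts into one level with dotted keys.
    Lists are stored as JSON strings so their contents are not lost."""
    items = {}
    for key, value in obj.items():
        new_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            items.update(flatten(value, new_key, sep))
        elif isinstance(value, list):
            items[new_key] = json.dumps(value)
        else:
            items[new_key] = value
    return items


def json_to_csv(records, csv_path):
    """Write flattened records to csv_path and return the column list."""
    rows = [flatten(r) for r in records]
    # Collect every column seen in any record, in first-seen order.
    fieldnames = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    # "utf-8-sig" adds a byte-order mark, which helps Excel detect UTF-8
    # when the CSV is opened directly.
    with open(csv_path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)  # missing keys are written as empty cells
    return fieldnames


users = [
    {"id": 1, "name": "Ana", "address": {"city": "Lisbon", "zip": "1000"}},
    {"id": 2, "name": "Ben", "address": {"city": "Oslo"}, "roles": ["admin"]},
]
columns = json_to_csv(users, "users.csv")
print(columns)
```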

Handling Nested JSON Data

Handling nested JSON data requires a clear understanding of its hierarchical structure, in which values can be objects or arrays inside other objects or arrays. This nesting is common in real-world sources such as API responses and configuration files, where related data is grouped logically. Working with nested JSON means parsing and navigating each level of the hierarchy. In code, this typically involves chained key lookups or dot notation. In Excel’s Power Query, nested structures must be expanded through transformation steps to flatten the data into a tabular format. This may mean expanding records or lists several times to reach the fields you need. In Python, the json module loads nested JSON, and pandas’ json_normalize() function flattens it into a table. Understanding the nesting helps preserve relationships between entities during transformation. Arrays within objects, and objects within arrays, deserve special attention because they may require iteration or mapping logic. Expanding an array typically produces one row per element. Handling nested JSON properly ensures accurate data representation and is crucial for analysis, reporting, or loading data into relational databases. Mastering this skill lets developers and analysts extract value from complex data structures.
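The following sketch applies pandas’ json_normalize() to a hypothetical API response in which each customer has a nested address object and an orders array. It assumes pandas is installed.

- Called plainly, json_normalize() produces one row per customer.
- With record_path and meta, it produces one row per order and carries the parent customer fields down into each row, which preserves the relationship between customers and their orders.

```python
import pandas as pd  # assumes pandas is installed

# Hypothetical API response: nested address object plus an orders array.
customers = [
    {
        "id": 1,
        "name": "Ana",
        "address": {"city": "Lisbon", "country": "PT"},
        "orders": [{"order_id": "A1", "total": 20.0}, {"order_id": "A2", "total": 35.5}],
    },
    {
        "id": 2,
        "name": "Ben",
        "address": {"city": "Oslo", "country": "NO"},
        "orders": [{"order_id": "B1", "total": 12.0}],
    },
]

# One row per customer: nested objects become dotted columns such as
# address.city; the orders list stays in a single column.
customers_df = pd.json_normalize(customers)

# One row per order: record_path walks into the array, and meta copies
# parent fields (including the nested address.city) onto each order row.
orders_df = pd.json_normalize(
    customers,
    record_path="orders",
    meta=["id", "name", ["address", "city"]],
)
print(orders_df)
```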

Automating JSON to Excel Conversion

Automating JSON to Excel conversion streamlines the process of turning structured data into a format that is easy to analyze and share. This is especially useful for businesses and developers who regularly work with API responses or receive data in JSON format. Automation reduces manual effort, minimizes errors, and saves time. Here are six key points about automating the conversion process:

- Use of Scripting Languages: Scripting languages such as Python can automate JSON to Excel conversion with libraries such as pandas, openpyxl, or xlsxwriter, enabling flexible and repeatable workflows.

- Integration with APIs: Automation scripts can fetch JSON data directly from APIs and convert it to Excel as soon as it arrives, removing the need for manual downloads.

- Scheduled Automation: Tasks can be scheduled with tools like Windows Task Scheduler or cron jobs to run conversions at regular intervals, so updated data is always available.

- Error Handling and Logging: Automated processes can include error detection and logging, making it easier to track issues during data transformation.

- Dynamic Data Mapping: Automation allows dynamic handling of varying JSON structures, with logic that adapts to nested fields or fields that change over time.

- Output Customization: Scripts can format Excel output, apply styling, and create multiple sheets or summaries automatically, improving data presentation.
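Several of the points above fit into one small function: scripted conversion, error handling and logging, dynamic mapping, and multi-sheet output. This is a sketch under several assumptions:

- pandas and openpyxl are installed.
- The input file holds JSON records.
- The function name convert_json_to_excel and the sheet names are hypothetical.

```python
import json
import logging
from pathlib import Path

import pandas as pd  # assumes pandas and openpyxl are installed

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("json_to_excel")


def convert_json_to_excel(json_path, xlsx_path):
    """Convert a JSON file of records into a workbook with a Data sheet and
    a Summary sheet. Returns True on success, False if the input is unreadable."""
    try:
        records = json.loads(Path(json_path).read_text(encoding="utf-8"))
    except (OSError, json.JSONDecodeError) as exc:
        log.error("Could not read %s: %s", json_path, exc)
        return False

    # json_normalize builds columns from whatever nested fields are present,
    # so records with varying structure still produce a table.
    df = pd.json_normalize(records)
    summary = pd.DataFrame(
        {"metric": ["rows", "columns"], "value": [len(df), len(df.columns)]}
    )

    # Write both sheets into a single workbook.
    with pd.ExcelWriter(xlsx_path, engine="openpyxl") as writer:
        df.to_excel(writer, sheet_name="Data", index=False)
        summary.to_excel(writer, sheet_name="Summary", index=False)

    log.info("Wrote %d rows to %s", len(df), xlsx_path)
    return True
```

A scheduler such as cron or Windows Task Scheduler would then only need to run a script that calls this function with the input and output paths. The boolean return value makes it easy to exit with a non-zero status on failure.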

Data Cleaning and Transformation Post-Conversion

Once JSON data is converted to Excel, cleaning and transformation are essential to ensure the data is accurate, usable, and relevant for analysis or reporting. Converted JSON often has problems such as missing values, leftover nested structures, redundant fields, or inconsistent formatting, which must be addressed before meaningful insights can be drawn. Cleaning typically involves identifying and removing duplicates, handling null or empty values, correcting data types, and standardizing formats such as dates or numbers. Transformation tasks include splitting or merging columns, flattening hierarchical data, renaming headers for clarity, and creating calculated fields to derive new insights. Tools like Excel’s Power Query and Python’s pandas library can automate and scale these tasks. For example, nested lists or objects from JSON can be expanded into separate rows or columns, and conditional logic can be applied to categorize or filter data. Clean, well-structured data matters not only for accuracy but also for performance in analytics tools and dashboards. A well-executed cleaning process reduces noise and makes trends easier to see. It also lays the groundwork for data-driven decisions in applications ranging from business intelligence to machine learning.
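The following short pandas sketch shows typical post-conversion cleaning: dropping duplicates, renaming a flattened header, fixing types, standardizing text, and adding a calculated flag. The column names and values are hypothetical. The setting `errors="coerce"` turns unparseable entries such as "n/a" into missing values instead of raising an error, which is one way to surface bad data for review. It assumes pandas is installed.

```python
import pandas as pd  # assumes pandas is installed

# Hypothetical rows as they might look right after flattening JSON:
# everything arrives as text, with one duplicate and some bad values.
raw = pd.DataFrame({
    "order_id": ["1", "2", "2", "3"],
    "order_date": ["2024-01-05", "2024-01-06", "2024-01-06", None],
    "amount": ["19.99", "5.5", "5.5", "n/a"],
    "customer.name": [" Ana ", "Ben", "Ben", "chen"],
})

clean = (
    raw.drop_duplicates()                                   # remove exact duplicate rows
       .rename(columns={"customer.name": "customer_name"})  # clearer header
       .assign(
           order_id=lambda d: pd.to_numeric(d["order_id"]),
           order_date=lambda d: pd.to_datetime(d["order_date"], errors="coerce"),
           amount=lambda d: pd.to_numeric(d["amount"], errors="coerce"),  # "n/a" -> NaN
           customer_name=lambda d: d["customer_name"].str.strip().str.title(),
       )
)

# Calculated field: flag rows with no usable amount for follow-up.
clean["amount_missing"] = clean["amount"].isna()
print(clean)
```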

Validating Data Integrity After Conversion

Validating data integrity after converting JSON to Excel is a critical step to ensure that the transformation has preserved the accuracy, completeness, and structure of the original data. During conversion, especially with nested or complex JSON, there is a risk of data loss, truncation, misalignment, or incorrect formatting. Validation involves cross-checking key fields, verifying data types, and confirming that all records were transferred and flattened without changing their meaning. It also includes checking that unique identifiers remain consistent, relationships between fields are maintained, and no rows or columns were added or lost. Excel formulas, pivot tables, or scripts in Python or R can perform automated checks such as row counts, checksum comparisons, or value range validations. Visual inspections and sample audits are also useful for spotting anomalies or structural issues. If the data comes from an API or database, comparing the original JSON payloads with the final Excel output helps catch discrepancies early. Maintaining data integrity ensures that subsequent analysis, reporting, and decision-making rest on reliable information. This makes validation a fundamental part of any data conversion workflow.
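The automated checks mentioned above can be combined into a single function that compares the source records with the converted table: row counts, identifier consistency, and a checksum-style total. The field names are hypothetical. In a real workflow, the converted table would come from reading the output workbook, for example with `pd.read_excel`. Here one row is dropped on purpose so the checks have something to report. It assumes pandas is installed.

```python
import pandas as pd  # assumes pandas is installed


def validate_conversion(source_records, converted, id_field, sum_field):
    """Compare source JSON records with a converted DataFrame.
    Returns a list of problems; an empty list means every check passed."""
    problems = []

    # 1. Row count: each JSON record should map to exactly one row.
    if len(converted) != len(source_records):
        problems.append(f"row count {len(converted)} != source {len(source_records)}")

    # 2. Identifiers: same set of IDs, and no duplicates introduced.
    source_ids = {r[id_field] for r in source_records}
    converted_ids = set(converted[id_field])
    if source_ids != converted_ids:
        problems.append(
            f"ID mismatch: missing={source_ids - converted_ids}, "
            f"extra={converted_ids - source_ids}"
        )
    if converted[id_field].duplicated().any():
        problems.append("duplicate IDs in converted data")

    # 3. Checksum-style comparison: totals of a numeric field should match.
    source_total = round(sum(r[sum_field] for r in source_records), 2)
    converted_total = round(float(converted[sum_field].sum()), 2)
    if source_total != converted_total:
        problems.append(f"{sum_field} total {converted_total} != source {source_total}")

    return problems


source = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 2.5}, {"id": 3, "amount": 7.25}]
# Stand-in for pd.read_excel("output.xlsx"); the last record was lost.
converted = pd.DataFrame(source[:2])
for problem in validate_conversion(source, converted, "id", "amount"):
    print(problem)
```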


Dealing with Large JSON Files

Working with large JSON files can be challenging because of memory limits, long processing times, and complex nested structures. These files often come from APIs, logs, or data exports and may contain thousands or even millions of records. Handling them efficiently requires strategies and tools that manage size and complexity without exhausting memory or slowing the system down. Here are six key approaches:

- Use Streaming Parsers: Instead of loading the entire file into memory, use streaming parsers such as Python’s ijson or Java’s Jackson streaming API to read the file incrementally, which keeps memory usage low.

- Split the File: Divide the large JSON file into smaller chunks with command-line tools or scripts, so each piece can be processed and analyzed separately.

- Leverage Databases: Load large JSON files into document databases such as MongoDB, or into relational databases with native JSON support such as PostgreSQL. Both are designed for storing and querying JSON structures.

- Optimize Data Structure: Remove deeply nested or redundant data from the JSON before processing to simplify transformation and improve performance.

- Use Efficient Tools: Power Query, Python (with pandas or Dask), and big data platforms such as Apache Spark are built to handle large datasets.

- Perform Incremental Processing: If the data is updated frequently, process only new or changed records rather than reprocessing the entire file each time.
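The following sketch combines streaming and splitting using only the standard library. It assumes the input is JSON Lines (one JSON object per line), which can be parsed line by line without any extra library. A single giant JSON array would instead need a streaming parser such as ijson. Capping the chunk size keeps output files manageable and, if needed, under Excel’s limit of 1,048,576 rows per worksheet. The function names and the chunk file naming scheme are hypothetical.

```python
import csv
import json
from itertools import islice


def iter_json_lines(path):
    """Yield one record at a time from a JSON Lines file, so memory use
    stays roughly constant regardless of file size."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                yield json.loads(line)


def split_to_csv_chunks(path, fields, chunk_size, prefix="chunk"):
    """Write records to numbered CSV files of at most chunk_size rows each.
    Fields not listed in `fields` are ignored. Returns the files written."""
    records = iter_json_lines(path)
    written = []
    while True:
        batch = list(islice(records, chunk_size))  # only one chunk in memory
        if not batch:
            break
        out = f"{prefix}_{len(written) + 1}.csv"
        with open(out, "w", newline="", encoding="utf-8") as f:
            writer = csv.DictWriter(f, fieldnames=fields, extrasaction="ignore")
            writer.writeheader()
            writer.writerows(batch)
        written.append(out)
    return written
```

For example, `split_to_csv_chunks("events.jsonl", ["id", "type", "timestamp"], 500_000)` would write chunk_1.csv, chunk_2.csv, and so on. The file and field names in this call are illustrative.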

Common Errors and Troubleshooting

When converting JSON to Excel, or working with JSON data in general, several common errors can occur, usually because of structural complexity, data inconsistencies, or tool limitations. One frequent issue is malformed JSON, such as missing brackets, commas, or quotation marks, which prevents parsers from reading the file. Another involves incorrect data types. For example, numeric-looking strings such as IDs with leading zeros may be converted to numbers, losing the zeros, and dates may be misformatted during conversion. Nested structures that are not properly flattened can lead to missing or misaligned data in Excel. Large JSON files may also cause Excel or scripts to crash by running out of memory, or exceed Excel’s limit of 1,048,576 rows per worksheet. Encoding issues can also produce unreadable text, especially with special characters in files not saved as UTF-8.

Troubleshooting begins with validating the JSON using an online validator or a tool like jsonlint to catch syntax errors. For structural issues, the Power Query Editor in Excel or Python’s pandas library can help identify and reshape problematic data. Logging errors, checking row and column counts, and comparing a sample of the original JSON against the converted Excel data are useful practices for spotting discrepancies. Proper error handling in scripts and a clear understanding of the data schema are essential for avoiding and fixing issues during conversion and analysis.
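Python’s json module can report where a file breaks, which is often enough to locate a syntax error without an online validator. The following sketch turns a JSONDecodeError into a line and column message. It also opens files with the utf-8-sig codec, so that a leading byte-order mark, which some Windows tools write, does not cause a parse error. The function names are hypothetical.

```python
import json


def check_json(text):
    """Return None if text is valid JSON, otherwise a readable error location."""
    try:
        json.loads(text)
    except json.JSONDecodeError as exc:
        # lineno/colno mark where the parser gave up, which is usually at or
        # just after the real mistake (for example, a missing comma).
        return f"line {exc.lineno}, column {exc.colno}: {exc.msg}"
    return None


def load_json_file(path):
    # "utf-8-sig" strips a leading UTF-8 byte-order mark if present; with plain
    # "utf-8" the mark stays in the text and json.load raises an error.
    with open(path, encoding="utf-8-sig") as f:
        return json.load(f)


# The comma after "id": 1 is missing.
broken = '{\n  "id": 1\n  "name": "Ana"\n}'
print(check_json(broken))
```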


Best Practices for Data Conversion

Following best practices for data conversion is essential for accuracy, efficiency, and consistency when transforming data from one format to another, such as from JSON to Excel. Start with a clear understanding of the source data structure, including any nested objects or arrays, so you can plan the flattening and field mapping. Always validate the JSON before conversion, using syntax checkers or schema validation tools, to avoid processing errors. Use automation tools or scripts for repetitive conversions to reduce manual errors and improve efficiency. Keep naming conventions and data types consistent during the transformation to support clarity and usability. Implement logging and error handling in automated workflows to capture and address issues during conversion. Test with small samples before running full conversions to catch problems early. After conversion, validate the output by cross-checking record counts, field integrity, and data formatting. Where possible, document the conversion process, including transformation rules and scripts, to ensure reproducibility and transparency. Following these practices helps ensure that converted data remains accurate, reliable, and ready for analysis or integration with other systems.
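Two of these practices, validating before conversion and testing on a small sample, can be combined into a lightweight pre-flight check. This is a hand-rolled sketch rather than a full schema validator; a dedicated JSON Schema library would be more thorough. The expected fields, their types, and the sample records are all hypothetical.

```python
import json
from itertools import islice

# Hypothetical expected schema: field name -> allowed Python type(s) after json.loads.
EXPECTED_FIELDS = {"id": int, "email": str, "amount": (int, float)}


def matches_type(value, expected):
    # bool is a subclass of int in Python, so True/False would otherwise
    # pass as numbers; accept a bool only when bool is explicitly expected.
    if isinstance(value, bool):
        allowed = expected if isinstance(expected, tuple) else (expected,)
        return bool in allowed
    return isinstance(value, expected)


def check_sample(records, schema, sample_size=100):
    """Check the first sample_size records against a field/type schema.
    Returns a list of (record_index, problem) tuples; empty means no issues."""
    issues = []
    for i, rec in enumerate(islice(records, sample_size)):
        for field, expected in schema.items():
            if field not in rec:
                issues.append((i, f"missing '{field}'"))
            elif not matches_type(rec[field], expected):
                issues.append((i, f"'{field}' has type {type(rec[field]).__name__}"))
    return issues


sample = json.loads(
    '[{"id": 1, "email": "a@example.com", "amount": 9.5},'
    ' {"id": "2", "email": "b@example.com"},'
    ' {"id": 3, "email": "c@example.com", "amount": true}]'
)
for index, problem in check_sample(sample, EXPECTED_FIELDS):
    print(index, problem)
```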

Case Studies and Real-World Applications

Converting JSON to Excel has wide applications across industries, often simplifying complex data handling and analysis. In finance, a common use case is converting API data from stock market feeds into Excel for analysis. Investors and analysts use automated processes to fetch real-time JSON data on stock prices and convert it to Excel, where they can quickly perform calculations, track trends, and visualize performance. In healthcare, electronic health record (EHR) data is often exchanged in JSON format, and converting these records to Excel helps streamline reporting, patient analysis, and regulatory compliance. Similarly, e-commerce businesses rely on JSON data from customer interactions, transactions, and inventory management systems. Converting this data into Excel lets them dig into sales trends, product performance, and customer behavior to inform marketing and operational decisions. In research, scientists and academic institutions often handle large JSON files of experimental data and convert them into Excel for statistical analysis and sharing results. Government organizations also use JSON-to-Excel conversion for open data from public APIs, enabling easy access and reporting. These examples show how the conversion process helps professionals make data-driven decisions, improve operational efficiency, and strengthen strategic planning across sectors.