- Introduction to TensorFlow

- Setting Up TensorFlow Environment

- TensorFlow Basics: Tensors and Operations

- Graphs and Sessions in TensorFlow

- Building a Neural Network

- Training and Evaluation

- Handling Datasets with tf.data

- TensorBoard for Visualization

- TensorFlow Lite and TensorFlow.js

- Case Study: MNIST Classification

- Best Practices and Tips

- Conclusion

Introduction to TensorFlow

TensorFlow is an open-source, end-to-end machine learning (ML) platform developed by the Google Brain team. Since its launch in 2015, TensorFlow has become one of the most popular deep learning frameworks in the world, used by researchers, engineers, and companies alike. It is designed to facilitate the development and deployment of ML-powered applications across various platforms: desktop, mobile, web, and embedded systems. TensorFlow supports a wide range of tasks such as classification, regression, object detection, natural language processing (NLP), and generative modeling. It offers flexibility with a comprehensive ecosystem including high-level APIs (like Keras), visualization tools (like TensorBoard), and deployment utilities (like TensorFlow Lite and TensorFlow.js).

Setting Up TensorFlow Environment

To begin working with TensorFlow, it’s crucial to set up a reliable development environment. TensorFlow is used from Python and can be installed on Windows, macOS, and Linux. The steps below create an isolated virtual environment, install the package, and verify the installation.

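- # Step 1: Ensure a supported Python 3 version is installed (the supported range depends on the TensorFlow release; check the official install guide)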

- # Download from the official Python website or use conda

- # Step 2: Create a virtual environment

- python -m venv tf_env

- source tf_env/bin/activate # macOS/Linux

- tf_env\Scripts\activate # Windows

- # Step 3: Install TensorFlow

- # For CPU-only support:

- pip install tensorflow

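- # For GPU support (the separate tensorflow-gpu package is deprecated; the main package has included GPU support since TensorFlow 2.1):

- pip install "tensorflow[and-cuda]" # Linux, recent releases: also installs matching CUDA and cuDNN libraries

- # On other setups, install CUDA and cuDNN versions that match your TensorFlow release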

- # Step 4: Verify installation

- import tensorflow as tf

- print(tf.__version__)
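As a complement to the version check above, the following minimal sketch also reports whether TensorFlow can see a GPU. It assumes only that TensorFlow is installed; an empty list simply means computation will run on the CPU.

```python
import tensorflow as tf

# List the GPUs that TensorFlow can use; the list is empty on CPU-only setups.
gpus = tf.config.list_physical_devices("GPU")

print("TensorFlow version:", tf.__version__)
print("GPUs visible to TensorFlow:", len(gpus))
```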

TensorFlow Basics: Tensors and Operations

Tensors are the core data structure in TensorFlow: n-dimensional arrays similar to NumPy arrays. Operations such as tf.matmul take tensors as input and return new tensors.

- import tensorflow as tf

- # Creating Tensors

- scalar = tf.constant(5)

- vector = tf.constant([1, 2, 3])

- matrix = tf.constant([[1, 2], [3, 4]])

- # Tensor Operations

- a = tf.constant([[1, 2]])

- b = tf.constant([[3], [4]])

- result = tf.matmul(a, b) # (1x2) times (2x1) gives a 1x1 tensor: [[11]]

TensorFlow 2.x evaluates operations eagerly by default and supports automatic differentiation. Wrapping code in tf.function defers execution to a computational graph, which TensorFlow can optimize for efficiency.
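To make automatic differentiation concrete, here is a minimal sketch using tf.GradientTape. The function y = x² + 2x and the variable names are illustrative choices, not part of the original tutorial.

```python
import tensorflow as tf

# Matrix product of a (1x2) and a (2x1) tensor.
a = tf.constant([[1, 2]])
b = tf.constant([[3], [4]])
product = tf.matmul(a, b)          # 1*3 + 2*4 = 11

# Automatic differentiation: record operations on a variable,
# then ask the tape for dy/dx.
x = tf.Variable(3.0)
with tf.GradientTape() as tape:
    y = x * x + 2.0 * x            # y = x^2 + 2x
grad = tape.gradient(y, x)         # dy/dx = 2x + 2, which is 8 at x = 3

print(product.numpy())             # [[11]]
print(float(grad))                 # 8.0
```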

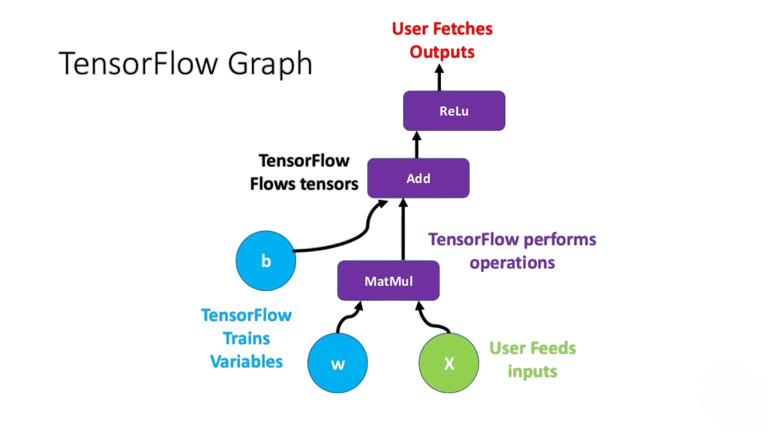

Graphs and Sessions in TensorFlow

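In TensorFlow 1.x, computations required explicitly defining graphs and then running them in sessions. TensorFlow 2.x uses eager execution by default, and the tf.function decorator converts a Python function into a graph when graph execution is wanted.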

- @tf.function

- def compute(x, y):

-     return x * y + 2

Tracing the function into a graph allows TensorFlow to optimize its operations and run them with better performance.
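The sketch below calls a tf.function and then inspects the graph that TensorFlow traced for it. The input values are arbitrary, and the exact list of operations in the graph may vary between TensorFlow versions.

```python
import tensorflow as tf

@tf.function
def compute(x, y):
    # Traced into a graph the first time it is called with a new input signature.
    return x * y + 2

x = tf.constant(3)
y = tf.constant(4)
result = compute(x, y)             # 3 * 4 + 2

# A concrete function exposes the traced graph for inspection.
concrete = compute.get_concrete_function(x, y)
op_types = [op.type for op in concrete.graph.get_operations()]

print(int(result))                 # 14
print(op_types)                    # operation types recorded in the graph
```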

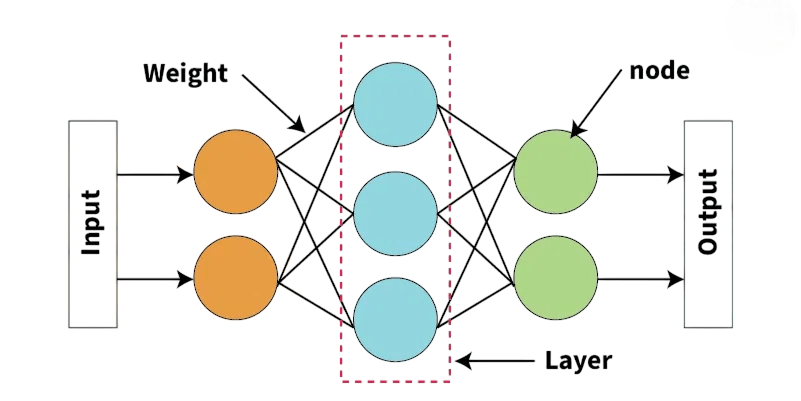

Building a Neural Network

TensorFlow uses Keras as its official high-level API. You can define a neural network with either the Sequential API, which stacks layers in order, or the functional API, which connects layers explicitly and supports more complex topologies.

- from tensorflow.keras.models import Sequential

- from tensorflow.keras.layers import Dense

- model = Sequential([

-     Dense(64, activation='relu', input_shape=(784,)),

-     Dense(10, activation='softmax')

- ])

This model consists of a dense hidden layer with ReLU activation and an output layer with softmax for classification.
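For comparison, the same architecture can be written with the functional API. This is a minimal sketch; the variable names are illustrative.

```python
import tensorflow as tf

# Same architecture as the Sequential example, with layers connected explicitly.
inputs = tf.keras.Input(shape=(784,))
hidden = tf.keras.layers.Dense(64, activation="relu")(inputs)
outputs = tf.keras.layers.Dense(10, activation="softmax")(hidden)
functional_model = tf.keras.Model(inputs=inputs, outputs=outputs)

# Parameters: 784*64 + 64 = 50,240 (hidden) and 64*10 + 10 = 650 (output).
print(functional_model.count_params())     # 50890

# A batch of two flattened images yields two rows of 10 class probabilities.
probs = functional_model(tf.zeros((2, 784)))
print(probs.shape)                         # (2, 10)
```

The functional form becomes useful once a model needs multiple inputs, multiple outputs, or skip connections, none of which a Sequential stack can express.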

Training and Evaluation

- # Compile the Model

- model.compile(optimizer='adam',

-               loss='sparse_categorical_crossentropy',

-               metrics=['accuracy'])

- # Train the Model (train_images and train_labels are loaded in the MNIST case study)

- model.fit(train_images, train_labels, epochs=5, validation_split=0.2)

- # Evaluate the Model

- model.evaluate(test_images, test_labels)

You can use metrics such as accuracy, precision, recall, and F1-score to evaluate model performance.
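To show how these metrics relate to each other, here is a small plain-Python sketch for the binary case. The helper name binary_prf and the sample labels are hypothetical; in a Keras workflow, built-in metrics such as tf.keras.metrics.Precision and tf.keras.metrics.Recall can be passed to model.compile instead.

```python
def binary_prf(y_true, y_pred):
    """Return (precision, recall, f1) for binary labels, where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return precision, recall, f1

# Hypothetical labels: 3 true positives, 1 false positive, 1 false negative.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]

precision, recall, f1 = binary_prf(y_true, y_pred)
print(precision, recall, f1)   # 0.75 0.75 0.75
```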

Handling Datasets with tf.data

The tf.data API provides scalable input pipelines for handling datasets, an essential component of efficient model training and deployment.

- # Creating a Dataset

- dataset = tf.data.Dataset.from_tensor_slices((train_images, train_labels))

- dataset = dataset.shuffle(10000).batch(32).prefetch(tf.data.AUTOTUNE)

- # Loading External Data

- import pandas as pd

- df = pd.read_csv('data.csv')

- dataset = tf.data.Dataset.from_tensor_slices((df['features'], df['labels']))

This approach enables efficient data manipulation with batching, shuffling, and prefetching.
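The effect of batching can be seen by iterating over a small pipeline. The sketch below uses synthetic NumPy arrays as a stand-in for real training data; the array sizes are arbitrary.

```python
import numpy as np
import tensorflow as tf

# Synthetic stand-in for (train_images, train_labels): 100 samples, 784 features.
features = np.random.rand(100, 784).astype("float32")
labels = np.random.randint(0, 10, size=100)

dataset = (
    tf.data.Dataset.from_tensor_slices((features, labels))
    .shuffle(buffer_size=100)          # buffer covers the whole dataset
    .batch(32)                         # 100 samples -> 3 full batches + 1 partial
    .prefetch(tf.data.AUTOTUNE)        # overlap data preparation with training
)

batch_shapes = [tuple(x.shape) for x, _ in dataset]
print(batch_shapes)   # [(32, 784), (32, 784), (32, 784), (4, 784)]
```

A dataset built this way can be passed directly to model.fit in place of separate feature and label arrays.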

TensorBoard for Visualization

TensorBoard helps visualize training metrics, graphs, histograms, and images.
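A common way to feed TensorBoard is the Keras TensorBoard callback. The following is a minimal sketch on synthetic data; the temporary log directory, the tiny model, and the data shapes are all illustrative assumptions.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

log_dir = tempfile.mkdtemp()   # hypothetical log location

# Synthetic data: 64 samples, 8 features, 2 classes.
x = np.random.rand(64, 8).astype("float32")
y = np.random.randint(0, 2, size=64)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(4, activation="relu"),
    tf.keras.layers.Dense(2, activation="softmax"),
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# The callback writes event files that TensorBoard reads.
tb_callback = tf.keras.callbacks.TensorBoard(log_dir=log_dir)
history = model.fit(x, y, epochs=2, batch_size=16,
                    callbacks=[tb_callback], verbose=0)

print("Log directory contents:", os.listdir(log_dir))
```

After training, running `tensorboard --logdir` with the log directory as its argument starts the dashboard locally.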

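Saving and Restoring Models

- # Saving the Full Model

- model.save('model.keras') # native Keras format; TensorFlow 2.15 and earlier also accept a directory path, which writes a SavedModel

- model.export('model_path') # recent releases: writes a SavedModel directory for serving or conversion

- # Loading the Model

- new_model = tf.keras.models.load_model('model.keras')

- # Saving Only Weights

- model.save_weights('model.weights.h5') # recent Keras releases require the .weights.h5 suffix

- model.load_weights('model.weights.h5')

These tools help with version control, experimentation, and model deployment.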

TensorFlow Lite and TensorFlow.js

TensorFlow extends ML capabilities to mobile and web through TensorFlow Lite, for on-device inference, and TensorFlow.js, for browser-based execution. Both tools convert a trained model from the SavedModel format into a form suited to the target platform.

- # TensorFlow Lite

- # For embedded devices like Android, Raspberry Pi:

- tflite_convert --saved_model_dir=model_path --output_file=model.tflite

- # You can then use the .tflite model with a lightweight interpreter on mobile devices

- # TensorFlow.js

- # Deploy models in the browser with JavaScript:

- tensorflowjs_converter --input_format=tf_saved_model model_path tfjs_model

This is useful for interactive, real-time web applications without server dependency.
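The TensorFlow Lite conversion can also be done from Python rather than the command line. The sketch below is one possible approach under stated assumptions: it converts a tiny hypothetical tf.Module (named Scale here) through tf.lite.TFLiteConverter.from_concrete_functions, so it does not depend on a saved model on disk. For a SavedModel directory, tf.lite.TFLiteConverter.from_saved_model is the Python counterpart of the command shown above.

```python
import os
import tempfile

import tensorflow as tf

class Scale(tf.Module):
    """Hypothetical stand-in model: y = 2x + 1 on a fixed-shape float input."""

    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 4], dtype=tf.float32)])
    def __call__(self, x):
        return x * 2.0 + 1.0

module = Scale()
concrete_fn = module.__call__.get_concrete_function()

# Convert the traced function to the TensorFlow Lite flatbuffer format.
converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn], module)
tflite_model = converter.convert()     # serialized model as bytes

# Write the result to a temporary location (an illustrative path).
out_path = os.path.join(tempfile.mkdtemp(), "model.tflite")
with open(out_path, "wb") as f:
    f.write(tflite_model)

print(f"Wrote {len(tflite_model)} bytes to {out_path}")
```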

Case Study: MNIST Classification

The MNIST dataset consists of 70,000 grayscale images of handwritten digits (0–9), each 28×28 pixels, split into 60,000 training images and 10,000 test images. The code below flattens each image into a 784-element vector, scales pixel values to the range 0 to 1, and trains a small dense network.

- # Loading Data

- mnist = tf.keras.datasets.mnist

- (train_images, train_labels), (test_images, test_labels) = mnist.load_data()

- train_images = train_images.reshape(-1, 784).astype('float32') / 255.0

- # Building the Model

- model = Sequential([

-     Dense(128, activation='relu'),

-     Dense(10, activation='softmax')

- ])

- model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

- model.fit(train_images, train_labels, epochs=5)

- # Evaluating the Model

- loss, acc = model.evaluate(test_images.reshape(-1, 784) / 255.0, test_labels)

- print(f'Test Accuracy: {acc}')

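This case study demonstrates how a few lines of TensorFlow code are enough to load data, train a classifier, and measure its test accuracy, which makes the framework well suited to rapid prototyping.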

Best Practices and Tips

- Normalize Input Data: Always scale your inputs (e.g., between 0 and 1).

- Use Batch Normalization: To stabilize and speed up training.

- Avoid Overfitting: Apply dropout, early stopping, and cross-validation.

- Monitor Training: Use TensorBoard to visualize learning.

- Save Checkpoints: Avoid losing progress due to crashes or errors.

- Use Pretrained Models: For tasks like image recognition, use tf.keras.applications.

- Optimize Performance: Utilize AUTOTUNE and multi-threaded pipelines.

- Experiment Systematically: Use different optimizers, learning rates, and architectures.

- Leverage the Community: Use TensorFlow Hub and GitHub repositories.

- Maintain Code Modularity: Keep your model, data pipeline, and training loop separate for scalability.
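Several of these tips can be combined in a few lines. The following sketch, which runs on synthetic data, uses batch normalization, dropout, early stopping, and checkpointing together. The layer sizes, patience value, and checkpoint file name are illustrative assumptions rather than recommended settings.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Synthetic data already scaled to [0, 1): 200 samples, 20 features, 3 classes.
rng = np.random.default_rng(0)
x = rng.random((200, 20)).astype("float32")
y = rng.integers(0, 3, size=200)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(20,)),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.BatchNormalization(),   # stabilizes and speeds up training
    tf.keras.layers.Dropout(0.3),           # regularization against overfitting
    tf.keras.layers.Dense(3, activation="softmax"),
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# Stop when validation loss has not improved for 2 epochs.
early_stop = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss", patience=2, restore_best_weights=True)

# Keep the best weights on disk so a crash does not lose progress.
ckpt_path = os.path.join(tempfile.mkdtemp(), "best.weights.h5")
checkpoint = tf.keras.callbacks.ModelCheckpoint(
    filepath=ckpt_path, monitor="val_loss",
    save_best_only=True, save_weights_only=True)

history = model.fit(x, y, epochs=20, validation_split=0.2,
                    callbacks=[early_stop, checkpoint], verbose=0)

epochs_run = len(history.history["loss"])
print("Epochs run before stopping:", epochs_run)
```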

Conclusion

TensorFlow is a versatile, production-ready, and scalable deep learning framework that empowers developers and researchers to turn data into actionable intelligence. With its support for high-performance computation, GPU/TPU acceleration, cross-platform deployment, and a vast ecosystem, TensorFlow stands as a robust tool for a wide array of machine learning tasks. From the basics of tensors and computational graphs to building and deploying neural networks, this guide has covered the essentials of TensorFlow. As the field evolves, TensorFlow continues to adapt and introduce new functionality to meet modern AI demands. Whether you’re a student learning machine learning, a developer deploying AI apps, or a researcher experimenting with custom models, TensorFlow is a platform you can rely on for performance, flexibility, and scalability.