- Introduction to Ensemble Learning

- What is Gradient Boosting?

- How Gradient Boosting Works

- Key Components (Loss Function, Weak Learners)

- Types: XGBoost, LightGBM, CatBoost

- Advantages of Gradient Boosting

- Limitations and Overfitting Risks

- Hyperparameter Tuning

- Performance Metrics

- Real-World Applications

- Summary

Introduction to Ensemble Learning

Ensemble learning is a powerful machine learning paradigm where multiple models, often called “weak learners,” are strategically combined to produce a model with improved performance and generalization capability. The underlying principle is that a group of weak learners can come together to form a strong learner, outperforming any individual model. For foundational and advanced skills, explore Machine Learning Training. Common ensemble strategies include bagging, boosting, and stacking. Among these, particularly gradient boosting has gained immense popularity due to its high predictive power and flexibility. Ensemble methods help to reduce variance (overfitting), bias (underfitting), or improve predictions. They are widely used in a variety of machine learning competitions and real-world applications, where accuracy is critical.

Ready to Get Certified in Machine Learning? Explore the Program Now Machine Learning Online Training Offered By ACTE Right Now!

What is Gradient Boosting?

Gradient Boosting is a machine learning technique used for both regression and classification tasks. It builds models in a stage-wise fashion and generalizes them by allowing optimization of an arbitrary differentiable loss function. Gradient Boosting is a type of boosting technique where new models are created to correct the errors made by previous models. Models are added sequentially, and each new model aims to reduce the residual errors of the combined ensemble of previous models. To complement this ensemble strategy with foundational and advanced resources, exploring Learning Books to Read reveals expert-curated titles that balance theory and implementation covering neural networks, optimization techniques, and real-world applications to help practitioners master concepts like boosting, backpropagation, and deep model tuning. Unlike bagging, where models are built independently and then averaged, boosting builds models iteratively with each one focusing on fixing the mistakes of the previous ensemble. This sequential learning process makes gradient boosting highly effective in capturing complex patterns in data.

How Gradient Boosting Works

Gradient Boosting works by sequentially adding predictors to an ensemble, each one correcting its predecessor. The process begins with an initial model, usually a simple one like a mean value in regression. Subsequent models predict the residuals (errors) of the combined ensemble so far, and these predictions are added to improve the model. To complement this iterative boosting strategy with foundational algorithmic insight, exploring Machine Learning Algorithms reveals how techniques like Gradient Boosting, Random Forest, and SVM enable systems to learn patterns, reduce bias and variance, and deliver accurate predictions across classification and regression tasks.

Workflow of Gradient Boosting:

- Initialize the model with a base prediction (e.g., the mean for regression).

- Calculate residuals from the initial prediction.

- Fit a weak learner (e.g., a decision tree) to the residuals.

- Update the model by adding the new learner’s predictions.

- Repeat steps 2–4 for a predefined number of iterations or until convergence.

- Combine all models to make the final prediction.

gradient boosting works an iterative process continues, with each new learner reducing the error of the ensemble. Learning rates and loss functions help in controlling the training process.

Key Components (Loss Function, Weak Learners)

The loss function measures how well the model is performing. Common loss functions include:

Loss Function:

- Mean Squared Error (MSE) for regression

- Log-loss (Binary Cross Entropy) for binary classification

- Categorical Cross Entropy for multi-class classification

Gradient Boosting minimizes this loss function using gradient descent.

Weak Learners

Typically, decision trees are used as weak learners in gradient boosting. These are usually shallow trees, also called “stumps,” which allow the model to be built incrementally without overfitting.

Learning Rate

This parameter controls how much each weak learner contributes to the final prediction. A smaller learning rate generally requires more trees but improves model generalization. To complement this tuning strategy with hands-on experience, exploring Machine Learning Projects reveals beginner-friendly applications like sales forecasting, music recommendation, and sentiment analysis each designed to reinforce concepts like ensemble learning, hyperparameter tuning, and real-world deployment.

To Explore Machine Learning in Depth, Check Out Our Comprehensive Machine Learning Online Training To Gain Insights From Our Experts!

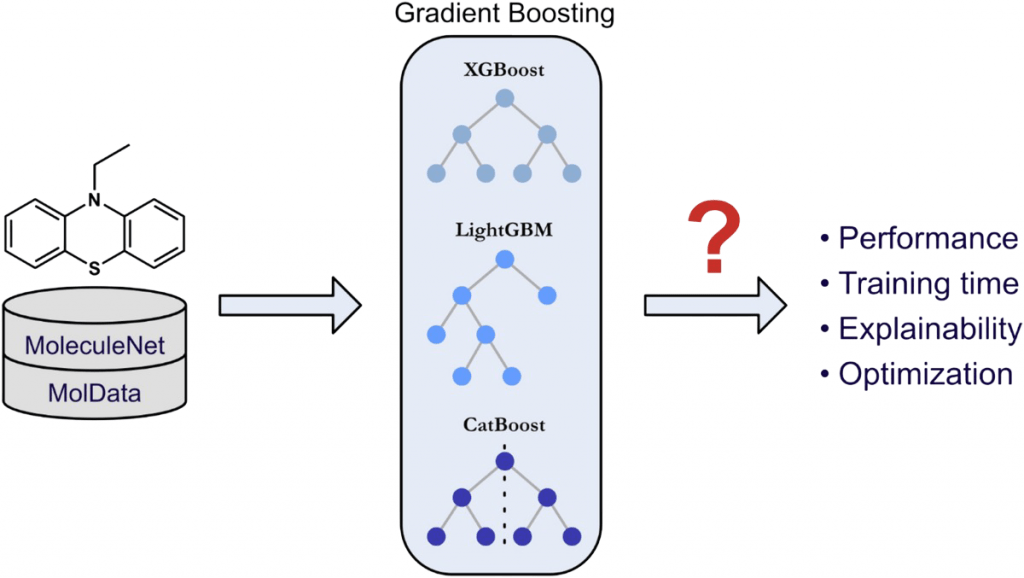

Types: XGBoost, LightGBM, CatBoost

In the field of machine learning, developers have changed predictive modeling with gradient boosting frameworks that provide improved capabilities. XGBoost leads the way as an optimized gradient boosting algorithm. It offers strong features like regularization, parallel processing, and advanced handling of missing values. This makes it very effective for structured data tasks. Microsoft’s LightGBM brings new techniques by using a histogram-based method and growing trees leaf-wise. This approach greatly improves computational efficiency, especially when dealing with large datasets. For foundational and advanced skills, explore Machine Learning Training. Another important framework is CatBoost, which Yandex developed. It excels at handling categorical features, removing the need for explicit encoding and offering strong protection against overfitting. These innovative gradient boosting tools are essential in both industry and research. They provide scalable, high-performance solutions that push the limits of predictive analytics and machine learning.

Advantages of Gradient Boosting

Gradient Boosting Decision Trees (GBDT) are a strong machine learning algorithm, showing impressive skills in various analytical areas. Data scientists widely appreciate GBDT for its high accuracy and consistent top performance in competitive settings. This flexible technique is great at tackling regression, classification, and ranking problems. By capturing complex non-linear data relationships, GBDT provides valuable insights into feature importance and offers many customization options. To complement these ensemble methods with a foundational classification approach, exploring Logistic Regression reveals how the sigmoid function transforms linear combinations into probabilities enabling interpretable binary and multiclass predictions, efficient training, and robust performance on linearly separable datasets. Implementations like XGBoost and CatBoost include built-in regularization features that help reduce overfitting, allowing data scientists to create reliable and accurate predictive models. The algorithm also lets users adjust loss functions and model parameters, increasing its usefulness and making it essential for advanced machine learning tasks.

Looking to Master Machine Learning? Discover the Machine Learning Expert Masters Program Training Course Available at ACTE Now!

Limitations and Overfitting Risks

Despite its advantages, gradient boosting has several challenges:

- Computationally Intensive: Training can be slow, especially for large datasets.

- Overfitting: Without proper tuning, models can overfit the training data.

- Sensitive to Outliers: Gradient boosting models can be influenced by noisy data.

- Parameter Tuning Required: Performance is highly dependent on correct hyperparameter settings.

Using techniques like cross-validation, early stopping, and regularization can help mitigate these issues.

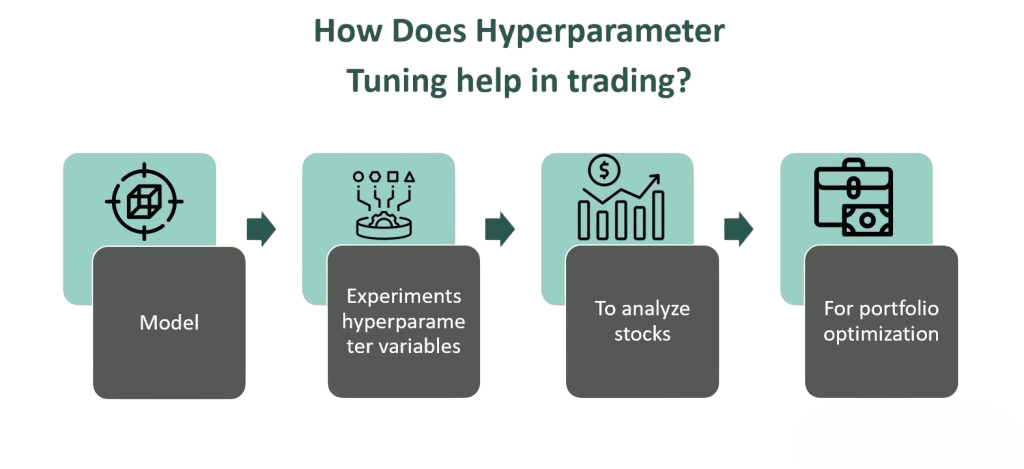

Hyperparameter Tuning

Hyperparameter Tuning is essential to maximize performance and minimize overfitting. Common Hyperparameter Tuning include: learning rate adjustment, tree depth control, and regularization techniques. To complement these optimization strategies with structured evaluation metrics, exploring Confusion Matrix in Machine Learning reveals how classification outcomes true positives, false positives, true negatives, and false negatives are organized into a matrix that helps assess model accuracy, precision, recall, and error types, guiding more effective tuning decisions.

- n_estimators: Number of trees (iterations).

- learning_rate: Shrinks the contribution of each tree.

- max_depth: Controls the complexity of the trees.

- min_child_weight: Minimum sum of instance weight (hessian) needed in a child.

- subsample: Fraction of samples used per tree.

- colsample_bytree: Fraction of features used per tree.

Techniques for Tuning:

- Grid Search

- Random Search

- Bayesian Optimization

- Automated ML tools (e.g., Optuna, Hyperopt)

Preparing for Machine Learning Job Interviews? Have a Look at Our Blog on Machine Learning Interview Questions and Answers To Ace Your Interview!

Performance Metrics

When evaluating machine learning models, it is important to choose the right performance metrics to assess their effectiveness. For regression tasks, key metrics include Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and R-squared (R²). These metrics help measure the model’s ability to predict accurately and its deviation from actual values. In classification tasks, researchers and data scientists use metrics like accuracy, precision, recall, F1-score, Area Under Curve (AUC), and log loss. To complement these evaluation techniques with ensemble learning strategies, exploring Bagging vs Boosting in Machine Learning reveals how bagging reduces variance by training models independently on random subsets, while boosting reduces bias by sequentially correcting errors both enhancing predictive stability and improving metric scores across diverse classification problems. These metrics help measure the model’s predictive ability and its discrimination skills. By selecting these evaluation metrics carefully, data professionals can gain important insights into a model’s performance. This allows them to make informed decisions about refining, optimizing, and potentially deploying the model in real-world situations.

Real-World Applications

Gradient Boosting is used in a wide range of applications due to its accuracy and adaptability: from fraud detection and customer churn prediction to medical diagnostics and recommendation systems. To understand how this technique fits into the broader landscape, exploring What Is Machine Learning reveals how core approaches like regression, classification, clustering, and anomaly detection empower systems to learn from data automating predictions, uncovering patterns, and refining performance across diverse domains.

Finance:

- Credit scoring

- Fraud detection

- Algorithmic trading

Healthcare:

- Disease prediction

- Patient readmission risk

- Personalized treatment recommendations

Marketing:

- Customer segmentation

- Churn prediction

- Targeted advertising

Retail:

- Demand forecasting

- Recommendation systems

- Inventory optimization

Manufacturing:

- Predictive maintenance

- Quality control

- Supply chain optimization

Gradient boosting is particularly well-suited for structured/tabular data, making it a go-to method for many Kaggle competitions and enterprise analytics.

Summary

Gradient Boosting is a powerful and flexible machine learning technique that excels in predictive modeling tasks. By building models iteratively and correcting errors from previous models, it creates a strong ensemble capable of handling both classification and regression challenges. For foundational and advanced skills, explore Machine Learning Training. With variations like XGBoost, LightGBM, and CatBoost, gradient boosting has become a favorite among data scientists. However, it requires careful tuning and evaluation to avoid pitfalls like overfitting. Its applications span across industries, including finance, healthcare, marketing, and more, making it one of the most valuable tools in a data scientist’s arsenal. With continuous advancements and optimizations, gradient boosting remains at the forefront of machine learning methodologies.