- Introduction to Quantum Computing

- Classical vs Quantum Computing

- Key Concepts: Qubits, Superposition, Entanglement

- Quantum Algorithms

- Quantum Gates and Circuits

- Programming Languages for Quantum Computing

- Quantum Hardware Overview

- Current Limitations

- Conclusion

Introduction to Quantum Computing

Quantum computing is a rapidly evolving field at the intersection of computer science, physics, and mathematics that uses the principles of quantum mechanics to process information in fundamentally new ways. Unlike classical computers, which represent data as bits that are either 0 or 1, quantum computers use quantum bits, or qubits, which can exist in a combination of both states at once thanks to a phenomenon called superposition. Qubits can also be entangled, and together these properties allow quantum computers to perform certain calculations far faster than classical machines, in some cases exponentially so. This capability holds promise for problems in cryptography, optimization, drug discovery, logistics, materials science, and artificial intelligence that are currently intractable for classical machines. While the field is still in its infancy, global tech companies and research institutions continue to make breakthroughs in practical algorithms and hardware to unlock its potential.

Classical vs Quantum Computing

- Classical computing is based on bits that represent information as either 0 or 1. These bits are the building blocks of all traditional computers, which process data using logical operations in a deterministic and sequential manner. Classical computers excel at a wide range of tasks and are the foundation of today’s technology, from smartphones to supercomputers.

- Quantum computing, on the other hand, uses quantum bits, or qubits, which can be in a superposition of 0 and 1. Moreover, qubits can be entangled, creating strong correlations between them that classical bits cannot replicate. These properties let quantum algorithms act on many basis states at once, potentially solving specific problems, such as factoring large numbers or simulating molecular interactions, much faster than classical computers.

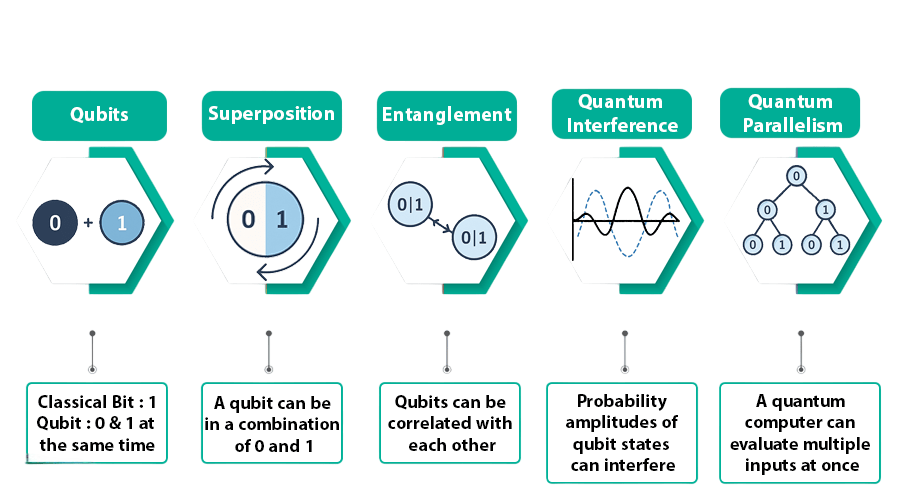

Key Concepts: Qubits, Superposition, Entanglement

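- Qubits: A qubit is the basic unit of quantum information. Unlike a classical bit, it can exist in a superposition of 0 and 1. Mathematically, a qubit is represented as $|\psi\rangle = \alpha|0\rangle + \beta|1\rangle$, where $\alpha$ and $\beta$ are complex probability amplitudes satisfying $|\alpha|^2 + |\beta|^2 = 1$.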

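- Superposition: Superposition lets a register of n qubits hold a weighted combination of all 2^n basis states at once. Quantum algorithms exploit this, together with interference between amplitudes, to achieve large speedups on certain problems, in some cases exponential ones.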

- Entanglement: When two or more qubits become entangled, their states are interconnected such that measuring one qubit yields an outcome correlated with the measurement of the other, regardless of the distance between them. These correlations are not possible in classical systems and are a key resource for quantum algorithms.

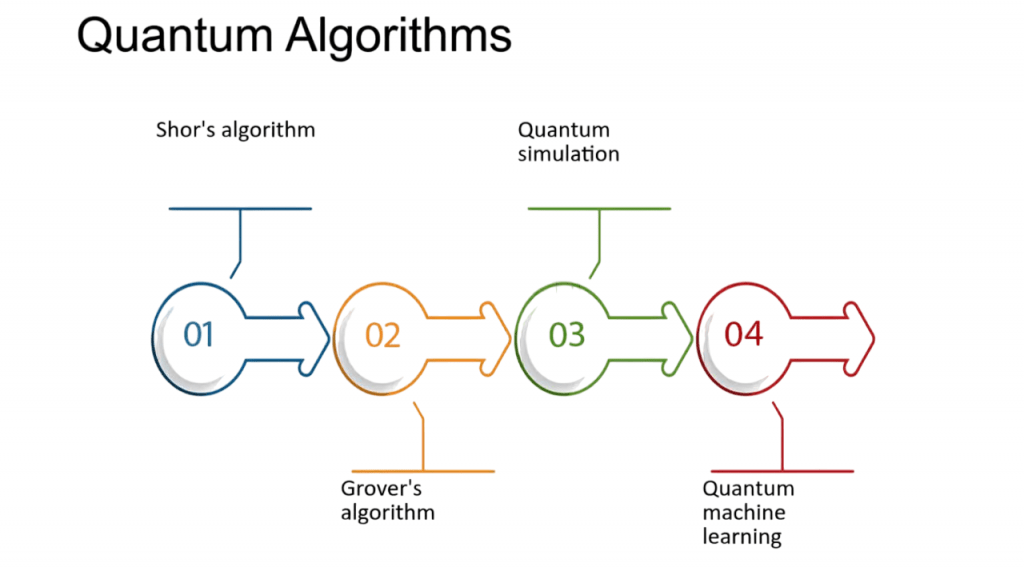
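To make the qubit notation concrete, here is a minimal plain-Python sketch. It uses no quantum library, and the helper names (`normalize`, `measurement_probabilities`, `measure`) are illustrative rather than taken from any framework. It stores a single-qubit state as two complex amplitudes α and β and samples measurements, assuming the standard rule that outcome 0 occurs with probability |α|² and outcome 1 with probability |β|².

```python
import math
import random


def normalize(alpha, beta):
    """Scale two amplitudes so that |alpha|^2 + |beta|^2 == 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return alpha / norm, beta / norm


def measurement_probabilities(alpha, beta):
    """Return (P(0), P(1)) for the state alpha|0> + beta|1>."""
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(alpha, beta, rng):
    """Sample one measurement outcome (0 or 1) in the computational basis."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# Equal superposition (|0> + |1>) / sqrt(2).
alpha, beta = normalize(1, 1)
p0, p1 = measurement_probabilities(alpha, beta)

# Each measurement is taken on a freshly prepared copy of the state;
# over many repetitions the counts approach a 50/50 split.
rng = random.Random(0)
counts = [0, 0]
for _ in range(10_000):
    counts[measure(alpha, beta, rng)] += 1
```

A single measurement only ever returns 0 or 1. The amplitudes show up only in the statistics over many repetitions, which is why quantum programs are typically run many times.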

Quantum Algorithms

Quantum algorithms exploit the unique properties of qubits to solve specific problems more efficiently than classical algorithms.

Notable Quantum Algorithms:

- Shor’s Algorithm – Efficient integer factorization; poses a threat to RSA encryption.

- Grover’s Algorithm – Speeds up unstructured (unsorted) database search, needing on the order of √N queries versus N classically.

- Quantum Fourier Transform – Core to many quantum algorithms, including Shor’s.

- Quantum Machine Learning Algorithms – Emerging field integrating quantum computing with AI.

These algorithms are still in the early stages of practical use but represent significant theoretical milestones.
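As a rough illustration of the √N behaviour of Grover’s algorithm, the sketch below simulates it classically on a small list of amplitudes. This is a toy state-vector simulation in plain Python, not code for real hardware. The function name `grover_search` and the choice of 8 items are assumptions made for the example.

```python
import math


def grover_search(num_items, marked_index):
    """Simulate Grover's algorithm on a real-valued state vector.

    Returns (probability of measuring marked_index, iterations used).
    """
    # Start in the uniform superposition over all items.
    state = [1 / math.sqrt(num_items)] * num_items

    # Roughly (pi / 4) * sqrt(N) iterations maximize the success probability.
    iterations = math.floor(math.pi / 4 * math.sqrt(num_items))

    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        state[marked_index] = -state[marked_index]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(state) / num_items
        state = [2 * mean - amplitude for amplitude in state]

    return state[marked_index] ** 2, iterations


probability, iterations = grover_search(num_items=8, marked_index=5)
```

With 8 items, two iterations bring the probability of measuring the marked item to about 0.945, whereas a classical search would need about four lookups on average. The simulation itself still takes time proportional to N per iteration; the speedup applies only when the amplitudes are held by actual qubits.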

Quantum Gates and Circuits

Just as classical circuits use logic gates, quantum circuits use quantum gates to manipulate qubits. Unlike most classical gates, quantum gates are reversible and are represented as unitary matrices.

Common Quantum Gates:

- Pauli-X Gate: Flips qubit state (like NOT).

- Hadamard Gate (H): Puts qubit into superposition.

- CNOT (Controlled NOT): Flips the target qubit when the control qubit is 1; entangles the two qubits when the control is in superposition.

- T and S Gates: Provide phase shifts.

Quantum circuits are formed by applying a series of gates to qubits to achieve computation.
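The claim that gates are unitary matrices can be checked directly. The following sketch writes the single-qubit gates listed above as 2×2 matrices in plain Python and verifies that each satisfies U†U = I. The helper names (`matmul`, `dagger`, `is_unitary`, `apply`) are made up for this example and do not come from any quantum framework.

```python
import cmath
import math

SQRT_HALF = 1 / math.sqrt(2)

# Single-qubit gates as 2x2 matrices (lists of rows).
GATES = {
    "X": [[0, 1], [1, 0]],
    "H": [[SQRT_HALF, SQRT_HALF], [SQRT_HALF, -SQRT_HALF]],
    "S": [[1, 0], [0, 1j]],
    "T": [[1, 0], [0, cmath.exp(1j * math.pi / 4)]],
}


def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def dagger(m):
    """Conjugate transpose of a square matrix."""
    n = len(m)
    return [[complex(m[j][i]).conjugate() for j in range(n)] for i in range(n)]


def is_unitary(m, tol=1e-12):
    """Check that dagger(m) @ m equals the identity within a tolerance."""
    product = matmul(dagger(m), m)
    n = len(m)
    return all(abs(product[i][j] - (1 if i == j else 0)) < tol
               for i in range(n) for j in range(n))


def apply(gate, state):
    """Apply a gate matrix to a state vector."""
    return [sum(gate[i][k] * state[k] for k in range(len(state)))
            for i in range(len(gate))]


zero = [1, 0]                              # the state |0>
flipped = apply(GATES["X"], zero)          # X maps |0> to |1>
superposed = apply(GATES["H"], zero)       # (|0> + |1>) / sqrt(2)
restored = apply(GATES["H"], superposed)   # applying H twice undoes it
unitary_checks = {name: is_unitary(gate) for name, gate in GATES.items()}
```

Reversibility follows from unitarity: every gate can be undone by applying its conjugate transpose, which for X and H is the gate itself.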

    q0: |0⟩ ──[H]──●──
                   │
    q1: |0⟩ ───────X──

This circuit creates entanglement between two qubits using Hadamard and CNOT gates.
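The Hadamard-then-CNOT circuit can be traced by hand on a four-entry state vector. The sketch below does this in plain Python, assuming the amplitudes are ordered as |00⟩, |01⟩, |10⟩, |11⟩ with the first qubit as the CNOT control. The function names are hypothetical helpers written for this example.

```python
import math
import random


def hadamard_on_first(state):
    """Apply H to the first qubit of a two-qubit state [a00, a01, a10, a11]."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11),
            s * (a00 - a10), s * (a01 - a11)]


def cnot_first_controls_second(state):
    """Flip the second qubit when the first is 1 (swaps |10> and |11>)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


state = [1.0, 0.0, 0.0, 0.0]               # start in |00>
state = hadamard_on_first(state)           # (|00> + |10>) / sqrt(2)
state = cnot_first_controls_second(state)  # (|00> + |11>) / sqrt(2)

probabilities = [abs(amplitude) ** 2 for amplitude in state]

# Sample joint measurements of both qubits.
rng = random.Random(1)
labels = ["00", "01", "10", "11"]
samples = rng.choices(labels, weights=probabilities, k=1000)
```

Every sample is either `00` or `11`: each qubit on its own looks like a fair coin, yet the two outcomes always agree. That perfect correlation is the signature of the entangled (Bell) state this circuit prepares.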

Programming Languages for Quantum Computing

Quantum computing requires specialized programming languages and frameworks designed to manipulate qubits and quantum gates. Unlike classical programming tools, these languages must accommodate quantum phenomena such as superposition and entanglement. Some popular quantum programming languages and tools include:

- Qiskit: An open-source Python library developed by IBM, used for creating, simulating, and running quantum circuits on IBM’s quantum processors.

- Cirq: Developed by Google, Cirq is a Python framework designed for writing, optimizing, and running quantum circuits on quantum computers.

- Quipper: A functional programming language embedded in Haskell, designed for scalable quantum programming with high-level abstractions.

- Q#: Created by Microsoft, Q# is a domain-specific language integrated with the Quantum Development Kit for designing quantum algorithms.

- Forest (pyQuil): Provided by Rigetti Computing, Forest offers the pyQuil Python library to develop and simulate quantum programs.

These languages often interface with classical languages like Python, enabling hybrid quantum-classical algorithms. As quantum hardware evolves, these programming tools continue to mature, helping researchers and developers harness the power of quantum computation.
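The hybrid quantum-classical pattern mentioned above can be sketched without any quantum framework. In the example below, a classical gradient-descent loop tunes the angle of a single RY rotation so that the expectation value of Z is minimized. The quantum step is replaced by its known closed form: RY(θ) applied to |0⟩ gives cos(θ/2)|0⟩ + sin(θ/2)|1⟩. In a real workflow that function would instead run a circuit through one of the tools listed above and estimate the value from measurement counts. All function names and the learning-rate settings are assumptions for illustration.

```python
import math


def expectation_z(theta):
    """<Z> after RY(theta) on |0>, i.e. P(0) - P(1).

    Stand-in for the quantum part: computed analytically here instead of
    being estimated from repeated circuit runs.
    """
    p0 = math.cos(theta / 2) ** 2
    p1 = math.sin(theta / 2) ** 2
    return p0 - p1


def parameter_shift_gradient(theta):
    """Gradient of expectation_z via the parameter-shift rule.

    Uses two extra evaluations at theta +/- pi/2, which is how gradients
    are commonly obtained when only circuit evaluations are available.
    """
    shift = math.pi / 2
    return 0.5 * (expectation_z(theta + shift) - expectation_z(theta - shift))


def minimize(theta=0.1, learning_rate=0.4, steps=100):
    """Classical optimizer loop: plain gradient descent on theta."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, expectation_z(theta)


theta, energy = minimize()
```

The loop drives θ toward π, where the qubit is in |1⟩ and the expectation value reaches its minimum of -1. Variational algorithms follow the same division of labour, with the classical computer choosing parameters and the quantum device evaluating them.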

Quantum Hardware Overview

Unlike classical computers, quantum computers require specialized hardware to maintain quantum coherence. Quantum hardware refers to the physical devices that implement qubits and perform quantum computations. Whereas classical hardware relies on transistors and classical circuits, quantum hardware uses a variety of technologies to create and control qubits, each with its own advantages and challenges. Common qubit implementations include superconducting circuits, which use superconducting loops cooled to near absolute zero to minimize energy loss; trapped ions, where individual ions are suspended and manipulated using electromagnetic fields; topological qubits, which rely on exotic quasiparticles to encode information more robustly; and photonic qubits, which use particles of light to carry quantum information. Each hardware type has trade-offs in terms of scalability, error rates, and operational complexity.

The performance of quantum hardware is measured by factors such as qubit coherence time, gate fidelity, and scalability. Despite significant progress, quantum hardware remains in the early stages of development, with ongoing research focused on improving qubit stability, implementing error correction, and building larger quantum processors capable of solving practical problems beyond the reach of classical computers.
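To see why gate fidelity matters so much, consider a deliberately crude model in which every gate succeeds independently with the same probability, so that a circuit of n gates succeeds with probability fidelity^n. Real devices have correlated and gate-dependent errors, and the fidelity of 0.999 used below is an illustrative number rather than a measured one. The function names are hypothetical.

```python
import math


def circuit_success_estimate(gate_fidelity, num_gates):
    """Rough success probability if every gate fails independently."""
    return gate_fidelity ** num_gates


def max_gates_for_target(gate_fidelity, target_success):
    """Largest gate count that keeps the estimate at or above the target."""
    return math.floor(math.log(target_success) / math.log(gate_fidelity))


# Illustrative numbers only: 99.9% fidelity per gate, 1000 gates.
estimate = circuit_success_estimate(0.999, 1000)

# How many gates fit before the estimate drops below 50%?
budget = max_gates_for_target(0.999, 0.5)
```

Under this model, even 99.9% per-gate fidelity leaves only about a 37% chance that a 1000-gate circuit runs without error, and the gate budget for a 50% chance is under 700 gates.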

Current Limitations

Despite its potential, quantum computing faces several challenges:

- Decoherence and Noise: Qubits lose coherence quickly, leading to errors. Noise makes accurate computation difficult.

- Error Correction: Requires many physical qubits to create one logical qubit. Still a major area of research.

- Scalability: Difficult to build stable systems with thousands of qubits. Many current systems are in the range of 50–500 qubits.

- Programming Complexity: Quantum logic is unintuitive. Tools and abstraction layers are still maturing.

These limitations make current systems primarily suited for research and proof-of-concept applications.
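The idea that one logical qubit needs many physical qubits can be illustrated with the classical three-bit repetition code, which is the classical analogue of the quantum bit-flip code. This is only an analogy. Real quantum error correction must also handle phase errors and cannot simply copy a state, so it needs considerably more qubits. The sketch assumes each of the three copies flips independently with probability `p`, and that decoding is by majority vote.

```python
import random


def logical_error_rate(p):
    """Probability that majority vote over 3 copies fails (2 or 3 flips)."""
    return 3 * p**2 * (1 - p) + p**3


def simulate(p, trials, rng):
    """Monte Carlo estimate of the same logical error rate."""
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # majority vote decodes to the wrong value
            failures += 1
    return failures / trials


p = 0.05
exact = logical_error_rate(p)
estimated = simulate(p, 200_000, random.Random(42))
```

With a 5% physical error rate, the encoded bit fails well under 1% of the time, at the cost of tripling the hardware. The benefit exists only when the physical error rate is low enough to begin with (below 50% for this code), which is one reason qubit quality matters as much as qubit count.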

Conclusion

Quantum computing represents a groundbreaking shift in how we process information, promising to solve complex problems that classical computers struggle with. The field, while still in its nascent stages, is progressing rapidly through advances in quantum hardware and programming languages. Challenges such as qubit stability, error correction, and scalability remain, but ongoing research and innovation continue to bring us closer to realizing the full potential of quantum technology. As quantum computers evolve, they have the potential to revolutionize industries ranging from cryptography and pharmaceuticals to materials science and artificial intelligence, ushering in a new era of computational capability.