- Machine Learning Frameworks

- Importance of Machine Learning Frameworks

- TensorFlow Overview

- PyTorch Overview

- Keras Overview

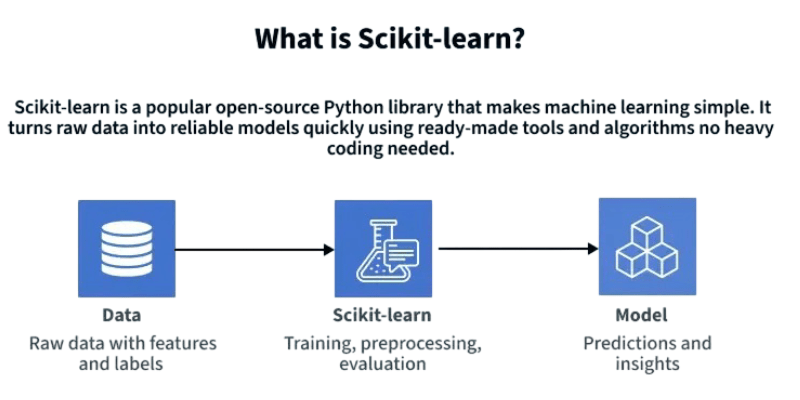

- Scikit-learn Overview

- MXNet and Other Frameworks

- Performance Benchmarks

- Future Trends in ML Frameworks

- Conclusion

Machine Learning Frameworks

Machine learning frameworks are essential tools that simplify the process of developing, training, and deploying ML models.

They provide pre-built components for model architecture, data handling, training loops, evaluation metrics, and deployment options, allowing developers and researchers to focus on solving problems rather than implementing low-level details. Whether you’re building a simple linear regression model or a complex neural network for computer vision, the right framework can accelerate development, improve efficiency, and ensure reproducibility.


Importance of Machine Learning Frameworks

Machine learning frameworks change how models are developed by providing infrastructure that improves scalability and efficiency. Their main benefits include code abstraction, which reduces repetitive programming tasks; hardware acceleration, which supports computation on GPUs and TPUs; and modularity, which lets developers reuse components such as layers, optimizers, and loss functions.

Strong interoperability makes it easy to integrate these frameworks with visualization tools and deployment systems, and active communities offer tutorials, libraries, and support. By simplifying complex machine learning workflows, frameworks let data scientists and engineers focus on innovation and model design, which shortens development time.

TensorFlow Overview

TensorFlow, developed by Google, is a powerful, flexible, and production-ready ML framework. It supports deep learning, reinforcement learning, and classical ML models. It is often compared with Keras: Keras offers a simpler, high-level API suited to rapid prototyping, while TensorFlow provides full-stack control for scalable deployment.

Key Features:

- Keras API: For high-level development.

- TensorBoard: For training visualization.

- TensorFlow Lite and TensorFlow.js: For deployment on mobile/web.

- Multi-GPU and TPU support: For distributed training through `tf.distribute` strategies.

Code Example

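    import numpy as np
    import tensorflow as tf
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense

    # Placeholder data (an assumption for illustration): 100 samples, 10 features.
    X_train = np.random.rand(100, 10).astype("float32")
    y_train = np.random.rand(100, 1).astype("float32")

    # Small regression network: one hidden layer and one linear output unit.
    model = Sequential([
        Dense(64, activation='relu', input_shape=(10,)),
        Dense(1)
    ])

    model.compile(optimizer='adam', loss='mse')
    model.fit(X_train, y_train, epochs=10)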

PyTorch Overview

PyTorch, originally developed by Facebook (now Meta), is known for its dynamic computation graph and intuitive coding style. It’s particularly popular in academia and research because of its flexibility. For production-grade machine learning workflows, cloud platforms such as AWS offer scalable infrastructure, prebuilt services, and deployment tools.

Key Features:

- Dynamic Graphs: Change architecture during runtime.

- TorchScript: For deploying models in production.

- GPU Acceleration: Native support for running tensors and models on GPUs.

- Ecosystem: A growing set of companion libraries (e.g., TorchVision, TorchText, PyTorch Lightning).

Code Example

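    import numpy as np
    import torch
    import torch.nn as nn
    import torch.optim as optim

    class Net(nn.Module):
        def __init__(self):
            super().__init__()
            self.fc = nn.Linear(10, 1)

        def forward(self, x):
            return self.fc(x)

    # Placeholder data (an assumption for illustration): 100 samples, 10 features.
    X_train = np.random.rand(100, 10)
    y_train = np.random.rand(100, 1)

    # Convert to tensors once, outside the training loop.
    inputs = torch.tensor(X_train, dtype=torch.float32)
    targets = torch.tensor(y_train, dtype=torch.float32)

    model = Net()
    criterion = nn.MSELoss()
    optimizer = optim.Adam(model.parameters(), lr=0.01)

    for epoch in range(10):
        optimizer.zero_grad()              # clear gradients from the previous step
        output = model(inputs)             # forward pass
        loss = criterion(output, targets)  # mean squared error
        loss.backward()                    # backpropagate
        optimizer.step()                   # update the weights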
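The "dynamic graph" feature listed above can be illustrated with a small sketch. The class name `DynamicDepthNet` and its `steps` argument are hypothetical, chosen only for this example: the number of hidden-layer applications is decided by ordinary Python control flow on each call, and autograd still tracks whichever path was taken.

```python
import torch
import torch.nn as nn


class DynamicDepthNet(nn.Module):
    """Hypothetical model whose depth is chosen at call time."""

    def __init__(self, width=10, max_steps=3):
        super().__init__()
        self.hidden = nn.Linear(width, width)
        self.out = nn.Linear(width, 1)
        self.max_steps = max_steps

    def forward(self, x, steps):
        # Plain Python loop: the graph is rebuilt on every forward pass,
        # so the same module can run shallow or deep.
        for _ in range(min(steps, self.max_steps)):
            x = torch.relu(self.hidden(x))
        return self.out(x)


torch.manual_seed(0)
net = DynamicDepthNet()
batch = torch.randn(4, 10)

shallow = net(batch, steps=1)  # hidden layer applied once
deep = net(batch, steps=3)     # hidden layer applied three times

# Gradients flow through whichever path was executed.
deep.sum().backward()
```

Both calls reuse the same weights; only the executed path differs, which is what makes this style convenient for experimentation.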


Keras Overview

Keras is a high-level neural network API, most commonly used through its integration with TensorFlow as `tf.keras` (Keras 3 can also run on JAX and PyTorch backends). It simplifies deep learning development with clean syntax and flexible model definition through the Sequential and Functional APIs, so developers can express complex models in a few lines. Its close integration with TensorFlow 2.x also leaves room for customization, which makes Keras appealing to both beginners and professionals who want to prototype quickly. In practice, a developer stacks layers such as dense layers into a model, then specifies an optimizer and a loss function when compiling it. This removes much of the boilerplate that usually comes with implementing deep learning.
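As a rough illustration of the two model-definition styles mentioned above, the sketch below builds the same small regression network twice, once with the Sequential API and once with the Functional API. The layer sizes and the 10-feature input are assumptions carried over from the TensorFlow example, not requirements of Keras.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Sequential API: a plain stack of layers.
sequential_model = keras.Sequential([
    keras.Input(shape=(10,)),
    layers.Dense(64, activation="relu"),
    layers.Dense(1),
])

# Functional API: layers are called on tensors, which also allows
# branches, multiple inputs, or multiple outputs when needed.
inputs = keras.Input(shape=(10,))
hidden = layers.Dense(64, activation="relu")(inputs)
outputs = layers.Dense(1)(hidden)
functional_model = keras.Model(inputs=inputs, outputs=outputs)

# Both models are compiled the same way.
for model in (sequential_model, functional_model):
    model.compile(optimizer="adam", loss="mse")
```

For a simple stack like this one, the two definitions are interchangeable; the Functional API mainly pays off once the architecture stops being a straight line.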

Scikit-learn Overview

Scikit-learn is a Python library that helps data scientists and researchers use classical machine learning algorithms effectively. It provides a complete set of tools for preprocessing, feature engineering, and model development, covering pattern-recognition tasks such as identifying trends and classifying data. With its simple and consistent API, users can apply techniques like decision trees, support vector machines, and ensemble methods. Its pipeline abstraction makes it easy to chain preprocessing and modeling steps, and its extensive documentation and practical examples guide users through implementation.

The library works particularly well for classical ML tasks and small to medium-sized datasets. Its built-in utilities let practitioners split data, train models such as Random Forest classifiers, and evaluate performance metrics with very little code. This approachable design and wide range of features make scikit-learn a dependable choice for practitioners who want straightforward solutions.
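The split, train, and evaluate workflow described above might look like the following minimal sketch. The synthetic dataset from `make_classification`, the 80/20 split, and the step names `scale` and `forest` are assumptions made for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic binary classification data (placeholder for a real dataset).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# Hold out 20% of the samples for evaluation.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Chain preprocessing and the model so both are fitted on training data only.
clf = Pipeline([
    ("scale", StandardScaler()),
    ("forest", RandomForestClassifier(n_estimators=100, random_state=0)),
])
clf.fit(X_train, y_train)

accuracy = accuracy_score(y_test, clf.predict(X_test))
print(f"Test accuracy: {accuracy:.3f}")
```

Random forests do not strictly need feature scaling; the scaler is included here only to show how a preprocessing step slots into a pipeline.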


MXNet and Other Frameworks

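- MXNet: Backed by Apache and Amazon, MXNet was designed for scalability and efficiency. It supports multiple languages (Python, Scala, Julia) and has been one of the frameworks supported on Amazon SageMaker. The Apache project was retired in 2023, so it is no longer actively developed.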

- JAX: Developed by Google, JAX is a numerical computing library that excels in automatic differentiation and supports GPU/TPU.

- CNTK: Microsoft’s Cognitive Toolkit, once used in production applications like Skype, is no longer actively maintained.

- FastAI: Built on PyTorch, FastAI provides simplified interfaces for deep learning.
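To make the JAX entry above more concrete, here is a minimal sketch of automatic differentiation with `jax.grad`. The function name `mse_loss` and the tiny hand-written dataset are assumptions for illustration; the gradient of a mean squared error loss is computed with respect to the weight vector and then JIT-compiled.

```python
import jax
import jax.numpy as jnp


def mse_loss(w, x, y):
    """Mean squared error of a linear model x @ w against targets y."""
    pred = x @ w
    return jnp.mean((pred - y) ** 2)


x = jnp.array([[1.0, 2.0], [3.0, 4.0]])
y = jnp.array([1.0, 2.0])
w = jnp.zeros(2)

# jax.grad differentiates with respect to the first argument (w) by default;
# jax.jit compiles the resulting gradient function.
grad_fn = jax.jit(jax.grad(mse_loss))
g = grad_fn(w, x, y)
print(g)  # analytically (2/n) * x.T @ (x @ w - y) = [-7., -10.] at w = 0
```

The same `grad_fn` runs unchanged on CPU, GPU, or TPU, depending on which backend the installed JAX build targets.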

Performance Benchmarks

Performance depends on:

- Dataset size

- Hardware

- Model architecture

- Training methodology

General observations:

- TensorFlow scales well across GPUs and TPUs, and MXNet scales well across multiple GPUs.

- PyTorch has caught up in performance with the release of features like TorchScript and native automatic mixed precision (AMP).

- Scikit-learn is highly optimized for CPU-based classical ML.
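Because performance depends on the factors listed above, a fair comparison usually means timing your own workload rather than relying on general observations. The sketch below uses only the standard library; `benchmark` and `toy_training_step` are hypothetical names, and the toy function merely stands in for a real training or inference step.

```python
import statistics
import time


def benchmark(fn, *, warmup=2, repeats=5):
    """Return the median wall-clock time of fn() in seconds."""
    # Warm-up runs absorb one-time costs such as caching or compilation.
    for _ in range(warmup):
        fn()
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    # The median is less sensitive to outliers than the mean.
    return statistics.median(timings)


def toy_training_step(n=50_000):
    """Stand-in workload; replace with a real framework call."""
    return sum(i * i for i in range(n))


median_s = benchmark(toy_training_step)
print(f"Median step time: {median_s * 1000:.2f} ms")
```

When timing GPU code, keep in mind that many frameworks launch work asynchronously, so the device should be synchronized (for example with `torch.cuda.synchronize()` in PyTorch) before reading the clock.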


Future Trends in ML Frameworks

- Increased Interoperability: Efforts like ONNX (Open Neural Network Exchange) allow converting models across frameworks.

- Integration with MLOps Pipelines: Tools like TensorFlow Extended (TFX), MLflow, and SageMaker Pipelines are simplifying end-to-end workflows.

- Edge AI & Federated Learning: Frameworks are optimizing for low-power devices and privacy-preserving training. Edge deployments often favor interpretable models such as decision trees, which offer lightweight inference and transparent decision paths.

- AutoML and Low-Code AI: ML frameworks are adding higher levels of abstraction (e.g., KerasTuner, AutoKeras).

- Quantum Machine Learning Integration: Libraries like PennyLane and TensorFlow Quantum point toward the integration of quantum computing into ML framework workflows.

Conclusion

Think of TensorFlow as a powerful industrial plant designed for large AI projects: companies count on it for big deployments. PyTorch, in contrast, is like a flexible sculptor’s toolkit. It gives developers great control and adaptability, which makes it well suited to research and quick prototyping. Scikit-learn is a strong choice for simpler tasks, offering a user-friendly experience and excelling at traditional machine learning models. Each of these tools has its strengths, making them suitable for different types of AI work.

To choose the right machine learning framework, look at the whole picture: the performance needs of your project and your main development goals. The world of AI changes quickly, with new ideas and tools appearing all the time, so staying ahead means continuing to learn. Trying out different frameworks sharpens your skills and prepares you for new challenges.