- Basics of Image Processing

- Digital Image Representation

- Filtering and Convolution

- Edge Detection Techniques

- Color Spaces and Histograms

- Morphological Operations

- Feature Extraction from Images

- Image Preprocessing for ML

- Deep Learning in Image Processing

- Applications In Medical, Surveillance, Industrial

- Conclusion

Basics of Image Processing

Image processing refers to techniques that manipulate digital images to extract information, enhance visual quality, or prepare images for analysis by algorithms. It is central to computer vision, graphics, and medical diagnostics, and underpins applications such as facial recognition, satellite imagery analysis, and industrial inspection. Image processing workflows are organized into three levels. Low-level processing manipulates raw pixel data through operations such as filtering and noise removal. Mid-level processing identifies meaningful structural elements using edge detection and segmentation. High-level processing performs object recognition and decision-making. Each stage contributes to machine learning training pipelines for vision-based models. Across these workflows, practitioners share common goals: enhancing images for better clarity, restoring distorted visual data, and analyzing images to extract geometric and textural features. Several basic metrics guide this work: brightness (the average pixel intensity), contrast (the variation between dark and light areas), spatial resolution (the pixel sampling density), and dynamic range (the range of pixel values). With these foundations, practitioners can build effective processing pipelines that turn raw visual data into meaningful information for intelligent interpretation.
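The metrics above can be computed directly from pixel values. The sketch below assumes NumPy and a grayscale image stored as a 2-D array. It uses the standard deviation as contrast (RMS contrast, one common definition among several) and the observed minimum-to-maximum span as dynamic range; the latter is an assumption, since dynamic range is also often quoted as the representable range of a format (0 to 255 for 8-bit images). The `image_stats` helper is hypothetical.

```python
import numpy as np

def image_stats(img: np.ndarray) -> dict:
    """Basic quality metrics for a grayscale image stored as a 2-D array."""
    pixels = img.astype(np.float64)
    return {
        "brightness": pixels.mean(),                    # average intensity
        "contrast": pixels.std(),                       # RMS contrast (one common definition)
        "dynamic_range": pixels.max() - pixels.min(),   # observed span of values
        "resolution": img.shape,                        # (rows, columns)
    }

# Synthetic 4x4 8-bit image: dark left half, bright right half.
img = np.array([[10, 10, 200, 200]] * 4, dtype=np.uint8)
stats = image_stats(img)
print(stats)
```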


Digital Image Representation

Digital images represent visual scenes computationally as discrete approximations, using mathematical structures that capture visual information precisely. A grayscale image is a two-dimensional matrix in which each pixel's brightness, for a typical 8-bit image, ranges from 0 (black) to 255 (white), allowing a nuanced representation of intensity. Color images extend this to three-dimensional tensors that store each pixel's red, green, and blue channel values; these structures also serve as the input to feature extraction for machine learning models. Different encoding formats support different compression methods, from uncompressed RAW and BMP to lossless PNG and lossy JPEG, and each format balances file size against image quality. Image fidelity depends on both spatial sampling, which defines the number of pixels, and intensity quantization, which defines the number of brightness levels. Pixel addressing follows specific conventions: image arrays are commonly indexed as (row, column), that is (y, x), with the origin at the top-left, and stored in row-major or column-major order. Embedded metadata records details such as camera settings, GPS coordinates, and capture timestamps. These representations form the basis for image processing, enabling complex computations and serving as a foundation for machine learning and computer vision applications.
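As a minimal sketch, assuming NumPy arrays as the in-memory representation (the convention used by libraries such as OpenCV and scikit-image), the structures above and a simple form of intensity quantization look like this. The `quantize` helper is hypothetical, written only for illustration.

```python
import numpy as np

# Grayscale: a 2-D matrix indexed as img[row, col], i.e. [y, x], origin at top-left.
gray = np.zeros((3, 4), dtype=np.uint8)    # 3 rows (height) x 4 columns (width)
gray[0, 3] = 255                            # top-right pixel set to white

# Color: a 3-D tensor of shape (height, width, 3) holding R, G, B values.
color = np.zeros((3, 4, 3), dtype=np.uint8)
color[1, 2] = [255, 0, 0]                   # pure red pixel at row 1, column 2

def quantize(img: np.ndarray, levels: int) -> np.ndarray:
    """Hypothetical helper: map 8-bit intensities onto `levels` evenly spaced values."""
    bins = (img.astype(np.uint16) * levels) // 256      # bin index in [0, levels - 1]
    return (bins * (256 // levels)).astype(np.uint8)    # representative value per bin

ramp = np.arange(0, 256, 32).astype(np.uint8)           # 0, 32, ..., 224
print(gray.shape, color.shape)   # (3, 4) (3, 4, 3)
print(quantize(ramp, 4))         # [  0   0  64  64 128 128 192 192]
```

Fewer quantization levels mean coarser brightness steps; the spatial sampling (the array shape) is unaffected.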

Filtering and Convolution

Filtering is a cornerstone operation in image processing. It modifies pixel values by convolving the image with small kernels (filters) to achieve effects such as blurring, sharpening, denoising, and edge enhancement.

Types of Filters:

- Mean (Box) Filter: An m×n kernel with every entry equal to 1⁄(mn), producing a local average; useful for simple blurring.

- Gaussian Filter: Weights neighbors by a Gaussian distribution; smooths images with less edge blurring and fewer blocky artifacts than a mean filter of the same size.

- Sharpening Filter: Emphasizes edges by subtracting a Laplacian from the image, e.g., with the kernel [0 −1 0; −1 5 −1; 0 −1 0].

- Median Filter: Non-linear filtering where each pixel is replaced by the median of its neighborhood; excellent at removing salt-and-pepper noise while preserving edges.
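The four filters above can be sketched in plain NumPy. This is an illustrative implementation, not a library API: `filter2d`, `gaussian_kernel`, and `median_filter` are hypothetical helpers, borders are handled by replicating edge pixels (an assumption; libraries offer several border modes), and `filter2d` computes correlation, which matches convolution for the symmetric kernels used here.

```python
import numpy as np

def filter2d(img, kernel):
    """Apply a kernel by sliding-window weighted sums (correlation), same output size.

    Borders are handled by replicating edge pixels. For symmetric kernels,
    correlation and convolution give identical results."""
    kh, kw = kernel.shape
    padded = np.pad(img.astype(float), ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    H, W = img.shape
    out = np.zeros((H, W))
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + H, dx:dx + W]
    return out

def gaussian_kernel(size=5, sigma=1.0):
    """Normalized 2-D Gaussian kernel (weights sum to 1)."""
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()

def median_filter(img, size=3):
    """Replace each pixel with the median of its size x size neighborhood."""
    r = size // 2
    padded = np.pad(img.astype(float), r, mode="edge")
    H, W = img.shape
    windows = np.stack([padded[dy:dy + H, dx:dx + W]
                        for dy in range(size) for dx in range(size)])
    return np.median(windows, axis=0)

box_kernel = np.full((3, 3), 1 / 9)                                      # mean filter
sharpen_kernel = np.array([[0, -1, 0], [-1, 5, -1], [0, -1, 0]], float)

# Flat gray patch with one "salt" pixel.
noisy = np.full((5, 5), 100.0)
noisy[2, 2] = 255.0

box_out = filter2d(noisy, box_kernel)            # spike is spread out, not removed
gauss_out = filter2d(noisy, gaussian_kernel())   # spike is smoothed
sharp_out = filter2d(noisy, sharpen_kernel)      # spike is exaggerated
median_out = median_filter(noisy)                # spike is removed entirely
print(box_out[2, 2], median_out[2, 2])
```

In practice, optimized equivalents such as OpenCV's `cv2.filter2D`, `cv2.GaussianBlur`, and `cv2.medianBlur` would replace these loops.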

Linear vs. Non-linear Filtering:

- Linear filters (mean, Gaussian, Sobel): Compute each output pixel as a weighted sum of neighboring pixel intensities.

- Non-linear filters (e.g., median): Use order statistics and are robust to outliers.
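The difference between linear and non-linear filters can be checked numerically. A linear filter obeys superposition, filter(a + b) = filter(a) + filter(b); a median filter does not, and that is exactly what lets it reject an outlier instead of spreading it. This 1-D NumPy sketch uses hypothetical helpers `mean3` and `median3`.

```python
import numpy as np

def mean3(x):
    """Linear filter: 3-tap moving average (valid region only)."""
    return np.convolve(x, np.ones(3) / 3, mode="valid")

def median3(x):
    """Non-linear filter: 3-tap running median (valid region only)."""
    return np.array([np.median(x[i:i + 3]) for i in range(len(x) - 2)])

a = np.array([0.0, 0.0, 9.0, 0.0, 0.0])   # isolated spike (an outlier)
b = np.array([1.0, 2.0, 3.0, 4.0, 5.0])   # smooth ramp

print(np.allclose(mean3(a + b), mean3(a) + mean3(b)))        # True: superposition holds
print(np.allclose(median3(a + b), median3(a) + median3(b)))  # False: it does not
print(mean3(a), median3(a))  # the mean spreads the spike; the median removes it
```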

Frequency Domain Filtering:

- Applying the Fourier transform to an image (F = ℱ{I}) allows filtering in frequency space:

- Low-pass filters: Attenuate high frequencies, producing smoothing.

- High-pass filters: Attenuate low frequencies, enhancing edges.

- Band-pass filters: Isolate mid-range frequencies.
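A minimal sketch of frequency-domain filtering with NumPy's FFT follows. It uses "ideal" masks (a hard cutoff radius around the zero-frequency term), an assumption made for simplicity; ideal masks cause ringing, so smoother masks such as Gaussian or Butterworth are common in practice. The function name `frequency_filter` and its `cutoff` parameter are hypothetical.

```python
import numpy as np

def frequency_filter(img, cutoff, kind="low"):
    """Filter a grayscale image with an ideal mask in the 2-D Fourier domain.

    kind="low" keeps frequencies within radius `cutoff` of the zero-frequency term,
    kind="high" keeps those outside it, and kind="band" takes cutoff=(inner, outer)."""
    F = np.fft.fftshift(np.fft.fft2(img))             # spectrum with DC at the center
    rows, cols = img.shape
    y, x = np.ogrid[:rows, :cols]
    dist = np.hypot(y - rows // 2, x - cols // 2)    # distance from the DC term
    if kind == "low":
        mask = dist <= cutoff
    elif kind == "high":
        mask = dist > cutoff
    else:
        inner, outer = cutoff
        mask = (dist > inner) & (dist <= outer)
    return np.real(np.fft.ifft2(np.fft.ifftshift(F * mask)))

rng = np.random.default_rng(0)
img = rng.random((16, 16))
low = frequency_filter(img, 3, "low")            # smoothed image
high = frequency_filter(img, 3, "high")          # fine detail and edges
band = frequency_filter(img, (2, 5), "band")     # mid-range frequencies only
```

Because the low- and high-pass masks here are complementary, their outputs sum back to the original image.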

Gaussian smoothing in the spatial domain corresponds to attenuating high frequencies in the Fourier domain (the Fourier transform of a Gaussian is itself a Gaussian), and the convolution theorem, ℱ{I * K} = ℱ{I} ⋅ ℱ{K}, enables fast implementation with the FFT.
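The convolution theorem can be verified directly. The sketch below, assuming NumPy, compares a brute-force circular convolution with the FFT route; both helper names are hypothetical. Multiplying DFTs yields circular (wrap-around) convolution; to obtain ordinary linear convolution, both arrays are usually zero-padded to at least (H + kh - 1) × (W + kw - 1) first.

```python
import numpy as np

def circular_convolve_direct(img, kernel):
    """Brute-force circular convolution: out[i, j] = sum_{m,n} K[m, n] * I[i - m, j - n]."""
    out = np.zeros(img.shape)
    for m in range(kernel.shape[0]):
        for n in range(kernel.shape[1]):
            # np.roll shifts with wrap-around, so rolled[i, j] == img[i - m, j - n].
            out += kernel[m, n] * np.roll(img, shift=(m, n), axis=(0, 1))
    return out

def circular_convolve_fft(img, kernel):
    """Same result via the convolution theorem: F{I * K} = F{I} . F{K}."""
    K = np.fft.fft2(kernel, s=img.shape)               # zero-pad kernel to image size
    return np.real(np.fft.ifft2(np.fft.fft2(img) * K))

rng = np.random.default_rng(1)
img = rng.random((32, 32))
g = np.exp(-np.arange(-2, 3) ** 2 / 2.0)
kernel = np.outer(g, g) / np.outer(g, g).sum()         # 5x5 Gaussian kernel

direct = circular_convolve_direct(img, kernel)
via_fft = circular_convolve_fft(img, kernel)
print(np.allclose(direct, via_fft))  # True
```

The direct loop costs O(HW·kh·kw) operations, while the FFT route costs O(HW log HW), which is why the FFT pays off for large kernels.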


Edge Detection Techniques

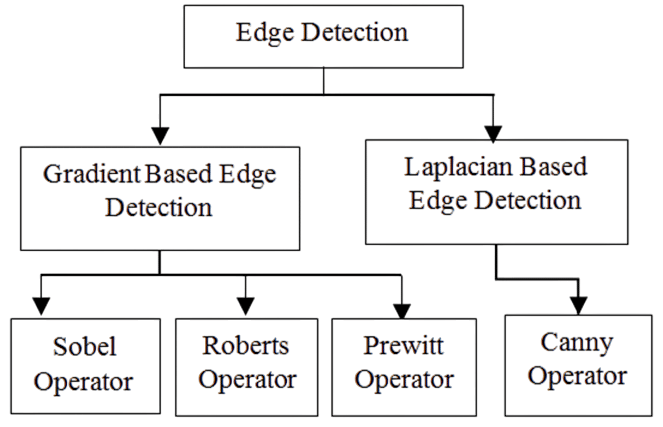

Edge detection techniques locate sharp discontinuities in pixel intensity. These edges are critical for segmentation, object detection, and feature extraction, core operations that illustrate the practical foundations of machine learning for vision.

Common Operators:

- Sobel Kernels: Approximate gradients in x- and y-directions.

- Prewitt Operator: Similar to Sobel but with uniformly weighted kernels, which smooth less.

- Laplacian of Gaussian (LoG): Applies a Gaussian blur and then the Laplacian (a second-order derivative), locating edges at zero crossings; the blur reduces, but does not eliminate, the Laplacian's sensitivity to noise.

- Canny Edge Detector: Combines gradient computation, non-maximum suppression, and hysteresis thresholding; produces accurate, thin edges.
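The gradient operators above differ mainly in their kernel weights. This NumPy sketch, with hypothetical helper names, applies the Prewitt, Sobel, and Scharr x-kernels (and their transposes for y) to a synthetic step edge and returns gradient magnitude and direction. Borders are replicated, and kernels are applied by correlation, matching how these operators are usually written.

```python
import numpy as np

# x-direction kernels; the y-direction kernel is the transpose.
KERNELS = {
    "prewitt": np.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], float),
    "sobel":   np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], float),
    "scharr":  np.array([[-3, 0, 3], [-10, 0, 10], [-3, 0, 3]], float),
}

def correlate3x3(img, k):
    """3x3 cross-correlation with replicated borders, same output size."""
    p = np.pad(img.astype(float), 1, mode="edge")
    H, W = img.shape
    return sum(k[dy, dx] * p[dy:dy + H, dx:dx + W] for dy in range(3) for dx in range(3))

def gradient(img, operator="sobel"):
    """Return gradient magnitude and direction (radians) for the chosen operator."""
    kx = KERNELS[operator]
    gx = correlate3x3(img, kx)      # responds to vertical edges
    gy = correlate3x3(img, kx.T)    # responds to horizontal edges
    return np.hypot(gx, gy), np.arctan2(gy, gx)

# Vertical step edge: dark (0) on the left, bright (10) on the right.
step = np.zeros((5, 6))
step[:, 3:] = 10
mag, ang = gradient(step, "sobel")
print(mag[2])   # strongest response in the two columns straddling the edge
```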

Steps in Canny Algorithm:

- Smooth image with Gaussian filter.

- Compute gradient magnitude and direction.

- Apply non-maximum suppression.

- Apply double thresholding and trace edges by hysteresis.
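The four Canny steps can be sketched in plain NumPy as below. This is a simplified, unoptimized illustration under stated assumptions, not a reference implementation: directions are quantized to four bins, thresholds are given as fractions of the peak gradient magnitude, and hysteresis grows strong edges into 8-connected weak pixels. The helper names are hypothetical; in practice, library versions such as OpenCV's `cv2.Canny` or scikit-image's `skimage.feature.canny` would be used.

```python
import numpy as np

def _filter(img, k):
    """Same-size cross-correlation with replicated borders."""
    rh, rw = k.shape[0] // 2, k.shape[1] // 2
    p = np.pad(img, ((rh, rh), (rw, rw)), mode="edge")
    H, W = img.shape
    return sum(k[dy, dx] * p[dy:dy + H, dx:dx + W]
               for dy in range(k.shape[0]) for dx in range(k.shape[1]))

def canny(img, sigma=1.0, low=0.1, high=0.3):
    """Simplified Canny detector; `low`/`high` are fractions of the peak gradient."""
    img = img.astype(float)
    H, W = img.shape
    # Step 1: Gaussian smoothing.
    ax = np.arange(-int(3 * sigma), int(3 * sigma) + 1)
    g = np.exp(-ax ** 2 / (2 * sigma ** 2))
    smooth = _filter(img, np.outer(g, g) / np.outer(g, g).sum())
    # Step 2: Sobel gradient magnitude and direction (degrees in [0, 180)).
    sx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], float)
    gx, gy = _filter(smooth, sx), _filter(smooth, sx.T)
    mag = np.hypot(gx, gy)
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180
    # Step 3: non-maximum suppression along the quantized gradient direction.
    nms = np.zeros_like(mag)
    for i in range(1, H - 1):
        for j in range(1, W - 1):
            a = angle[i, j]
            if a < 22.5 or a >= 157.5:     # horizontal gradient: left/right neighbors
                n1, n2 = mag[i, j - 1], mag[i, j + 1]
            elif a < 67.5:                 # ~45 degrees (rows grow downward)
                n1, n2 = mag[i - 1, j - 1], mag[i + 1, j + 1]
            elif a < 112.5:                # vertical gradient: up/down neighbors
                n1, n2 = mag[i - 1, j], mag[i + 1, j]
            else:                          # ~135 degrees
                n1, n2 = mag[i - 1, j + 1], mag[i + 1, j - 1]
            # Strict on one side so a two-pixel plateau yields a one-pixel edge.
            if mag[i, j] > n1 and mag[i, j] >= n2:
                nms[i, j] = mag[i, j]
    # Step 4: double threshold, then hysteresis (weak pixels kept only if linked to strong).
    peak = nms.max() if nms.max() > 0 else 1.0
    strong = nms >= high * peak
    weak = (nms >= low * peak) & ~strong
    edges = strong
    while True:
        p = np.pad(edges, 1)
        near_edge = np.zeros_like(edges)
        for dy in range(3):
            for dx in range(3):
                near_edge |= p[dy:dy + H, dx:dx + W]
        grown = edges | (weak & near_edge)
        if np.array_equal(grown, edges):
            return grown
        edges = grown

# Synthetic vertical step edge between columns 9 and 10.
img = np.zeros((12, 20))
img[:, 10:] = 1.0
edges = canny(img)
```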

Other Edge Detectors:

- Roberts Cross: Uses 2×2 kernels to compute diagonal gradients; fast but sensitive to noise.

- Scharr Filter: Refined version of Sobel with better rotational symmetry.

Edge maps serve as boundaries for segmentation and can be used as features in machine vision applications.

Color Spaces and Histograms

Color representations and histograms are key techniques in image analysis, providing effective ways to understand and process visual data. Different color spaces, such as RGB, HSV, LAB, and YCbCr, encode image information differently, and each suits specific tasks. RGB represents images through additive red, green, and blue channels and is the standard input format for most vision-based machine learning models. HSV supports color-based segmentation by separating hue, saturation, and value (brightness). LAB (CIELAB) is designed to be approximately perceptually uniform, so numerical distances roughly track perceived color differences. YCbCr aids video and image compression by separating luminance (Y) from chrominance (Cb, Cr). Color histograms capture the distribution of pixel intensities in each channel, supporting tasks such as image similarity comparison and segmentation. Histogram equalization redistributes intensities to improve contrast and visibility, especially in low-contrast regions. These representations are therefore essential in image processing and computer vision applications.
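As a sketch of three ideas from this section: the RGB-to-YCbCr conversion below uses the full-range BT.601 coefficients used by JPEG (an assumption; other standards such as BT.709 use different weights), HSV conversion uses Python's standard `colorsys` module, and histogram equalization maps each intensity through the normalized cumulative histogram. The helper names are hypothetical.

```python
import colorsys
import numpy as np

def rgb_to_ycbcr(rgb):
    """Full-range BT.601 (JPEG-style) conversion for RGB values in [0, 255]."""
    r, g, b = (rgb[..., c].astype(float) for c in range(3))
    y = 0.299 * r + 0.587 * g + 0.114 * b              # luminance
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b   # blue-difference chroma
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b   # red-difference chroma
    return np.stack([y, cb, cr], axis=-1)

def equalize_histogram(gray):
    """Histogram equalization for an 8-bit grayscale image via its cumulative histogram."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]                          # count at the darkest used level
    scale = max(cdf[-1] - cdf_min, 1)                  # avoid dividing by zero on flat images
    lut = np.clip(np.round((cdf - cdf_min) / scale * 255), 0, 255).astype(np.uint8)
    return lut[gray]                                   # look up each pixel's new value

# HSV for one pixel via the standard library (all values in [0, 1]).
h, s, v = colorsys.rgb_to_hsv(1.0, 0.5, 0.0)           # orange
print(round(h * 360), s, v)                            # hue in degrees, saturation, value

# A low-contrast image spanning only intensities 100..120.
low_contrast = np.array([[100, 105], [110, 120]], dtype=np.uint8)
print(equalize_histogram(low_contrast))
```

After equalization, the four intensities spread across the full 0 to 255 range.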

Morphological Operations

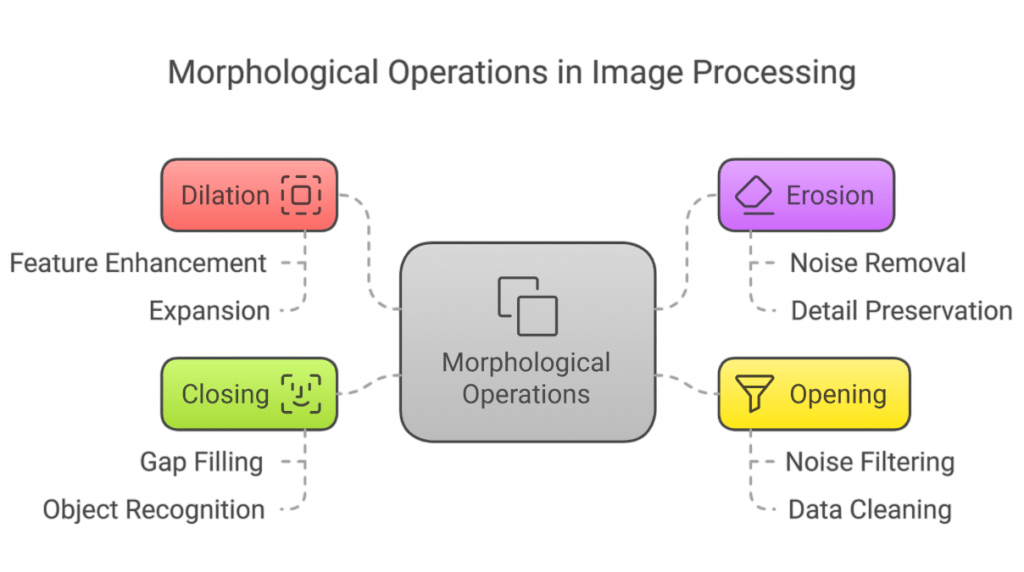

Morphological image processing is a powerful technique for manipulating the structure of objects in binary and grayscale images. It uses a small binary matrix called a structuring element (SE) as a probe. The two basic operations are erosion, which removes pixels from object boundaries by keeping only positions where the SE fits entirely inside the object, and dilation, which adds pixels to object boundaries by turning on every position where the reflected (mirrored) SE overlaps the object.
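A minimal NumPy sketch of binary erosion and dilation, assuming a boolean image and an odd-sized structuring element given as a boolean array; pixels outside the image are treated as background. The helper names are hypothetical; OpenCV (`cv2.erode`, `cv2.dilate`) and scikit-image provide optimized versions.

```python
import numpy as np

def _views(img, se):
    """Shifted copies of `img`, one per 'on' element of an odd-sized SE (centered)."""
    ry, rx = se.shape[0] // 2, se.shape[1] // 2
    p = np.pad(img, ((ry, ry), (rx, rx)), constant_values=False)  # outside = background
    H, W = img.shape
    return [p[dy:dy + H, dx:dx + W]
            for dy in range(se.shape[0]) for dx in range(se.shape[1]) if se[dy, dx]]

def erode(img, se):
    """Keep a pixel only if the SE centered there fits entirely inside the object."""
    return np.logical_and.reduce(_views(img, se))

def dilate(img, se):
    """Turn a pixel on if the reflected SE centered there overlaps the object."""
    return np.logical_or.reduce(_views(img, se[::-1, ::-1]))

se = np.ones((3, 3), dtype=bool)          # 3x3 square structuring element
square = np.zeros((7, 7), dtype=bool)
square[2:5, 2:5] = True                   # 3x3 object in a 7x7 image

print(erode(square, se).sum())    # 1: only the center pixel survives
print(dilate(square, se).sum())   # 25: the object grows to 5x5
```

The reflection in `dilate` only matters for asymmetric structuring elements; for the square used here, it has no effect.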

Combined operations refine images further by applying erosion and dilation in sequence: opening (erosion followed by dilation) removes small noise while preserving the shape of larger objects, and closing (dilation followed by erosion) fills small gaps and holes. Derived operators such as the top-hat transform and geodesic transformations extend these capabilities. Libraries such as OpenCV and scikit-image provide efficient implementations for tasks such as noise removal, hole filling, and connected-component labeling, making morphological processing an essential tool in image segmentation and recognition workflows.
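Opening, closing, and a binary white top-hat can be sketched on top of erosion and dilation. This self-contained NumPy version assumes a square structuring element and treats pixels outside the image as background; the helper names are hypothetical (OpenCV exposes these operations through `cv2.morphologyEx`).

```python
import numpy as np

def _morph(img, size, op):
    """Binary erosion (op=np.logical_and) or dilation (op=np.logical_or) with a
    size x size square SE. The square is symmetric, so no reflection is needed."""
    r = size // 2
    p = np.pad(img, r, constant_values=False)       # outside the image = background
    H, W = img.shape
    return op.reduce([p[dy:dy + H, dx:dx + W] for dy in range(size) for dx in range(size)])

def opening(img, size=3):
    """Erosion then dilation: removes specks smaller than the SE."""
    return _morph(_morph(img, size, np.logical_and), size, np.logical_or)

def closing(img, size=3):
    """Dilation then erosion: fills holes and gaps smaller than the SE."""
    return _morph(_morph(img, size, np.logical_or), size, np.logical_and)

def white_top_hat(img, size=3):
    """Binary white top-hat: the small bright details that opening removes."""
    return img & ~opening(img, size)

shape = np.zeros((10, 10), dtype=bool)
shape[2:7, 2:7] = True            # 5x5 object
noisy = shape.copy()
noisy[8, 8] = True                # plus an isolated noise pixel
holey = shape.copy()
holey[4, 4] = False               # with a one-pixel hole

print(np.array_equal(opening(noisy), shape))   # True: noise removed, object kept
print(np.array_equal(closing(holey), shape))   # True: hole filled
```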


Feature Extraction from Images

Image analysis requires meaningful descriptors: features that enable classification, segmentation, and recognition, often paired with classifiers such as the Support Vector Machine (SVM) that operate in high-dimensional feature spaces.

Traditional Descriptors:

- SIFT: Scale- and rotation-invariant keypoints with descriptive histograms.

- SURF: Faster alternative to SIFT using integral images.

- ORB: Efficient binary descriptor combining oriented FAST keypoints with rotated BRIEF descriptors; well suited to real-time applications.

Texture Analysis:

- Local Binary Patterns (LBP): Encodes local intensity differences around pixels.

- Gabor Filters: Multi-scale, multi-orientation filtering captures edge and texture information.

- GLCM (Gray-Level Co-occurrence Matrix): Counts how often pairs of gray levels occur at a given offset, from which statistical texture features such as entropy and contrast are computed.
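The basic 3×3 LBP operator can be sketched in NumPy: each neighbor contributes one bit, set when the neighbor is at least as bright as the center, and the histogram of the resulting 8-bit codes serves as a texture descriptor. This is the simplest variant (radius 1, eight neighbors, no interpolation or rotation invariance); the bit ordering and helper names are assumptions.

```python
import numpy as np

# Neighbor offsets (dy, dx), clockwise from the top-left; neighbor k sets bit k.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp(img):
    """Basic 3x3 LBP codes for interior pixels (border pixels are dropped)."""
    img = img.astype(float)
    center = img[1:-1, 1:-1]
    H, W = center.shape
    codes = np.zeros((H, W), dtype=np.int64)
    for bit, (dy, dx) in enumerate(OFFSETS):
        neighbor = img[1 + dy:1 + dy + H, 1 + dx:1 + dx + W]
        codes |= (neighbor >= center).astype(np.int64) << bit
    return codes.astype(np.uint8)

def lbp_histogram(img):
    """Normalized 256-bin histogram of LBP codes, usable as a texture feature vector."""
    hist = np.bincount(lbp(img).ravel(), minlength=256).astype(float)
    return hist / hist.sum()

rng = np.random.default_rng(0)
texture = rng.integers(0, 256, size=(32, 32))
features = lbp_histogram(texture)      # 256-dimensional descriptor
```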

Feature Dimensionality:

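- High-dimensional features are commonly reduced or summarized using:

- PCA and t-SNE: PCA for dimensionality and noise reduction; t-SNE mainly for visualizing and analyzing feature structure.

- Bag-of-Visual-Words (BoVW): Quantizes local descriptors against a learned codebook of "visual words" and represents each image as a fixed-length histogram.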
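PCA can be sketched in a few lines with NumPy's SVD. The data below is a synthetic stand-in for image descriptors (64-D vectors that mostly vary along two directions), not real features, and the `pca` helper is hypothetical; scikit-learn's `PCA` class offers a full implementation.

```python
import numpy as np

def pca(features, n_components):
    """Project the rows of `features` (n_samples x n_dims) onto the top principal components.

    Returns the reduced data and the fraction of variance each component explains."""
    centered = features - features.mean(axis=0)
    # Right singular vectors (rows of vt) are the principal directions, sorted by variance.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return centered @ vt[:n_components].T, explained[:n_components]

# Synthetic 64-D vectors that mostly vary along 2 directions, plus a little noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(100, 2))
X = latent @ rng.normal(size=(2, 64)) + 0.01 * rng.normal(size=(100, 64))
reduced, ratio = pca(X, 2)
print(reduced.shape, ratio.sum())   # (100, 2) and a ratio close to 1
```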

Image Preprocessing for ML

Successful machine learning on images hinges on careful preprocessing.

Steps:

- Resizing: Ensures consistent input sizes for CNNs.

- Normalization: Scales pixel values from 0–255 to 0–1 or –1 to +1.

- Mean subtraction: Subtracts per-channel average values (often followed by division by the per-channel standard deviation).

- Augmentation: Random crop, flip, rotate, color jittering.

- Segmentation: Cropping to the foreground or to regions of interest.

- Denoising: Applying median or bilateral filters.

- Color conversion: RGB-to-grayscale or other color-space transformations.

These preprocessing techniques keep inputs consistent for learning, and augmentation in particular helps reduce overfitting and improve model robustness.
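A compact sketch of several of these steps, assuming NumPy arrays in height × width × channel layout. Nearest-neighbor resizing stands in for the interpolating resizers that libraries provide, and the per-channel `mean`/`std` values are caller-supplied (in practice they are usually computed on the training set). The function names are hypothetical.

```python
import numpy as np

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbor resize of an H x W (x C) array."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows[:, None], cols]

def preprocess(img, size=(4, 4), mean=None, std=None, rng=None):
    """Resize, scale to [0, 1], optionally standardize per channel and randomly flip."""
    x = resize_nearest(img, *size).astype(np.float32) / 255.0   # 0-255 -> 0-1
    if mean is not None and std is not None:
        x = (x - mean) / std              # per-channel mean subtraction and scaling
    if rng is not None and rng.random() < 0.5:
        x = x[:, ::-1]                    # augmentation: random horizontal flip
    return x

img = np.arange(8 * 8 * 3).reshape(8, 8, 3).astype(np.uint8)   # toy 8x8 RGB image
x = preprocess(img)                                              # values in [0, 1]
y = preprocess(img, mean=np.full(3, 0.5), std=np.full(3, 0.5))   # values in [-1, 1]
z = preprocess(img, rng=np.random.default_rng(0))                # possibly flipped
```

With mean 0.5 and standard deviation 0.5 per channel, the standardization step maps the 0 to 1 range onto -1 to +1.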


Deep Learning in Image Processing

Deep learning revolutionized image analysis by letting convolutional neural networks (CNNs) learn features directly from data, in contrast to the manually engineered features traditionally used with models such as decision trees.

CNN Structure:

- Convolution layers: Learn local patterns.

- Pooling layers: Downsample spatial dimensions.

- Fully connected layers: Aggregate features for classification.
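To make the layer roles concrete, here is a forward pass through one convolution layer, ReLU, max pooling, and a fully connected layer in plain NumPy. The weights are random rather than trained, the sizes are arbitrary, and the helper names are hypothetical; frameworks such as PyTorch or TensorFlow implement these layers efficiently and learn the weights by backpropagation.

```python
import numpy as np

def conv2d(x, w, b):
    """Convolution layer, 'valid' padding, stride 1.

    x: (C_in, H, W); w: (C_out, C_in, k, k); b: (C_out,). Like most deep learning
    frameworks, this computes cross-correlation (the kernel is not flipped)."""
    c_out, _, k, _ = w.shape
    out_h, out_w = x.shape[1] - k + 1, x.shape[2] - k + 1
    out = np.zeros((c_out, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[:, i:i + k, j:j + k]                       # (C_in, k, k)
            out[:, i, j] = np.tensordot(w, patch, axes=3) + b     # one value per filter
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool(x, size=2):
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    c, h, w = x.shape
    h, w = h // size * size, w // size * size
    return x[:, :h, :w].reshape(c, h // size, size, w // size, size).max(axis=(2, 4))

def dense(x, w, b):
    """Fully connected layer over the flattened feature maps."""
    return w @ x.ravel() + b

rng = np.random.default_rng(0)
image = rng.random((1, 8, 8))                                    # 1-channel 8x8 input
conv_w, conv_b = rng.normal(size=(4, 1, 3, 3)), np.zeros(4)       # 4 untrained filters
features = max_pool(relu(conv2d(image, conv_w, conv_b)))          # (4, 3, 3)
fc_w, fc_b = rng.normal(size=(10, features.size)), np.zeros(10)
logits = dense(features, fc_w, fc_b)                              # 10 class scores
probs = np.exp(logits - logits.max())
probs /= probs.sum()                                              # softmax
```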

Breakthrough Architectures:

- AlexNet (2012): Ignited the “deep learning era” with breakthrough ImageNet performance.

- VGG, GoogLeNet, ResNet: Progressively deeper architectures with improved accuracy; ResNet introduced skip (residual) connections that make very deep networks trainable.

- U-Net, FCN: Specialized for segmentation tasks.

Applications:

- Image classification

- Object detection

- Semantic segmentation

- Super-resolution

- Medical diagnostics

- Style transfer

- Satellite, aerial, and autonomous-driving imagery analysis

Applications In Medical, Surveillance, Industrial

Medical Imaging:

- MRI and CT scans undergo preprocessing, segmentation (e.g., brain tumor detection), and quantification using thresholding and CNNs.

- Essential for lesion classification, surgical guidance, and anomaly detection.
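Thresholding-based segmentation can be sketched with Otsu's method, one common way to choose a global threshold automatically. The source does not say which thresholding method is used, so Otsu is an assumption here, and the example image is synthetic (a darker region beside a brighter one), not real scan data. The `otsu_threshold` helper is hypothetical.

```python
import numpy as np

def otsu_threshold(gray):
    """Otsu's method: pick t maximizing between-class variance; foreground = gray > t."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()                        # intensity probabilities
    omega = np.cumsum(p)                         # probability of class "<= t"
    mu = np.cumsum(p * np.arange(256))           # cumulative mean up to t
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    between = np.nan_to_num(between, nan=0.0, posinf=0.0, neginf=0.0)
    return int(np.argmax(between))

# Synthetic stand-in for a scan: darker tissue beside a brighter region.
rng = np.random.default_rng(0)
tissue = rng.integers(40, 61, size=(32, 16))
bright = rng.integers(190, 211, size=(32, 16))
scan = np.hstack([tissue, bright]).astype(np.uint8)
t = otsu_threshold(scan)
mask = scan > t                                  # binary segmentation
```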

Surveillance:

- Face/vehicle detection, behavior analysis, sports analytics.

- YOLO and HOG+SVM enable real-time monitoring and alerting systems.

Industrial Inspection:

- Detects surface defects (metal, fabric, glass).

- Conveyor-based camera systems and deep CNNs detect cracks or misalignments in real time.

- Counting crops, identifying leaf disease via segmentation algorithms.

Each domain requires tailored pipelines that combine filtering, feature extraction, segmentation, and classifier ensembles, often built around deep learning models for high, adaptable performance.

Conclusion

Image processing connects raw visual data with intelligent understanding. It spans a wide range of techniques, from pixel-level filtering to deep learning-based recognition, covering digital image representation, filtering in the spatial and frequency domains, edge detection, color modeling, morphology, segmentation, and feature engineering. Machine learning training equips researchers and developers to use tools such as OpenCV and PIL effectively, and image processing has become a significant technology in essential industries such as healthcare, surveillance, and manufacturing, where it provides new insights and analytical capabilities that drive innovation and improve efficiency.