- Introduction to Perceptron

- History and Background

- Perceptron Architecture

- Mathematical Formulation

- Learning Algorithm

- Limitations of Single Layer Perceptron

- Multi Layer Perceptron (MLP)

- Perceptron in Neural Networks

- Use Cases and Examples

- Implementation in Python

- Perceptron Training Visualization

- Summary

Introduction to Perceptron

The perceptron is a fundamental building block in the history of artificial intelligence and neural networks. Introduced in the 1950s, it marked the beginning of the journey toward machines that learn from data. Although simple in design, it laid the groundwork for modern deep learning systems, so understanding how perceptrons work is critical for grasping the evolution and mechanisms of artificial neural networks. This guide explores the perceptron’s origins, architecture, mathematical principles, learning algorithm, and its evolution into the multi-layer perceptron. We also examine practical implementations and modern applications.

History and Background

The perceptron was introduced by Frank Rosenblatt in 1958 while working at the Cornell Aeronautical Laboratory. Inspired by earlier neural models, in particular the McCulloch-Pitts neuron, Rosenblatt’s goal was to develop a machine capable of pattern recognition and learning from data. Initially, the perceptron received significant attention from the media and researchers, and it was hailed as a major breakthrough toward building intelligent machines. Rosenblatt built a physical machine called the Mark I Perceptron, which could recognize simple patterns. However, in 1969, Marvin Minsky and Seymour Papert published a book titled Perceptrons, in which they demonstrated the limitations of single-layer perceptrons, particularly their inability to solve the XOR problem. This criticism contributed to a decline in neural network research until its resurgence in the 1980s, when backpropagation was popularized.

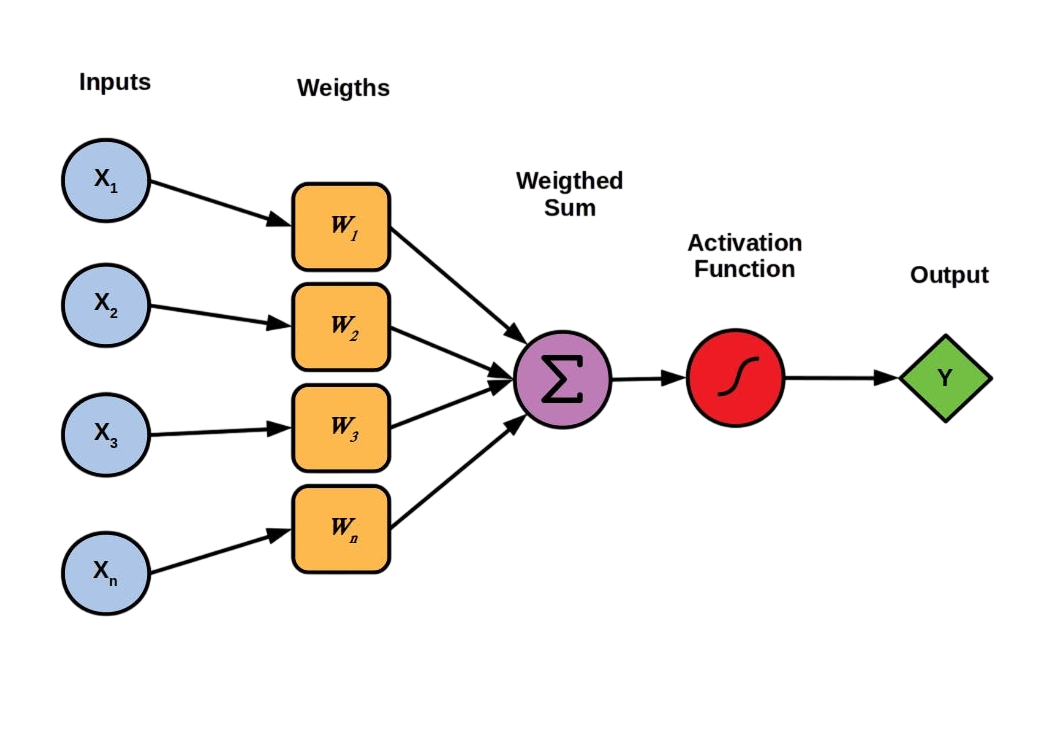

Perceptron Architecture

A perceptron is the simplest type of artificial neural network. It consists of a set of inputs, a weight for each input, a bias, a summation function, and an activation function that produces the final binary output.

Components:

- Inputs (x₁, x₂, …, xₙ): Feature values from the data.

- Weights (w₁, w₂, …, wₙ): Coefficients that represent the importance of each input.

- Bias (b): A constant that allows shifting the activation threshold.

- Summation: Calculates the weighted sum of inputs.

- Activation Function: Applies a step function to determine output (0 or 1).

$$z = \sum_{i=1}^{n} w_i x_i + b$$

$$y = \begin{cases} 1 & \text{if } z \geq 0 \\ 0 & \text{otherwise} \end{cases}$$

Key elements of a multi-layer perceptron (MLP):

- Hidden Layers: Intermediate computations that add expressiveness.

- Activation Functions: ReLU, sigmoid, and tanh for non-linearity.

- Backpropagation: An algorithm to train deep networks by propagating errors backward through the layers.

- Loss Functions: Measures like cross-entropy or mean squared error (MSE) that guide training.

Architectures built by stacking perceptron-like units:

- Deep Neural Networks (DNNs): General-purpose architectures for complex pattern learning.

- Convolutional Neural Networks (CNNs): Specialized for spatial data like images.

- Recurrent Neural Networks (RNNs): Designed for sequential data like text or time series.

Use cases that draw on perceptron concepts:

- Binary Classification: Spam detection, sentiment analysis.

- Medical Diagnostics: Identifying presence or absence of disease.

- Industrial Automation: Detecting defective items on a conveyor belt.

Typical applications of MLPs:

- Fraud Detection in Finance

- Optical Character Recognition

- Predictive Maintenance in Manufacturing

Example: training a perceptron with scikit-learn.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import Perceptron
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Dataset: 1,000 synthetic samples, 10 features (5 informative), 2 classes
X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_classes=2)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)

# Model: single-layer perceptron with learning rate eta0
model = Perceptron(max_iter=1000, eta0=0.01)
model.fit(X_train, y_train)

# Evaluation: accuracy on the held-out 30% test split
y_pred = model.predict(X_test)
print("Accuracy:", accuracy_score(y_test, y_pred))
```

Aspects of training worth visualizing:

- Decision boundaries

- Misclassified points

- Learning curve (accuracy vs. epochs)

Ways to enhance the perceptron:

- Use kernel methods (kernel perceptron)

- Use ensemble techniques like bagging

- Integrate with SVMs for better margin classification

Mathematical Formulation

Given a vector of inputs $\mathbf{x} = [x_1, x_2, \ldots, x_n]$, weights $\mathbf{w} = [w_1, w_2, \ldots, w_n]$, and a bias $b$, the perceptron computes the weighted sum $z = \sum_{i=1}^{n} w_i x_i + b$ and outputs $y = 1$ if $z \geq 0$ and $y = 0$ otherwise.

This model creates a linear decision boundary or hyperplane that separates the data into two classes.
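The perceptron's output rule, a weighted sum plus bias passed through a step function, can be sketched in a few lines of plain Python. This is a minimal illustration rather than a library API: the function name `perceptron_output` and the hand-picked weights for a logical AND are assumptions made for the example.

```python
def perceptron_output(x, w, b):
    """Return 1 if the weighted sum w.x + b is non-negative, else 0."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b  # weighted sum plus bias
    return 1 if z >= 0 else 0                          # step activation


# Hand-picked (not learned) parameters that realize a logical AND:
# the line x1 + x2 - 1.5 = 0 separates (1, 1) from the other three inputs.
and_weights, and_bias = [1.0, 1.0], -1.5

and_outputs = [perceptron_output(x, and_weights, and_bias)
               for x in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(and_outputs)  # [0, 0, 0, 1]
```

Only the input (1, 1) lands on the positive side of the hyperplane, which is exactly the linear decision boundary the formulation describes.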

Learning Algorithm

The perceptron learning rule is a basic supervised learning algorithm that adjusts weights to reduce classification errors. It starts by setting the weights and bias to zero or to small random numbers. Training then processes the examples repeatedly, calculating the predicted output for each one and updating the parameters only when a mistake occurs. The update is straightforward: each weight changes by the learning rate times the error (actual label minus predicted label) times the corresponding input, and the bias changes by the learning rate times the error. This process continues until no training example is misclassified, which is guaranteed to happen after a finite number of updates only when the data are linearly separable, or until it hits a set maximum number of epochs. The learning rate, usually a small value like 0.01, controls how much the weights change per update. Through this step-by-step method, the perceptron refines its decision boundary based on the training data.
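The learning rule can be sketched as a short training loop. The sketch below is illustrative only: the names `predict` and `train_perceptron` are hypothetical, the weights start at zero instead of small random values so the run is reproducible, and the learning rate is set to 1.0 (rather than a typical small value such as 0.01) so that all arithmetic on this toy data stays exact.

```python
def predict(x, w, b):
    """Step-activated weighted sum: 1 if w.x + b >= 0, else 0."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
    return 1 if z >= 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, max_epochs=100):
    """Train with the perceptron rule; stop early after an error-free epoch."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            error = target - predict(x, w, b)  # -1, 0, or +1
            if error != 0:
                # Move the boundary toward the misclassified example.
                w = [w_i + learning_rate * error * x_i for w_i, x_i in zip(w, x)]
                b += learning_rate * error
                mistakes += 1
        if mistakes == 0:  # converged: every example classified correctly
            break
    return w, b


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_and = [0, 0, 0, 1]  # logical AND is linearly separable

w, b = train_perceptron(X, y_and)
print([predict(x, w, b) for x in X])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the loop reaches an error-free epoch and stops well before `max_epochs`.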

Limitations of Single Layer Perceptron

Despite its elegant simplicity, the perceptron has significant computational limits that restrict its practical use. The main issue is its linear nature, which confines the model to problems where the classes can be separated perfectly by a hyperplane (a straight line in two dimensions). Additionally, the perceptron’s binary output prevents it from making probabilistic predictions, further limiting its usefulness. Most importantly, a single layer cannot represent non-linear functions such as XOR, which need a more complex decision boundary. These inherent restrictions motivate richer neural network architectures.
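The XOR limitation can be illustrated with a small brute-force search. The sketch below is a hedged demonstration, not a proof: the helper name `find_separator` and the grid of candidate values are assumptions chosen for the example. It looks for any single perceptron on the grid that classifies all four inputs correctly, once for AND and once for XOR.

```python
from itertools import product


def perceptron_output(x, w, b):
    """Step-activated weighted sum: 1 if w.x + b >= 0, else 0."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
    return 1 if z >= 0 else 0


def find_separator(samples, labels, grid):
    """Return the first (w1, w2, b) on the grid that fits every sample, or None."""
    for w1, w2, b in product(grid, repeat=3):
        if all(perceptron_output(x, (w1, w2), b) == t
               for x, t in zip(samples, labels)):
            return (w1, w2, b)
    return None


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
grid = [i / 2 for i in range(-8, 9)]  # candidate values -4.0, -3.5, ..., 4.0

and_solution = find_separator(X, [0, 0, 0, 1], grid)
xor_solution = find_separator(X, [0, 1, 1, 0], grid)

print(and_solution is not None)  # True
print(xor_solution)              # None
```

The empty result for XOR is not an accident of the grid. XOR would require $b < 0$, $w_1 + b \geq 0$, $w_2 + b \geq 0$, and $w_1 + w_2 + b < 0$; adding the two middle inequalities gives $w_1 + w_2 + b \geq -b > 0$, which contradicts the last one, so no choice of weights works.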

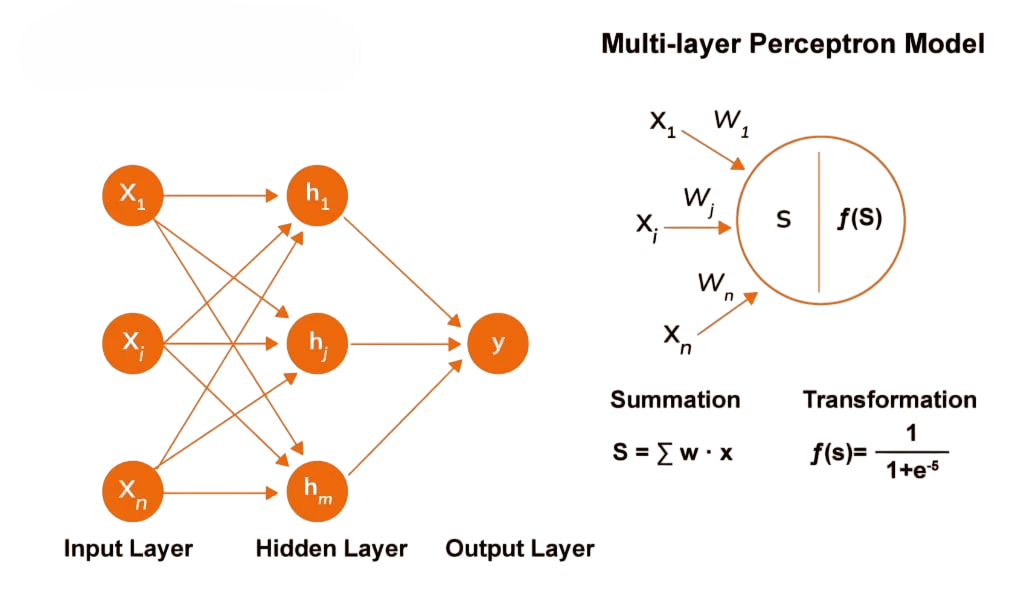

Multi Layer Perceptron (MLP)

The MLP (Multilayer Perceptron) extends the perceptron by adding one or more hidden layers between input and output layers. Each neuron applies a non-linear activation function, enabling the network to model non-linear relationships.

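Key elements include hidden layers, non-linear activation functions such as ReLU, sigmoid, and tanh, backpropagation for training, and a loss function such as cross-entropy or MSE.

MLPs are capable of universal approximation, i.e., with a suitable non-linear activation they can approximate any continuous function on a bounded (compact) input domain to arbitrary accuracy, given enough hidden units.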
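A tiny example shows what one hidden layer buys. The sketch below wires a two-layer network by hand so that it computes XOR, the function a single perceptron cannot represent. Everything here is an assumption for illustration: the weights are hand-picked rather than learned with backpropagation, and a step activation is used instead of ReLU or sigmoid to keep the arithmetic easy to follow.

```python
def unit(x, w, b):
    """One perceptron-style unit: step activation over a weighted sum."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
    return 1 if z >= 0 else 0


def xor_mlp(x):
    """Two hidden units (OR and NAND) feeding one output unit (AND)."""
    h_or = unit(x, (1, 1), -0.5)      # fires unless both inputs are 0
    h_nand = unit(x, (-1, -1), 1.5)   # fires unless both inputs are 1
    return unit((h_or, h_nand), (1, 1), -1.5)  # fires only if both hidden units fire


xor_outputs = [xor_mlp(x) for x in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(xor_outputs)  # [0, 1, 1, 0]
```

Each hidden unit draws one straight line through the input space, and the output unit combines the two half-planes into a region that no single line could carve out.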

Perceptron in Neural Networks

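In modern deep learning architectures, perceptron-like units (a weighted sum followed by an activation function) are the basic units in fully connected (dense) layers. Networks stack these units in multiple layers to form deep neural networks (DNNs), and combine them with specialized layers in convolutional neural networks (CNNs) and recurrent neural networks (RNNs).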

These architectures power applications from image recognition to language modeling.
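To make the idea of a dense layer concrete, the sketch below treats a layer as a list of perceptron-like units that all read the same inputs. It is a minimal plain-Python illustration under stated assumptions: the names `relu` and `dense_layer` and all weight values are made up for the example, and real frameworks implement the same computation with matrix operations.

```python
def relu(z):
    """Rectified linear unit: pass positive values, clamp negatives to 0."""
    return max(0.0, z)


def dense_layer(inputs, weights, biases, activation=relu):
    """Apply one fully connected layer; each row of `weights` is one unit."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# A layer of three units reading two inputs (illustrative values only).
hidden = dense_layer([2.0, 1.0],
                     weights=[[1.0, -1.0], [0.5, 0.5], [-1.0, -1.0]],
                     biases=[0.0, -1.0, 0.0])
print(hidden)  # [1.0, 0.5, 0.0]

# Stacking: the outputs of one layer become the inputs of the next.
output = dense_layer(hidden, weights=[[1.0, 1.0, 1.0]], biases=[0.0])
print(output)  # [1.5]
```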

Use Cases and Examples

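While the single-layer perceptron has limited practical use, it is foundational for understanding more advanced neural networks. Real-world scenarios that involve concepts derived from the perceptron include binary classification (spam detection, sentiment analysis), medical diagnostics (identifying the presence or absence of disease), and industrial automation (detecting defective items on a conveyor belt). The Support Vector Machine (SVM) algorithm builds on similar principles to find optimal decision boundaries for classification and regression tasks.

In practice, MLPs are applied to fraud detection in finance, optical character recognition, and predictive maintenance in manufacturing.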

Implementation in Python

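A basic implementation uses scikit-learn’s Perceptron class: generate a synthetic two-class dataset with make_classification, hold out 30% of it with train_test_split, fit the model, and report accuracy_score on the held-out data.

This shows how a simple perceptron can be trained for binary classification.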

Perceptron Training Visualization

Using libraries like matplotlib and seaborn, you can visualize decision boundaries, misclassified points, and the learning curve (accuracy vs. epochs).

This helps in understanding the learning dynamics and when to stop training.
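As one possible starting point, the sketch below records training accuracy after every epoch and plots it as a learning curve. It is an illustration under stated assumptions: the function names `learning_curve` and `plot_learning_curve`, the output file name, and the toy AND dataset are all hypothetical, and matplotlib is imported only inside the plotting helper so the curve itself can be computed without it.

```python
def predict(x, w, b):
    """Step-activated weighted sum: 1 if w.x + b >= 0, else 0."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
    return 1 if z >= 0 else 0


def learning_curve(samples, labels, learning_rate=1.0, epochs=10):
    """Train with the perceptron rule and return accuracy after each epoch."""
    w, b = [0.0] * len(samples[0]), 0.0
    curve = []
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(x, w, b)
            if error != 0:
                w = [w_i + learning_rate * error * x_i for w_i, x_i in zip(w, x)]
                b += learning_rate * error
        correct = sum(predict(x, w, b) == t for x, t in zip(samples, labels))
        curve.append(correct / len(samples))
    return curve


def plot_learning_curve(curve, path="learning_curve.png"):
    """Save an accuracy-vs-epoch plot (requires matplotlib)."""
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    ax.plot(range(1, len(curve) + 1), curve, marker="o")
    ax.set_xlabel("Epoch")
    ax.set_ylabel("Training accuracy")
    fig.savefig(path)
    plt.close(fig)


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
curve = learning_curve(X, [0, 0, 0, 1])  # logical AND
print(curve)
```

Calling `plot_learning_curve(curve)` writes the figure to disk. A curve that flattens at 1.0 indicates that further epochs no longer change the weights, which is a natural point to stop training.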

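Enhancing the Perceptron

The basic perceptron can be enhanced with kernel methods (the kernel perceptron), with ensemble techniques like bagging, or by moving to SVMs for better margin classification.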
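One enhancement is the kernel perceptron, which replaces the dot product with a kernel function so that the decision boundary can be non-linear in the original inputs. The sketch below is a minimal illustration, not a reference implementation: the names `poly_kernel` and `train_kernel_perceptron` are hypothetical, the degree-2 polynomial kernel is just one possible choice, and labels are mapped internally from 0/1 to -1/+1, which is the usual convention for this algorithm.

```python
def poly_kernel(a, b, degree=2):
    """Polynomial kernel (1 + a.b)^degree."""
    return (1 + sum(a_i * b_i for a_i, b_i in zip(a, b))) ** degree


def train_kernel_perceptron(samples, labels, kernel=poly_kernel, max_epochs=50):
    """Return a predictor trained with the kernel perceptron rule."""
    signs = [1 if t == 1 else -1 for t in labels]  # map 0/1 labels to -1/+1
    alphas = [0] * len(samples)                    # mistake count per example

    def score(x):
        return sum(a * s * kernel(x_j, x)
                   for a, s, x_j in zip(alphas, signs, samples))

    for _ in range(max_epochs):
        mistakes = 0
        for i, (x, s) in enumerate(zip(samples, signs)):
            if s * score(x) <= 0:  # misclassified (or on the boundary)
                alphas[i] += 1     # give this example more weight
                mistakes += 1
        if mistakes == 0:
            break

    return lambda x: 1 if score(x) > 0 else 0


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_xor = [0, 1, 1, 0]

classify = train_kernel_perceptron(X, y_xor)
print([classify(x) for x in X])  # [0, 1, 1, 0]
```

The polynomial kernel implicitly adds the product term x1·x2 as a feature, and in that expanded space XOR becomes linearly separable, so the same mistake-driven update converges.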

Summary

The perceptron is an important algorithm that Rosenblatt introduced in 1958. It marks a key moment in machine learning by modeling a simplified biological neuron. Designed mainly for binary classification, it establishes a linear decision boundary that enables basic pattern recognition. While it cannot handle problems that are not linearly separable, the perceptron has become a key part of more complex neural network designs. Through later developments like multi-layer perceptrons (MLPs), researchers have turned this basic model into a powerful tool for tackling complex, non-linear problems. By learning about the perceptron, students gain valuable insights into neural network design, which gives them a solid starting point for exploring deep learning and computational modeling further.