- Introduction to PyTorch and TensorFlow

- Development History

- Syntax Comparison

- Computational Graphs

- Debugging and Flexibility

- Performance and Speed

- Community Support and Documentation

- Deployment Capabilities

- Use Cases and Applications

- Learning Curve

- Industry Adoption

- Future Trends in ML Frameworks

- Conclusion

Introduction

In the fast-evolving world of artificial intelligence (AI) and deep learning, two frameworks dominate the landscape: TensorFlow and PyTorch. TensorFlow, developed by Google, and PyTorch, created by Facebook’s AI Research lab (FAIR), are both powerful tools for building and training neural networks. Both are open-source and serve similar purposes, but they differ in architecture, usability, and application, and hands-on experience with each is the most reliable way to choose the right tool for a given AI development goal. This guide covers their history, features, strengths, and weaknesses to help you decide which framework suits your needs.

Development History

TensorFlow was introduced in 2015 by Google as a successor to its closed-source machine learning library, DistBelief. Its goal was to provide a flexible platform for production-level machine learning. Over time, TensorFlow has gained robust support for deployment and scalability, complemented by cloud platforms such as AWS, which offer purpose-built infrastructure, pre-trained models, and scalable services for end-to-end ML workflows. PyTorch, on the other hand, was released in 2017 by Facebook, emerging from the older Torch framework. It quickly gained popularity among researchers and developers for its dynamic computation graph and intuitive Pythonic syntax.

Syntax Comparison

- Syntax is one of the most significant differences between TensorFlow and PyTorch. TensorFlow 1.x followed a declarative style, requiring users to define the computation graph before executing it, and its Keras API remains declarative in spirit: a model is described as a stack of layers and then compiled. PyTorch, in contrast, employs an imperative approach, meaning computations are executed as they are written. This makes PyTorch code more readable and easier to debug for those familiar with Python.

- For instance, building a simple neural network in PyTorch feels like writing regular Python code. Keras and lower-level TensorFlow, meanwhile, differ from each other in abstraction level, syntax complexity, and integration depth, which makes them suited to different stages of model development and deployment.

- TensorFlow 2.x has addressed many criticisms from its earlier versions and now offers eager execution by default, which brings it closer to PyTorch’s usability. However, PyTorch still holds the edge for developers who prefer native control flow and debugging tools.
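The contrast between the declarative and imperative styles described above can be sketched without either framework. The toy below is plain Python and does not use the TensorFlow or PyTorch API; `Node`, `Placeholder`, `Add`, `Mul`, `run`, and `linear` are hypothetical names chosen for illustration. It only shows the idea that a declarative program first records a computation and runs it later, while an imperative program computes each line immediately.

```python
# Toy illustration only: plain Python, not the TensorFlow or PyTorch API.
# Every class and function name here is hypothetical.


class Node:
    """A deferred operation: it records what to compute, not the result."""

    def __add__(self, other):
        return Add(self, other)

    def __mul__(self, other):
        return Mul(self, other)


class Placeholder(Node):
    """A named input whose value is supplied only when the graph runs."""

    def __init__(self, name):
        self.name = name

    def evaluate(self, feed):
        return feed[self.name]


class Add(Node):
    def __init__(self, left, right):
        self.left, self.right = left, right

    def evaluate(self, feed):
        return self.left.evaluate(feed) + self.right.evaluate(feed)


class Mul(Node):
    def __init__(self, left, right):
        self.left, self.right = left, right

    def evaluate(self, feed):
        return self.left.evaluate(feed) * self.right.evaluate(feed)


def run(node, feed):
    """Execute a previously described graph with concrete input values."""
    return node.evaluate(feed)


# Declarative style: describe the computation first, run it later with data.
x = Placeholder("x")
w = Placeholder("w")
b = Placeholder("b")
graph = x * w + b  # nothing is computed yet; this is an Add node
declarative_result = run(graph, {"x": 3.0, "w": 2.0, "b": 1.0})


# Imperative style: each line executes immediately on real values.
def linear(x_value, w_value, b_value):
    product = x_value * w_value  # a real number that can be inspected here
    return product + b_value


imperative_result = linear(3.0, 2.0, 1.0)

print(declarative_result, imperative_result)
```

Both paths compute the same value. The practical difference is where an intermediate such as `product` can be inspected: in the imperative version it exists as an ordinary Python value on the line that creates it, whereas in the declarative version it exists only while `run` is executing.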

Computational Graphs

- TensorFlow originally used static computational graphs, which required defining the entire graph before running it. This approach was efficient for optimization and deployment but cumbersome during development. TensorFlow 2.x made eager execution the default and added the tf.function decorator, so it now supports both dynamic and static graph modes.

- PyTorch uses dynamic computational graphs, also known as define-by-run, where the graph is built on the fly as operations are executed. This feature is particularly advantageous for research and applications where model structure needs to change dynamically. It offers a flexible and intuitive development experience, especially for tasks like recurrent neural networks.
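To make define-by-run concrete, here is a minimal sketch of a scalar automatic-differentiation tape in plain Python. It is not PyTorch's implementation, and `Value`, `backward`, and `repeated_scale` are hypothetical names used only for illustration. The point it tries to show is that the graph is recorded as operations execute, so an ordinary Python loop decides the graph's shape at run time.

```python
# Toy define-by-run autodiff in plain Python; not PyTorch's implementation.
# The Value class and the function names are hypothetical.


class Value:
    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self.parents = parents    # nodes this value was computed from
        self.grad_fns = grad_fns  # maps upstream gradient to each parent's share

    def __add__(self, other):
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Topologically order the recorded graph, then apply the chain rule
        # from the output back to the inputs.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, grad_fn in zip(node.parents, node.grad_fns):
                parent.grad += grad_fn(node.grad)


def repeated_scale(x, w, steps):
    # Ordinary Python control flow decides how many multiply nodes exist.
    out = x
    for _ in range(steps):
        out = out * w
    return out


w = Value(2.0)
x = Value(3.0)
y = repeated_scale(x, w, steps=3)  # records the graph for x * w * w * w
y.backward()
print(y.data, w.grad, x.grad)
```

Calling `repeated_scale` with a different `steps` value records a different graph on the next run, with no separate graph-definition step. This is the property that makes define-by-run convenient for models whose structure depends on the input, such as recurrent networks over variable-length sequences.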

- Both frameworks have strong community support and extensive documentation. TensorFlow boasts a broader range of tutorials, courses (such as the TensorFlow Developer Certificate), and integration with Google Cloud services. It has a well-established ecosystem including TensorFlow Lite, TensorFlow.js, and TensorFlow Extended (TFX).

- PyTorch, meanwhile, is the preferred choice in academic research, with most cutting-edge models and papers implemented using it alongside other Best Machine Learning Tools that streamline development, training, and deployment across diverse ML workflows.

- The framework enjoys active contributions from the research community and organizations like OpenAI, Hugging Face, and Tesla. The documentation is concise, and the ecosystem has matured rapidly with tools like PyTorch Lightning and TorchServe.

- TensorFlow is widely used in enterprise and production settings due to its scalability and deployment tools. Companies like Google, Airbnb, and Twitter use TensorFlow for image recognition, recommendation systems, and natural language processing.

- PyTorch is dominant in the research community and is frequently used in academia and experimental projects. Organizations such as Facebook, Tesla, and OpenAI rely on PyTorch for cutting-edge applications, including computer vision, autonomous driving, and large language models.

- preference. TensorFlow is favored for end-to-end production pipelines and real-time inference systems. PyTorch is preferred in fast-paced research and prototyping environments where evaluation tools like the Confusion Matrix in Machine Learning help quantify classification performance through metrics like accuracy, precision, recall, and F1-score.

- Tech giants like Google, Intel, and NVIDIA support TensorFlow, while Facebook, Microsoft, and Tesla back PyTorch. The competition between the two has driven innovation, benefiting the entire AI community.

- Both frameworks are evolving rapidly. TensorFlow is expanding its reach in mobile, edge, and browser-based AI with TensorFlow Lite and TensorFlow.js. Its focus is on seamless integration, optimization, and scalability.

- PyTorch continues to prioritize research and usability. Projects like PyTorch 2.0 and TorchInductor are pushing performance boundaries while ensemble methods like Bagging vs Boosting in Machine Learning enhance model robustness by reducing variance through parallel learners or correcting bias via sequential refinement. The rise of PyTorch Lightning and integrations with ONNX (Open Neural Network Exchange) further enhance its flexibility and deployment options.

To Explore Machine Learning in Depth, Check Out Our Comprehensive Machine Learning Online Training To Gain Insights From Our Experts!

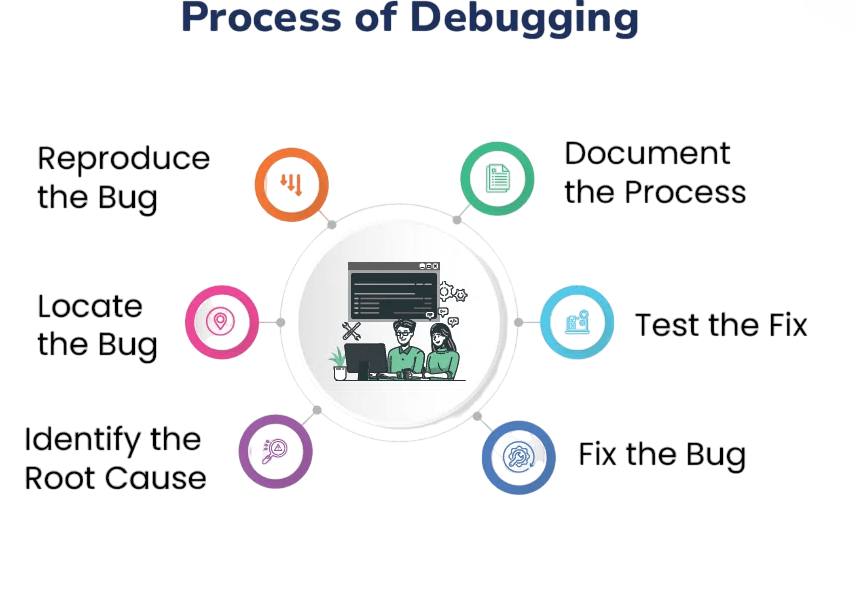

Debugging and Flexibility

When it comes to debugging, PyTorch has a clear advantage due to its dynamic nature. You can use Python debugging tools.

Such as pdb and print() statements directly within the model, making it easier to inspect and modify code during runtime. TensorFlow has improved significantly in this area with the release of version 2.x. The integration of eager execution simplifies debugging tools like TensorBoard and tf.debugging provide structured ways to monitor and debug models. However, the initial learning curve remains steeper compared to PyTorch.

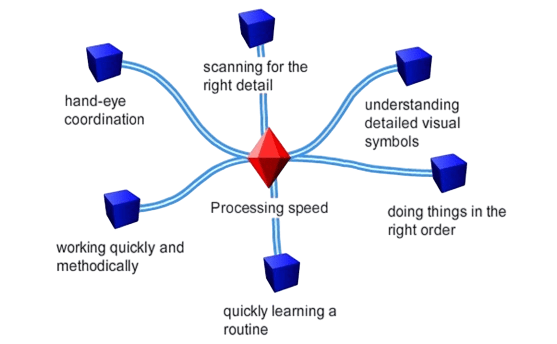

Performance and Speed

Performance benchmarks often show close competition between the two frameworks. TensorFlow generally has an edge in training speed and resource optimization due to its mature graph optimization tools. Machine Learning Training helps learners understand these performance trade-offs, enabling informed decisions when selecting frameworks for real-world AI projects.

It also offers XLA (Accelerated Linear Algebra) for additional performance gains. PyTorch, while slightly behind in some benchmark metrics, provides faster prototyping capabilities. The recent introduction of TorchScript and advancements in backend optimizations have significantly narrowed the performance gap between PyTorch and TensorFlow.

Looking to Master Machine Learning? Discover the Machine Learning Expert Masters Program Training Course Available at ACTE Now!

Community Support and Documentation

Use Cases and Applications

Preparing for Machine Learning Job Interviews? Have a Look at Our Blog on Machine Learning Interview Questions and Answers To Ace Your Interview!

Learning Curve

PyTorch is often recommended for beginners due to its simplicity and alignment with Python programming. It allows users to learn by doing, without abstracting away too many implementation details making it ideal for experimenting with Machine Learning Algorithms like linear regression, decision trees, and support vector machines, which form the backbone of predictive modeling and data-driven decision making. This approach fosters a deeper understanding of machine learning concepts. TensorFlow 2.x has simplified its API with Keras integration, making it easier for newcomers to get started. However, the breadth of the ecosystem and the transition from older versions can make it slightly more complex for absolute beginners.

Industry Adoption

- Both frameworks enjoy strong industry adoption, but the use cases often determine the

Future Trends in ML Frameworks

Conclusion

Choosing between TensorFlow and PyTorch ultimately depends on your specific needs and experience level. If you’re building scalable, production-grade systems, TensorFlow’s deployment ecosystem offers a robust solution. If you’re focused on research, experimentation, or learning, PyTorch provides an intuitive and flexible environment for fast prototyping. Hands-on experience with both ecosystems helps align the choice of framework with project goals and career paths. Both are excellent deep learning frameworks, and as they continue to evolve, choosing one is likely to become a matter of specific project requirements rather than overall superiority. Many developers learn to work with both, using each framework’s strengths where appropriate.