- What is Data Warehousing?

- Data Warehouse vs Database

- Components of a Data Warehouse

- ETL Process Overview

- Star Schema and Snowflake Schema

- OLAP vs OLTP

- Data Marts and Aggregation

- Data Warehouse Architecture

- Tools Used in Data Warehousing

- Data Quality and Governance

- Case Studies in Data Warehousing

- Conclusion

What is Data Warehousing?

Data warehousing refers to the process of collecting, integrating, and storing data from different sources in a centralized repository to support business decision-making, analytics, and reporting. Unlike operational databases designed for day-to-day transactions, a data warehouse is optimized for complex queries, aggregations, and historical analysis. It serves as the backbone for business intelligence (BI) systems, enabling organizations to derive actionable insights from vast datasets through effective Data Science course. Data warehousing systems are designed to provide a consolidated view of the organization’s data by integrating information from different departments and formats. This unified view enhances decision-making, boosts productivity, and streamlines operations by offering reliable and consistent data.

Data Warehouse vs Database

A traditional database (such as those used in ERP or CRM systems) is optimized for handling real-time transaction processing (OLTP). These databases are best for managing inserts, updates, and deletes efficiently. In contrast, a data warehouse is designed for Online Analytical Processing (OLAP), where the focus is on complex queries and data analysis.

- Key Differences:

- Functionality: Databases handle transactions (e.g., placing orders), while data warehouses support analysis (e.g., monthly sales reports), often designed and visualized using tools like Erwin Data Modeler.

- Data Type: Databases typically contain current data; warehouses store both current and historical data.

- Schema Design: Databases use normalized schemas; warehouses use star or snowflake schemas for faster querying.

- Performance: Databases optimize write operations; warehouses optimize read and analytical operations.

- Users: Databases are used by operational staff; warehouses are used by analysts and decision-makers.

Do You Want to Learn More About Data Science? Get Info From Our Data Science Course Training Today!

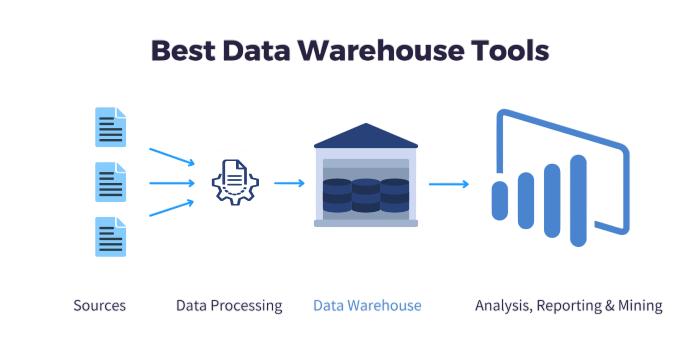

Components of a Data Warehouse

- Data Sources: Originate from internal systems (CRM, ERP) and external systems (web logs, market data).

- ETL Process: Responsible for extracting, transforming, and loading data into the warehouse.

- Staging Area: A temporary environment where data is cleaned and transformed before loading is often referred to as a staging area unrelated but equally important in data science is the concept of reinforcement learning, which raises the question: What is Q-Learning?

- Data Storage Layer: The centralized repository that stores structured, consistent, and historical data.

- Metadata: Provides context about data origin, transformation rules, and schema.

- Extract: Data is pulled from various source systems, including relational databases, APIs, and files.

- Transform: Data is cleaned (e.g., removing nulls), formatted (e.g., date standardization), deduplicated, and enriched.

- Load: The processed data is loaded into the data warehouse, often using incremental or full-load methods.

- Star Schema: The central fact table (e.g., Sales) is directly connected to dimension tables (e.g., Time, Product, Region). It is denormalized, which makes it easier and faster for analysts to query.

- Snowflake Schema: A normalized version of the star schema where dimension tables are split into additional related tables (e.g., Product → Category → Department). It reduces redundancy but can slow down query performance. Comparison:

- Star Schema: Simpler structure, faster queries, more storage.

- Snowflake Schema: Complex structure, slower queries, less storage.

- OLAP (Online Analytical Processing): Optimized for analytical queries that involve aggregations and multidimensional analysis.

- Example: Comparing sales across quarters.

- Characteristics: Read-heavy, supports historical data, involves complex joins.

- OLTP (Online Transaction Processing): Designed for real-time operations like data entry, updates, and deletions quite different from the batch processing and model training seen in TensorFlow Projects.

- Example: Banking transactions or inventory updates.

- Characteristics: Write-heavy, supports current data, uses simple queries.

- Data Marts: Focused subsets of a data warehouse. Departments like HR, Finance, or Marketing can have dedicated data marts, enabling faster access to relevant data without overloading the main warehouse.Can be Dependent (sourced from a central DW) or Independent (built separately), both of which are important concepts to understand when exploring Big Data Careers

- Aggregation: Pre-computed summaries of large data sets (e.g., daily sales totals) to improve query performance. Aggregated tables reduce computational overhead during peak reporting times.

- Accuracy: Data reflects real-world values.

- Completeness: No missing fields.

- Consistency: Uniform format and logic.

- Timeliness: Updated data available on time.

- Validity: Conforms to business rules.

Would You Like to Know More About Data Science? Sign Up For Our Data Science Course Training Now!

ETL Process Overview

ETL (Extract, Transform, Load) is the backbone of any data warehousing system:

ETL can be scheduled in batches (daily, weekly) or run in near real-time using stream-based tools concepts that, like ‘ Ridge Regression Explained,’ are essential for understanding modern data workflows. Quality ETL processes ensure that the data warehouse is reliable, accurate, and analytics-ready.

Star Schema and Snowflake Schema

These schemas define how data is structured within the warehouse:

OLAP vs OLTP

Data Marts and Aggregation

Data Warehouse Architecture

The architecture of a data warehouse can be divided into several levels, each of which is essential to understand in Data Science course. Due to its inefficient combination of data storage and analysis in a single layer, the single-tier design is rarely utilised. Client apps and the data warehouse are kept apart by the two-tier architecture, but as the system expands, scalability problems frequently arise. The most widely used method is the three-tier design, which has three layers: the OLAP engine and metadata repository are located in the middle tier, BI tools and front-end applications are located in the top tier, and data sources and storage are located in the bottom tier. Higher performance, simpler maintenance, and improved scalability are guaranteed by this three-tier design.

Gain Your Master’s Certification in Data Science Training by Enrolling in Our Big Data Analytics Master Program Training Course Now!

Tools Used in Data Warehousing

The management and analysis of company data is aided by a number of crucial data warehousing tools. ETL tools for effective data extraction, transformation, and loading include Microsoft SQL Server Integration Services (SSIS), Apache NiFi, Talend Open Studio, and Informatica PowerCenter. Google BigQuery, Microsoft Azure Synapse Analytics, Amazon Redshift, and Snowflake are well-known data warehousing platforms for processing and storing data. Commonly used BI technologies include Tableau, Power BI, Qlik Sense, and SAP BusinessObjects for data visualisation and analysis. When used in tandem, these solutions simplify the integration, archiving, and display of vast amounts of data from many organisations a process closely related to topics like ‘ What is SAS Analytics‘ in the realm of data management and analysis.

Commonly used BI technologies include Tableau, Power BI, Qlik Sense, and SAP BusinessObjects for data visualisation and analysis. When used in tandem, these solutions simplify the integration, archiving, and display of vast amounts of data from many organisations.

Data Quality and Governance

A critical aspect of successful data warehousing is maintaining high data quality, which ensures the information is accurate, consistent, and reliable. Effective ETL processes and governance frameworks help preserve data quality throughout the data lifecycle. Ultimately, strong data quality leads to trustworthy insights and better strategic outcomes for organizations.

Data Quality ensures the warehouse delivers reliable and consistent data. Key dimensions include accuracy, completeness, and timeliness all critical when working with Big Data Examples across industries.

Preparing for Data Science Job? Have a Look at Our Blog on Data Science Interview Questions & Answer To Acte Your Interview!

Case Studies in Data Warehousing

- Retail (Walmart):

Leverages data warehousing for inventory control, dynamic pricing, and trend forecasting.

Healthcare (UnitedHealth Group):Manages large-scale patient records, enables personalized care plans, and supports regulatory reporting.

Finance (American Express):Uses data warehouses for fraud detection, customer segmentation, and credit risk modeling.

E-commerce (Amazon):Applies warehousing for recommendation engines, delivery optimization, and revenue forecasting.

Conclusion

Data warehousing continues to evolve as an essential component of data-driven enterprises and is a key topic in Data Science course. With advancements in cloud technologies, real-time processing, and AI integration, modern data warehouses empower businesses to be more agile, responsive, and competitive. Investing in robust data warehousing solutions ensures that organizations can unlock the full value of their data assets and achieve strategic excellence.