- Introduction to Large Language Models

- Key Features of LangChain

- Building LLM Applications with LangChain

- Memory and Context Handling in LangChain

- LangChain for Chatbot Development

- Using LangChain with OpenAI API

- Data Retrieval and Processing with LangChain

- Agent-Based Systems in LangChain

- LangChain with Vector Databases

- Debugging and Optimizing LangChain Pipelines

- Deploying LangChain Apps

- Future of LangChain in AI Development

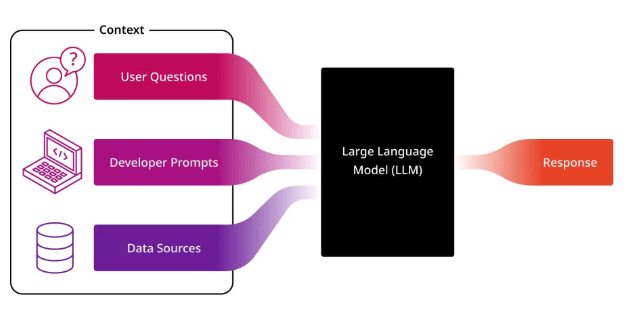

Introduction to Large Language Models

LangChain is an open-source framework designed to build applications powered by large language models (LLMs). It simplifies the development of AI-powered applications by providing a flexible and modular architecture. LangChain integrates LLM APIs, memory, data sources, and external tools, enabling developers to create complex workflows and pipelines. The framework is particularly effective for building conversational agents, knowledge retrieval systems, and autonomous AI applications. LangChain provides pre-built components that streamline tasks like prompt management, memory handling, and API integration. Its flexibility makes it popular for developing chatbots, search engines, and AI-powered assistants.

Key Features of LangChain

LangChain offers several key features that simplify LLM application development:

- Modularity: LangChain provides modular components for various tasks, including prompt engineering, memory, and tool integration. This makes it easy to customize and extend functionality.

- Memory Management: It offers built-in support for stateful memory, allowing applications to maintain context over multiple interactions.

- Data Integration: LangChain enables seamless integration with databases, APIs, and external knowledge sources, making it ideal for building retrieval-augmented generation (RAG) systems.

- Agent and Chain Support: LangChain supports autonomous agents that dynamically select actions, making it useful for multi-step tasks.

- Flexible LLM Integration: It supports multiple LLM providers such as OpenAI, Anthropic, and Cohere, allowing developers to switch between models easily.

Building LLM Applications with LangChain

LangChain simplifies building LLM applications by providing reusable components called chains. A chain is a series of steps that execute a task, such as querying an LLM, processing data, or invoking external APIs. LangChain’s modular design makes it easy to add components like prompt templates, document loaders, and custom tools, extending what an LLM application can do. To create a basic LLM-powered app:

- Initialize the LLM: Connect to an LLM provider like OpenAI or Anthropic.

- Define the Chain: Set up the workflow, specifying the sequence of operations (e.g., text generation, summarization).

- Add Memory: Incorporate memory management to maintain conversation history.

- Deploy and Test: Deploy the application and test its functionality.
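The chain idea in the steps above can be sketched in plain Python. This is a minimal illustration, not LangChain's actual API: `Chain`, `build_prompt`, `fake_llm`, and `clean_output` are hypothetical names, and `fake_llm` stands in for a real provider call so the example runs without network access.

```python
from typing import Callable, List

# A step takes a string and returns a string.
Step = Callable[[str], str]


def build_prompt(topic: str) -> str:
    """Fill a prompt template with the user's input."""
    return f"Summarize the following topic in one sentence: {topic}"


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call (assumption: no network)."""
    return f"[model output for: {prompt}]"


def clean_output(text: str) -> str:
    """Post-process the model output."""
    return text.strip()


class Chain:
    """Runs a fixed sequence of steps, feeding each result into the next."""

    def __init__(self, steps: List[Step]) -> None:
        self.steps = steps

    def run(self, value: str) -> str:
        for step in self.steps:
            value = step(value)
        return value


chain = Chain([build_prompt, fake_llm, clean_output])
result = chain.run("vector databases")
print(result)
```

Because every step shares the same string-in, string-out shape, a step can be swapped or inserted without touching the others, which is the property the modular design is meant to provide.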

Memory and Context Handling in LangChain

One of LangChain’s standout features is its memory management, which allows applications to maintain conversational context across interactions. This is crucial for chatbots, virtual assistants, and multi-turn conversations. LangChain offers several memory types:

- ConversationBufferMemory: Stores recent interactions in memory, maintaining context.

- ConversationSummaryMemory: Summarizes previous interactions to keep memory concise.

- ConversationEntityMemory: Tracks specific entities mentioned during the conversation, enabling contextual responses.

For example, in a customer support chatbot, memory handling allows the bot to remember user preferences or previous queries, creating a more personalized experience.
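To make the difference between buffer-style and entity-style memory concrete, here is a small sketch in plain Python. It is an assumption-laden illustration rather than LangChain's implementation: `BufferMemory` and `EntityStore` are hypothetical classes, and the buffer here is bounded to the last few turns to keep the prompt short.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class BufferMemory:
    """Keeps the last `max_turns` (user, assistant) exchanges verbatim."""

    def __init__(self, max_turns: int = 3) -> None:
        # A deque with maxlen silently drops the oldest turn when full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def as_prompt_context(self) -> str:
        """Render the stored turns as text to prepend to the next prompt."""
        lines: List[str] = []
        for user, assistant in self.turns:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        return "\n".join(lines)


class EntityStore:
    """Remembers facts keyed by entity name (hypothetical helper)."""

    def __init__(self) -> None:
        self.facts: Dict[str, List[str]] = {}

    def remember(self, entity: str, fact: str) -> None:
        self.facts.setdefault(entity, []).append(fact)

    def recall(self, entity: str) -> List[str]:
        return list(self.facts.get(entity, []))


memory = BufferMemory(max_turns=2)
memory.save("Hi, I'm Ana.", "Hello Ana!")
memory.save("I prefer email.", "Noted.")
memory.save("What's my order status?", "It shipped.")
context = memory.as_prompt_context()

entities = EntityStore()
entities.remember("Ana", "prefers email")
print(context)
print(entities.recall("Ana"))
```

The buffer forgets the first exchange once the limit is reached, while the entity store still holds the preference. That is why the two approaches are often combined in support-style chatbots.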

LangChain for Chatbot Development

LangChain is widely used for chatbot development due to its state management, LLM integration, and agent capabilities. It allows developers to build rule-based and AI-driven chatbots that handle complex queries. LangChain chatbots are used in customer service, e-commerce, and personal assistants, offering accurate and context-aware responses. To build a chatbot:

- Set Up LLM and Memory: Connect LangChain to an LLM and define memory to retain conversation history.

- Add Tools and APIs: Integrate with external APIs (e.g., weather, finance) to enable dynamic responses.

- Create Chains: Define multi-step processes for contextual and dynamic conversations.

- Deploy and Scale: Deploy the chatbot on cloud platforms, ensuring scalability and reliability.

Using LangChain with OpenAI API

LangChain integrates with OpenAI’s LLMs, which makes it easy to build applications powered by GPT-3.5, GPT-4, and other OpenAI models. To get started, install LangChain and the OpenAI SDK with pip, the Python package installer. Open your terminal or command prompt and run the following command: pip install langchain openai. This will download and install both packages and their dependencies. LangChain is a framework for building applications powered by language models, while the OpenAI SDK allows you to interact with the OpenAI API, including models like GPT. Make sure you have Python installed before running the command, and it’s a good idea to use a virtual environment to manage dependencies for your project.
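After running the install command, one quick way to confirm that both packages are visible to the active interpreter (and therefore to the active virtual environment) is a short check like the one below. It uses only the standard library; `check_installed` is a hypothetical helper name.

```python
import importlib.util
from typing import Dict, Iterable


def check_installed(packages: Iterable[str]) -> Dict[str, bool]:
    """Report which top-level packages can be found in this environment."""
    return {
        name: importlib.util.find_spec(name) is not None
        for name in packages
    }


status = check_installed(["langchain", "openai"])
for name, found in status.items():
    print(f"{name}: {'installed' if found else 'missing'}")
```

If a package shows as missing even though pip reported success, the usual cause is that pip installed into a different interpreter than the one running the script.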

Data Retrieval and Processing with LangChain

LangChain enables efficient data retrieval and processing by integrating external databases, APIs, and vector stores. It is commonly used in RAG (Retrieval-Augmented Generation) pipelines, where external data sources enhance the LLM’s knowledge base. LangChain supports vector database integrations like FAISS, Pinecone, and Weaviate. This allows applications to store, retrieve, and query embeddings for contextual search. For example, a document search application can:

- Ingest Documents: Convert them into embeddings.

- Store in Vector Database: Use FAISS or Pinecone for fast retrieval.

- Retrieve Relevant Data: Query the vector database and pass the results to the LLM.
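The ingest, store, and retrieve steps above can be sketched with a toy embedding. This is an illustration under stated assumptions: word counts stand in for a learned embedding model, a Python list stands in for the vector database, and `embed`, `cosine`, and `retrieve` are hypothetical names rather than LangChain functions.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, int]


def embed(text: str) -> Vector:
    """Toy embedding: lowercase word counts (assumption, not a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b.get(word, 0) for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


documents = [
    "FAISS is a library for fast similarity search over vectors.",
    "Streamlit builds simple web front ends in Python.",
    "Pinecone is a managed vector database service.",
]

# Ingest and store: keep each document next to its embedding.
store: List[Tuple[str, Vector]] = [(doc, embed(doc)) for doc in documents]


def retrieve(query: str, k: int = 1) -> List[str]:
    """Return the k stored documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        store, key=lambda item: cosine(query_vec, item[1]), reverse=True
    )
    return [doc for doc, _ in ranked[:k]]


top = retrieve("Which vector database is managed?")
print(top)
```

In a real pipeline the retrieved text would then be placed into the prompt sent to the LLM; only the embedding function and the storage backend change.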

Agent-Based Systems in LangChain

LangChain supports agent-based systems that can autonomously select actions, use tools, and make decisions. Agents enhance the capabilities of LLM applications by dynamically interacting with external services. For example, an e-commerce assistant can use an agent to query product availability, fetch customer data from a CRM, and provide tailored product recommendations. LangChain provides pre-built agents with different capabilities:

- Tool-Using Agents: Interact with APIs or databases.

- Action Agents: Execute complex workflows.

- Decision-Making Agents: Make context-aware decisions.
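The core of an agent is a loop that picks a tool, calls it, and uses the observation. The sketch below shows one such step for the e-commerce example. Everything in it is hypothetical: the tools return hard-coded data, and `choose_tool` uses keyword rules where a real agent would ask the LLM to decide.

```python
from typing import Callable, Dict


def check_stock(product: str) -> str:
    """Hypothetical tool; a real one would query an inventory service."""
    inventory = {"laptop": 4, "phone": 0}
    return f"{product}: {inventory.get(product, 0)} in stock"


def fetch_customer(name: str) -> str:
    """Hypothetical tool; a real one would query a CRM."""
    customers = {"ana": "gold tier, prefers email"}
    return customers.get(name, "unknown customer")


# Registry mapping tool names to callables.
TOOLS: Dict[str, Callable[[str], str]] = {
    "stock": check_stock,
    "customer": fetch_customer,
}


def choose_tool(request: str) -> str:
    """Stand-in for the LLM's decision: pick a tool name from keywords."""
    if "stock" in request or "available" in request:
        return "stock"
    return "customer"


def run_agent(request: str, argument: str) -> str:
    """One agent step: select a tool, call it, report the observation."""
    tool_name = choose_tool(request.lower())
    observation = TOOLS[tool_name](argument)
    return f"[{tool_name}] {observation}"


answer = run_agent("Is this product available?", "laptop")
print(answer)
```

Keeping tools in a registry means new capabilities can be added without changing the loop itself; only the decision step needs to learn about them.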

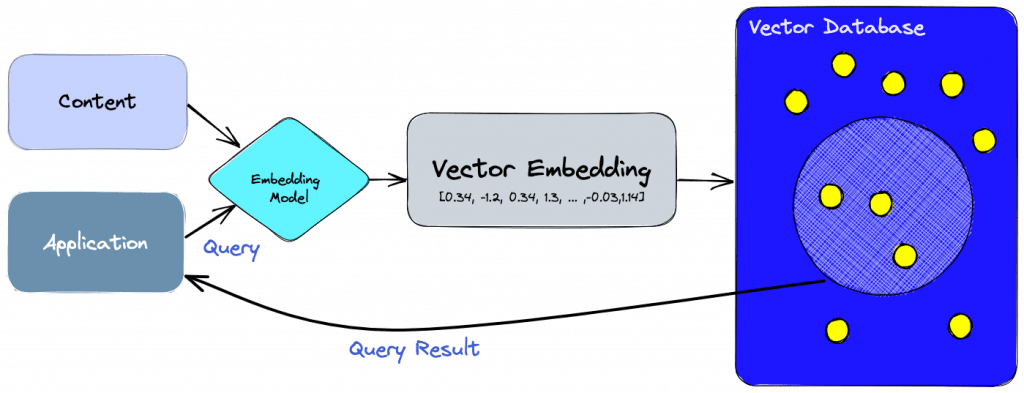

LangChain with Vector Databases

LangChain integrates with vector databases to enable semantic search and knowledge retrieval. This combination powers contextual question-answering and RAG applications. This architecture is widely used in knowledge management systems, search engines, and AI development. To use LangChain with FAISS:

- Generate Embeddings: Convert documents into embeddings using the OpenAI API or Hugging Face models.

- Store in FAISS: Store the embeddings in FAISS for efficient retrieval.

- Query the Database: Retrieve the most relevant embeddings for the query.

- Pass to LLM: Use the retrieved context to enhance the LLM’s response.
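To show what the store, query, and pass-to-LLM steps amount to, here is a plain-Python sketch of an exact nearest-neighbour index followed by prompt assembly. It does not use FAISS or LangChain: `FlatIndex` and `build_prompt` are hypothetical, and the two-dimensional vectors are hand-written stand-ins for real embeddings.

```python
import math
from typing import List, Sequence, Tuple


class FlatIndex:
    """Exact nearest-neighbour search by Euclidean distance (brute force)."""

    def __init__(self) -> None:
        self.vectors: List[Sequence[float]] = []
        self.texts: List[str] = []

    def add(self, vector: Sequence[float], text: str) -> None:
        self.vectors.append(vector)
        self.texts.append(text)

    def search(self, query: Sequence[float], k: int = 2) -> List[Tuple[float, str]]:
        """Return the k closest (distance, text) pairs, nearest first."""
        scored = [
            (math.dist(query, vec), text)
            for vec, text in zip(self.vectors, self.texts)
        ]
        return sorted(scored)[:k]


def build_prompt(question: str, hits: List[Tuple[float, str]]) -> str:
    """Place the retrieved passages ahead of the question."""
    context = "\n".join(f"- {text}" for _, text in hits)
    return f"Context:\n{context}\n\nQuestion: {question}"


index = FlatIndex()
# Hand-written vectors (assumption): real ones come from an embedding model.
index.add([0.9, 0.1], "Refunds are processed within 5 days.")
index.add([0.1, 0.9], "The office opens at 9 am.")
index.add([0.8, 0.3], "Refund requests need an order number.")

hits = index.search([1.0, 0.0], k=2)
prompt = build_prompt("How do refunds work?", hits)
print(prompt)
```

A brute-force scan like this compares the query against every stored vector, which is fine for small collections. Dedicated vector databases exist largely to make the same lookup fast at much larger scale.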

Debugging and Optimizing LangChain Pipelines

Debugging and optimizing LangChain applications is essential for performance and reliability. LangChain’s modular architecture makes it easy to replace or upgrade components, improving performance and scalability. Tips for optimizing LangChain pipelines:

- Use Tracing: Enable LangSmith tracing to debug and monitor pipeline execution.

- Reduce Latency: Cache intermediate results and use faster LLMs for non-critical tasks.

- Memory Management: Optimize memory usage by using conversation summary memory to reduce context size.

- Parallel Processing: Use asynchronous execution to speed up batch processing.
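Two of the tips above, caching and asynchronous batch execution, can be illustrated with the standard library alone. This is a sketch under assumptions: `cached_llm` and `async_llm` are hypothetical stand-ins for model calls, and the short sleep imitates network latency.

```python
import asyncio
from functools import lru_cache
from typing import List


@lru_cache(maxsize=128)
def cached_llm(prompt: str) -> str:
    """Hypothetical LLM call; identical prompts are served from the cache."""
    return prompt.upper()


async def async_llm(prompt: str) -> str:
    """Hypothetical async LLM call; the sleep stands in for latency."""
    await asyncio.sleep(0.01)
    return prompt.upper()


async def run_batch(prompts: List[str]) -> List[str]:
    # gather runs the calls concurrently and keeps results in input order.
    return await asyncio.gather(*(async_llm(p) for p in prompts))


cached_llm("hello")
cached_llm("hello")  # second call is a cache hit
info = cached_llm.cache_info()

batch = asyncio.run(run_batch(["a", "b", "c"]))
print(info.hits, info.misses, batch)
```

Caching only helps when prompts repeat exactly, so it suits intermediate steps with stable inputs. Concurrent execution helps when the calls are independent of each other, as in batch processing.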

Deploying LangChain Apps

Deploying LangChain applications involves packaging your code and making it accessible to users through a web interface, an API, or another platform. First, you develop your LangChain app using Python, integrating components like prompt templates, chains, tools, and memory. Once your application is working locally, you can deploy it using platforms like Streamlit, FastAPI, or Flask for the frontend or API interface. To host the app, you can use cloud services like Heroku, Render, AWS, or Vercel. It’s important to securely manage your API keys (like OpenAI API keys) using environment variables. For larger-scale or production-grade deployment, you may also use Docker containers and orchestration tools like Kubernetes.
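For the point about managing API keys through environment variables, a minimal sketch might look like the following. `load_api_key` and `mask` are hypothetical helpers, the key shown is fake, and `OPENAI_API_KEY` is the variable name the OpenAI SDK conventionally reads.

```python
import os
from typing import Mapping, Optional


def load_api_key(
    env: Optional[Mapping[str, str]] = None,
    name: str = "OPENAI_API_KEY",
) -> str:
    """Read the key from the environment and fail fast if it is missing."""
    source = os.environ if env is None else env
    key = source.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it before starting the app")
    return key


def mask(key: str) -> str:
    """Show only the last four characters so logs never hold the full key."""
    return "*" * max(len(key) - 4, 0) + key[-4:]


# Fake key for illustration only; in deployment the value comes from the
# hosting platform's secret or environment settings, never from source code.
demo_env = {"OPENAI_API_KEY": "sk-demo-1234"}
print(mask(load_api_key(demo_env)))
```

Failing at startup when the key is missing tends to be easier to diagnose than an authentication error on the first user request.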

Future of LangChain in AI Development

LangChain is evolving rapidly, adding new features to enhance LLM-powered applications. The future of LangChain includes:

- Multimodal Support: Combining text, image, and audio models.

- Improved Agents: Autonomous agents with better reasoning and action capabilities.

- Enhanced Debugging Tools: Improved observability and tracing features.

- Low-Code Integrations: Easier integration with cloud services and APIs.