- Introduction to Data Wrangling

- Why is Data Wrangling Important?

- Steps in Data Wrangling

- Techniques for Data Cleaning

- Data Transformation Methods

- Handling Missing and Duplicate Data

- Data Wrangling Tools and Technologies

- Challenges in Data Wrangling

- Conclusion

Introduction to Data Wrangling

Data wrangling, also known as data munging, refers to the comprehensive process of cleaning, transforming, and organizing raw, unstructured, or semi-structured data into a structured and usable format that is suitable for analysis. It is one of the most critical and foundational tasks in the field of data science, as raw data collected from various sources—such as databases, web APIs, spreadsheets, or sensors is often incomplete, inconsistent, erroneous, and not immediately ready for analytical tasks. In its essence, data wrangling, often emphasized in Data Science Training, involves a series of steps aimed at ensuring data quality and reliability. These steps may include removing or correcting data inconsistencies, handling missing or null values, standardizing formats, converting data types, filtering out irrelevant information, and restructuring data into a more accessible or meaningful form. The ultimate goal is to prepare the data so that it can be easily explored, visualized, modeled, or used for decision-making purposes. Ultimately, data wrangling is a crucial step that bridges the gap between raw data and actionable intelligence. It empowers organizations to make smarter choices, enables predictive analytics, and supports the development of innovative, data-driven solutions across a wide range of industries, from healthcare and finance to marketing and beyond.

Eager to Acquire Your Data Science Certification? View The Data Science Course Offered By ACTE Right Now!

Why is Data Wrangling Important?

- Improves Data Quality: Raw data collected from various sources is often incomplete, inconsistent, or inaccurate. Data wrangling ensures the data is cleaned, consistent, and error-free, making it reliable for analysis.

- Prepares Data for Analysis: Data must be in the correct format for analysis or machine learning models. Data wrangling allows the transformation of data into formats that can be used by algorithms or visualization tools.

- Time-Saving: A well-wrangled dataset minimizes the time spent on future analysis and model development. It ensures that analysts and Machine Learning Techniques and models can focus on insights rather than troubleshooting data issues.

- Enhances Decision-Making: Data wrangling enhances the decision-making process by ensuring that the data is accurate, complete, and well-structured. High-quality data leads to better outcomes and more reliable predictions.

- Facilitates Machine Learning Models: Many machine learning models require data in a structured and clean format to function optimally. Proper data wrangling ensures that models are trained using data free from errors and inconsistencies, leading to better performance.

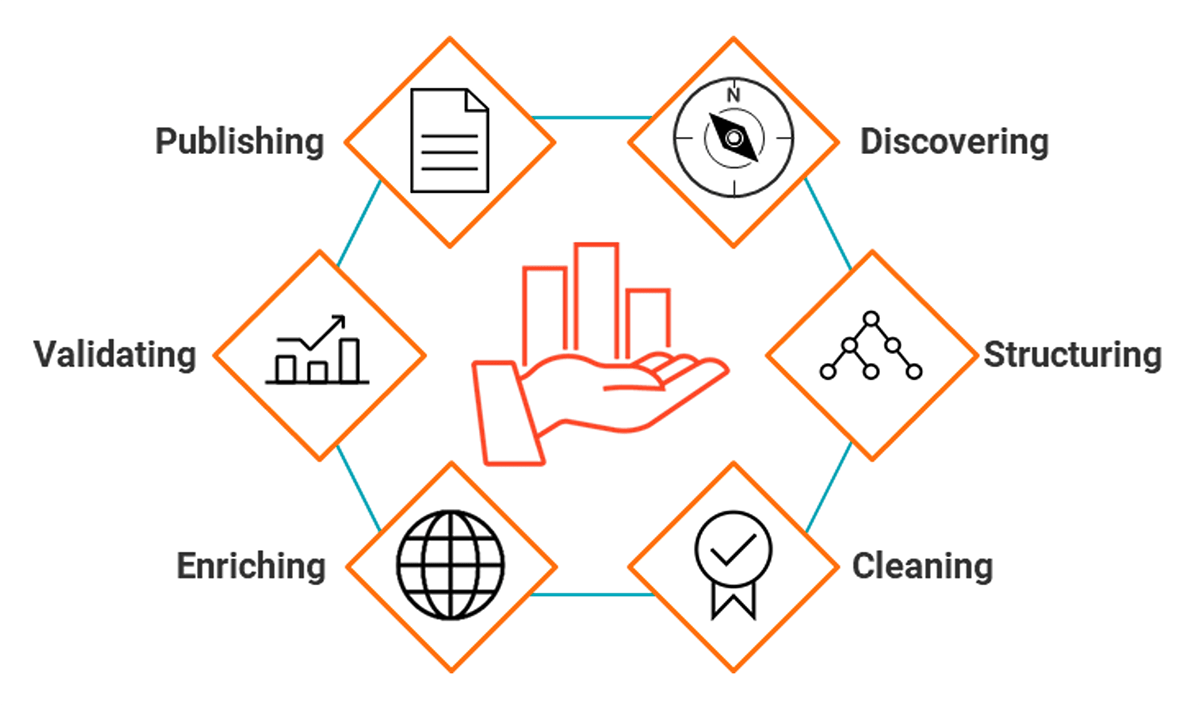

Steps in Data Wrangling

- Data Collection is the initial step in which data is gathered from various sources, such as databases, APIs, spreadsheets, or even text files. Data collection may involve extracting data from structured or unstructured sources.

- Data Exploration: After collecting the data, it is essential to explore the dataset to understand its structure, types, and potential issues. This step involves examining the data for missing values, duplicates, inconsistencies, and outliers.

- Data Cleaning: Cleaning the data is the process of removing or correcting inaccurate, incomplete, or irrelevant data. This may involve eliminating duplicates, correcting errors, or handling missing values.

- Data Transformation: In this step, data is transformed into a more useful format for analysis, supporting broader Digital Transformation efforts. This may involve changing data types, normalizing, aggregating, or performing calculations. It could also include converting text data into numerical data or encoding categorical variables for machine learning.

- Data Reduction: Data reduction involves simplifying or consolidating the dataset to make it more manageable for analysis. This can include eliminating irrelevant features or reducing the size of large datasets while retaining important information.

- Data Integration: If data comes from multiple sources, integrating it into a single dataset is critical. This includes merging or joining datasets based on standard features and resolving any discrepancies between them.

- Data Validation: After all the previous steps, it’s essential to validate the data to ensure it is clean, accurate, and ready for analysis. This can include checking for anomalies or verifying that the data meets certain predefined conditions.

Techniques for Data Cleaning

Data cleaning is a crucial component of the data wrangling process, involving various techniques to address common issues found in raw datasets and prepare the data for accurate analysis. One of the primary tasks is removing duplicates, as repeated entries can distort results and lead to incorrect conclusions. Ensuring each record is unique by identifying and eliminating duplicate rows is essential for data integrity. Another common issue is handling missing data, which may arise due to errors in data collection or entry. Several strategies are employed to deal with missing values: rows or columns with minimal missing data may be discarded, while imputation techniques such as replacing missing values with the mean, median, or mode—can be used to retain more of the dataset. Alternatively, forward or backward filling, which uses nearby data points to estimate missing values, may also be appropriate especially in the context of a Data Science Career Path where handling missing data is a fundamental skill. Standardizing data is equally important, as inconsistencies in units, formats, or naming conventions can introduce confusion and errors. This includes converting dates into a consistent format or aligning all measurement units, such as converting temperatures to Celsius. Additionally, outliers data points that significantly differ from the rest must be identified and addressed to prevent them from skewing analysis. Outliers can be removed, transformed, or managed using more advanced statistical techniques. Lastly, handling categorical data often requires standardizing category labels and converting them into formats suitable for analysis, such as using one-hot encoding for machine learning applications. Together, these data cleaning practices ensure the dataset is accurate, consistent, and ready for meaningful analysis.

Excited to Obtaining Your Data Science Certificate? View The Data Science Training Offered By ACTE Right Now!

Data Transformation Methods

Data transformation is converting data from one format or structure into another to make it suitable for analysis. Some standard methods include:

Normalization and Scaling: Data normalization involves adjusting values in a dataset to fit within a specific range, typically between 0 and 1. This is important when the dataset contains features with varying scales, ensuring that no single feature dominates the analysis or model.

Encoding Categorical Variables: Categorical data must often be converted into a numerical format for machine learning. Standard encoding techniques include:

- One-Hot Encoding: Converts categorical values into binary columns (e.g., turning “Red,” “Blue,” and “Green” into separate columns).

- Label Encoding: Assigns each category with a unique integer value.

Log Transformations: In cases where data is skewed, applying a logarithmic transformation—a technique rooted in Mathematics for data science can help normalize the data, making it more suitable for modeling.

Feature Engineering involves creating new features from existing data to improve the predictive power of models. For example, it consists of extracting the year, month, and day from a timestamp or creating a “Price-to-Income” ratio from financial data.

Interested in Pursuing Data Science Master’s Program? Enroll For Data Science Master Course Today!

Handling Missing and Duplicate Data

Handling missing and duplicate data is one of the most crucial steps in data wrangling:

Handling Missing Data:

- Removing Rows/Columns: If the missing data is sparse, you may remove rows or columns with missing values.

- Imputation: Replace missing values with statistical measures (e.g., mean, median, mode) or use more complex methods like regression or interpolation.

- Predictive Modeling: Machine learning models predict and fill in missing data based on other features.

Handling Duplicates:

- Identifying Duplicates: Duplicates can be determined based on unique identifiers or all columns being the same.

- Removing Duplicates: Once identified, duplicates can be removed from the dataset to avoid biasing the analysis.

Data Wrangling Tools and Technologies

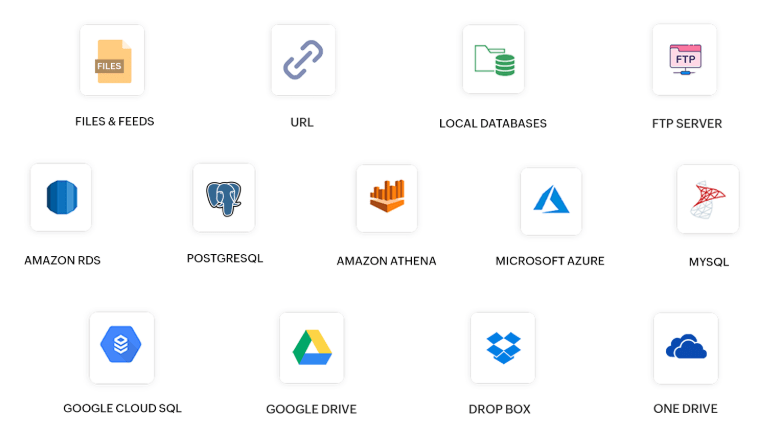

There are numerous tools and technologies available for data wrangling, ranging from lightweight scripting solutions to comprehensive software platforms designed for large-scale data processing. Among the most widely used are Python libraries such as Pandas, which offers powerful and flexible data structures ideal for manipulating and analyzing structured data, and NumPy, which complements Pandas by efficiently handling numerical operations and array-based computations. Another notable tool is OpenRefine, an open-source application specifically designed for cleaning messy data and transforming it into a more structured format, making it a valuable asset in Data science Training. In the R programming environment, dplyr stands out as a popular package for performing data wrangling tasks such as filtering, transforming, and summarizing datasets. Beyond programming libraries, ETL (Extract, Transform, Load) tools like Apache NiFi, Talend, and Microsoft SSIS are widely used in enterprise settings to automate and scale the data wrangling process, especially within complex data pipelines. Additionally, database management systems such as MySQL and PostgreSQL offer built-in capabilities for data wrangling through SQL queries, enabling users to manipulate, join, and filter large datasets directly within the database environment. Together, these tools provide a robust ecosystem for transforming raw data into a clean and analysis-ready form.

Preparing for a Data Science Job Interview? Check Out Our Blog on Data Science Interview Questions & Answer

Challenges in Data Wrangling

While data wrangling is essential, it is also one of the most challenging tasks in data analysis. Some common challenges include:

- Large and Complex Datasets: Managing big data or data with many features can be difficult and time-consuming.

- Missing or Inconsistent Data: Dealing with missing, incomplete, or inconsistent data from multiple sources can complicate the wrangling process.

- Data Transformation Complexity: Some data transformations, such as those implemented using Informatica Transformation, require complex logic, making them difficult to implement or test.

- Data Privacy and Security Concerns: Wrangling sensitive data may involve security concerns, especially in industries like healthcare and finance.

Conclusion

Data wrangling is an indispensable skill for anyone working with data. As the volume of data increases and becomes more complex, the importance of efficient and accurate wrangling will only grow. The advent of advanced tools, automation, and AI-powered data wrangling techniques promises to simplify the process, making it more efficient and accessible. However, the core principles of Data Science Training such as data wrangling, cleaning, transforming, and preparing data remain central to the success of any data-driven project. In the future, we can expect more intelligent, automated wrangling tools powered by machine learning, which will make it easier to handle even the most complex datasets with minimal manual intervention.