- Introduction to Data Extraction Tools

- Why Data Extraction is Crucial for Business Intelligence

- Top Data Extraction Tools for Efficient Collection

- Key Features to Look for in Data Extraction Tools

- Choosing the Right Tool for Your Needs

- How to Integrate Data Extraction Tools into Your Workflow

- Best Practices for Using Data Extraction Tools

- Conclusion

Introduction to Data Extraction Tools

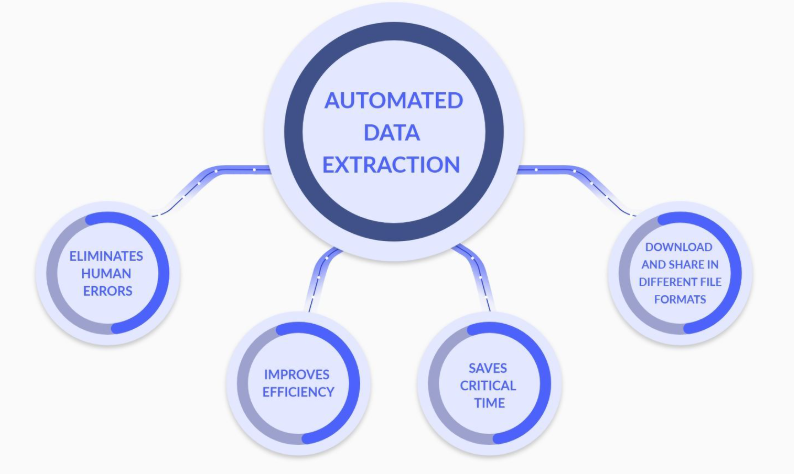

Data extraction is the process of retrieving information from sources such as websites, documents, databases, and APIs. Manual extraction was once the norm, but it is labor-intensive, error-prone, and slow. To address these problems, businesses and organizations have increasingly turned to specialized data extraction tools that automate the process. These tools collect structured, semi-structured, and unstructured data efficiently, often with minimal human input, which supports faster decision-making and smoother operations. They are especially valuable in data-heavy fields such as market research, competitive analysis, lead generation, and content aggregation. By streamlining data collection, they help organizations save time, reduce costs, and improve the quality of their insights.

Why Data Extraction is Crucial for Business Intelligence

Data extraction tools are key to a successful business intelligence (BI) strategy. By automating the gathering and cleaning of data, they free teams to focus on analysis and on deriving actionable insights. Here’s why data extraction is essential for BI:

- Speed: Data extraction tools can collect large datasets faster than manual methods.

- Accuracy: Automation reduces human error, making the collected data more reliable.

- Cost-Efficiency: Automating the extraction process reduces labor costs and boosts operational efficiency.

- Scalability: These tools can handle large volumes of data across multiple sources, making them well suited to large businesses or those experiencing rapid growth.

- Customization: Many data extraction tools offer customization options to tailor extraction processes according to specific requirements, improving the relevance and quality of the data.

- Data Consistency: Automated extraction ensures that data is collected uniformly, leading to more consistent and comparable datasets across various sources.

- Real-Time Data Collection: Some data extraction tools offer real-time scraping and continuous updates, providing businesses with the most current information for timely decision-making.

Popular data extraction tools include the following:

- Octoparse: A popular, user-friendly data extraction tool known for its no-code interface, Octoparse lets users extract data from both static and dynamic websites by selecting page elements. It supports pagination, infinite scroll, cloud-based extraction, and built-in data cleaning tools. Use Case: Extracting product data for competitive analysis from e-commerce websites.

- WebHarvy: A point-and-click scraper designed for non-technical users, WebHarvy automatically detects patterns in web pages and can extract text, images, and videos. It features CAPTCHA bypassing, proxy management, and a built-in scheduler. Use Case: Collecting data from online directories and review sites.

- Diffbot: Using machine learning and NLP, Diffbot can analyze web content like a human, turning it into structured data. It excels at extracting information from complex layouts and offers API integration for automation. Use Case: Automating market research and news aggregation tasks.

- DataMiner: A browser extension for Chrome and Edge, DataMiner simplifies web scraping with a point-and-click interface and custom rule creation. It exports to CSV, Excel, and Google Sheets. Use Case: Gathering lead information from business directories.

- ParseHub: A no-code web scraping tool that uses machine learning to handle complex, JavaScript-heavy websites. ParseHub supports CAPTCHA handling, cloud-based extraction, and scheduling. Use Case: Scraping e-commerce data to track product pricing.

- Content Grabber: A powerful tool for professionals, Content Grabber provides a visual editor for building custom extraction agents. It supports proxy rotation, CAPTCHA bypassing, and large-scale projects. Use Case: Collecting competitor pricing data across various e-commerce platforms.

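- Scrapy: An open-source Python framework for advanced users, Scrapy supports flexible, large-scale web scraping. It can request the endpoints behind AJAX-driven pages directly and exports to formats such as JSON, CSV, and XML; pages that render their content with JavaScript typically require pairing it with a headless browser or rendering service. Use Case: Building custom bots for complex web scraping projects.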

When integrating a data extraction tool into your workflow, keep these points in mind:

- Integration Options: Many tools provide APIs or built-in integration capabilities to connect with other platforms.

- Tool Selection: Choose the right data extraction tool based on your specific needs, such as the type of data, complexity, and scale.

- Automation: By linking data extraction tools to your existing systems, you can automate data collection processes, saving time and effort.

- Storage Solutions: Ensure the tool integrates with cloud storage solutions or databases for seamless data storage and retrieval.

- Analysis: Integration with platforms like Excel or Google Sheets makes data analysis and reporting easier once extraction is complete.

- Real-Time Data Access: Automating the extraction and storage process enables real-time access to the most up-to-date data without manual intervention.

- Streamlined Workflow: Linking your data extraction tool with existing systems ensures a smooth and efficient workflow, enhancing overall productivity.

- Scalability: Integration with cloud-based platforms allows your data extraction process to scale as your data needs grow, accommodating larger datasets.

- Error Handling: Integration with monitoring and alerting systems helps identify and resolve extraction issues or data discrepancies in real time, protecting data accuracy.

Top Data Extraction Tools for Efficient Collection

This article covers seven popular data extraction tools that can help streamline your data collection process: Octoparse, WebHarvy, Diffbot, DataMiner, ParseHub, Content Grabber, and Scrapy.
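
To make concrete what these tools automate, here is a minimal sketch of web data extraction that uses only Python's standard library rather than any of the tools named in this article. The HTML snippet, the `product`, `name`, and `price` class names, and the `ProductParser` and `extract_products` helpers are all hypothetical and exist only for illustration; a real page would be fetched over HTTP and would likely need more robust handling.

```python
from html.parser import HTMLParser

# Hypothetical product listing; a real page would be fetched over HTTP.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> per product."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished records
        self._field = None   # field currently being read, if any
        self._current = {}   # record under construction

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self._current = {}
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that sits inside a field we care about.
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.products.append(self._current)
            self._current = {}


def extract_products(html):
    """Parse an HTML string and return a list of product dictionaries."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products


if __name__ == "__main__":
    for product in extract_products(SAMPLE_HTML):
        print(product)
```

Point-and-click tools build the equivalent of these selection rules visually, while frameworks aimed at developers let you write them in code.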

Key Features to Look for in Data Extraction Tools

When selecting a data extraction tool, focus on a few key features. Ease of use comes first: a user-friendly, point-and-click interface can save considerable time, especially for non-technical users. Customization matters as well, because the ability to define custom rules lets the tool handle more complex extraction tasks and adapt to specific business requirements. Export options should be flexible, covering formats such as CSV, Excel, JSON, or XML, so that extracted data can feed into different systems and workflows. Scalability is another factor: the tool should handle large datasets and grow with your business needs.

As businesses expand, the ability to manage larger and more complex data becomes critical. Lastly, data accuracy is essential, as a good extraction tool should minimize errors and ensure that the data captured is reliable and correct. These features together help ensure that the tool will be both effective and efficient in managing data extraction tasks.
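
As an illustration of why flexible export matters, the sketch below writes the same records to CSV, JSON, and XML using only Python's standard library. The `RECORDS` sample and the `to_csv`, `to_json`, and `to_xml` helpers are hypothetical names chosen for this example, and Excel output is left out because it would need a third-party library.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical extracted records; every record is assumed to share the same keys.
RECORDS = [
    {"name": "Widget", "price": "9.99"},
    {"name": "Gadget", "price": "24.50"},
]


def to_csv(records):
    """Serialize records to CSV text, using the first record's keys as the header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize records to a JSON array."""
    return json.dumps(records, indent=2)


def to_xml(records):
    """Serialize records to XML with one <record> element per item."""
    root = ET.Element("records")
    for record in records:
        item = ET.SubElement(root, "record")
        for key, value in record.items():
            ET.SubElement(item, key).text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(to_csv(RECORDS))
    print(to_json(RECORDS))
    print(to_xml(RECORDS))
```

Keeping extraction separate from serialization, as here, is one way to let the same data feed a spreadsheet, a web service, and a legacy system without re-running the extraction.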

Choosing the Right Tool for Your Needs

Choosing the right data extraction tool means matching it to the specific needs of your project. Start with the complexity of the data. If your data is relatively simple and structured, many tools can handle it with ease. If you’re dealing with complex or unstructured data, such as information from dynamic websites or PDFs, you’ll need a tool with more advanced capabilities, such as machine learning, natural language processing, content recognition, or pattern detection. Next, consider the scale of your extraction. If you’re working with a large dataset or need ongoing extraction from multiple sources, choose a tool that scales to high volumes without performance degradation. By matching the tool’s capabilities to the complexity, scale, and type of your data, you make a smooth and efficient extraction process far more likely, saving time and resources while improving the quality of the extracted data.
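
The difference between structured and unstructured sources can be seen in a small Python sketch. The sample inputs, the two regular expressions, and the `extract_from_text` helper are assumptions made for illustration; real unstructured text usually needs far more careful patterns or a dedicated tool.

```python
import json
import re

# Structured input: fields are already labeled, so extraction is a simple lookup.
structured = '{"company": "Acme Corp", "email": "sales@acme.example", "price": 19.99}'
record = json.loads(structured)

# Unstructured input: the same facts are buried in free text, so they have to
# be located with patterns (here, simple regular expressions).
unstructured = "Contact Acme Corp at sales@acme.example. Plans start at $19.99 per month."

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PRICE_RE = re.compile(r"\$(\d+(?:\.\d{2})?)")


def extract_from_text(text):
    """Pull the first email address and dollar amount out of free text."""
    email = EMAIL_RE.search(text)
    price = PRICE_RE.search(text)
    return {
        "email": email.group(0) if email else None,
        "price": float(price.group(1)) if price else None,
    }


if __name__ == "__main__":
    print(record["email"], record["price"])
    print(extract_from_text(unstructured))
```

The structured case needs one parsing call, while the unstructured case depends on patterns that must be written, tested, and maintained, which is the gap that tools with pattern detection aim to close.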

How to Integrate Data Extraction Tools into Your Workflow
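
As a rough sketch of what integration can look like in practice, the Python example below loads extracted records into a SQLite database and logs any malformed rows instead of failing silently. The `run_extraction` function is a hypothetical stand-in for whatever API or export your chosen tool provides, and the `products` table layout is likewise an assumption made for illustration.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("extraction_pipeline")


def run_extraction():
    """Hypothetical stand-in for a real tool's API or export file."""
    return [
        {"name": "Widget", "price": "9.99"},
        {"name": "Gadget", "price": "24.50"},
        {"name": "Broken", "price": "n/a"},  # malformed on purpose
    ]


def load_into_db(conn, rows):
    """Insert valid rows; log and skip rows with a missing or non-numeric field."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT PRIMARY KEY, price REAL)"
    )
    loaded = 0
    for row in rows:
        try:
            name = row["name"]
            price = float(row["price"])
        except (KeyError, ValueError):
            log.warning("Skipping malformed row: %r", row)
            continue
        # Replace existing entries so that re-running the job refreshes the data.
        conn.execute(
            "INSERT OR REPLACE INTO products (name, price) VALUES (?, ?)",
            (name, price),
        )
        loaded += 1
    conn.commit()
    return loaded


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    count = load_into_db(connection, run_extraction())
    print(f"Loaded {count} rows")
```

Running a script like this on a schedule covers automation and storage, and the logged warnings give a monitoring or alerting system something to act on.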

Best Practices for Using Data Extraction Tools

To keep your data extraction process effective and accurate, follow a few best practices. First, always test your extraction setup before scaling it. Testing confirms that the tool is collecting the right data and that the process works as expected. Second, be prepared for anti-scraping measures. Websites often use IP blocking and CAPTCHAs to deter automated scraping, so proxies and CAPTCHA-solving techniques can help keep data collection uninterrupted. Third, update your extraction rules regularly. Websites frequently change their structure and layout, which can break an extraction process; adjusting your rules to match keeps data collection continuous and reliable. Together, these practices reduce errors and improve the accuracy of the collected data, leading to more valuable insights and better-informed decisions.
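
One way to test an extraction setup before scaling it is to validate a small trial run against the fields you expect. The sketch below is a minimal Python example; the `REQUIRED_FIELDS` schema, the 90% threshold, and the `validate_sample` helper are assumptions chosen for illustration, not a standard.

```python
REQUIRED_FIELDS = ("name", "price")  # hypothetical schema for this example


def validate_sample(records, required=REQUIRED_FIELDS, min_valid_ratio=0.9):
    """Check a small trial run; return (ok, problems).

    problems is a list of (record_index, missing_fields) tuples.
    """
    if not records:
        # An empty result usually means the extraction rules matched nothing.
        return False, [(None, list(required))]

    problems = []
    for index, record in enumerate(records):
        missing = [field for field in required if not record.get(field)]
        if missing:
            problems.append((index, missing))

    valid_ratio = (len(records) - len(problems)) / len(records)
    return valid_ratio >= min_valid_ratio, problems


if __name__ == "__main__":
    trial_run = [
        {"name": "Widget", "price": "9.99"},
        {"name": "Gadget", "price": ""},  # price selector found nothing
    ]
    ok, problems = validate_sample(trial_run)
    print("ok:", ok, "problems:", problems)
```

Running the same check on every scheduled job can also flag the moment a site's layout changes, since a sudden rise in missing fields suggests the extraction rules need updating.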

Conclusion

Data extraction tools have become essential for efficiently gathering large volumes of structured and unstructured data. Whether you’re a developer building custom solutions, a marketer analyzing consumer behavior, or a business analyst conducting market research, the right tool can significantly streamline your workflow. These tools automate data collection, saving time and reducing the human error that comes with manual extraction, which in turn supports more consistent data and better-informed decisions. The tools highlighted above each offer capabilities suited to different extraction needs: some target non-technical users with point-and-click interfaces, while others provide advanced features for developers working with complex datasets. Whether you’re extracting data from websites, APIs, or databases, selecting the right tool for your specific requirements can increase operational efficiency, improve data accuracy, and enhance the quality of the insights you derive.