- Introduction to Data Engineering Skills

- Programming Languages: Python, SQL, and Java

- Database Management Skills

- ETL (Extract, Transform, Load) Skills

- Big Data Tools: Hadoop, Spark, and Kafka

- Data Modeling and Warehousing Skills

- Cloud Technologies for Data Engineers

- Data Pipeline Development

- Version Control (Git) and CI/CD Skills

- Problem-Solving and Analytical Skills

- Communication and Collaboration Skills

- Continuous Learning and Certification

- Conclusion

Introduction to Data Engineering Skills

Data engineering plays a pivotal role in the modern data ecosystem. It serves as the backbone for data-related operations by ensuring that raw data is collected, cleaned, transformed, and made ready for analysis. Data engineers build and maintain the infrastructure, tools, frameworks, and systems needed to handle large volumes of data efficiently and reliably. Their work centers on robust data pipelines that move data from various sources into centralized storage such as data warehouses or data lakes, while preserving data quality, integrity, and security along the way.

As organizations across industries rely more heavily on data-driven decision-making to gain competitive advantages and improve operational efficiency, demand for skilled data engineers continues to grow, making this one of the most sought-after roles in the tech sector. The responsibilities extend well beyond basic data handling: data engineers collaborate with data scientists, analysts, and other stakeholders to understand business needs, design scalable data architecture, and ensure that the right data is available in the right format at the right time.

This requires a broad skill set that includes proficiency in programming languages such as Python, SQL, and Java; experience with big data technologies such as Hadoop, Spark, and Kafka; familiarity with cloud platforms such as AWS, Azure, or Google Cloud; and knowledge of data modeling, ETL (Extract, Transform, Load) processes, and workflow orchestration tools such as Airflow or Luigi.

In addition to technical skills, soft skills such as problem-solving, communication, adaptability, and teamwork are crucial for navigating complex data environments and aligning technical solutions with business goals. This article examines both the technical and interpersonal competencies essential for a successful career in data engineering, providing an overview of what it takes to thrive in this rapidly evolving field.

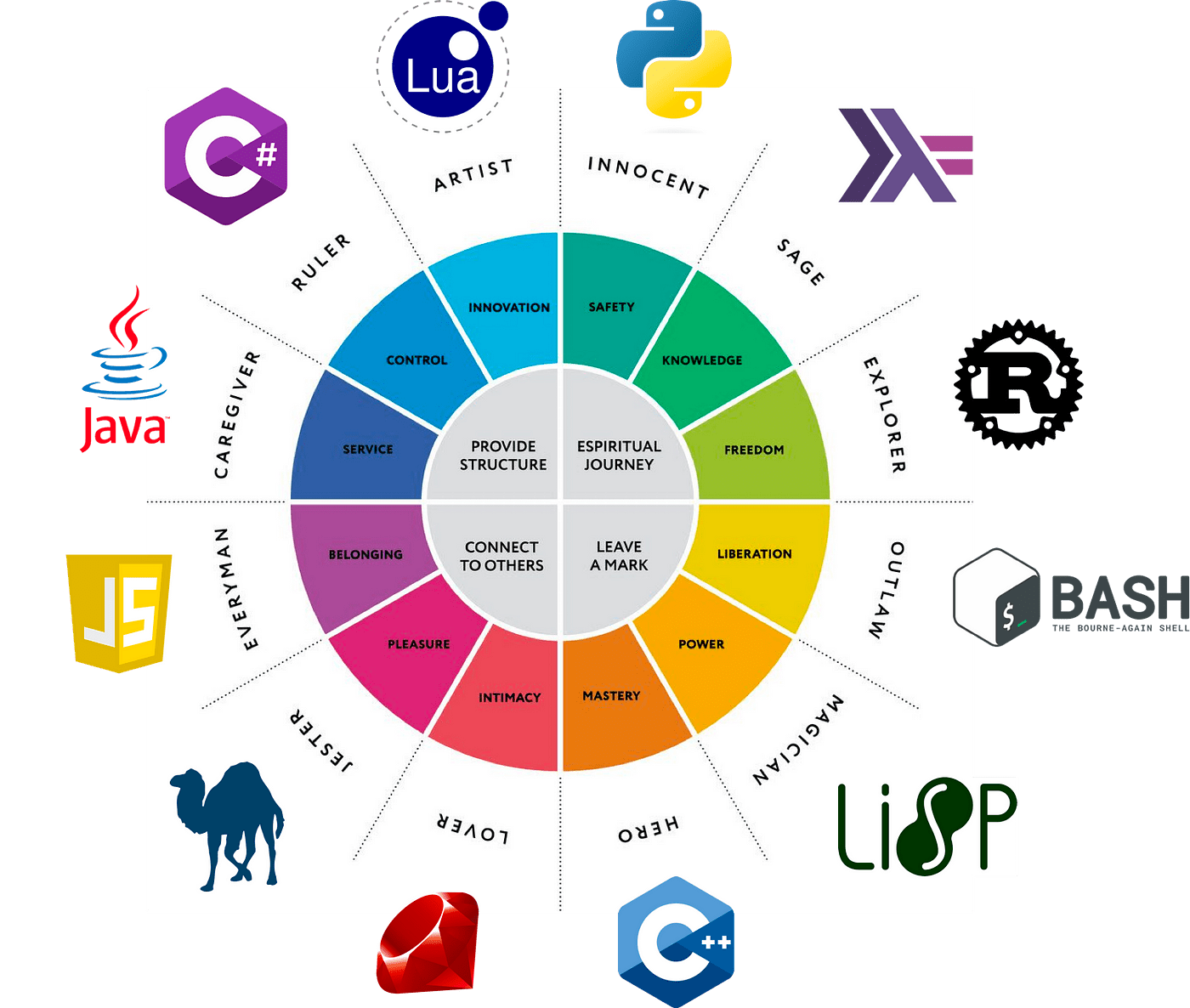

Programming Languages: Python, SQL, and Java

Programming is at the heart of data engineering. Data engineers need proficiency in multiple programming languages to build data pipelines, develop ETL processes, and create scalable systems. Three of the most commonly used languages in data engineering are Python, SQL, and Java.

- Python: Python is the most widely used programming language in data engineering. It is a versatile language with a rich ecosystem of libraries and frameworks, such as Pandas, NumPy, and PySpark, that allow data engineers to efficiently manipulate, process, and analyze data. Python is also ideal for writing scripts and automating tasks, such as data extraction, transformation, and loading (ETL).

- SQL: SQL (Structured Query Language) is essential for querying and managing data in relational databases. Data engineers must be proficient in writing SQL queries to retrieve, update, and manipulate data stored in databases. SQL is also used for working with cloud databases and big data tools, as many of them support SQL-like querying.

- Java: Java is commonly used in enterprise-level applications and is important in building large-scale data pipelines. It is often used for developing data integration systems, especially in big data environments. Java is also a key language for working with frameworks like Hadoop and Apache Kafka.
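
To illustrate how Python and SQL typically divide the work, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for a production relational database (an assumption for illustration; the `events` table and its rows are hypothetical). Python prepares and loads the rows, while SQL performs the set-based aggregation.

```python
import sqlite3

# In-memory SQLite database standing in for a production RDBMS (illustrative assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, event_type TEXT, amount REAL)")

# Python prepares the rows; executemany sends them using bound parameters.
rows = [
    (1, "purchase", 20.0),
    (1, "purchase", 15.5),
    (2, "refund", -5.0),
    (2, "purchase", 40.0),
]
conn.executemany("INSERT INTO events VALUES (?, ?, ?)", rows)

# SQL performs the filtering and aggregation; Python consumes the result.
query = """
    SELECT user_id, SUM(amount) AS total
    FROM events
    WHERE event_type = 'purchase'
    GROUP BY user_id
    ORDER BY user_id
"""
totals = dict(conn.execute(query).fetchall())
print(totals)  # {1: 35.5, 2: 40.0}
```

Against MySQL or PostgreSQL the pattern is the same, but you would use that database's own driver and its parameter placeholder style.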

Database Management Skills

Data engineers manage the backbone of data systems, working extensively with both relational and NoSQL databases to store, retrieve, and manage large volumes of structured, semi-structured, and unstructured data. They design, implement, and maintain the storage infrastructure that supports the diverse needs of modern applications and analytics platforms.

When working with relational databases (RDBMS) such as MySQL, PostgreSQL, and Microsoft SQL Server, data engineers need a deep understanding of how to structure data efficiently, write and optimize SQL queries, manage indexes to improve query performance, and apply normalization to eliminate redundancy and protect data integrity. This expertise keeps relational systems scalable, consistent, and able to handle transactional workloads reliably.

Data engineers must also be adept with NoSQL databases such as MongoDB, Cassandra, and Redis, which are designed for large-scale data that does not fit neatly into tables. Understanding the differences between key-value stores (such as Redis), document databases (such as MongoDB), and wide-column or column-family stores (such as Cassandra) is crucial, because each type suits different data shapes and access patterns. A strong grasp of these systems lets data engineers choose the right storage for each use case, balancing flexibility and performance.

Beyond database design and implementation, maintaining data integrity is a top priority. Data engineers enforce consistency and accuracy through constraints such as primary keys, unique constraints, and foreign keys (referential integrity), together with sound normalization, while indexing keeps that data quickly accessible. These practices keep data trustworthy and usable across business applications and analytical processes. Altogether, this knowledge enables data engineers to build robust, scalable, and reliable data infrastructure that meets the growing demands of data-driven enterprises.
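
The integrity practices described above can be sketched in a few lines of SQL. This minimal example assumes SQLite (via Python's `sqlite3` module) and uses hypothetical `customers` and `orders` tables: the schema is normalized so each customer's email is stored once, a foreign key plus `UNIQUE` and `CHECK` constraints reject bad rows, and an index speeds up lookups by customer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is enabled on the connection.
conn.execute("PRAGMA foreign_keys = ON")

# Normalized schema: each customer's details are stored once; orders reference them by key.
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        email       TEXT NOT NULL UNIQUE
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        total       REAL NOT NULL CHECK (total >= 0)
    );
    -- Index supporting the common "all orders for one customer" lookup.
    CREATE INDEX idx_orders_customer ON orders(customer_id);
    INSERT INTO customers VALUES (1, 'a@example.com');
    INSERT INTO orders VALUES (100, 1, 25.0);
""")

def try_insert(sql):
    """Run one INSERT and report whether the database accepted or rejected it."""
    try:
        conn.execute(sql)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected ({exc})"

print(try_insert("INSERT INTO orders VALUES (101, 99, 10.0)"))          # unknown customer_id
print(try_insert("INSERT INTO customers VALUES (2, 'a@example.com')"))  # duplicate email
print(try_insert("INSERT INTO orders VALUES (102, 1, -5.0)"))           # negative total
print(try_insert("INSERT INTO orders VALUES (103, 1, 12.0)"))           # valid row
```

The SQLite pragma is a quirk of that engine; server databases such as PostgreSQL enforce declared foreign keys without extra configuration.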

ETL (Extract, Transform, Load) Skills

One of the core tasks of a data engineer is to build and manage ETL processes. ETL refers to the process of extracting data from different sources, transforming it into a usable format, and loading it into data storage systems or data warehouses for analysis.

- Extract: The extraction phase involves collecting data from various sources like databases, APIs, flat files, and external data streams. Data engineers need to understand the best methods for connecting to and extracting data from these sources, including batch and real-time data extraction.

- Transform: The transformation step involves cleaning, formatting, and enriching the data to make it usable. This could include tasks like removing duplicates, handling missing values, converting data types, aggregating data, or applying business logic. Data engineers often use programming languages like Python or frameworks like Apache Spark to perform these transformations.

- Load: The loading phase is where the transformed data is stored in a data warehouse, database, or data lake. Data engineers need to ensure that the data is loaded efficiently and that it can be easily queried and accessed by data scientists and analysts.
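
The three phases can be sketched end to end in plain Python. This is a minimal, single-process example under stated assumptions: the CSV export, its column names, and the rule that a missing amount becomes 0.0 are all hypothetical, and an in-memory SQLite database stands in for the warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: one duplicate row, one missing amount, inconsistent country case.
RAW_CSV = """order_id,country,amount
1,US,19.99
2,de,5.00
2,de,5.00
3,US,
4,FR,12.50
"""

def extract(text):
    """Extract: read CSV rows as dictionaries (in practice, a file, database, or API)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop duplicate orders, fill missing values, normalize types and case."""
    seen = set()
    clean = []
    for row in rows:
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # remove duplicates by order_id
        seen.add(order_id)
        # Hypothetical business rule: a missing amount is treated as 0.0.
        amount = float(row["amount"]) if row["amount"] else 0.0
        clean.append((order_id, row["country"].upper(), amount))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into a table analysts can query."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
result = conn.execute(
    "SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country"
).fetchall()
print(result)  # [('DE', 5.0), ('FR', 12.5), ('US', 19.99)]
```

At production scale the same extract/transform/load split usually remains, but each step runs on a framework such as Spark and writes to a real warehouse or data lake.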

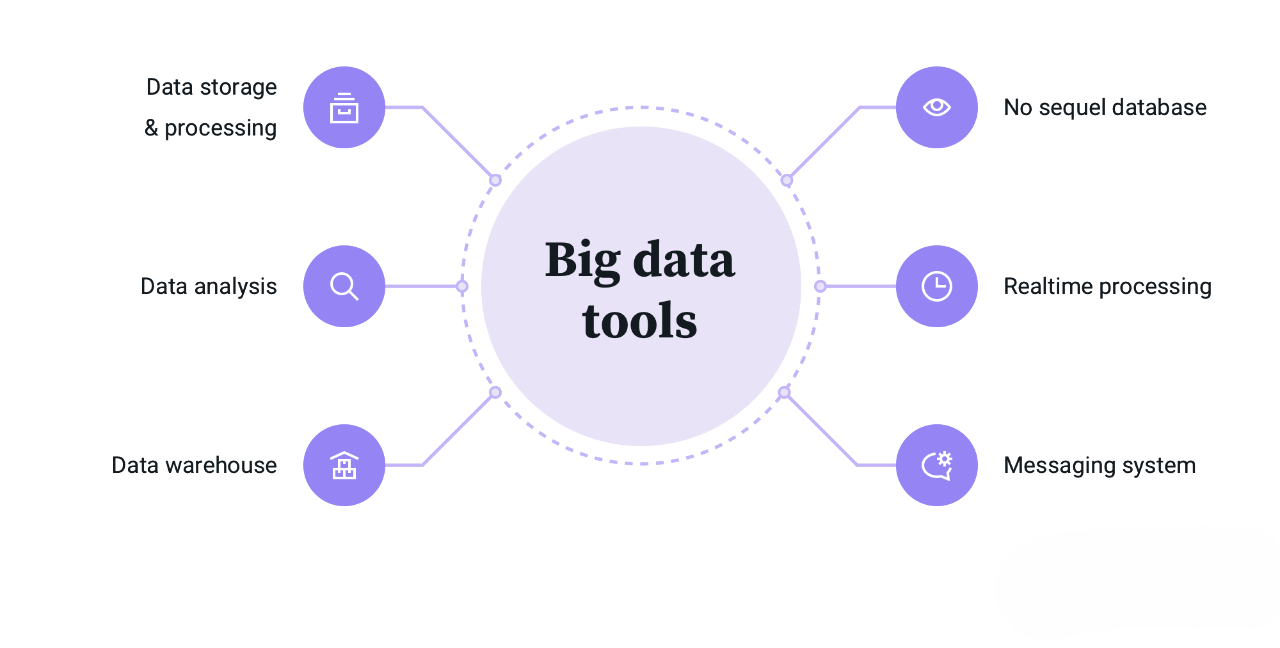

Big Data Tools: Hadoop, Spark, and Kafka

As data volumes grow, traditional data processing tools often struggle to keep up with the scale and complexity of modern datasets, creating the need for distributed systems. This is where big data tools become essential.

Apache Hadoop, one of the foundational technologies in the big data ecosystem, is an open-source framework for the distributed storage and processing of very large datasets across clusters of commodity computers, allowing organizations to handle petabytes of data cost-effectively. Data engineers working with Hadoop must understand its key components, particularly the Hadoop Distributed File System (HDFS), which stores data in blocks replicated across nodes, and the MapReduce programming model, which processes that data in parallel.

As demand for faster and more flexible processing increased, Apache Spark emerged as a preferred alternative. Spark is also an open-source distributed computing system, but it typically outperforms Hadoop MapReduce, especially on iterative workloads, because it can keep intermediate data in memory instead of writing it to disk between stages. Spark handles both batch processing and stream processing (through Structured Streaming, which by default processes data in small micro-batches) and is used for data transformation, machine learning, and streaming analytics. Its APIs for Python, Scala, and Java, along with SQL support, make it accessible to many developers.

Complementing Hadoop and Spark is Apache Kafka, a distributed event streaming platform for real-time data ingestion. Producers publish records to topics and consumers subscribe to them, which makes Kafka central to real-time data pipelines and event-driven architectures. It integrates with big data frameworks such as Hadoop and Spark, enabling continuous data flow and analytics at scale.

For data engineers, mastering these tools is essential to designing systems that can process, analyze, and respond to data in real time, which makes them indispensable in modern data infrastructure.
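
The MapReduce model is easier to grasp with a concrete example. The sketch below simulates the classic word count in a single Python process; it illustrates the programming model only and is not Hadoop's Java API. In a real cluster, the map and reduce functions run in parallel on many nodes over HDFS blocks, and the framework performs the shuffle over the network.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    """Shuffle: group all values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: combine all values that share one key."""
    return key, sum(values)

lines = ["Spark and Hadoop", "Kafka feeds Spark", "spark streaming"]
mapped = chain.from_iterable(map_phase(line) for line in lines)
counts = dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())
print(counts["spark"])  # 3
```

In PySpark the same job is usually expressed with the RDD methods `flatMap`, `map`, and `reduceByKey`, with Spark keeping intermediate results in memory between those steps where it can.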

Data Modeling and Warehousing Skills

Data modeling and warehousing are critical components of data engineering, as they ensure that data is stored and organized in a way that makes it easy to query and analyze.

- Data Modeling: Data modeling involves designing the structure and relationships between data elements. Data engineers need to know how to create normalized and denormalized schemas, design fact and dimension tables, and handle data types and relationships effectively. Common data modeling techniques include entity-relationship modeling (ERM) and dimensional modeling.

- Data Warehousing: A data warehouse is a central repository where data from multiple sources is consolidated and made available for analysis. Data engineers must be proficient in building and managing data warehouses using platforms such as Amazon Redshift, Google BigQuery, and Snowflake. They should also know how to design data pipelines to load and transform data into the warehouse.
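
Dimensional modeling is easiest to see in a small star schema. This sketch assumes SQLite via Python's `sqlite3` module, and the `fact_sales`, `dim_product`, and `dim_date` tables and their rows are hypothetical: the fact table stores numeric measures plus foreign keys, while the dimension tables store the descriptive attributes used to slice those measures.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension tables hold descriptive attributes.
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
    CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
    -- The fact table holds measures plus foreign keys to each dimension.
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product(product_key),
        date_key    INTEGER REFERENCES dim_date(date_key),
        quantity    INTEGER,
        revenue     REAL
    );
    INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
    INSERT INTO dim_date VALUES (20240101, 2024, 1), (20240201, 2024, 2);
    INSERT INTO fact_sales VALUES
        (1, 20240101, 2, 2000.0),
        (2, 20240101, 1, 300.0),
        (1, 20240201, 1, 1000.0);
""")

# Typical analytical query: join facts to dimensions, then aggregate by dimension attributes.
rows = conn.execute("""
    SELECT p.category, d.month, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_product AS p ON f.product_key = p.product_key
    JOIN dim_date AS d ON f.date_key = d.date_key
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()
print(rows)  # [('Electronics', 1, 2000.0), ('Electronics', 2, 1000.0), ('Furniture', 1, 300.0)]
```

Warehouses such as Amazon Redshift, Google BigQuery, and Snowflake run the same style of join-and-aggregate query, just over far larger fact tables.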

Cloud Technologies for Data Engineers

Cloud computing has transformed how data is stored, processed, and analyzed, offering organizations scalable, flexible, and cost-effective ways to manage large volumes of data. For data engineers, expertise in cloud platforms such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure is essential for designing and managing modern data infrastructure.

AWS provides a comprehensive suite of services, including Amazon S3 for scalable object storage, Amazon RDS and DynamoDB for relational and NoSQL databases, AWS Lambda for serverless computing, Amazon EMR for big data processing with Hadoop and Spark, AWS Glue for serverless ETL, and Amazon Redshift for data warehousing. Together these let data engineers build cloud-based data pipelines tuned for both performance and cost.

GCP offers comparable tools, such as Google Cloud Storage for object storage (including low-cost archival storage classes), Cloud Dataflow for unified stream and batch processing, BigQuery for serverless, scalable analytics, and Cloud Pub/Sub for real-time messaging and event-driven data ingestion. Mastery of these tools enables engineers to build efficient, reliable pipelines for both real-time and batch scenarios.

On Microsoft Azure, data engineers can use Azure Blob Storage for storing massive datasets, Azure SQL Database for managed relational storage, Azure Synapse Analytics for combining big data processing and data warehousing, Azure Data Factory for orchestrating data movement and transformation, and Azure Databricks for scalable data processing with Apache Spark. These services support workflows ranging from ETL development to real-time analytics and machine learning.

Understanding the full ecosystem of services on these platforms is critical for building scalable, high-performance, and cost-effective data engineering solutions that support advanced analytics, machine learning, and business intelligence at scale.

Data Pipeline Development

Data pipelines are the backbone of data engineering. A data pipeline is a series of processes that automate the extraction, transformation, and loading of data. Data engineers are responsible for designing, developing, and maintaining these pipelines to ensure data flows smoothly from source to storage and analysis.

A good data pipeline should be:

- Scalable: Capable of handling increasing volumes of data.

- Reliable: Ensuring that data is delivered consistently and accurately.

- Efficient: Minimizing latency and optimizing resources.

- Automated: Reducing manual intervention by automating processes.

Data engineers use tools like Apache Airflow, Apache NiFi, and Luigi to orchestrate and schedule pipeline tasks.
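
To show what orchestrators automate, here is a minimal sketch of dependency ordering plus retries using Python's standard `graphlib` module (Python 3.9+). It is not the Airflow, NiFi, or Luigi API; the `run_pipeline` function, the task names, and the retry settings are hypothetical.

```python
import time
from graphlib import TopologicalSorter

def run_pipeline(tasks, dependencies, max_retries=2, retry_delay=0.0):
    """Run tasks in dependency order, retrying each failed task up to max_retries times.

    tasks: mapping of task name -> zero-argument callable
    dependencies: mapping of task name -> set of upstream task names
    """
    completed = []
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception as exc:
                if attempt == max_retries:
                    raise RuntimeError(f"task {name!r} failed after {attempt + 1} attempts") from exc
                time.sleep(retry_delay)  # back off before retrying
    return completed

# Simulated flaky source: extract fails on its first call, then succeeds.
calls = {"extract": 0}

def extract():
    calls["extract"] += 1
    if calls["extract"] == 1:
        raise ConnectionError("transient source outage")

order = run_pipeline(
    tasks={"extract": extract, "transform": lambda: None, "load": lambda: None},
    dependencies={"extract": set(), "transform": {"extract"}, "load": {"transform"}},
)
print(order)  # ['extract', 'transform', 'load']
```

Tools such as Airflow add what this sketch omits: scheduling, parallel execution, persistent run state, alerting, and a UI for monitoring runs.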

Version Control (Git) and CI/CD Skills

Version control is essential for managing code changes, enabling collaboration, and maintaining the integrity of software projects, especially in data engineering, where multiple team members often work on the same complex pipelines and infrastructure. Git, the most widely adopted version control system, lets data engineers track changes, work on branches, merge teammates' contributions, and roll back to earlier states when necessary. Proficiency in Git allows engineers to work effectively in distributed teams, streamline development workflows, and keep the code repository clean and organized.

Alongside version control, Continuous Integration and Continuous Deployment (CI/CD) practices are crucial for modern data engineering workflows. CI/CD automates the integration, testing, and deployment of code changes, reducing the time and effort required to move code from development to production. With CI/CD pipelines, every change can be automatically tested for errors, checked for quality and performance, and deployed consistently across environments. Familiarity with CI/CD tools such as Jenkins, GitHub Actions, GitLab CI, CircleCI, or Azure DevOps enables engineers to build reliable deployment pipelines, accelerate release cycles, and reduce the risk of introducing bugs into production. Together, Git and CI/CD form the foundation of a collaborative, automated workflow that supports rapid iteration and continuous improvement in data engineering projects.
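
As an example of what a CI pipeline runs on each change, here is a minimal unit test for a pipeline transformation using Python's built-in `unittest` module. The `normalize_country` function is a hypothetical transformation; saved in a file named, for example, `test_transforms.py`, these tests could be run by a CI job in any of the tools above with `python -m unittest discover`.

```python
import unittest

def normalize_country(code):
    """Hypothetical transformation under test: trim whitespace and upper-case a country code."""
    if code is None or not code.strip():
        return None
    return code.strip().upper()

class NormalizeCountryTest(unittest.TestCase):
    def test_trims_and_upper_cases(self):
        self.assertEqual(normalize_country("  de "), "DE")

    def test_blank_or_missing_becomes_none(self):
        self.assertIsNone(normalize_country("   "))
        self.assertIsNone(normalize_country(None))
```

If any assertion fails, the test command exits with a non-zero status, which is what causes the CI job to fail and block the change from being deployed.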

Problem-Solving and Analytical Skills

Data engineers need strong problem-solving and critical thinking skills to address the complex challenges of processing, storing, and managing data in large-scale systems. These skills are essential for analyzing data workflows, identifying inefficiencies or bottlenecks, troubleshooting errors, and optimizing performance across the entire pipeline. In practice, data engineers regularly encounter data inconsistencies, processing latency, and failures in automated pipelines, all of which require a methodical approach to diagnose and resolve. Strong analytical skills let them break complex systems into manageable components, find the root cause of a problem, and design targeted solutions that improve reliability and scalability. Critical thinking also helps them anticipate potential issues, weigh trade-offs between architectural choices, and make decisions that align with technical and business objectives. These abilities matter not only for maintaining existing infrastructure but also for designing new systems that are resilient, efficient, and adaptable to evolving data needs. As technologies change and data grows more complex, the capacity to think critically and solve problems efficiently remains fundamental to success in the field.

Communication and Collaboration Skills

Data engineers work closely with data scientists, analysts, and other stakeholders across the organization, so the ability to explain complex technical concepts clearly is essential, especially with non-technical teams. They must be able to explain how data flows through various systems, surface and resolve issues as they arise, and make sure data is accessible and ready for analysis. Effective collaboration ensures that data is structured, processed, and maintained in a way that supports decision-making and business objectives. It is equally important when working through data quality, integration, scalability, performance, and security problems that span several teams. This work is foundational to seamless data analysis and to data-driven insights across the organization.

Continuous Learning and Certification

The field of data engineering evolves continuously, driven by rapid advances in technologies, tools, and industry best practices. To remain effective and competitive, data engineers must commit to continuous learning and professional development. Staying current with emerging trends, frameworks, and methodologies is essential for building scalable, efficient, future-ready data systems, and it helps engineers adapt to new challenges, integrate new solutions, and make informed decisions that align with modern data strategies.

One of the most effective ways to demonstrate ongoing learning and validate skills is to earn industry-recognized certifications. Certifications such as the Google Cloud Professional Data Engineer and Microsoft Certified: Azure Data Engineer Associate are well regarded in the industry and can strengthen a data engineer's credibility and career opportunities. On AWS, the former AWS Certified Big Data – Specialty exam has been retired; the current data-engineering credential is the AWS Certified Data Engineer – Associate. These certifications cover cloud data architecture, pipeline design, data security, performance optimization, and integration with analytics and machine learning platforms. Earning them helps data engineers solidify their knowledge and signals to employers and peers a commitment to staying at the forefront of the profession.

Conclusion

Data engineering is a multifaceted, rapidly evolving field that demands a well-rounded blend of technical and soft skills to manage the growing complexity of modern data systems. To excel, data engineers must master a wide range of technical competencies: proficiency in programming languages such as Python, SQL, and Java; a deep understanding of both relational and NoSQL database management; and expertise in designing and managing ETL (Extract, Transform, Load) processes that prepare raw data for meaningful analysis. Familiarity with big data tools such as Hadoop, Spark, and Kafka, along with hands-on experience on cloud platforms such as AWS, Google Cloud Platform, or Microsoft Azure, is essential for building scalable, high-performance pipelines that support both real-time and batch processing.

Beyond technical know-how, success in data engineering relies heavily on soft skills: problem-solving, for debugging complex data workflows and optimizing performance; communication, for collaborating across cross-functional teams; and a commitment to continuous learning to keep pace with evolving technologies and best practices. As organizations increasingly depend on data-driven insights to inform strategy and innovation, demand for skilled data engineers continues to grow, making this both a critical role in today’s data landscape and a rewarding career path with strong opportunities for growth and advancement.