- Introduction to AI and Inference

- Definition and Importance of Inference in AI

- Types of Inference in AI

- Deductive vs Inductive Inference

- Bayesian Inference in AI

- Rule-Based Inference Systems

- Role of Inference in Machine Learning

- Logical Reasoning and AI Inference

- Conclusion

Introduction to Artificial Intelligence

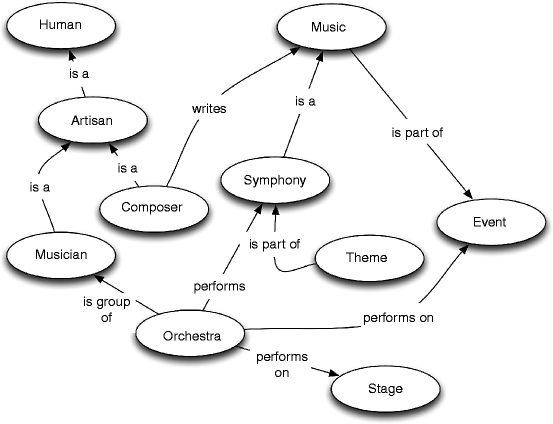

Artificial Intelligence (AI) is the simulation of human intelligence in machines that are programmed to think, learn, and solve problems autonomously. These systems are designed to mimic human cognitive functions such as reasoning, learning from experience, recognizing patterns, understanding language, and making decisions.

A critical component of this intelligence is inference: the process by which a system derives new knowledge or insights from existing information. Inference can involve mathematical models, statistical algorithms, or logical rules, and it is what lets an AI system make predictions, detect trends, generate explanations, and draw logical conclusions from available data, facts, and observed patterns.

Inference supports tasks such as classification, forecasting, and diagnostics, and it also helps systems update their knowledge bases and improve over time. By continuously analyzing and interpreting data, AI systems can adapt to new environments, reason about uncertainty, and act on real-time information. Whether it is powering recommendation engines, medical diagnosis tools, autonomous vehicles, or voice assistants, inference bridges the gap between raw data and actionable decisions.


Definition and Importance of Inference in AI

Inference in AI refers to the ability of an AI system to make decisions, predict outcomes, or reason about data by applying logical rules, statistical models, or learned patterns to the existing knowledge base. In AI, inference enables systems to:

- Make predictions: Use trained models to forecast future events or classify new data.

- Draw conclusions: Derive logical conclusions from available data.

- Adapt: Make decisions based on dynamic or changing information.

Inference is vital in AI because it transforms raw data into actionable knowledge. It is the process that connects learning from data with decision-making, which is why it is essential in various applications such as medical diagnostics, autonomous vehicles, recommendation systems, and natural language processing.

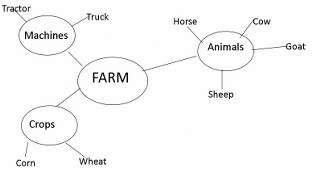

Types of Inference in AI

- Forward Inference (Forward Chaining): This data-driven approach starts with known facts or premises and applies rules to derive new facts or conclusions. For example, an expert system uses forward inference to apply a series of predefined rules to known facts until it reaches a conclusion.

- Backward Inference (Backward Chaining): In contrast, this goal-driven approach starts with a goal or hypothesis and works backward to check which facts or premises would support it. It is often used in diagnostic systems, which take a candidate diagnosis as the goal and check whether the recorded symptoms support it.
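The two directions can be sketched in a few lines of Python. This is a minimal illustration, not a production inference engine: the rule base `RULES`, the fact names, and the functions `forward_chain` and `backward_chain` are all hypothetical and chosen only for the example.

```python
# Hypothetical rule base: each rule maps a set of premises to one conclusion.
RULES = [
    ({"has_fur", "gives_milk"}, "is_mammal"),
    ({"is_mammal", "eats_meat"}, "is_carnivore"),
]

def forward_chain(facts, rules):
    """Data-driven: keep firing rules until no new fact can be added."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

def backward_chain(goal, facts, rules, seen=frozenset()):
    """Goal-driven: a goal holds if it is a known fact, or if some rule
    concludes it and every premise of that rule can itself be proved."""
    if goal in facts:
        return True
    if goal in seen:  # guard against circular rules
        return False
    return any(
        conclusion == goal
        and all(backward_chain(p, facts, rules, seen | {goal}) for p in premises)
        for premises, conclusion in rules
    )

facts = {"has_fur", "gives_milk", "eats_meat"}
derived = forward_chain(facts, RULES)
print(sorted(derived - facts))                        # newly derived facts
print(backward_chain("is_carnivore", facts, RULES))   # can the goal be proved?
```

Forward chaining derives everything it can from the facts, whether or not anyone asked for it. Backward chaining only explores the rules that could establish the goal, which is why it tends to suit question-answering and diagnostic use.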

Deductive vs Inductive Inference

Deductive Inference:

- Deductive reasoning starts with general principles and moves to specific conclusions. If the premises are true, the conclusion must also be true.

- Example: If all humans are mortal (general premise) and Socrates is a human (specific case), then Socrates is mortal (specific conclusion).

- In Artificial Intelligence, deductive inference is used in rule-based systems, where conclusions are derived from a set of rules.

Inductive Inference:

- Inductive reasoning works the other way around, from specific observations to general conclusions. Inference through induction often involves generalizing from examples or experiences.

- Example: If you observe that the sun rises every day, you might infer that the sun will rise again tomorrow.

- Inductive inference is often used in machine learning, where models learn patterns from data and then generalize those patterns to make predictions or decisions.
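As a small illustration of generalizing from specific observations, the sketch below fits a straight line to a handful of data points and then uses it on a case it has never seen. The data (hours studied versus exam score) is made up, and `fit_line` is a hypothetical helper that implements ordinary least squares; real machine learning models are usually far richer, but the inductive step is the same.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up observations: hours studied vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

# Specific observations -> general rule.
slope, intercept = fit_line(hours, scores)

# General rule -> prediction for an unseen case (6 hours).
predicted = slope * 6 + intercept
print(f"score ~ {slope:.2f} * hours + {intercept:.2f}; 6 hours -> {predicted:.1f}")
```

Unlike a deductive conclusion, the prediction is only as good as the pattern in the observations: more or different data could change the fitted line.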

Both styles reappear later in this article. Rule-based systems reason deductively: a knowledge base holds facts and rules, and an inference engine applies them, as in a rule like "if the patient has a fever and cough, it might be the flu." Machine learning models are built inductively and then used for inference: a trained model is deployed to make predictions about new, unseen data, often as real-time decisions in production.


Bayesian Inference in AI

Bayesian inference is a statistical method for updating the probability of a hypothesis as new evidence becomes available. Named after the English statistician Thomas Bayes, it underpins many AI systems, particularly those built on probabilistic reasoning and machine learning, and it allows AI to make informed decisions under uncertainty, ambiguity, or incomplete data.

At the core of the method lies Bayes’ Theorem, which expresses how the probability of a hypothesis should be revised in light of new data:

P(H|E) = [P(E|H) * P(H)] / P(E)

- P(H|E) is the posterior probability: the probability of the hypothesis H given the evidence E.

- P(E|H) is the likelihood: the probability of observing the evidence E if the hypothesis H is true.

- P(H) is the prior probability of the hypothesis before considering the new evidence.

- P(E) is the marginal probability of the evidence across all hypotheses.

This gives a structured framework for learning from data, enabling systems to refine their predictions and conclusions as more evidence is introduced. In AI, Bayesian inference is used in natural language processing (NLP), computer vision, robotics, and predictive modeling, where decisions must be made despite uncertain or incomplete input. For example, in NLP it helps disambiguate meanings based on context; in robotics it assists in sensor fusion and navigation; and in machine learning it makes predictions more reliable by incorporating prior knowledge and quantifying uncertainty. Because each posterior can serve as the prior for the next piece of evidence, a Bayesian system keeps refining its beliefs as data accumulates.
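A short numeric example may make the update concrete. The sketch below applies Bayes' Theorem to a medical test; the numbers (1% prevalence, 90% sensitivity, 5% false positive rate) are invented for illustration, and `posterior` is a hypothetical helper. It expands P(E) over the two cases "H is true" and "H is false", and the second update assumes the two test results are independent given the patient's true condition.

```python
def posterior(prior, likelihood, false_positive_rate):
    """P(H|E) via Bayes' theorem for a binary hypothesis H.

    prior               = P(H)
    likelihood          = P(E|H)
    false_positive_rate = P(E|not H)
    """
    # Marginal probability of the evidence:
    # P(E) = P(E|H) * P(H) + P(E|not H) * P(not H)
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence

# Made-up numbers: 1% prevalence, 90% sensitivity, 5% false positives.
p = posterior(prior=0.01, likelihood=0.90, false_positive_rate=0.05)
print(f"P(disease | one positive test)  = {p:.3f}")   # about 0.154

# A second positive test: the previous posterior becomes the new prior.
p2 = posterior(prior=p, likelihood=0.90, false_positive_rate=0.05)
print(f"P(disease | two positive tests) = {p2:.3f}")  # about 0.766
```

Even with a fairly accurate test, one positive result leaves the hypothesis unlikely because the prior is so low; it takes further evidence to shift the belief substantially.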


Rule-Based Inference Systems

Rule-based inference systems apply predefined logical rules to make decisions or draw conclusions. They operate on a knowledge base containing facts and rules, which the system uses to infer new facts or make decisions. This approach remains important for building interpretable, logical models.

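Components:

- Knowledge Base: Contains facts and rules.

- Inference Engine: Applies the rules to derive new information or make decisions.

Example:

- If the patient has a fever and cough, it might be the flu.

- If the patient has a headache and a runny nose, it might be a cold.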

These systems are well suited to applications such as expert systems, diagnostic tools, and decision support systems.
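The split between a knowledge base and an inference engine can be sketched as follows. This is an assumed, minimal design rather than how any particular expert system is built: the `Rule` class, `KNOWLEDGE_BASE`, and `infer` are hypothetical names, and the two symptom rules (fever and cough suggest flu; headache and runny nose suggest a cold) are illustrative only, not medical guidance.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    conditions: frozenset   # symptoms that must all be present
    conclusion: str         # tentative diagnosis

# Knowledge base: rules are plain data, kept apart from the engine.
KNOWLEDGE_BASE = [
    Rule(frozenset({"fever", "cough"}), "possible flu"),
    Rule(frozenset({"headache", "runny nose"}), "possible cold"),
]

def infer(observed, rules):
    """Inference engine: return each conclusion whose conditions are all
    observed, paired with the conditions that justified it."""
    observed = set(observed)
    return [
        (rule.conclusion, sorted(rule.conditions))
        for rule in rules
        if rule.conditions <= observed
    ]

for conclusion, because in infer({"fever", "cough", "headache"}, KNOWLEDGE_BASE):
    print(f"{conclusion} (because: {', '.join(because)})")
```

Because every conclusion comes with the conditions that triggered it, the system can explain its output, which is the interpretability advantage usually cited for rule-based approaches. New rules can be added to the knowledge base without touching the engine.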

Role of Inference in Machine Learning

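Inference plays a critical role in machine learning (ML) in the following ways:

- Making Predictions: Once a machine learning model is trained, inference allows it to make predictions about new, unseen data.

- Model Deployment: Inference uses a trained machine learning model to classify data, predict future outcomes, or recommend actions.

- Real-Time Decisions: In production environments, AI systems use inference to make quick decisions based on incoming data.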


Inference in machine learning is often optimized for speed and efficiency, especially in real-time applications such as autonomous vehicles, recommendation engines, and fraud detection systems.
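The difference between training and inference can be shown with a deliberately tiny classifier. The sketch below uses a nearest-centroid model written in plain Python; the transaction data and labels are made up, `train` and `predict` are hypothetical names, and a real fraud detection system would use scaled features and a much stronger model.

```python
import math

def train(samples):
    """Training (done once, offline): average the feature vectors of each
    label to get one centroid per class."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(model, features):
    """Inference (done per request): return the label of the closest centroid."""
    return min(model, key=lambda label: math.dist(model[label], features))

# Made-up transactions: (amount in dollars, hour of day) -> label.
training_data = [
    ((12.0, 14.0), "legit"), ((30.0, 10.0), "legit"), ((18.0, 16.0), "legit"),
    ((950.0, 3.0), "fraud"), ((1200.0, 2.0), "fraud"),
]

model = train(training_data)           # slower step, run ahead of time
print(predict(model, (25.0, 13.0)))    # fast step, run on each new input
print(predict(model, (1000.0, 4.0)))
```

All the expensive work happens in `train`; `predict` only compares the input against a couple of stored centroids. That asymmetry is what makes it practical to run inference on every incoming request in a real-time system.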

Conclusion

Inference is an essential component of intelligent systems, enabling machines to reason, predict, and make decisions. With advancements in machine learning, Bayesian inference, and rule-based systems, AI continues to improve its ability to make accurate and efficient inferences. In the future, AI inference systems will likely become more powerful, scalable, and interpretable, with potential applications in fields like healthcare, autonomous systems, and personalized recommendations. As AI continues to evolve, inference will remain central to decision-making and intelligent behavior in machines.