- Introduction to Natural Language Processing (NLP)

- Definition of Parsing in NLP

- Types of Parsing Techniques

- Syntactic Parsing vs Semantic Parsing

- Dependency Parsing and Its Applications

- Constituency Parsing in NLP

- Role of Parsing in Chatbots and AI

- Popular Parsing Tools and Libraries

- Challenges in NLP Parsing

- Parsing in Different Programming Languages

- Future Trends in NLP Parsing

- Conclusion and Key Takeaways

Introduction to Natural Language Processing (NLP)

Natural Language Processing (NLP) is a branch of Artificial Intelligence (AI) focused on the interaction between computers and human (natural) languages. It involves designing algorithms that enable machines to process, understand, interpret, and generate human language in a meaningful and contextually relevant way. NLP is crucial in several applications, such as machine translation, speech recognition, sentiment analysis, and chatbots.

A central task in NLP is understanding the structure of sentences in human languages, which often involves parsing. Parsing refers to analyzing a sentence or phrase and determining its syntactic structure, that is, how the words in the sentence relate to one another. This understanding helps computers perform tasks like translation, summarization, and responding intelligently to human queries.

Definition of Parsing in NLP

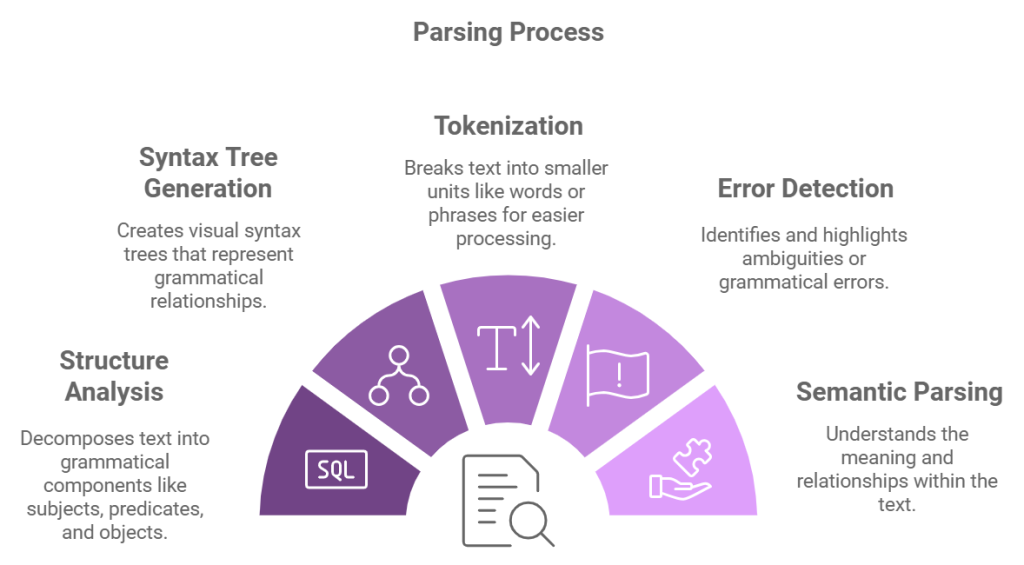

Parsing in NLP refers to the process of analyzing the structure of a sentence to identify its grammatical components and their relationships. This analysis is crucial for extracting meaningful information from the sentence. Parsing plays a key role in various NLP tasks such as machine translation, question answering, and speech recognition. In more technical terms, parsing involves breaking down a sentence into a hierarchical structure, often visualized as a tree, where each node represents a grammatical element (such as a noun phrase or verb phrase). Understanding the sentence’s structure allows NLP systems to identify the relationships between words, their syntactic roles, and the overall meaning of the sentence.
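To make the idea of a hierarchical structure concrete, here is a minimal sketch of one way a parse tree could be held in memory. The nested-tuple layout, the Penn Treebank style labels (S, NP, VP, DT, NN, VBD), and the helper names `leaves` and `bracketed` are assumptions chosen for illustration, and the tree is written by hand rather than produced by a parser.

```python
# A parse tree as nested tuples: (label, child, child, ...).
# Leaves are plain strings (the words of the sentence).
# This tree is hand-written for illustration, not parser output.
tree = (
    "S",
    ("NP", ("DT", "The"), ("NN", "cat")),
    ("VP", ("VBD", "chased"), ("NP", ("DT", "the"), ("NN", "mouse"))),
)


def leaves(node):
    """Return the words under a node, left to right."""
    if isinstance(node, str):
        return [node]
    words = []
    for child in node[1:]:
        words.extend(leaves(child))
    return words


def bracketed(node):
    """Render the tree in the bracketed notation often used for treebanks."""
    if isinstance(node, str):
        return node
    children = " ".join(bracketed(child) for child in node[1:])
    return "(" + node[0] + " " + children + ")"


print(leaves(tree))
print(bracketed(tree))
# (S (NP (DT The) (NN cat)) (VP (VBD chased) (NP (DT the) (NN mouse))))
```

Reading the bracketed form from the outside in shows the hierarchy: the sentence node contains a noun phrase and a verb phrase, and the verb phrase in turn contains another noun phrase.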

Types of Parsing Techniques

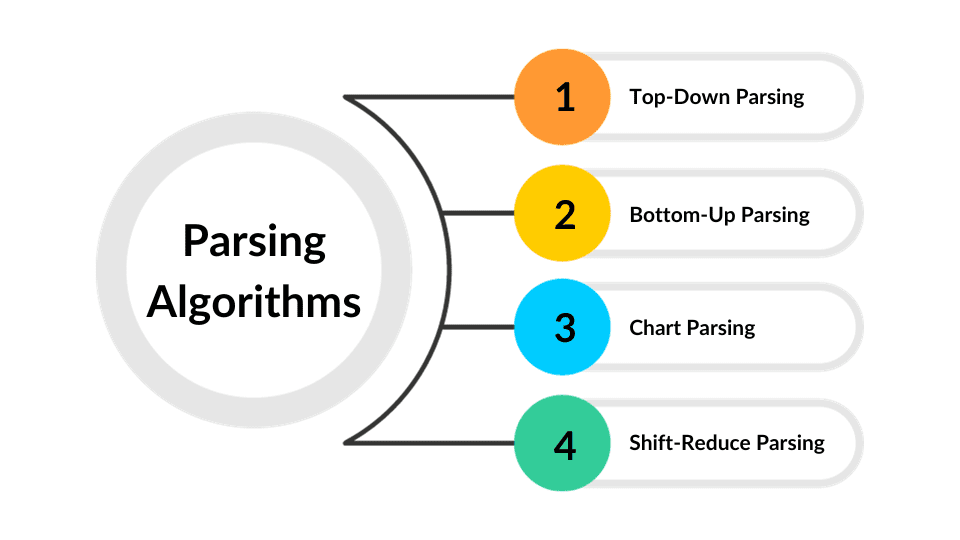

Several types of parsing techniques are used in NLP, depending on the specific goals and complexity of the task at hand. Below are the two most common types:

1. Syntactic Parsing: Syntactic parsing is the process of determining the syntactic structure of a sentence. The goal is to figure out how the words in a sentence relate to one another according to grammar rules. This is typically done by creating a parse tree in which words and phrases are connected by grammatical relations (e.g., subject, predicate, object).

2. Semantic Parsing: Semantic parsing goes further than syntactic parsing by focusing on the sentence’s meaning rather than its structure. It seeks to map a sentence into a formal representation of its meaning, often using logic, database queries, or knowledge graphs. This allows machines to capture not only the syntax of the text but also its intent and underlying meaning.
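As a rough illustration of what mapping a sentence into a formal representation can look like, the sketch below turns two kinds of questions into small logical forms. Everything here is hypothetical: the `RULES` table, the predicate names `flight` and `capital_of`, and the function `to_logical_form` are invented for this example, and hand-written patterns stand in for the learned models that real semantic parsers typically use.

```python
import re

# Hypothetical (pattern, template) pairs for a toy domain.
# Each capture group fills one argument of the logical form.
RULES = [
    (
        re.compile(r"(?:show|list|find) flights from (\w+) to (\w+)", re.IGNORECASE),
        "flight(origin={0}, destination={1})",
    ),
    (
        re.compile(r"what is the capital of (\w+)", re.IGNORECASE),
        "capital_of({0})",
    ),
]


def to_logical_form(sentence):
    """Map a sentence to a logical-form string, or None if no rule applies."""
    text = sentence.strip().rstrip("?.!")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(*match.groups())
    return None


print(to_logical_form("Show flights from Boston to Denver"))
print(to_logical_form("What is the capital of France?"))
```

The output strings could then be handed to a database or reasoning component, which is the step that separates semantic parsing from purely structural analysis.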

Syntactic Parsing vs Semantic Parsing

While both syntactic and semantic parsing are concerned with understanding a sentence, they differ significantly in their goals and approaches:

Syntactic Parsing:

- Focuses on the structure of the sentence.

- Analyzes how words in a sentence are related syntactically based on grammar rules.

- Produces a parse tree, visually representing the hierarchical relationship between words and phrases.

- Examples include tasks like part-of-speech tagging and constituency parsing.

Semantic Parsing:

- Focuses on the meaning of the sentence.

- It goes beyond the structure and attempts to interpret the sentence’s meaning formally or logically.

- Produces representations such as logical forms, graphs, or other semantic representations.

- Examples include tasks like question answering, machine translation, and intent detection.

While syntactic parsing recovers the grammatical structure of a sentence, semantic parsing captures the deeper meaning behind the text.

Dependency Parsing and Its Applications

Dependency parsing is a type of syntactic parsing where the structure of a sentence is represented as a set of dependencies between words. In dependency parsing, each word in the sentence is connected to another word on which it depends. For example, in the sentence “The cat chased the mouse,” “chased” is the main verb, and “cat” (its subject) and “mouse” (its object) are its dependents.
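The example sentence can be written down as data to show what a dependency structure contains. This is a minimal sketch: the `Token` class and the helpers `root` and `dependents` are names made up for this illustration, the relation labels follow the Universal Dependencies convention (`nsubj`, `obj`, `det`) as an assumption, and the arcs are annotated by hand rather than produced by a parser.

```python
from dataclasses import dataclass


@dataclass
class Token:
    index: int     # 1-based position in the sentence
    text: str
    head: int      # index of the word this token depends on; 0 marks the root
    relation: str  # dependency label


# Hand-annotated parse of "The cat chased the mouse" (not parser output).
sentence = [
    Token(1, "The", 2, "det"),
    Token(2, "cat", 3, "nsubj"),
    Token(3, "chased", 0, "root"),
    Token(4, "the", 5, "det"),
    Token(5, "mouse", 3, "obj"),
]


def root(tokens):
    """Return the token that depends on no other word."""
    return next(token for token in tokens if token.head == 0)


def dependents(tokens, head):
    """Return the tokens that depend directly on `head`."""
    return [token for token in tokens if token.head == head.index]


main_verb = root(sentence)
print(main_verb.text, [(t.text, t.relation) for t in dependents(sentence, main_verb)])
# chased [('cat', 'nsubj'), ('mouse', 'obj')]
```

Because every word stores only the index of its head, the same structure works regardless of where the words appear in the sentence.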

Applications of Dependency Parsing:

- Machine Translation: Helps translate text by preserving the syntactic relationships between words.

- Information Extraction: Identifies relationships between entities, such as who did what to whom.

- Sentiment Analysis: Detects sentiment by examining relationships between words, particularly verbs and adjectives.

- Question Answering: Determines the dependencies between entities and actions in questions to derive accurate answers.
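The information extraction case, finding who did what to whom, can be sketched directly on top of dependency arcs. The example below is an illustration under stated assumptions: the parse of “Alice founded Acme and Bob joined Acme” is hand-annotated with Universal Dependencies style labels, and `extract_triples` is a hypothetical helper that only handles plain subject-verb-object clauses.

```python
# Each token is (text, head_position, relation).
# Positions are 1-based and head 0 marks the root.
# Hand-annotated parse of "Alice founded Acme and Bob joined Acme".
parse = [
    ("Alice", 2, "nsubj"),
    ("founded", 0, "root"),
    ("Acme", 2, "obj"),
    ("and", 6, "cc"),
    ("Bob", 6, "nsubj"),
    ("joined", 2, "conj"),
    ("Acme", 6, "obj"),
]


def extract_triples(tokens):
    """Return (subject, verb, object) triples for verbs that have both."""
    subjects, objects = {}, {}
    for text, head, relation in tokens:
        if relation == "nsubj":
            subjects[head] = text
        elif relation == "obj":
            objects[head] = text
    triples = []
    for position, (text, _head, _relation) in enumerate(tokens, start=1):
        if position in subjects and position in objects:
            triples.append((subjects[position], text, objects[position]))
    return triples


print(extract_triples(parse))
# [('Alice', 'founded', 'Acme'), ('Bob', 'joined', 'Acme')]
```

A production system would also need to handle passives, pronouns, and multi-word entity names, which this sketch deliberately ignores.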

Dependency parsing is particularly useful for languages with free or relatively flexible word order, like Latin or German, where the position of words in a sentence can vary, but their grammatical relationships remain constant.

Constituency Parsing in NLP

Constituency parsing, also known as phrase structure parsing, is a technique that breaks down a sentence into its constituent parts, typically noun phrases (NP), verb phrases (VP), and prepositional phrases (PP). It aims to represent the syntactic structure of a sentence as a tree, where each node corresponds to a grammatical unit (such as a phrase), and the edges show which smaller units each larger unit contains.

For example, in the sentence “The cat chased the mouse,” a constituency parse tree would break down the sentence into its constituent parts:

- “The cat” (NP)

- “chased the mouse” (VP), consisting of the verb “chased” and the noun phrase below

- “the mouse” (NP)

Constituency parsing is more commonly used in traditional grammar-based approaches and is helpful for syntactic structure analysis and language generation tasks.
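One classic way to build such a tree from grammar rules is the CKY algorithm, sketched below for the example sentence. The toy grammar, the lexicon, and the function name `cky_parse` are assumptions made for this illustration. The grammar is in Chomsky normal form (every rule has exactly two children) and the sketch keeps only one tree per label and span, so it is a simplified recognizer-with-tree rather than a full parser.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form: (left child, right child) -> parent.
BINARY = {("NP", "VP"): "S", ("DT", "NN"): "NP", ("VBD", "NP"): "VP"}
# Toy lexicon: word -> part-of-speech tag.
LEXICON = {"the": "DT", "cat": "NN", "mouse": "NN", "chased": "VBD"}


def cky_parse(words):
    """Return one constituency tree rooted in S, or None if none exists."""
    n = len(words)
    # chart[(i, j)] maps a label to one tree covering words[i:j].
    chart = defaultdict(dict)
    for i, word in enumerate(words):
        tag = LEXICON.get(word.lower())
        if tag is None:
            return None  # unknown word: this toy lexicon cannot handle it
        chart[(i, i + 1)][tag] = (tag, word)
    # Combine adjacent spans, shortest first.
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):
                for left_label, left_tree in chart[(i, k)].items():
                    for right_label, right_tree in chart[(k, j)].items():
                        parent = BINARY.get((left_label, right_label))
                        if parent:
                            chart[(i, j)][parent] = (parent, left_tree, right_tree)
    return chart[(0, n)].get("S")


tree = cky_parse("The cat chased the mouse".split())
print(tree)
```

For the example sentence the result groups “The cat” under NP and “chased the mouse” under VP, with the object NP nested inside the verb phrase.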

Role of Parsing in Chatbots and AI

Parsing enables chatbots and AI systems to understand and respond to human queries. By parsing user inputs, chatbots can identify the key elements, such as the intent, entities, and relationships within the sentence, essential for providing accurate and relevant responses.

For example:

- In a chatbot, parsing can help identify user intents like “order a pizza” or “find a restaurant,” allowing the system to respond appropriately.

- In AI systems, parsing helps extract useful information from natural language inputs, such as instructions, commands, or queries. This information can then be processed to trigger appropriate actions.

Parsing ensures that AI systems can go beyond keyword matching and instead understand the grammatical structure and context of the input, making interactions with the system more natural and efficient.
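As a small illustration of going beyond keyword matching, the sketch below reads an intent and its details off a dependency parse instead of scanning for trigger words. The parse of “Please order a large pizza” is hand-annotated, and the `INTENTS` table, the intent names, and the function `interpret` are hypothetical names invented for this example; a real chatbot would obtain the parse from a library and usually use a trained intent classifier.

```python
# Hand-annotated parse: (text, head_position, relation), 1-based, head 0 = root.
parse = [
    ("Please", 2, "discourse"),
    ("order", 0, "root"),
    ("a", 5, "det"),
    ("large", 5, "amod"),
    ("pizza", 2, "obj"),
]

# Hypothetical mapping from (verb, object) pairs to intent names.
INTENTS = {
    ("order", "pizza"): "order_food",
    ("find", "restaurant"): "search_restaurant",
}


def interpret(tokens):
    """Derive an intent, the requested item, and its modifiers from a parse."""
    root_pos = next(pos for pos, (_, head, _) in enumerate(tokens, 1) if head == 0)
    verb = tokens[root_pos - 1][0].lower()
    for pos, (text, head, relation) in enumerate(tokens, 1):
        if head == root_pos and relation == "obj":
            modifiers = [t for t, h, r in tokens if h == pos and r == "amod"]
            intent = INTENTS.get((verb, text.lower()), "unknown")
            return {"intent": intent, "item": text, "modifiers": modifiers}
    return {"intent": "unknown", "item": None, "modifiers": []}


print(interpret(parse))
# {'intent': 'order_food', 'item': 'pizza', 'modifiers': ['large']}
```

Because the object and its adjective are found through the parse, “large” is attached to “pizza” by structure rather than by word proximity.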

Popular Parsing Tools and Libraries

Several libraries and tools are available to help implement parsing in NLP applications. Some of the most popular ones include:

- spaCy: A fast and efficient NLP library with pre-trained pipelines for several languages. It supports dependency parsing out of the box, while constituency parsing is available through extensions such as Benepar.

- NLTK (Natural Language Toolkit): A comprehensive Python library that provides tools for text processing, including parsers for both dependency and constituency parsing. NLTK is widely used for educational purposes.

- Stanford NLP: Developed by the Stanford NLP Group, this toolkit provides a suite of NLP tools, including dependency parsing, part-of-speech tagging, and named entity recognition. It is available for Java (Stanford CoreNLP) and for Python (Stanza).

- AllenNLP: A library built on PyTorch that provides tools for deep learning-based NLP, including dependency parsing and semantic tasks such as semantic role labeling.

- Benepar: The Berkeley Neural Parser, a library for neural network-based constituency parsing. It is useful when an application needs full phrase structure trees, and it can be used together with spaCy or NLTK.

These tools provide powerful parsing functionalities, allowing NLP practitioners to choose the best method for their specific use case.

Challenges in NLP Parsing

Despite its importance, NLP parsing faces several challenges that affect its performance and accuracy:

- Ambiguity: Natural languages often have ambiguous structures. For example, “I saw the man with the telescope” has two readings: either the speaker used the telescope to see the man, or the man was the one holding the telescope.

- Complex Sentence Structures: Sentences can be long and complex, with various clauses and modifiers. Parsing such sentences accurately requires handling syntactic and semantic complexity.

- Language Variability: Different languages have different syntactic rules, word orders, and structures. A parsing system that works well for one language may not perform as effectively for others.

- Out-of-Vocabulary Words: NLP parsing systems depend on predefined vocabularies and models. Words not present during training may cause errors or inefficiencies in parsing.

- Lack of Annotated Data: Large datasets with accurate syntactic annotations are required to train high-quality parsing models. Annotating data manually can be resource-intensive and expensive.
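The ambiguity challenge in the list above can be made measurable by counting how many distinct trees a grammar allows for a sentence. The sketch below does this with a counting variant of the CKY algorithm. The toy grammar, the lexicon (which treats “I” directly as a noun phrase), and the function name `count_parses` are assumptions for illustration only.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form: (parent, left child, right child).
RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # the PP modifies the verb phrase
    ("NP", "NP", "PP"),   # the PP modifies the noun phrase
    ("NP", "DT", "N"),
    ("PP", "P", "NP"),
]
LEXICON = {"i": "NP", "saw": "V", "the": "DT", "man": "N", "telescope": "N", "with": "P"}


def count_parses(words, goal="S"):
    """Count the distinct parse trees the toy grammar assigns to `words`."""
    n = len(words)
    # counts[(i, j)][label] = number of trees with that label over words[i:j].
    counts = defaultdict(lambda: defaultdict(int))
    for i, word in enumerate(words):
        counts[(i, i + 1)][LEXICON[word.lower()]] += 1
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):
                for parent, left, right in RULES:
                    counts[(i, j)][parent] += counts[(i, k)][left] * counts[(k, j)][right]
    return counts[(0, n)][goal]


print(count_parses("I saw the man with the telescope".split()))  # 2
print(count_parses("I saw the man".split()))                     # 1
```

The two trees correspond to attaching “with the telescope” to the verb phrase or to “the man”. Statistical and neural parsers address this by scoring the candidate structures and returning the most probable one.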

Parsing in Different Programming Languages

Parsing can be implemented in different programming languages, each with its own tools and libraries. Popular languages used for parsing tasks include:

- Python: Python has become the dominant language for NLP tasks due to its simplicity and the availability of libraries like spaCy, NLTK, and AllenNLP.

- Java: Java has tools like Stanford NLP and the Apache OpenNLP library, which are widely used for parsing tasks in enterprise-level applications.

- C++: For performance-critical tasks, C++ can be used to implement parsing algorithms, though it is less common in NLP compared to Python and Java.

- R: While not as widely used as Python for NLP tasks, R has libraries like tm and text for text mining and analysis, including basic parsing tasks.

Future Trends in NLP Parsing

NLP parsing is evolving rapidly with advances in deep learning, neural networks, and transformer-based models like BERT and GPT. Here are some key trends to watch for:

- Neural Parsing Models: Deep learning models, such as those based on transformers, are expected to improve the accuracy and efficiency of parsing. These models can learn complex patterns in large datasets, enabling better handling of ambiguities and complex sentence structures.

- Cross-Lingual Parsing: As NLP continues to globalize, the importance of cross-lingual parsing will rise. Techniques that enable parsing multiple languages simultaneously or transfer knowledge between languages will enhance the ability of parsing systems to manage diverse linguistic structures.

- End-to-end NLP Models: Models such as BERT and GPT are increasingly used for end-to-end NLP tasks. These models could perform parsing implicitly as part of larger tasks such as question answering or machine translation, reducing the need for separate parsing steps.

- Low-Resource Language Parsing: There is a growing focus on parsing for low-resource languages with limited annotated data. Advances in transfer learning and unsupervised learning will help improve parsing for these languages.

Conclusion and Key Takeaways

Parsing is a crucial component of Natural Language Processing (NLP) that enables machines to understand the structure and meaning of human language. Through syntactic and semantic parsing techniques, machines can analyze sentences, extract relationships, and derive insights from text. With the rise of deep learning and advanced models, the future of NLP parsing holds great promise in enhancing machine understanding of complex linguistic structures across various languages.

As NLP evolves, parsing techniques will become more sophisticated, enabling more accurate and context-aware applications like chatbots, AI assistants, machine translation, and information extraction. The challenges in NLP parsing, such as ambiguity and language variability, will continue to drive research and innovation.