- Introduction to Data Engineering Projects

- Beginner-Level Projects

- Intermediate-Level Projects

- Advanced-Level Projects

- ETL Pipeline Project

- Data Warehouse Project

- Big Data Processing with Spark

- Cloud-Based Data Engineering Projects

Introduction to Data Engineering Projects

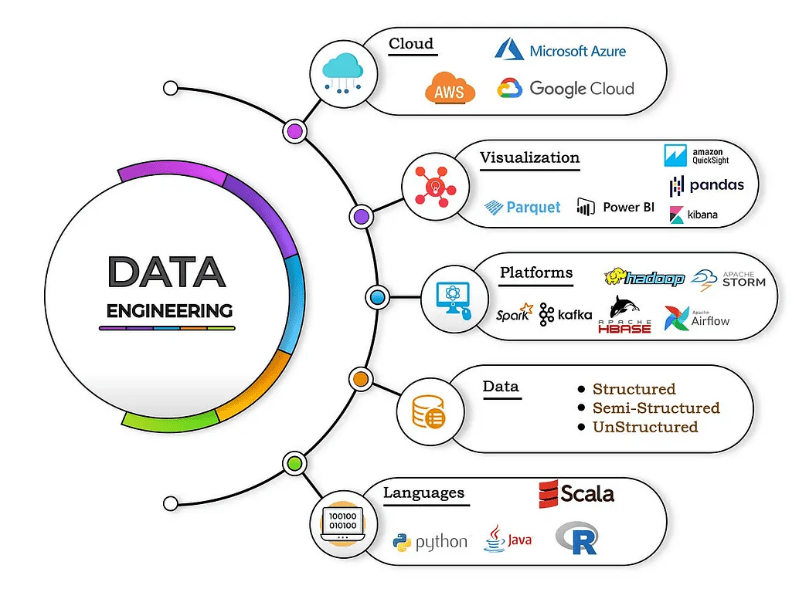

Data engineering is a vital part of the data ecosystem, focused on designing, building, and managing the infrastructure needed for data collection, storage, transformation, and analysis. Data engineers collaborate closely with data scientists, analysts, and software engineers to ensure data is accessible, reliable, and optimized for various analytics and machine learning applications. Projects in data engineering can range from simple tasks like creating ETL pipelines to more complex ones involving real-time data processing or working with big data frameworks such as Apache Spark. These projects require knowledge of multiple tools and technologies including SQL, Python, Hadoop, Spark, and cloud platforms like AWS, Microsoft Azure, and Google Cloud, which are often covered in Data Science Training. Understanding the complexity of different projects helps in planning and execution, as well as showcasing skills effectively. Simple projects might focus on batch processing and data cleaning, while advanced ones could involve streaming data pipelines, data lakes, and machine learning model deployment. In this guide, we will explore various types of data engineering projects categorized by their complexity and offer tips on how to present them professionally in your portfolio, helping you demonstrate your expertise and attract potential employers or clients.

Are You Interested in Learning More About Data Science? Sign Up For Our Data Science Course Training Today!

Beginner-Level Projects

Beginner-level data engineering projects are ideal for those new to the field and looking to build foundational skills. These projects typically focus on understanding core concepts such as data extraction, transformation, and loading (ETL), as well as basic data cleaning and integration. A common beginner project might involve creating simple ETL pipelines that extract data from CSV files or APIs, transform it by cleaning or aggregating, and load it into a relational database like MySQL or PostgreSQL as part of a Big Data Solution. These projects help learners get hands-on experience with SQL for querying databases, and Python or other scripting languages for automation. Another beginner-level task might include working with small datasets to practice data validation, handling missing values, and formatting data correctly.

Beginners often use tools like Jupyter notebooks, Pandas, and basic shell scripting to complete these tasks. Cloud platforms such as AWS or Google Cloud offer free tiers that allow learners to experiment with storage and basic compute resources, giving exposure to cloud-based workflows. These projects emphasize understanding data workflows, managing data quality, and automating routine tasks. Successfully completing beginner-level projects lays a solid foundation for tackling more complex data engineering challenges in the future.

Intermediate-Level Projects

- Multi-Source ETL Pipelines: Build ETL pipelines that extract, transform, and load data from various structured and semi-structured sources such as relational databases, APIs, and flat files, ensuring efficient data integration.

- Data Warehousing: Design and manage data warehouses using platforms like Snowflake, Amazon Redshift, or Google BigQuery, including tasks like schema creation, indexing, and partitioning for performance.

- Data Modeling: Create logical and physical data models using star or snowflake schemas to support analytics and business reporting, ensuring consistency and data integrity.

- Workflow Orchestration: Implement job scheduling and orchestration using tools like Apache Airflow or Prefect to automate workflows, manage task dependencies, and monitor pipeline execution, as outlined in the Project Report Format.

- Data Quality and Validation: Establish data quality checks and validation rules to detect anomalies, handle missing values, and maintain accurate and reliable datasets throughout the pipeline.

- Cloud Integration: Deploy data solutions on cloud platforms like AWS or Azure, utilizing services such as S3, Lambda, Azure Data Factory, and cloud-based storage for scalability and reliability.

- Dashboard and BI Integration: Deliver clean, transformed data to business intelligence tools like Power BI or Tableau to enable interactive dashboards and data-driven decision-making.

- Complex Data Pipelines: Advanced projects often involve designing end-to-end data pipelines that handle large-scale, multi-source data ingestion, incorporating batch and real-time processing.

- Real-Time Data Processing: Building streaming data applications using technologies like Apache Kafka, Apache Flink, or Spark Streaming enables immediate data transformation and analytics for time-sensitive insights.

- Big Data Frameworks: Working with big data platforms such as Apache Hadoop and Apache Spark to process and analyze massive datasets efficiently is a key component of advanced projects in Data Science Training.

- Cloud-Native Architectures: Leveraging cloud services (AWS, Azure, GCP) to build scalable, resilient, and cost-effective data infrastructure, including serverless compute, data lakes, and orchestration tools.

- Data Governance and Security: Implementing robust data governance frameworks, including data lineage, auditing, encryption, and access controls, to ensure data quality, compliance, and security.

- Machine Learning Pipelines: Integrating data engineering workflows with machine learning model training, deployment, and monitoring pipelines for predictive analytics and automation.

- Optimization and Scalability: Focusing on optimizing performance, resource utilization, and scalability of data systems by tuning cluster configurations, query optimization, and cost management.

- Objective: The main objective is to create a centralized data repository that consolidates data from multiple sources to support efficient analysis and reporting.

- Data Extraction: Data is extracted from diverse systems such as transactional databases, ERP, CRM, and external sources to gather relevant business information.

- Data Transformation: Extracted data undergoes cleaning, validation, and transformation to ensure consistency, remove duplicates, and conform to the warehouse schema.

- Schema Design: Designing an optimized schema, often using star or snowflake models, facilitates fast querying and simplifies complex analytical queries, which is a crucial part of a Business Plan.

- ETL Development: Robust ETL (Extract, Transform, Load) pipelines are developed to automate data ingestion and processing, ensuring that data remains up-to-date.

- Storage Selection: Choosing the right storage platform on-premises or cloud-based solutions like Amazon Redshift or Google BigQuery impacts performance and scalability.

- Analytics and Reporting: The data warehouse integrates with business intelligence tools such as Tableau or Power BI, enabling users to generate insights, build dashboards, and make informed decisions.

To Explore Data Science in Depth, Check Out Our Comprehensive Data Science Course Training To Gain Insights From Our Experts!

Advanced-Level Projects

ETL Pipeline Project

An ETL pipeline project is a fundamental data engineering task that involves extracting data from various sources, transforming it to meet business needs, and loading it into a target system such as a data warehouse or database. In this type of project, data is first extracted from sources like APIs, CSV files, or databases. The transformation phase involves cleaning the data by handling missing values, filtering out duplicates, and converting data formats to ensure consistency. Additional transformations might include aggregating data, calculating new metrics, or joining datasets from different sources to create a unified view, crucial for Using Big Data and AI to Fight COVID19 Pandemic. The final step is loading the processed data into a destination system, often a relational database or a cloud data warehouse like Amazon Redshift or Google BigQuery. Throughout the project, automation is key scripting the ETL process using tools such as Python, Apache Airflow, or Talend ensures the pipeline runs efficiently and reliably. Monitoring and logging are also crucial to detect failures or data quality issues early. An ETL pipeline project provides hands-on experience with data processing workflows and is essential for building scalable, maintainable data infrastructure that supports analytics and reporting. Completing such a project demonstrates practical skills valuable in real-world data engineering roles.

Gain Your Master’s Certification in Data Science by Enrolling in Our Data Science Masters Course.

Data Warehouse Project

Big Data Processing with Spark

Big data processing with Apache Spark is a powerful project for data engineers working with large-scale datasets. Spark is an open-source distributed computing system designed to process massive amounts of data quickly and efficiently by leveraging in-memory computation. In this type of project, data engineers work on ingesting data from sources like Hadoop Distributed File System (HDFS), cloud storage, or real-time streams. Spark’s core APIs allow processing data in batch or streaming modes, making it ideal for various use cases such as log analysis, recommendation systems, and fraud detection. The project typically involves writing Spark applications using languages like Scala, Python (PySpark), or Java in Jetbrains Com Pycharm to perform complex transformations, aggregations, and machine learning tasks. Spark’s ability to handle large volumes of data with fault tolerance and scalability helps engineers build robust pipelines that can scale with growing data demands. Additionally, integrating Spark with other big data tools like Hive, Kafka, or Delta Lake enhances its functionality for data warehousing and real-time analytics. Completing a big data processing project using Spark demonstrates proficiency in distributed computing, parallel processing, and advanced data engineering skills, which are highly sought after in industries dealing with large and fast-moving datasets.

Are You Preparing for Data Science Jobs? Check Out ACTE’s Data Science Interview Questions & Answer to Boost Your Preparation!

Cloud-Based Data Engineering Projects

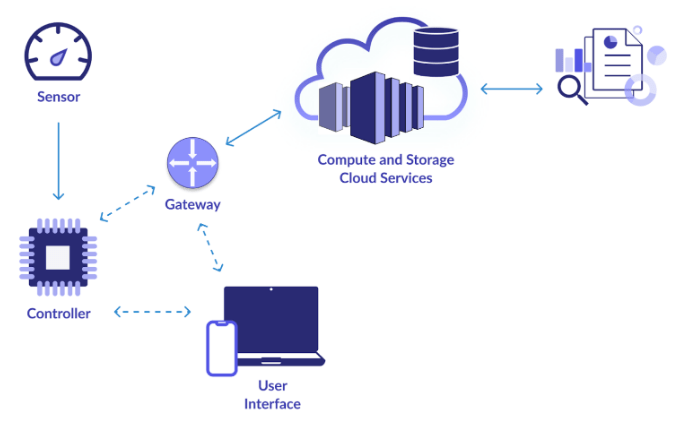

Cloud-based data engineering projects leverage the flexibility, scalability, and managed services offered by cloud platforms such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). These projects typically involve designing and implementing data pipelines that collect, process, and store data using cloud-native tools. For example, engineers might use AWS services like S3 for storage, Glue for ETL, and Redshift for data warehousing, or GCP’s BigQuery combined with Dataflow for real-time processing. Cloud projects in Data Science Training often focus on automating workflows using serverless computing and orchestration tools such as AWS Lambda or Google Cloud Functions, along with scheduling tools like Apache Airflow. One key advantage is the ability to scale resources on demand, which is essential when working with variable data volumes or spikes in traffic. Security and compliance are also integral, with cloud platforms providing built-in features for data encryption, identity management, and access controls. Cloud-based projects offer hands-on experience with modern data architecture, including data lakes, data warehouses, and streaming analytics. Successfully completing such projects showcases skills in cloud computing, infrastructure as code, and cost-effective resource management, making candidates highly valuable for organizations adopting cloud-first strategies.