- Introduction to Artificial Intelligence

- Basics of Python and R for AI

- Fundamentals of Machine Learning

- Supervised and Unsupervised Learning

- Deep Learning Techniques

- Natural Language Processing (NLP)

- Computer Vision Concepts

- Neural Networks and Their Applications

- AI Tools and Frameworks

- Real-World AI Projects

- AI Ethics and Bias Mitigation

- Certification and Final Assessment

Introduction to Artificial Intelligence

Artificial Intelligence (AI) refers to the simulation of human intelligence in machines designed to think, reason, learn, and act in ways that resemble human cognition. As a multidisciplinary domain, AI integrates elements from computer science, mathematics, statistics, data science, cognitive science, and neuroscience to engineer systems capable of performing tasks that typically demand human intellect. These tasks include understanding natural language, recognizing complex patterns, solving intricate problems, and making informed decisions. The overarching objective of AI is to create systems that not only mimic human intelligence but can also operate independently in dynamic environments.

AI can be broadly classified into three main categories: Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI), and Artificial Superintelligence (ASI). ANI, also known as weak AI, is designed for specific tasks and is the most prevalent form in current use; it powers technologies like voice assistants, personalized recommendation engines, facial recognition systems, and autonomous vehicles. AGI, or strong AI, represents a more advanced stage where machines could perform any intellectual task that a human can, demonstrating generalized cognitive abilities. ASI, the most speculative form, refers to AI systems that would exceed human intelligence across all domains, including creativity, problem-solving, and emotional intelligence.

AGI and ASI remain theoretical or in early research stages, so the majority of today’s AI innovations and real-world applications revolve around ANI. These advancements continue to reshape industries such as healthcare, finance, transportation, and education, changing the way we live and interact with technology.

Basics of Python and R for AI

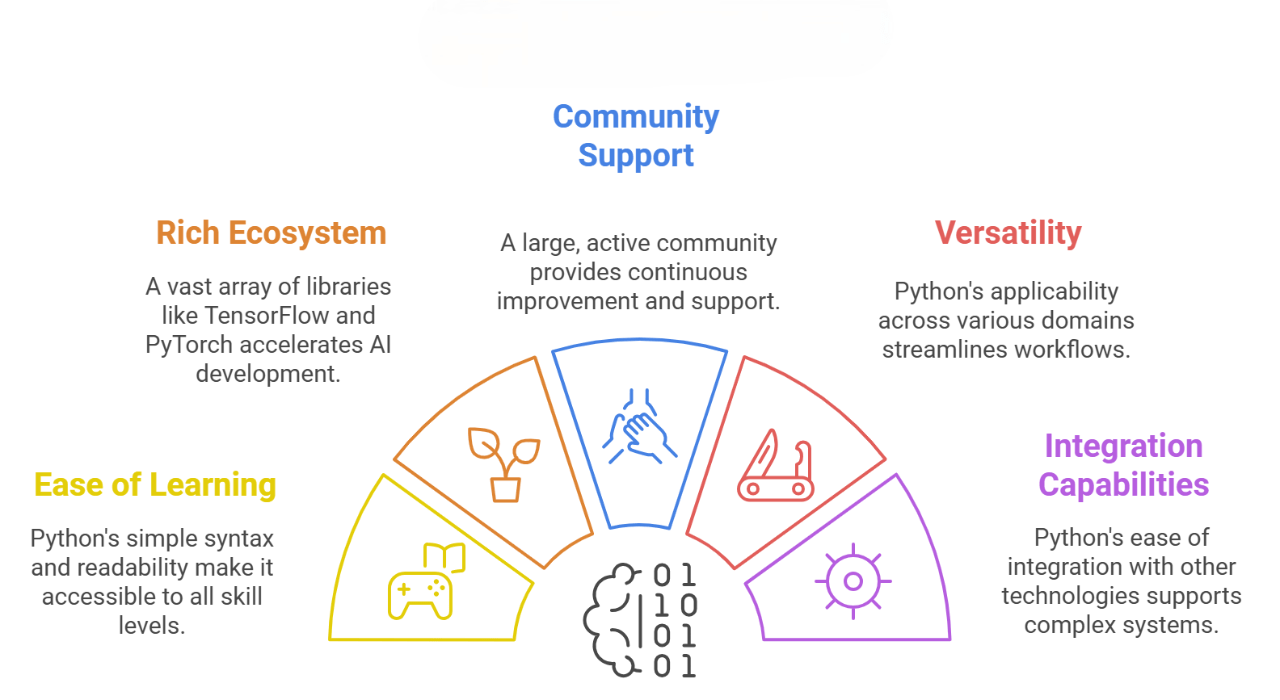

Python for AI Development

Python is one of the most widely adopted programming languages in AI development, primarily due to its simplicity, readability, and extensive library ecosystem. Its clean syntax makes it a good choice for both beginners and experienced developers, allowing for fast prototyping and efficient coding. Python’s large and active community contributes to its ongoing growth, so developers have access to a wealth of resources, tutorials, and troubleshooting advice.

Python’s rich set of libraries is another reason for its popularity in AI applications. NumPy provides robust functionality for numerical computing and array handling, making it a go-to library for many AI tasks. Pandas excels at data manipulation and analysis, offering efficient tools for working with structured data. For data visualization, libraries like Matplotlib can generate a wide variety of plots and charts. Scikit-learn, a library for classical machine learning, offers simple and efficient tools for data mining and data analysis, enabling developers to build predictive models and run a range of machine learning algorithms. Python also works with deep learning frameworks such as TensorFlow and Keras, which let developers create and train complex neural networks. As AI grows in importance across industries, Python remains at the forefront of the field thanks to its versatility and its resources for machine learning, deep learning, and other AI-related tasks.

R for AI Development

R is another prominent programming language used in AI development, particularly for tasks that require advanced statistical analysis and data visualization. Its roots in statistics make R a strong choice for data-driven applications, providing a wide range of tools designed for exploring and analyzing complex datasets. One of the most celebrated features of R is its extensive ecosystem of packages. For data visualization, ggplot2 stands out, offering a powerful and flexible framework for creating informative graphs and charts. dplyr, a data manipulation package, provides intuitive functions for filtering, summarizing, and transforming datasets. The caret package streamlines the process of building, evaluating, and tuning machine learning models across a range of algorithms.

While Python dominates much of the AI landscape, R is still highly valued when the focus is on statistical modeling or exploratory data analysis. Like Python, R has interfaces to deep learning frameworks, including TensorFlow and Keras, so it remains a viable option for more complex AI applications. Its strong focus on data exploration and visualization makes R a useful complement to Python in AI and machine learning workflows.

Fundamentals of Machine Learning

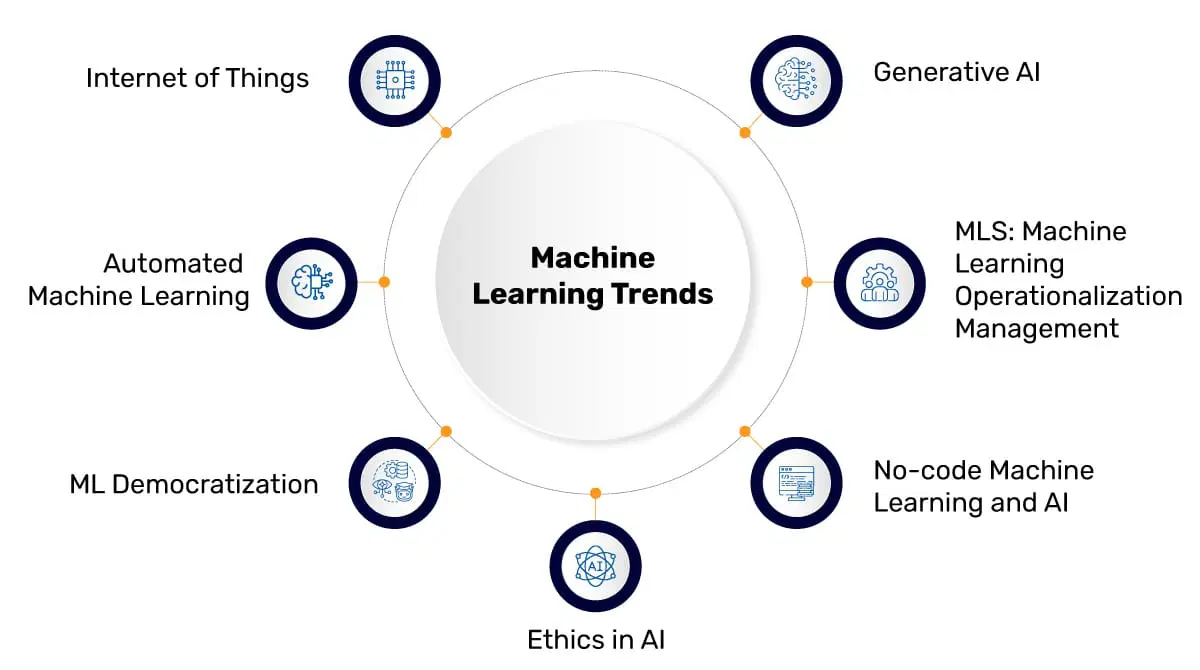

Machine Learning (ML) is a branch of Artificial Intelligence (AI) that allows systems to learn from data and improve their performance without being explicitly programmed for each task. Using algorithms, ML systems can identify patterns, make predictions, and automate decision-making processes. The process typically involves several stages: data collection, preprocessing, model selection, training, evaluation, and deployment. ML plays a significant role in various industries, from improving customer service with chatbots to enhancing healthcare outcomes through predictive models.

Key Steps in Machine Learning

- Data Collection: The first step in ML is gathering relevant data, which serves as the foundation for building and training the model.

- Preprocessing: Raw data often requires cleaning and transformation to be used effectively in machine learning models. This step ensures that the data is in the right format and free of errors.

- Model Selection: Choosing the right machine learning model is essential. Different models (e.g., decision trees, neural networks) are suited for different types of data and tasks.

- Training: During the training phase, the model is fed data and learns to make predictions or decisions based on that data. This step involves adjusting the model’s parameters to optimize performance.

- Evaluation: Once trained, the model is evaluated using new data to assess its accuracy and ability to generalize to real-world scenarios.

- Deployment: After evaluation, the model is deployed into production environments where it can make decisions or predictions on live data.

Types of Machine Learning

- Supervised Learning: In supervised learning, models are trained on labeled data, where the input data is paired with the correct output. The model learns to map inputs to their corresponding outputs, making it ideal for tasks like classification and regression.

- Unsupervised Learning: Unlike supervised learning, unsupervised learning works with unlabeled data. The algorithm tries to find hidden patterns or groupings within the data without predefined labels. This is often used for clustering and anomaly detection.

- Reinforcement Learning: In reinforcement learning, an agent interacts with an environment and learns to take actions that maximize a reward. Through trial and error, the agent improves its strategy to achieve the best possible outcome, which makes it useful in applications like robotics, gaming, and autonomous systems.
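
The key steps listed earlier (collection through deployment) can be sketched end to end in plain Python. This is a minimal illustration under stated assumptions, not a production workflow: the dataset is synthetic (generated from y = 3x + 2 with a little noise), the model is a one-feature linear regression trained by gradient descent, and names such as `raw`, `train`, and `predict` are hypothetical.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

# 1. Data collection: synthetic rows standing in for a real data source.
#    Assumed ground truth: y = 3x + 2 plus small Gaussian noise.
#    Every 25th target is missing to give preprocessing something to do.
raw = []
for i in range(100):
    x = i / 10
    y = None if i % 25 == 0 else 3.0 * x + 2.0 + random.gauss(0, 0.1)
    raw.append((x, y))

# 2. Preprocessing: drop rows with missing targets, then shuffle and split.
clean = [(x, y) for x, y in raw if y is not None]
random.shuffle(clean)
split = int(0.8 * len(clean))
train, test = clean[:split], clean[split:]

# 3. Model selection: a linear model y = w * x + b.
w, b = 0.0, 0.0

# 4. Training: batch gradient descent on mean squared error.
learning_rate = 0.01
for _ in range(5000):
    grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / len(train)
    grad_b = sum(2 * (w * x + b - y) for x, y in train) / len(train)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b


# 6. Deployment stand-in: a function that serves predictions for new inputs.
def predict(x):
    return w * x + b


# 5. Evaluation: mean squared error on the held-out rows only.
test_mse = sum((predict(x) - y) ** 2 for x, y in test) / len(test)

print(f"w={w:.2f} b={b:.2f} test MSE={test_mse:.4f}")
```

Because the test rows are never used during training, the reported error gives a rough sense of how the model generalizes; a real project would typically use a library such as Scikit-learn for these steps.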

Supervised and Unsupervised Learning

Supervised learning is a type of machine learning where a model is trained using a labeled dataset, meaning each training instance is paired with the correct output or label. The objective is for the model to learn the mapping between inputs and outputs so it can make predictions on new, unseen data. Widely used supervised learning algorithms include linear regression, which predicts a continuous output; logistic regression, often used for binary classification tasks; decision trees, which split data into branches based on feature values; support vector machines (SVM), which find the optimal hyperplane to separate data into different classes; and neural networks, which are inspired by the human brain and are highly effective for complex tasks like image and speech recognition.

Supervised learning is commonly applied in real-world scenarios such as email spam detection, where the model is trained to classify emails as spam or not; sentiment analysis, which determines the sentiment expressed in text (positive, negative, or neutral); and fraud detection, where the system learns to identify fraudulent transactions from labeled examples.

In contrast, unsupervised learning deals with datasets that do not have labeled responses, and the primary goal is to uncover hidden patterns or structures within the data. Since there are no predefined labels, unsupervised techniques focus on discovering relationships or groupings. Common methods include clustering, such as K-means and hierarchical clustering, which group similar data points together, and dimensionality reduction techniques like Principal Component Analysis (PCA) and t-SNE, which reduce the complexity of data while preserving important structure.

Unsupervised learning is frequently used for applications like customer segmentation, where businesses group customers based on similar behaviors or attributes; market basket analysis, which uncovers patterns in consumer purchasing habits; and anomaly detection, which identifies outliers or unusual patterns in data, such as fraud or network intrusions.
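
To make the clustering idea concrete, here is a small sketch of K-means written with the standard library only. It is an illustration under assumptions rather than a reference implementation: the customer data (monthly visits, average spend) is made up, the starting centroids are picked by hand instead of at random, and the function names are hypothetical.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two 2-D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def kmeans(points, centroids, max_iterations=10):
    """Plain K-means; returns the final centroids and each point's cluster index."""
    labels = []
    for _ in range(max_iterations):
        # Assignment step: attach every point to its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: squared_distance(p, centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                new_centroids.append((
                    sum(p[0] for p in members) / len(members),
                    sum(p[1] for p in members) / len(members),
                ))
            else:
                new_centroids.append(centroids[k])  # leave an empty cluster where it is
        if new_centroids == centroids:
            break  # centroids stopped moving, so the clustering has converged
        centroids = new_centroids
    return centroids, labels


# Hypothetical customers: (visits per month, average spend).
customers = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 9), (8.5, 9.5)]
centroids, labels = kmeans(customers, centroids=[(1, 1), (9, 9)])
print(labels)
```

No labels are supplied anywhere in this sketch; the two groups emerge only from the distances between points, which is the defining trait of unsupervised learning.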

Deep Learning Techniques

Deep Learning is a subset of machine learning that uses artificial neural networks with multiple layers (deep networks) to model complex patterns in data. It is particularly effective for handling large volumes of unstructured data such as images, audio, and text. Key techniques in deep learning include convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative adversarial networks (GANs).

CNNs are widely used for image and video recognition tasks, RNNs are suitable for sequential data like time series and natural language, and GANs are used to generate new data samples that resemble the training data. Deep learning frameworks such as TensorFlow, Keras, and PyTorch facilitate the development and training of deep learning models.

Natural Language Processing (NLP)

Natural Language Processing (NLP) is a specialized field within Artificial Intelligence (AI) that focuses on enabling machines to understand, interpret, and generate human language in a way that is both meaningful and contextually relevant. NLP merges computational linguistics with machine learning and deep learning techniques to process and analyze large amounts of language data. Its core goal is to bridge the gap between human communication and computer understanding, allowing machines to interact with humans in natural and intuitive ways.

Key tasks in NLP include text classification, which assigns text to predefined categories; sentiment analysis, which determines the sentiment behind a piece of text (positive, negative, or neutral); named entity recognition (NER), which identifies entities such as people, places, or organizations in text; machine translation, which automatically translates text from one language to another; and speech recognition, which converts spoken language into written text.

Tools, libraries, and models such as the Natural Language Toolkit (NLTK), spaCy, Hugging Face Transformers, and GPT models have significantly changed how NLP applications are built and executed. They provide robust frameworks for working with text data, with functionality to analyze, model, and generate human language.

In practical applications, NLP is used in chatbots and virtual assistants, which interact with users in natural language and provide information or assistance; search engines, where NLP improves search relevance by understanding user queries more effectively; and language translation systems, which facilitate communication across languages. By enabling machines to better understand and process human language, NLP has reshaped many industries, from customer service to content generation and information retrieval.
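
As a rough illustration of text classification and sentiment analysis, the sketch below trains a tiny Naive Bayes classifier on bag-of-words counts using only the standard library. Naive Bayes is chosen here for brevity and is not named in the text above; the six training sentences are invented, and `tokenize` and `classify` are hypothetical helper names. Libraries such as NLTK or spaCy would normally handle tokenization far more carefully.

```python
import math
from collections import Counter


def tokenize(text):
    """Very naive tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


# Invented training examples, for illustration only.
training_data = [
    ("great product loved it", "positive"),
    ("excellent service very happy", "positive"),
    ("loved the fast delivery", "positive"),
    ("terrible product broke quickly", "negative"),
    ("awful service very slow", "negative"),
    ("slow delivery and bad support", "negative"),
]

# Count how often each word appears under each label.
word_counts = {"positive": Counter(), "negative": Counter()}
doc_counts = Counter()
for text, label in training_data:
    doc_counts[label] += 1
    word_counts[label].update(tokenize(text))

vocabulary = {word for counts in word_counts.values() for word in counts}


def classify(text):
    """Return the label with the highest log-probability under Naive Bayes."""
    scores = {}
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        # Start from the log prior: the share of training documents with this label.
        score = math.log(doc_counts[label] / sum(doc_counts.values()))
        for word in tokenize(text):
            if word in vocabulary:  # words never seen in training are skipped
                # Add-one (Laplace) smoothing avoids zero probabilities.
                score += math.log((counts[word] + 1) / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


print(classify("loved the excellent product"))
print(classify("awful slow support"))
```

With so little data the classifier is only a toy, but the same count-then-score structure underlies many practical text classification baselines.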

Computer Vision Concepts

Computer Vision is an interdisciplinary field that enables computers to interpret and understand visual information from the world. It involves techniques for image processing, feature extraction, object detection, image classification, and video analysis. Key algorithms and models include edge detection, HOG features, CNNs, and YOLO (You Only Look Once).

Applications of computer vision span various domains such as healthcare (e.g., medical imaging), security (e.g., facial recognition), retail (e.g., inventory management), and autonomous vehicles (e.g., lane detection). Advances in hardware like GPUs and the availability of large labeled datasets have significantly contributed to the progress in computer vision.
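
Edge detection, mentioned above, can be sketched by sliding a small kernel over an image. The example below applies the horizontal Sobel kernel to a tiny hand-made grayscale image in plain Python. The image values and the `convolve2d` helper are assumptions made for illustration; real projects would typically rely on an image library. The helper computes cross-correlation (no kernel flip), which is also what CNN layers compute in practice.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[row + i][col + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for col in range(out_w)
        ]
        for row in range(out_h)
    ]


# Sobel kernel that responds to left-to-right changes in brightness.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Made-up 4x6 image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

edges = convolve2d(image, SOBEL_X)
for row in edges:
    print(row)  # each row is [0, 36, 36, 0]
```

The response is zero in the flat regions and large where the window straddles the dark-to-bright boundary, which is how the kernel localizes a vertical edge.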

Neural Networks and Their Applications

Neural networks are computational models inspired by the structure and function of the human brain. They consist of layers of interconnected nodes, often referred to as neurons, with each connection having an associated weight. Learning involves adjusting these weights to minimize the error between the predicted output and the actual result, so the network gradually improves its performance as it sees more data.

There are several types of neural networks, each designed for different tasks. Feedforward neural networks are the simplest: information moves in one direction, from input to output. Convolutional neural networks (CNNs) are primarily used for image data, as they are designed to detect patterns and features in visual input automatically and efficiently. Recurrent neural networks (RNNs) are designed for sequential data, where the output of previous steps influences subsequent steps, making them well suited to tasks like speech recognition and time-series forecasting.

Neural networks have found applications across a wide range of domains: image and speech recognition, where they enable machines to identify objects or transcribe spoken words; game playing, where they learn strategies and make decisions; and medical diagnosis, where they assist in detecting diseases from images or patient data. Neural networks are the backbone of deep learning, a subfield of machine learning, and are central to many modern AI systems, powering technologies such as autonomous vehicles, personal assistants, and recommendation systems.
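
The idea of adjusting weights to reduce error can be shown with the smallest possible case: a single neuron trained with the classic perceptron learning rule. This is a deliberately simplified stand-in for the gradient-based training (backpropagation) used in multi-layer networks, and the function names, learning rate, and AND-gate dataset are illustrative choices.

```python
def predict(weights, bias, inputs):
    """Fire (return 1) when the weighted sum of the inputs exceeds zero."""
    activation = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Perceptron rule: nudge each weight in proportion to the prediction error."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Logical AND: the output is 1 only when both inputs are 1.
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_GATE)
for inputs, target in AND_GATE:
    print(inputs, "->", predict(weights, bias, inputs), "(expected", target, ")")
```

A single neuron like this can only separate classes with a straight line; stacking neurons into layers and training them with backpropagation is what lets deeper networks model more complex patterns.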

AI Tools and Frameworks

A variety of tools and frameworks are available to facilitate AI development. TensorFlow and PyTorch are among the most widely used deep learning frameworks, providing flexible and scalable platforms for building AI models. Scikit-learn is widely used for traditional machine learning algorithms.

Other essential tools include Jupyter Notebooks for interactive coding, Google Colab for cloud-based development, and MLflow for model tracking and deployment. Additionally, AutoML tools help automate the process of model selection, training, and tuning, making AI accessible to non-experts.

Real-World AI Projects

Hands-on experience with real-world AI projects is crucial for understanding practical challenges and applications. Example projects include:

- Predicting customer churn using classification models

- Building a sentiment analysis tool using NLP

- Creating a facial recognition system using CNNs

- Developing a chatbot using sequence-to-sequence models

- Forecasting sales using time-series analysis

These projects help learners apply theoretical knowledge, gain experience with datasets, and understand the workflow of AI development from data preprocessing to model deployment.

AI Ethics and Bias Mitigation

As AI systems are increasingly integrated into critical decision-making processes, ethical considerations become paramount. Key issues include data privacy, algorithmic bias, transparency, accountability, and the impact on employment. AI systems can inadvertently learn and propagate biases present in training data, leading to unfair outcomes.

Mitigation strategies include careful dataset curation, fairness-aware algorithms, regular auditing, and interpretability tools like SHAP and LIME. Ethical AI development also involves complying with legal frameworks and industry standards to ensure responsible use of AI technologies.
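
One simple check that a regular audit might include is comparing how often a model gives a positive decision to different groups, sometimes called the demographic parity difference. The sketch below computes it in plain Python. The predictions and group labels are invented, the function names are hypothetical, and this single metric is only one of several fairness measures, so it should be read as an illustration and not a complete audit.

```python
def selection_rate(predictions):
    """Share of positive (1) decisions in a list of 0/1 predictions."""
    return sum(predictions) / len(predictions)


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest per-group selection rates."""
    by_group = {}
    for prediction, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(prediction)
    rates = {group: selection_rate(preds) for group, preds in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Invented model decisions (1 = approved) for two hypothetical groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates, round(gap, 2))
```

A gap near zero means the groups receive positive decisions at similar rates; a large gap, as in this made-up data, is a signal to investigate the training data and model further.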

Certification and Final Assessment

To validate the skills gained throughout the course, participants complete a comprehensive final assessment that includes multiple-choice questions, practical assignments, and a capstone project. This multi-faceted evaluation checks understanding of the key concepts, tools, and techniques used in AI. Upon successful completion, participants receive certification, which demonstrates their proficiency in various aspects of AI and can improve career prospects in high-demand fields such as data science, machine learning engineering, and AI research.

The assessment is also designed to identify areas for improvement, giving participants the opportunity to reflect on their strengths and weaknesses. It provides a foundation for further specialization in advanced areas of AI, such as reinforcement learning, AI applications in healthcare, or robotics. Completing the course marks not just an end point but the beginning of an ongoing journey into one of the most rapidly evolving areas of technology.