- Introduction to Data Collection

- Web Scraping for Automated Data Collection

- APIs for Real-Time Data Collection

- Sensor-Based and IoT Data Collection

- Ethical Considerations in Data Collection

- Data Cleaning and Preprocessing Techniques

- Storing and Managing Collected Data

- Conclusion

Introduction to Data Collection

Data collection is the structured process of gathering and measuring information from diverse sources to derive insights, support decision-making, and analyze trends. It is a foundational step in fields such as scientific research, business analytics, healthcare, and artificial intelligence. The purpose of data collection is to obtain accurate and relevant data that serves as the basis for analysis and interpretation. The process can involve both qualitative and quantitative data, gathered through methods ranging from surveys, interviews, and observations to sensors, databases, and web scraping.

Proper data collection is essential for ensuring the accuracy, reliability, and validity of the data, which in turn improves the quality of the conclusions drawn from any research or analysis. Inaccurate or biased data can lead to misleading results that adversely affect decision-making and strategy. Selecting appropriate collection methods, defining clear objectives, and maintaining consistency throughout the process are therefore vital. In industries like healthcare and AI, where decisions can have significant consequences, robust data collection practices are even more critical. Overall, data collection forms the backbone of analytical work, driving evidence-based conclusions and informed decisions across sectors.

Web Scraping for Automated Data Collection

Web scraping is the process of automatically extracting data from websites using specialized software or scripts. It has become a powerful tool for automated data collection, enabling users to gather large amounts of web data quickly and efficiently. Web scraping involves retrieving HTML pages and parsing them to extract relevant content such as text, images, tables, and links. Python libraries are commonly used for this: BeautifulSoup parses HTML documents, Scrapy provides a complete crawling framework, and Selenium automates a real browser to navigate complex or interactive pages. One of the primary advantages of web scraping is its ability to gather up-to-date data, which is valuable for applications like price monitoring, news aggregation, competitive analysis, and social media sentiment analysis.
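
To make the parsing step concrete, here is a minimal sketch that pulls links out of an HTML page. It uses Python's built-in `html.parser` module rather than BeautifulSoup so that it runs without extra packages; the sample HTML and the `LinkExtractor` / `extract_links` names are hypothetical, and downloading the page is left out.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects (href, link text) pairs from <a> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> tag currently open, if any
        self._text = []    # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html: str):
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical page content; a real scraper would download this first.
SAMPLE_HTML = """
<html><body>
  <h1>Price list</h1>
  <a href="/products/1">Widget</a>
  <p>In stock</p>
  <a href="/products/2">Gadget <b>Pro</b></a>
</body></html>
"""

print(extract_links(SAMPLE_HTML))
# [('/products/1', 'Widget'), ('/products/2', 'Gadget Pro')]
```

Text inside nested tags such as `<b>` is still collected, because `handle_data` records everything between the opening and closing `<a>` tags; BeautifulSoup offers the same result with far less code once installed.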

Scraping also raises ethical and practical concerns, as it may violate a website’s terms of service or overload its servers. To scrape responsibly, respect the site’s robots.txt file and implement rate limiting to avoid excessive requests. Web scraping can also struggle with dynamic content rendered by JavaScript, which requires advanced techniques such as headless browsing. Despite these challenges, web scraping remains a powerful and cost-effective method for collecting large-scale data from the web.
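
The robots.txt and rate-limiting advice can be sketched with the standard library's `urllib.robotparser`. This is a minimal illustration under stated assumptions: the robots.txt text is a hypothetical inline sample, `build_robot_parser` and `polite_crawl` are hypothetical helpers, and `fetch` is any caller-supplied function that downloads a URL.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. Against a live site you would instead call
# rp.set_url("https://example.com/robots.txt") followed by rp.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_robot_parser(robots_txt: str) -> RobotFileParser:
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


def polite_crawl(urls, fetch, rp, user_agent="example-bot", default_delay=1.0):
    """Fetch only URLs that robots.txt allows, pausing between requests.

    `fetch` is any callable that takes a URL and returns its content, so the
    HTTP client is left to the caller.
    """
    # Honour the site's Crawl-delay if it declares one, else use our own
    delay = rp.crawl_delay(user_agent) or default_delay
    results = {}
    last_request = None
    for url in urls:
        if not rp.can_fetch(user_agent, url):
            continue  # skip paths the site has asked crawlers to avoid
        if last_request is not None:
            wait = delay - (time.monotonic() - last_request)
            if wait > 0:
                time.sleep(wait)  # simple rate limiting
        last_request = time.monotonic()
        results[url] = fetch(url)
    return results


rp = build_robot_parser(ROBOTS_TXT)
pages = polite_crawl(
    ["https://example.com/products", "https://example.com/private/admin"],
    fetch=lambda url: f"<html>{url}</html>",  # stand-in for a real HTTP call
    rp=rp,
)
print(list(pages))  # ['https://example.com/products']
```

Taking `fetch` as a parameter keeps the politeness logic independent of the HTTP client; a production crawler would also identify itself with a descriptive User-Agent header.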

APIs for Real-Time Data Collection

- Overview: APIs play a key role in real-time data collection by enabling seamless access and data exchange across applications.

- Accessing Real-Time Data: APIs facilitate the retrieval of up-to-the-minute data from various external services, such as market feeds, weather data, and IoT devices.

- Data Streaming: Many APIs support continuous data streams that push live updates to applications, which is crucial for real-time use cases such as stock trading or live analytics.

- Methods for Data Delivery: APIs typically deliver data either through polling, where the client requests new data at regular intervals, or through webhooks, where the provider sends an HTTP request to a client-supplied URL as soon as an event occurs.

- Ensuring Data Security: Real-time data collection requires strong security measures, and APIs often employ authentication methods like OAuth, API keys, and encryption to protect data.

- Integration with Other Systems: APIs allow easy integration with platforms, databases, and applications, ensuring smooth data flow and connectivity.

- Practical Applications: APIs for real-time data collection are widely used in industries such as finance, e-commerce, social media, and healthcare for monitoring, decision-making, and automation.
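
Webhook receivers are where data delivery and security meet: because anyone can send a request to a public URL, the receiver should check that a payload really came from the provider. Many providers sign each payload with an HMAC-SHA256 of the raw body using a shared secret, but header names and encodings differ between providers. The sketch below therefore assumes a hex-encoded signature passed in as a plain string, omits the HTTP server, and uses hypothetical names (`WEBHOOK_SECRET`, `sign`, `handle_webhook`); it is not any specific provider's scheme.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret issued by the API provider when the webhook
# was registered; in practice load it from configuration, not source code.
WEBHOOK_SECRET = b"replace-with-your-secret"


def sign(body: bytes, secret: bytes = WEBHOOK_SECRET) -> str:
    """Hex-encoded HMAC-SHA256 of the raw request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def handle_webhook(body: bytes, signature: str, secret: bytes = WEBHOOK_SECRET):
    """Return the decoded event if its signature is valid, otherwise None."""
    expected = sign(body, secret)
    # compare_digest runs in constant time, so it does not leak how many
    # characters of a forged signature happened to match
    if not hmac.compare_digest(expected, signature):
        return None
    return json.loads(body)


# Simulated delivery: the provider signs the body and sends both to us.
body = json.dumps({"symbol": "ABC", "price": 101.5}).encode()
print(handle_webhook(body, sign(body)))          # {'symbol': 'ABC', 'price': 101.5}
print(handle_webhook(body, "forged-signature"))  # None
```

Polling needs no public endpoint but spends requests even when nothing has changed; webhooks deliver events promptly but require a reachable endpoint that verifies every delivery.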

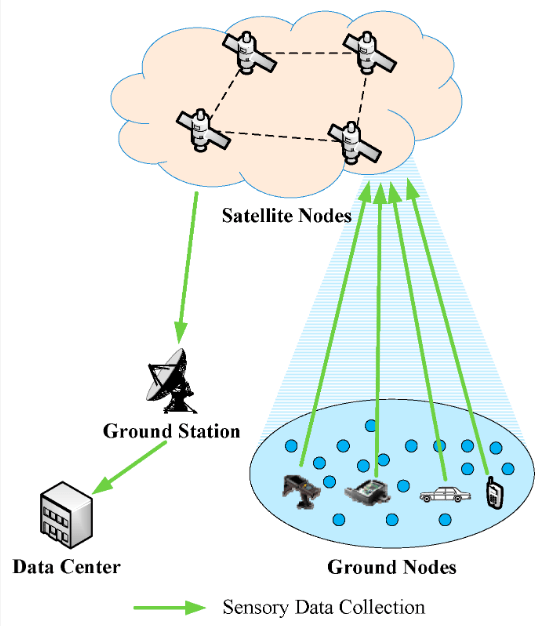

Sensor-Based and IoT Data Collection

- Overview: Sensor-based and IoT data collection involves acquiring real-time data through interconnected devices equipped with sensors that monitor physical and environmental conditions such as temperature, motion, or location.

- IoT Devices: These internet-connected devices, such as smart home gadgets, wearables, and industrial sensors, enable remote data collection and monitoring.

- Real-Time Data Acquisition: The key advantage of IoT data collection is its ability to capture continuous, real-time data, enabling quick decisions and responses.

- Managing Large Data Volumes: IoT systems generate vast amounts of data, requiring robust storage and processing solutions to manage and analyze the information effectively.

- Wireless Connectivity: IoT devices typically communicate wirelessly via technologies like Wi-Fi, Bluetooth, or LoRaWAN, which makes deployment more flexible and convenient.

- Ensuring Data Accuracy: The quality and calibration of sensors determine the accuracy of the collected data, so sensors need regular maintenance and recalibration.

- Industry Applications: IoT and sensor-based data collection are applied in fields such as healthcare for patient monitoring, agriculture for optimized farming, and smart cities for infrastructure management.

Ethical Considerations in Data Collection

Ethical considerations in data collection are critical to ensure that data is gathered, used, and shared responsibly. One of the primary concerns is privacy, particularly when collecting personal or sensitive data. It’s essential to obtain informed consent from individuals, ensuring they understand how their data will be used and stored. Organizations must also respect individuals’ rights to control their own data and collect it in compliance with privacy laws such as the GDPR or HIPAA. Data accuracy is another ethical consideration: data must be collected and reported honestly, without manipulation or bias, to maintain integrity. Incomplete or misleading data can lead to false conclusions and harmful decisions. Transparency is also key; researchers and organizations should clearly communicate their data collection methods and purposes to stakeholders. The principle of non-discrimination further requires that data collection methods do not marginalize or harm specific groups. Finally, security is a crucial ethical issue: protecting data from unauthorized access and breaches keeps collected information safe and confidential. Adhering to these guidelines makes data collection trustworthy, responsible, and respectful of individuals’ rights, which fosters public trust and confidence.

Data Cleaning and Preprocessing Techniques

- Introduction: Data cleaning and preprocessing are essential steps in preparing raw data for analysis: they remove inaccuracies and transform the data into a usable format.

- Handling Missing Data: Missing values can be dealt with through techniques such as imputation (replacing missing values with the mean, median, or mode) or by removing rows or columns that have too many missing values.

- Removing Duplicates: Duplicate records can skew analysis, so identifying and removing them is an essential preprocessing step for accurate results.

- Standardizing Formats: Data may arrive in different formats for dates and times, currencies, or units of measurement. Standardizing these formats ensures consistency across the dataset.

- Outlier Detection: Outliers can significantly distort statistical analyses. Methods like the Z-score or the IQR (interquartile range) rule are used to detect and manage them.

- Normalization/Scaling: Numerical features may need normalization or scaling to bring values into a comparable range, especially for algorithms sensitive to feature scale, such as k-nearest neighbors, support vector machines, and models trained with gradient descent.

- Categorical Data Encoding: Categorical variables, such as labels or text, are encoded into numerical formats using techniques like one-hot encoding or label encoding so that machine learning models can process them.
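
The preprocessing techniques described in this article (deduplication, median imputation, IQR-based outlier detection, min-max scaling, and one-hot encoding) can be chained into a small pipeline. This is a minimal sketch in plain Python with the `statistics` module so it runs without extra packages; the records and function names are hypothetical, and the quartiles use the `"inclusive"` interpolation method, one of several common conventions.

```python
import statistics

# Hypothetical raw sensor export: one exact duplicate, one missing
# temperature, and one implausible reading.
RAW = [
    {"id": 1, "city": "Paris", "temp_c": 21.0},
    {"id": 2, "city": "Lyon", "temp_c": None},
    {"id": 2, "city": "Lyon", "temp_c": None},
    {"id": 3, "city": "Paris", "temp_c": 19.5},
    {"id": 4, "city": "Nice", "temp_c": 23.0},
    {"id": 5, "city": "Nice", "temp_c": 95.0},
]


def drop_duplicates(rows):
    """Keep the first occurrence of each identical record."""
    seen, out = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            out.append(row)
    return out


def impute_median(rows, field):
    """Replace missing values in `field` with the median of the present ones."""
    median = statistics.median([r[field] for r in rows if r[field] is not None])
    return [{**r, field: median if r[field] is None else r[field]} for r in rows]


def remove_iqr_outliers(rows, field, k=1.5):
    """Drop rows whose `field` lies outside [Q1 - k*IQR, Q3 + k*IQR]."""
    values = [r[field] for r in rows]
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [r for r in rows if low <= r[field] <= high]


def min_max_scale(rows, field):
    """Rescale `field` linearly to the range [0, 1]."""
    values = [r[field] for r in rows]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [{**r, field: (r[field] - lo) / span} for r in rows]


def one_hot(rows, field):
    """Replace a categorical `field` with one 0/1 column per category."""
    categories = sorted({r[field] for r in rows})
    out = []
    for r in rows:
        encoded = {k: v for k, v in r.items() if k != field}
        for c in categories:
            encoded[f"{field}_{c}"] = int(r[field] == c)
        out.append(encoded)
    return out


rows = drop_duplicates(RAW)
rows = impute_median(rows, "temp_c")
rows = remove_iqr_outliers(rows, "temp_c")
rows = min_max_scale(rows, "temp_c")
rows = one_hot(rows, "city")
for row in rows:
    print(row)
```

Median imputation runs before outlier removal here because the median is barely affected by a single extreme value. With pandas, the same steps map onto methods such as `DataFrame.drop_duplicates` and `DataFrame.fillna`.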

Storing and Managing Collected Data

Storing and managing collected data efficiently is essential for maintaining its integrity, security, and accessibility. The first step is to store the data in an organized, structured manner. Databases are commonly used for this purpose, offering a centralized system for storing large volumes of data. Relational databases such as MySQL and PostgreSQL are ideal for data with a predefined schema, while NoSQL databases like MongoDB suit semi-structured data or data whose structure varies between records. Data cleaning and preprocessing should also be performed before storage to remove inconsistencies and errors. Once data is stored, it’s vital to implement backup strategies that protect against data loss from hardware failures or security breaches. Data should also be categorized and indexed for easy retrieval, and metadata should be stored to provide context and improve searchability. Data security is paramount: encryption should be used to safeguard sensitive information, and access control mechanisms must ensure that only authorized personnel can access certain data. As data grows, organizations should adopt archiving strategies to optimize storage and keep historical datasets manageable. Overall, proper storage and management are critical to the long-term utility and security of collected data.
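
As a minimal sketch of structured storage, indexing, metadata, and backups, the following uses SQLite through Python's built-in `sqlite3` module as a stand-in for a server database such as MySQL or PostgreSQL. The `readings` table, its columns, and the helper functions are hypothetical. Encryption and access control are not shown; server databases typically handle the latter with user roles and privileges.

```python
import sqlite3


def create_store(conn: sqlite3.Connection) -> None:
    """Create a structured table for readings plus an index for fast lookups."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS readings (
            id          INTEGER PRIMARY KEY,
            sensor_id   TEXT NOT NULL,
            recorded_at TEXT NOT NULL,  -- ISO 8601 timestamp
            value       REAL NOT NULL,
            source      TEXT            -- metadata: where the record came from
        )
        """
    )
    # Index the columns that most queries filter and sort on
    conn.execute(
        "CREATE INDEX IF NOT EXISTS idx_readings_sensor_time "
        "ON readings (sensor_id, recorded_at)"
    )


def insert_readings(conn, rows):
    """Insert rows atomically: all are committed, or none on error."""
    with conn:
        conn.executemany(
            "INSERT INTO readings (sensor_id, recorded_at, value, source) "
            "VALUES (?, ?, ?, ?)",
            rows,
        )


def latest_readings(conn, sensor_id, limit=10):
    """Parameterized query: the newest readings for one sensor."""
    return conn.execute(
        "SELECT recorded_at, value FROM readings "
        "WHERE sensor_id = ? ORDER BY recorded_at DESC LIMIT ?",
        (sensor_id, limit),
    ).fetchall()


conn = sqlite3.connect(":memory:")  # use a file path for persistent storage
create_store(conn)
insert_readings(conn, [
    ("s-1", "2024-05-01T10:00:00Z", 21.4, "greenhouse-gateway"),
    ("s-1", "2024-05-01T10:05:00Z", 21.9, "greenhouse-gateway"),
    ("s-2", "2024-05-01T10:00:00Z", 18.2, "warehouse-gateway"),
])
print(latest_readings(conn, "s-1"))
# [('2024-05-01T10:05:00Z', 21.9), ('2024-05-01T10:00:00Z', 21.4)]

# Online backup into a second database (a file such as "backup.db" in practice)
backup = sqlite3.connect(":memory:")
conn.backup(backup)
```

Storing timestamps as uniform ISO 8601 strings keeps `ORDER BY recorded_at` chronological, and the `?` placeholders let the driver bind values instead of splicing them into the SQL text.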

Conclusion

In conclusion, effective data collection, careful data management, and attention to ethics are essential for deriving meaningful insights and making informed decisions. Data collection serves as the foundation for analysis, and the methods used must ensure accuracy, relevance, and reliability. With the growing importance of data in fields from scientific research to business analytics, it’s crucial to select appropriate tools and techniques, such as web scraping, APIs, and sensor networks, to gather data efficiently. Ethical concerns such as privacy, informed consent, and data security must always be prioritized to protect individuals’ rights and ensure the integrity of the data. Once data is collected, managing and storing it appropriately becomes essential, which requires secure, organized databases, effective backup solutions, and clear access controls to preserve the data’s value over time. Adhering to ethical standards, implementing robust storage systems, and using the right tools for collection and management ensure that data is both reliable and useful. By embracing these practices, organizations can maximize the potential of their data, leading to better decisions and a more data-driven approach to problem-solving. As the world continues to generate vast amounts of data, handling it properly will remain a critical factor for success in any data-driven domain.