- Autoencoders in Deep Learning: What Are They?

- Autoencoders’ Significance in Deep Learning

- Fundamental Components and Architecture

- Deep Learning Autoencoder Types

- Advantages and Limitations of Autoencoders

- Autoencoder Implementation in Deep Learning

- Applications of Autoencoders in the Real World

- Future Trends and Research in Autoencoders

Autoencoders have gained significant attention in deep learning for their capabilities in unsupervised learning tasks such as dimensionality reduction, feature extraction, and data denoising. By compressing data into a smaller representation and then reconstructing it, autoencoders learn useful information about the underlying structure of the data. In this article, we will explore what autoencoders are, their significance in deep learning, their fundamental components and architecture, the main types of autoencoders, how they are implemented, their real-world applications, and the benefits and challenges of using them.

Autoencoders in Deep Learning: What Are They?

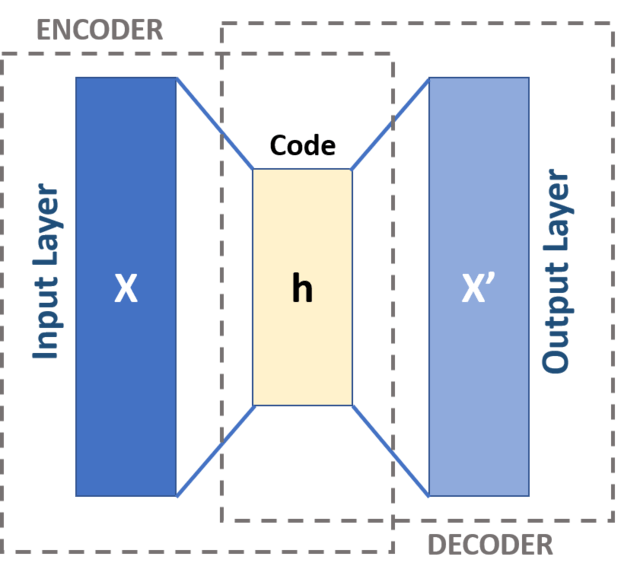

An autoencoder is a type of artificial neural network used for unsupervised learning. Its primary function is to learn a compressed, lower-dimensional representation of data (encoding) and then reconstruct the data back to its original form (decoding). This process forces the network to learn essential features of the data while ignoring irrelevant noise or redundant information. In the simplest terms, an autoencoder finds an efficient representation of the input by mapping it to a lower-dimensional space and then reconstructing the original input from this compressed version. The network is trained to minimize the reconstruction error, which is the difference between the input data and the output after reconstruction. Autoencoders are typically composed of two main parts:

- Encoder: This part compresses the input into a lower-dimensional latent space, or “bottleneck,” which holds the compressed representation.

- Decoder: This part reconstructs the data from the compressed representation, attempting to bring it as close as possible to the original input.
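The following minimal NumPy sketch shows how the two parts fit together and how the reconstruction error is measured. The sizes (784 inputs, 32-dimensional code), the ReLU and sigmoid activations, and the untrained random weights are all assumptions chosen for illustration. Training would adjust the weights to lower the error.

```python
import numpy as np

rng = np.random.default_rng(0)

input_dim, latent_dim = 784, 32  # e.g., flattened 28x28 image -> 32-dim code (assumed sizes)

# Randomly initialized (untrained) weights, for illustration only
W_enc = rng.normal(0.0, 0.01, size=(input_dim, latent_dim))
b_enc = np.zeros(latent_dim)
W_dec = rng.normal(0.0, 0.01, size=(latent_dim, input_dim))
b_dec = np.zeros(input_dim)

def encode(x):
    """Encoder: map inputs to the lower-dimensional latent space (bottleneck)."""
    return np.maximum(0.0, x @ W_enc + b_enc)  # ReLU

def decode(z):
    """Decoder: map latent codes back to the input space, with values in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-(z @ W_dec + b_dec)))  # sigmoid

def reconstruction_error(x, x_hat):
    """Mean squared difference between inputs and reconstructions, per sample."""
    return np.mean((x - x_hat) ** 2, axis=1)

x = rng.random((5, input_dim))  # 5 fake "images" with pixel values in [0, 1)
z = encode(x)                   # compressed codes
x_hat = decode(z)               # reconstructions
errors = reconstruction_error(x, x_hat)
print(z.shape, x_hat.shape, errors.shape)
```

The latent codes are much smaller than the inputs, while the reconstructions have the same shape as the inputs. Only then can they be compared element by element.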


Autoencoders’ Significance in Deep Learning

Autoencoders have multiple significant uses in deep learning and are widely applied in various fields for data preprocessing, anomaly detection, and unsupervised learning tasks. The significance of autoencoders in deep learning can be summarized as follows:

- Dimensionality Reduction: One of the primary benefits of autoencoders is their ability to reduce the dimensionality of data while preserving its essential features. This is especially useful when the dataset has many features, because reducing the number of features can make the learning process more efficient. Autoencoders play a role similar to traditional methods like PCA (Principal Component Analysis). However, because they can use nonlinear activations, they can capture nonlinear structure that PCA, a linear method, cannot.

- Feature Learning: Autoencoders can learn robust features of data without needing labeled examples. Unlike supervised learning models, autoencoders work with unlabeled data and can learn important representations of the input that are useful for various downstream tasks.

- Anomaly Detection: Autoencoders are well suited to identifying anomalies or outliers in datasets. Because the network learns to reconstruct the data it was trained on, it generally reconstructs anomalous data, or data that differs significantly from the training data, poorly. By measuring reconstruction errors, an autoencoder can flag anomalous instances.

- Noise Reduction (Denoising): Denoising autoencoders are specifically trained to remove noise from data, which can be valuable in applications like image processing. The model learns to reconstruct clean data from noisy inputs by understanding the data’s inherent structure, allowing it to filter out noise.

- Generative Models: Autoencoders, particularly Variational Autoencoders (VAEs), are used as generative models that learn to produce new data samples. VAEs enable the generation of entirely new data similar to the original dataset and are used in applications like image generation, drug discovery, and more.

- Vanilla Autoencoder: This is the most basic form of an autoencoder, consisting of a simple encoder-decoder structure. It is typically used for unsupervised learning tasks like dimensionality reduction and feature extraction.

- Convolutional Autoencoder (CAE): Convolutional autoencoders use convolutional layers in the encoder and decoder, making them well suited to image-related tasks. The encoder extracts features from the input image using convolutional filters, and the decoder reconstructs the image from the latent space using transposed convolutions. CAEs are widely used in tasks like image compression, denoising, and generation.

- Variational Autoencoder (VAE): A Variational Autoencoder introduces a probabilistic element to the model by learning distributions over the latent space rather than deterministic representations. VAEs are particularly useful in generative tasks, because sampling from the learned latent space produces new data samples that resemble the input data. VAEs have gained popularity in applications like image generation, text generation, and unsupervised learning.

- Denoising Autoencoder (DAE): A Denoising Autoencoder is trained on noisy input data with the objective of reconstructing the original, clean data. DAEs benefit applications like noise reduction in images, signals, and speech recognition. The network is encouraged to learn robust features that are resilient to noise.

- Sparse Autoencoder: A Sparse Autoencoder is designed to enforce sparsity in the latent space, meaning that only a small subset of neurons are activated at any given time. This is achieved by adding a sparsity constraint to the loss function. Sparse autoencoders are helpful in feature learning and discovering the underlying structure in high-dimensional data.

- Contractive Autoencoder (CAE): The Contractive Autoencoder adds a regularization term to the loss function that penalizes large changes in the latent representation in response to small changes in the input. This term is the Frobenius norm of the Jacobian of the encoder's output with respect to the input. It forces the model to learn robust, locally invariant features. Contractive autoencoders are often used in feature learning and dimensionality reduction, where the goal is to learn stable features that are invariant to slight variations in the data.
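To make the VAE's "distributions over the latent space" concrete, here is a NumPy sketch of two ingredients commonly used in VAEs:

- The reparameterization trick, which samples a latent vector from a predicted mean and log-variance.
- The closed-form KL divergence that pulls those distributions toward a prior.

It assumes a diagonal Gaussian posterior and a standard normal prior, which is the usual textbook setup. The batch values are made up. A real VAE would compute these inside a framework with automatic differentiation and add the KL term to the reconstruction loss.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I); z stays a differentiable function of mu and log_var."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I)), one value per sample."""
    return -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=1)

rng = np.random.default_rng(0)
# Hypothetical encoder outputs: a batch of 4 samples with a 2-D latent space
mu = np.array([[0.0, 0.0], [1.0, -1.0], [0.5, 0.2], [0.0, 0.0]])
log_var = np.array([[0.0, 0.0], [0.0, 0.0], [-1.0, 0.5], [np.log(4.0), 0.0]])

z = reparameterize(mu, log_var, rng)  # one latent sample per input
kl = kl_to_standard_normal(mu, log_var)
print(z.shape, kl)
```

The first sample already matches the prior (zero mean, unit variance), so its KL term is zero. Shifting the mean or widening the variance makes the penalty grow.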

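A basic autoencoder can be implemented in Python with TensorFlow/Keras as follows:

```python
from tensorflow.keras import layers, models
from tensorflow.keras.datasets import mnist

# Training data: MNIST digits, each 28x28 image flattened to 784 values scaled to [0, 1]
(X_train, _), (X_test, _) = mnist.load_data()
X_train = X_train.reshape(-1, 784).astype("float32") / 255.0
X_test = X_test.reshape(-1, 784).astype("float32") / 255.0

# Example: 784-dimensional input (e.g., flattened 28x28 image)
input_layer = layers.Input(shape=(784,))

# Encoding layer: compress the input to a 128-dimensional code
encoded = layers.Dense(128, activation="relu")(input_layer)

# Decoding layer: reconstruct 784 values in [0, 1]
decoded = layers.Dense(784, activation="sigmoid")(encoded)

# Full autoencoder (input -> reconstruction) and encoder-only model (input -> code)
autoencoder = models.Model(input_layer, decoded)
encoder = models.Model(input_layer, encoded)

# Binary cross-entropy matches sigmoid outputs and pixel values in [0, 1]
autoencoder.compile(optimizer="adam", loss="binary_crossentropy")

# The inputs are also the targets: the model learns to reproduce X_train
autoencoder.fit(X_train, X_train, epochs=50, batch_size=256, shuffle=True)

# Compressed 128-dimensional representations of the test images
encoded_data = encoder.predict(X_test)
```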
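The autoencoder model above can be turned into a denoising autoencoder by changing only the training pairs. The inputs become corrupted copies of the data, and the targets stay clean. The sketch below assumes additive Gaussian noise with a standard deviation of 0.3 (an arbitrary choice) and uses random stand-in data. The `add_gaussian_noise` helper is hypothetical, not part of Keras.

```python
import numpy as np

def add_gaussian_noise(x, noise_std=0.3, seed=0):
    """Hypothetical helper: return a noisy copy of x, clipped back to the pixel range [0, 1]."""
    rng = np.random.default_rng(seed)
    noisy = x + rng.normal(0.0, noise_std, size=x.shape)
    return np.clip(noisy, 0.0, 1.0)

# Stand-in for X_train: 100 flattened 28x28 "images" with values in [0, 1)
X_clean = np.random.default_rng(1).random((100, 784)).astype("float32")
X_noisy = add_gaussian_noise(X_clean)

# With a Keras autoencoder like the one above, a denoising model would be trained as:
# autoencoder.fit(X_noisy, X_clean, epochs=50, batch_size=256, shuffle=True)
```

Because the clean data is the target, the network cannot simply copy its input. It has to learn structure that lets it undo the corruption.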

- Image Denoising: Autoencoders can remove noise from images by learning to reconstruct clean images from noisy inputs. This is especially useful in medical imaging, where noise reduction is critical for accurate diagnosis.

- Anomaly Detection: Autoencoders are widely used for anomaly detection in finance, healthcare, and cybersecurity. An autoencoder trained on normal data produces high reconstruction errors for data points that deviate significantly from the norm. This lets it identify fraudulent transactions or system intrusions.

- Data Compression: Autoencoders can be used for data compression by encoding high-dimensional data into a lower-dimensional space. This is particularly beneficial in scenarios where storage or bandwidth is limited, such as image
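The anomaly-detection use described above comes down to thresholding the reconstruction error. The NumPy sketch below sets the threshold at the 99th percentile of errors on normal training data and flags anything above it. The percentile rule is one common heuristic, not the only option, and the error values are synthetic stand-ins for per-sample errors from a trained autoencoder.

```python
import numpy as np

def fit_threshold(train_errors, percentile=99.0):
    """Pick a cutoff so roughly (100 - percentile)% of normal training samples exceed it."""
    return np.percentile(train_errors, percentile)

def flag_anomalies(errors, threshold):
    """Mark samples whose reconstruction error exceeds the threshold."""
    return errors > threshold

# Synthetic per-sample reconstruction errors (e.g., MSE between input and output)
rng = np.random.default_rng(0)
train_errors = rng.normal(0.02, 0.005, size=1000)            # errors on normal data
new_errors = np.array([0.018, 0.025, 0.150, 0.021, 0.300])   # two clear outliers

threshold = fit_threshold(train_errors)
flags = flag_anomalies(new_errors, threshold)
print(threshold, flags)
```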


Fundamental Components and Architecture of Autoencoders

The architecture of an autoencoder consists of two primary components, the encoder and the decoder, connected through a latent space and trained with a reconstruction loss. Below is a breakdown of these components and how they interact:

- Encoder: The encoder compresses the input data into a lower-dimensional representation. It takes the high-dimensional input (for example, an image) and passes it through a series of layers that gradually reduce its dimensionality. It typically uses fully connected layers, or convolutional layers in the case of convolutional autoencoders, to map the input to a latent space or “bottleneck” representation. This representation holds the essential features of the input data while removing irrelevant information.

- Latent space: The latent space is the compressed version of the input data. This lower-dimensional representation contains the information needed to reconstruct the input. The size (dimensionality) of the latent space is one of the most important factors determining how much the data is compressed and how much information from the original data is retained. The latent space is often thought of as the autoencoder’s “memory,” where the network stores the compressed features of the input.

- Decoder: The decoder reconstructs the input data from the latent representation. It mirrors the encoder, gradually increasing the dimensionality until the output has the same shape as the original input. Like the encoder, the decoder can use fully connected or convolutional layers, depending on the type of autoencoder.

- Loss function: The loss function measures the difference between the input data and its reconstruction. Common choices include Mean Squared Error (MSE) and Binary Cross-Entropy. During training, the autoencoder minimizes this error by adjusting its weights using backpropagation.
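The two loss functions named above can be written in a few lines of NumPy. This sketch compares them on a toy four-pixel input with one close and one poor reconstruction. The values are made up. The clipping constant `eps` is an assumption that keeps the logarithm finite; frameworks handle this internally in their own way.

```python
import numpy as np

def mse(x, x_hat):
    """Mean Squared Error between inputs and reconstructions."""
    return np.mean((x - x_hat) ** 2)

def binary_cross_entropy(x, x_hat, eps=1e-7):
    """Binary cross-entropy for targets in [0, 1] and predictions in (0, 1)."""
    x_hat = np.clip(x_hat, eps, 1.0 - eps)  # avoid log(0)
    return -np.mean(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat))

x = np.array([0.0, 1.0, 1.0, 0.0])     # original input (e.g., binary pixels)
good = np.array([0.1, 0.9, 0.8, 0.2])  # close reconstruction
bad = np.array([0.9, 0.1, 0.2, 0.8])   # poor reconstruction

print(mse(x, good), mse(x, bad))
print(binary_cross_entropy(x, good), binary_cross_entropy(x, bad))
```

Both losses rank the close reconstruction as better. Binary cross-entropy is a natural fit when inputs are scaled to [0, 1] and the decoder ends in a sigmoid, as in the Keras example in this article.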

Advantages and Limitations of Autoencoders

Autoencoders are highly effective in unsupervised learning, especially for tasks like dimensionality reduction, feature extraction, and data denoising. They can learn compressed representations of input data without requiring labeled datasets. However, they also have limitations. Autoencoders can easily overfit, especially if the model is too complex or the training data is limited. Additionally, they may perform poorly on highly complex or diverse datasets unless they are carefully designed with proper regularization, such as dropout or sparsity constraints.
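One common way to impose the sparsity constraint mentioned above works in two steps. It measures each hidden unit's average activation over a batch. It then penalizes the KL divergence between that average and a small target value rho. The NumPy sketch below assumes sigmoid-style activations in (0, 1), a target of rho = 0.05, and made-up activation arrays. The weighting factor `beta` in the final comment is a hypothetical hyperparameter.

```python
import numpy as np

def kl_sparsity_penalty(activations, rho=0.05, eps=1e-8):
    """Sum over hidden units of KL(rho || rho_hat_j), where rho_hat_j is unit j's mean activation."""
    rho_hat = np.clip(activations.mean(axis=0), eps, 1.0 - eps)
    return np.sum(rho * np.log(rho / rho_hat)
                  + (1.0 - rho) * np.log((1.0 - rho) / (1.0 - rho_hat)))

rng = np.random.default_rng(0)
# Hypothetical activations of 64 hidden units over a batch of 256 inputs
sparse_acts = rng.uniform(0.0, 0.1, size=(256, 64))  # mostly near zero
dense_acts = rng.uniform(0.4, 0.9, size=(256, 64))   # mostly active

print(kl_sparsity_penalty(sparse_acts), kl_sparsity_penalty(dense_acts))
# total_loss = reconstruction_loss + beta * kl_sparsity_penalty(hidden_activations)
```

Units that fire rarely cost almost nothing, while consistently active units are penalized heavily. This pushes the network toward sparse codes and limits its capacity to overfit.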

Deep Learning Autoencoder Types

Over time, several variations of autoencoders have been developed to suit different tasks. The most notable types, described earlier in this article, are the vanilla, convolutional, variational, denoising, sparse, and contractive autoencoders.


Autoencoder Implementation in Deep Learning

Implementing an autoencoder in deep learning involves designing the encoder-decoder network, selecting an appropriate loss function, and training the model on the data. A basic autoencoder in Python with TensorFlow/Keras (using the layers and models modules from tensorflow.keras) follows these steps:

- Define the encoder.

- Define the decoder.

- Combine them into the autoencoder model.

- Define a separate encoder model for extracting the encoded representation.

- Compile the model.

- Train the autoencoder on data (e.g., the MNIST dataset).

- Use the encoder to extract the encoded representation.
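To show what "training" and "adjusting weights using backpropagation" mean without a framework, here is a NumPy sketch. It trains a tiny linear autoencoder by plain gradient descent, with gradients derived by hand. The synthetic data (8-dimensional points lying on a 2-D subspace), the layer sizes, the learning rate, and the step count are arbitrary assumptions. It illustrates the training loop and does not replace the Keras workflow.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 500 samples in 8 dimensions that lie on a 2-D subspace
n, d, k = 500, 8, 2
X = rng.standard_normal((n, k)) @ rng.standard_normal((k, d))

# Linear encoder and decoder weights (no biases, for brevity)
W_enc = 0.1 * rng.standard_normal((d, k))
W_dec = 0.1 * rng.standard_normal((k, d))

def loss_fn(X, W_enc, W_dec):
    """Mean squared reconstruction error over all elements."""
    return np.mean((X @ W_enc @ W_dec - X) ** 2)

initial_loss = loss_fn(X, W_enc, W_dec)
lr = 0.02
for step in range(3000):
    Z = X @ W_enc                    # encode: n x k latent codes
    X_hat = Z @ W_dec                # decode: n x d reconstructions
    G = 2.0 * (X_hat - X) / X.size   # gradient of the mean squared error w.r.t. X_hat
    grad_dec = Z.T @ G               # backpropagate into the decoder weights
    grad_enc = X.T @ (G @ W_dec.T)   # ...and through the decoder into the encoder weights
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc

final_loss = loss_fn(X, W_enc, W_dec)
print(initial_loss, final_loss)
```

With linear layers and squared error, the best such autoencoder spans the same subspace as the top principal components. That is why autoencoders are often described as a generalization of PCA. Nonlinear activations are what let them go beyond it.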

Applications of Autoencoders in the Real World

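Autoencoders have several practical applications in real-world scenarios, including the image denoising, anomaly detection, and data compression uses outlined earlier in this article.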


Future Trends and Research in Autoencoders

Recent research is exploring the integration of autoencoders with other generative models such as Generative Adversarial Networks (GANs) to improve their ability to generate realistic data. There is also growing interest in making autoencoders more robust, interpretable, and generalizable. Autoencoders are increasingly used in self-supervised learning, where models learn from unlabeled data, and in data synthesis for creating artificial data samples, which is useful in fields like healthcare, finance, and image generation.