- Introduction to Transformers

- Overview of the Hugging Face Library

- Fine-tuning Transformers

- Pre-trained Models and Model Hub

- Transformers for Computer Vision

- Zero-shot Classification with Hugging Face

- Text Generation with GPT Models

- Performance Optimization Techniques

Introduction to Transformers

Transformers have revolutionized modern artificial intelligence, becoming the foundation for breakthroughs in natural language processing (NLP) and computer vision (CV). Introduced by Vaswani et al. in the landmark 2017 paper “Attention is All You Need,” the Transformer architecture replaced traditional sequential models by leveraging self-attention mechanisms. These mechanisms allow the model to weigh and focus on the most relevant parts of an input sequence, enabling it to capture long-range dependencies more effectively than previous models. One of the key distinctions of Transformers compared to RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory Networks) is their ability to process data in parallel, a feature that significantly enhances performance and scalability especially in the context of Data Science Training, where handling large datasets efficiently is crucial. While RNNs and LSTMs handle data sequentially, Transformers analyze entire sequences at once. This parallelism not only boosts training speed but also allows the model to scale efficiently with large datasets. Hugging Face has popularized the use of Transformers through its open-source library, offering pre-trained models that can be easily fine-tuned for various NLP tasks such as text classification, translation, summarization, and question answering. Their flexibility, efficiency, and performance have made Transformers the go-to architecture for a wide range of AI applications today.

Are You Interested in Learning More About Data Science? Sign Up For Our Data Science Course Training Today!

Overview of the Hugging Face Library

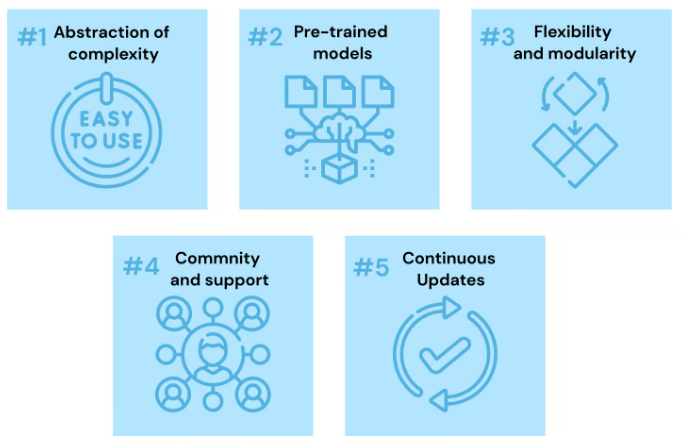

The Hugging Face library is a leading open-source platform that provides tools, models, and datasets for natural language processing (NLP), computer vision (CV), and more. At its core is the Transformers library, which offers easy access to thousands of pre-trained models built on state-of-the-art architectures like BERT, GPT, RoBERTa, T5, and more. These models support a wide range of tasks, including text classification, question answering, translation, summarization, and tokenization many of which can be framed or enhanced using techniques from a Constraint Satisfaction Problem in AI. One of the key advantages of Hugging Face is its user-friendly API, which allows developers and researchers to quickly fine-tune and deploy models with minimal code. The library supports both PyTorch and TensorFlow backends, ensuring flexibility across different machine learning environments.

It also includes tools like Datasets for loading and preprocessing large-scale datasets efficiently, and Tokenizers, a fast, customizable tokenization library. Hugging Face encourages collaboration through its model hub, where users can share and explore community-trained models. Additionally, it offers inference APIs, AutoTrain tools, and integration with platforms like AWS and Google Cloud for scalable deployment. Overall, Hugging Face has become a vital resource for accelerating the development and deployment of AI models in both research and industry.

Fine-tuning Transformers

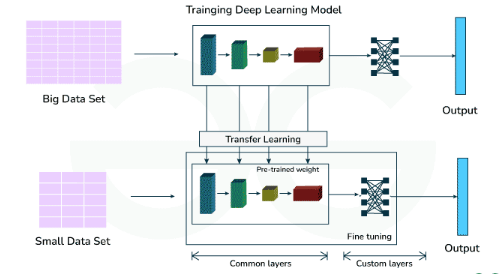

Fine-tuning allows pre-trained models to specialize in specific tasks by training them on domain-specific data, enhancing the performance of Agents in Artificial Intelligence by tailoring their behavior to specific environments or goals. Hugging Face simplifies this with the Trainer API. By using libraries like BertTokenizer, BertForSequenceClassification, and Trainer, developers can efficiently fine-tune models for tasks like text classification.

- from datasets import load_dataset

- Load pre-trained model and tokenizer

- model = BertForSequenceClassification.from_pretrained(“bert-base-uncased”)

- tokenizer = BertTokenizer.from_pretrained(“bert-base-uncased”)

- Load dataset

- dataset = load_dataset(“IMDb”)

- Define training arguments

- training_args = TrainingArguments(

- output_dir=”./results”,

- num_train_epochs=3,

- per_device_train_batch_size=8

- )

- Train the model

- trainer = Trainer(

- model=model,

- args=training_args,

- train_dataset=dataset[‘train’]

- )

- trainer.train()

- Fine-tuning improves accuracy on custom tasks, making models domain-specific.

The TrainingArguments class provides essential configurations for training, including batch size, learning rate, and the number of epochs. With these tools, developers can quickly adapt pre-trained models to new domains, improving model performance on specific tasks.

To Explore Data Science in Depth, Check Out Our Comprehensive Data Science Course Training To Gain Insights From Our Experts!

Pre-trained Models and Model Hub

- Community and Organization Contributions: Both individual users and large organizations contribute models, fostering a collaborative ecosystem.

- Easy Integration: Models can be easily loaded using simple Python commands and integrated into applications using PyTorch, TensorFlow, or JAX.

- Extensive Model Repository: The Hugging Face Model Hub hosts thousands of pre-trained models across NLP, computer vision, and audio processing tasks, making it a central resource for developers and researchers involved in Data Science Training and real-world AI applications.

- Wide Range of Architectures: It includes popular architectures like BERT, GPT-2/3, RoBERTa, T5, DistilBERT, and CLIP, supporting diverse use cases such as text generation, classification, translation, and image-text retrieval.

- Multi-Task Support: Pre-trained models are available for tasks like sentiment analysis, question answering, summarization, named entity recognition, and more ready to use with just a few lines of code.

- Fine-Tuning Capabilities: Users can fine-tune models on custom datasets to improve performance on domain-specific tasks.

- Version Control and Metadata: Each model on the hub includes documentation, training details, tags, and version history for transparency and reproducibility.

- Accessible via API and Web UI: Models can be accessed via Hugging Face’s Python API or directly through the intuitive web interface for quick testing and deployment.

- import requests

- Load model

- model = ViTor Image

- Classification.from_pretrained(“google/vit-base-patch16-224”)

- extractor = ViTFeatureExtractor.from_pretrained(“google/vit-base-patch16-224”)

- Prepare image

- url = “https://example.com/image.jpg”

- image = Image.open(requests.get(URL, stream=True).raw)

- inputs = extractor(images=image, return_tensors=”pt”)

- Perform classification

- outputs = model(**inputs)

- print(outputs.logits)

- ViTs offer state-of-the-art accuracy for image-related tasks.

- Multi-Label Classification: Zero-shot models can handle multi-label tasks, where an input text can belong to multiple categories simultaneously.

- Efficient: This approach reduces the need for extensive retraining and labeled data, saving both time and resources in model deployment.

- Flexibility: It’s highly flexible because the model can generalize to new, unseen categories or tasks, making it adaptable to different use cases.

- No Task-Specific Training: Unlike traditional models that require task-specific labeled data, zero-shot classification uses the model’s pre-trained knowledge to make predictions on new, unlabeled data a concept that can be applied alongside DFS in Artificial Intelligence to explore and solve problems with minimal prior data.

- Definition: Zero-shot classification allows models to classify text into categories without needing specific training for those categories, using prior knowledge and language understanding.

- Applications: It’s useful in dynamic environments where new categories emerge frequently, such as sentiment analysis, topic categorization, or customer feedback classification.

- Use of Prompts: Zero-shot models typically use natural language prompts to understand the classification task, making them user-friendly and accessible for non-experts in machine learning.

Transformers for Computer Vision

Transformers are also widely used in Computer Vision (CV). Vision Transformers (ViTs) divide images into patches and apply self-attention mechanisms for superior performance highlighting the evolving tools and technologies that make people ask, Is Data Science a Good Career? The answer often lies in innovations like these. Example: Image Classification with ViT from transformers import ViTFeatureExtractor, ViTForImageClassification from PIL import Image:

This approach significantly enhances performance in tasks like image classification, enabling models to learn more effectively from image data and outperform traditional convolutional neural networks (CNNs) in many cases. By leveraging the power of transformers, ViTs have become a leading architecture for various computer vision applications.

Gain Your Master’s Certification in Data Science by Enrolling in Our Data Science Masters Course.

Zero-shot Classification with Hugging Face

Text Generation with GPT Models

Text generation with GPT (Generative Pre-trained Transformer) models is a powerful application of AI that allows for the creation of coherent, contextually relevant text based on given prompts. GPT models, developed by OpenAI, are trained on vast amounts of diverse text data, enabling them to generate human-like responses for a wide range of applications. These models work by predicting the next word in a sequence, using the context provided by the input text. The more context they receive, the more accurate and relevant their output becomes. GPT models are highly versatile and can be fine-tuned for specific tasks such as story generation, dialogue systems, content creation, code generation, and more, which makes Mastering ChatGPT Prompts for Better Results an essential skill for maximizing their potential. Text generation with GPT models can be fine-tuned with domain-specific data, improving the quality and relevance of generated content for particular industries or subjects. One of the main strengths of GPT models is their ability to understand and maintain context over long passages of text, allowing for the creation of coherent and logically structured outputs. In practical use, GPT models power applications like chatbots, automated writing tools, and creative writing assistants, enhancing productivity and creativity in various fields.

Want to Learn About Data Science? Explore Our Data Science Interview Questions & Answer Featuring the Most Frequently Asked Questions in Job Interviews.

Performance Optimization Techniques

Performance optimization techniques are essential for improving the speed, efficiency, and scalability of applications, especially in high-demand environments. One of the first steps is algorithm optimization, where selecting the most efficient algorithms based on time and space complexity can greatly enhance performance. Another critical strategy is caching, which stores frequently accessed data in memory or local storage, reducing the need for repetitive calculations or database queries. Parallelism and concurrency boost performance by enabling simultaneous task execution core concepts in Data Science Training. For systems with heavy traffic, load balancing ensures that requests are distributed across multiple servers, preventing overloading and ensuring high availability. Database optimization, including techniques like indexing, query optimization, and partitioning, helps reduce data retrieval times in data-intensive applications. Code profiling and refactoring are valuable in identifying bottlenecks and improving inefficient code, thereby enhancing overall performance. Lastly, network optimization minimizes latency and data transfer times between systems, ensuring smoother and faster communication in cloud-based applications. By applying these techniques, systems can achieve better resource utilization, handle larger workloads, and provide superior user experiences.