- What is Fine-Tuning in AI?

- Benefits of Fine-Tuning AI Models

- Pre-trained Models vs. Fine-Tuned Models

- Steps in Fine-Tuning a Model

- Selecting the Right Dataset for Fine-Tuning

- Hyperparameter Tuning in Fine-Tuning

- Transfer Learning and Fine-Tuning

- Tools for Fine-Tuning (Hugging Face, TensorFlow)

- Challenges in Fine-Tuning Models

- Fine-Tuning Large Language Models (LLMs)

- Cost and Compute Considerations

- Future of Fine-Tuning in AI

What is Fine-Tuning in AI?

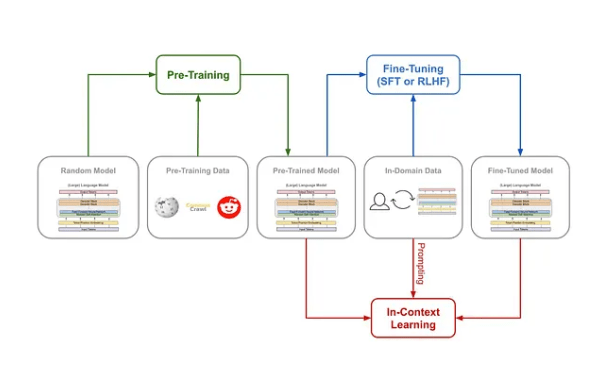

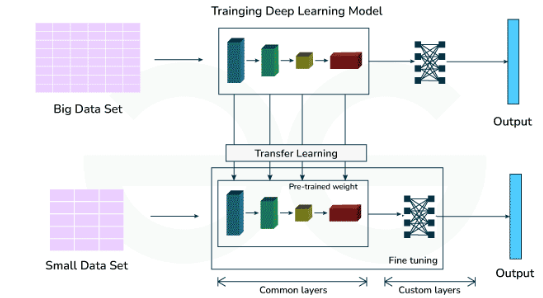

Fine-tuning in AI takes a pre-trained model and trains it further on a smaller, task-specific dataset. The process adjusts the model’s parameters for a particular use case while retaining the general knowledge acquired during large-scale pre-training. Fine-tuning is widely used in natural language processing (NLP), computer vision, and audio processing. Instead of training models from scratch, which is computationally expensive and time-consuming, it reuses existing models and customizes them for specific tasks. For example, a pre-trained language model (e.g., GPT-3) fine-tuned on financial news data can provide better insights into market trends. In computer vision, a ResNet model pre-trained on ImageNet can be fine-tuned to identify specific types of medical anomalies. Fine-tuning thus delivers domain-specific accuracy without training a new model from the ground up, making it a powerful and cost-effective AI technique.

Benefits of Fine-Tuning AI Models

Fine-tuning offers several advantages, making it a widely adopted practice in the AI industry:

- Enhanced Performance for Specific Tasks: Pre-trained models contain generalized knowledge, but fine-tuning makes them highly effective for niche tasks. For example, fine-tuning GPT on legal documents makes it proficient in legal language interpretation.

- Reduced Training Time and Costs: Since the model is already pre-trained, fine-tuning requires significantly less time and computational resources. It also reduces the need for large-scale data collection and extensive hardware.

- Increased Efficiency and Accuracy: Fine-tuned models typically reach higher accuracy on their target task than the general-purpose model they start from, and they handle inputs from that domain more reliably.

- Lower Data Requirements: Fine-tuning typically requires smaller datasets, making it ideal for niche applications where data collection is costly or limited.

- Improved Adaptability: It allows organizations to quickly customize models for new use cases without building models from scratch. This boosts AI deployment speed and flexibility.

Pre-trained Models vs. Fine-Tuned Models

Fine-tuning transforms pre-trained models into specialized models for specific tasks. For instance, BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained model for general language understanding. Fine-tuning BERT on medical texts makes it suitable for healthcare applications, such as clinical text analysis or medical Q&A systems. Here’s how they differ:

- Pre-trained Models: Built on massive, diverse datasets. Designed for general-purpose tasks. Not tailored for specific industries or use cases.

- Fine-Tuned Models: Customized using domain-specific datasets. Optimized for particular tasks (e.g., sentiment analysis, fraud detection). Provide higher accuracy for targeted applications.

Steps in Fine-Tuning a Model

Fine-tuning follows a structured process:

- Select a Pre-Trained Model: Choose a model suitable for your task (e.g., BERT for NLP, ResNet for vision). Leverage frameworks like Hugging Face or TensorFlow to load the model.

- Prepare the Dataset: Gather and clean task-specific data. Split the dataset into training, validation, and test sets. Preprocess the data (tokenization, normalization).

- Load the Pre-Trained Model: Use pre-trained weights as the starting point. Modify the output layers to match the target task (e.g., binary classification, multi-class output).

- Fine-Tune the Model: Adjust hyperparameters like learning rate, batch size, and number of epochs. Use a small learning rate (e.g., 1e-5) so that updates do not overwrite the pre-trained weights and training stays stable.

- Evaluate and Optimize: Use F1-score, precision, and recall metrics to evaluate the fine-tuned model. Perform regularization and fine-tune further if necessary.
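
As a rough illustration of the steps above, the following dependency-free Python sketch keeps a stand-in "pre-trained" feature extractor frozen, trains a new classification head on a small labeled dataset, and reports precision, recall, and F1 on held-out data. Everything in it is an assumption made for illustration: the `FROZEN_BACKBONE` weights, the synthetic dataset, and the helper names are hypothetical, and the toy learning rate is far larger than the 1e-5 range used for real models. An actual project would load a real model through a framework such as Hugging Face or TensorFlow.

```python
import math
import random

# Hypothetical stand-in for pre-trained weights; they are never updated below.
FROZEN_BACKBONE = ((1.0, -1.0), (0.5, 0.5))


def extract_features(x):
    """Frozen backbone: a fixed linear map followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_BACKBONE]


def predict_proba(head, x):
    """New task head: logistic regression on top of the frozen features."""
    feats = extract_features(x)
    logit = sum(w * f for w, f in zip(head["w"], feats)) + head["b"]
    return 1.0 / (1.0 + math.exp(-logit))


def train_head(train_set, epochs=100, lr=0.1):
    """Fit only the head with plain SGD on the log loss."""
    head = {"w": [0.0] * len(FROZEN_BACKBONE), "b": 0.0}
    for _ in range(epochs):
        for x, y in train_set:
            feats = extract_features(x)
            error = predict_proba(head, x) - y  # derivative of log loss w.r.t. the logit
            head["w"] = [w - lr * error * f for w, f in zip(head["w"], feats)]
            head["b"] -= lr * error
    return head


def precision_recall_f1(y_true, y_pred):
    """Binary precision, recall, and F1 with 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def evaluate(head, split):
    """Score the head on one data split using a 0.5 decision threshold."""
    y_true = [y for _, y in split]
    y_pred = [1 if predict_proba(head, x) >= 0.5 else 0 for x, _ in split]
    return precision_recall_f1(y_true, y_pred)


# Synthetic task-specific dataset (an assumption): the label is 1 when x0 > x1.
rng = random.Random(0)
points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
dataset = [(p, 1 if p[0] > p[1] else 0) for p in points]

# Split into training, validation, and test sets (60/20/20).
train_set, val_set, test_set = dataset[:120], dataset[120:160], dataset[160:]

head = train_head(train_set)
val_precision, val_recall, val_f1 = evaluate(head, val_set)
test_precision, test_recall, test_f1 = evaluate(head, test_set)
print(f"validation F1={val_f1:.2f}  test F1={test_f1:.2f}")
```

In practice the validation score would guide choices such as the number of epochs, and the test score would be read only once at the end.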

Selecting the Right Dataset for Fine-Tuning

Choosing the appropriate dataset is critical for effective fine-tuning. Key factors:

- Domain-Specific Data: Select datasets relevant to your industry or task. For instance, FINRA financial reports can be used to fine-tune an AI model for economic analysis.

- Data Quality: Ensure the dataset is clean and labeled accurately. Remove noisy or irrelevant data.

- Dataset Size: Fine-tuning requires less data than pre-training, but it still needs sufficient samples to generalize effectively.

- Diversity and Representation: Ensure the dataset covers various scenarios, reducing bias and improving generalization.

- Data Augmentation: Apply techniques like image flipping, text paraphrasing, or random noise addition to increase dataset diversity.

Hyperparameter Tuning in Fine-Tuning

Hyperparameter tuning in fine-tuning is both an art and a science, requiring a solid understanding of the model architecture and the training data. Small changes in values can significantly affect the model’s ability to generalize to unseen data. For instance, learning rate schedules or decay can help stabilize training over longer runs. Grid search and random search are common tuning methods, but more advanced approaches like Bayesian optimization are gaining popularity for their efficiency. Automated tools like Optuna and Ray Tune are increasingly used to streamline the process. The choice of optimizer (e.g., Adam, SGD) also influences training dynamics and should be treated as part of hyperparameter tuning. Warm-up steps before applying the full learning rate can prevent early training instability. Gradient clipping may be used to avoid exploding gradients in certain architectures. Monitoring validation loss and accuracy across epochs helps detect overfitting early. Ultimately, careful hyperparameter tuning helps fine-tuned models perform robustly across diverse real-world tasks.

Transfer Learning and Fine-Tuning

Fine-tuning is often combined with transfer learning, which reuses knowledge from pre-trained models.

- Transfer Learning: Uses knowledge from one domain to improve performance in another. Example: applying a model pre-trained on ImageNet to detect medical anomalies.

- Fine-Tuning: Customizes the transferred model for a specific task using new data. Retains the pre-trained knowledge while specializing the model.

Transfer learning accelerates AI adoption by reducing data and computation needs.

Tools for Fine-Tuning (Hugging Face, TensorFlow)

A variety of powerful tools are available for fine-tuning AI models, each catering to different needs and levels of expertise. Hugging Face Transformers is a leading choice, especially for NLP tasks, offering a wide range of pre-trained models like BERT and GPT along with user-friendly fine-tuning APIs. TensorFlow, paired with Keras, provides flexible APIs and modular components that simplify the fine-tuning process through intuitive layers and callbacks. PyTorch is another widely adopted framework, known for its dynamic computation graph and support for custom loss functions and optimizers, making it well suited to more tailored deep learning applications. Additionally, AutoML platforms such as Google AutoML and Amazon SageMaker streamline the fine-tuning process by automating model selection, training, and optimization, making advanced AI capabilities more accessible to non-experts and accelerating deployment in production environments.

Challenges in Fine-Tuning Models

Fine-tuning is not without its challenges:

- Overfitting: Fine-tuning on small datasets risks overfitting. Use dropout and regularization techniques.

- Catastrophic Forgetting: The model may forget its pre-trained knowledge during fine-tuning. Use smaller learning rates to mitigate this.

- Data Bias and Quality Issues: Poor-quality or biased datasets lead to unreliable fine-tuned models.

- Resource Intensity: Large models require powerful GPUs, making fine-tuning expensive.

Fine-Tuning Large Language Models (LLMs)

Fine-tuning large language models (LLMs) like GPT-4, LLaMA, and PaLM requires advanced techniques:

- Adapter Layers: Small trainable layers inserted into an otherwise frozen model, which helps limit catastrophic forgetting.

- LoRA (Low-Rank Adaptation): Reduces the number of trainable parameters by learning low-rank updates to frozen weight matrices, making fine-tuning efficient.

- PEFT (Parameter-Efficient Fine-Tuning): The broader family of methods, including adapters and LoRA, that train only a small fraction of the parameters and so minimize the memory footprint during LLM fine-tuning.
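
LoRA, one of the parameter-efficient techniques used for LLM fine-tuning, can be illustrated with a few lines of plain Python. The sketch below assumes the commonly described formulation in which a frozen weight matrix `W` is combined with a trainable low-rank product `B A`, scaled by `alpha / rank`, with `B` starting at zero. The matrix sizes, the `alpha` value, and the helper names are hypothetical choices for illustration, not a library API.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """Compute W x + (alpha / rank) * B (A x); W stays frozen, only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def full_trainable_params(d_out, d_in):
    """Trainable values when the whole weight matrix is updated."""
    return d_out * d_in


def lora_trainable_params(d_out, d_in, rank):
    """Trainable values with LoRA: A is (rank x d_in) and B is (d_out x rank)."""
    return rank * d_in + d_out * rank


rng = random.Random(0)
d_out, d_in, rank, alpha = 4, 6, 2, 4
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]      # frozen
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]    # trainable
B = [[0.0] * rank for _ in range(d_out)]                                # trainable, starts at zero
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

# With B at zero, the adapted layer reproduces the frozen layer's output.
unchanged_at_start = lora_forward(W, A, B, x, alpha, rank) == matvec(W, x)
print("matches frozen layer at start:", unchanged_at_start)

# Parameter savings for a hypothetical 4096 x 4096 projection with rank 8.
full = full_trainable_params(4096, 4096)
lora = lora_trainable_params(4096, 4096, 8)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

For the 4096 x 4096 example, the low-rank pair holds 65,536 trainable values against 16,777,216 in the full matrix, which is where the efficiency gain comes from.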

Cost and Compute Considerations

Fine-tuning large AI models is a resource-intensive process that requires significant computational power, often relying on GPUs or TPUs for efficient processing. To manage this demand, many organizations turn to scalable cloud services like AWS, Azure, and Google Cloud Platform, which provide the necessary infrastructure for training and deploying models. These services can be costly, however, which prompts the use of cost-optimization strategies such as model distillation and quantization. Both reduce the size and complexity of models without significantly compromising performance, lowering compute requirements and overall expenses.
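
To make the compute discussion concrete, here is a back-of-the-envelope Python estimate of how numeric precision affects the memory needed to store a model's weights, and of a rough floor for full fine-tuning. The 7-billion-parameter model is a hypothetical example, and the fine-tuning figure assumes 32-bit values with the Adam optimizer (weights, gradients, and two moment estimates per parameter). It ignores activations and framework overhead, so real requirements are higher.

```python
BYTES_PER_GB = 1_000_000_000  # decimal gigabytes


def weight_memory_gb(num_params, bits_per_param):
    """Memory needed to hold the weights alone, in GB."""
    return num_params * bits_per_param / 8 / BYTES_PER_GB


def full_finetune_memory_gb(num_params, bytes_per_value=4):
    """Rough floor for full fine-tuning with Adam.

    Counts four values per parameter: the weight, its gradient, and Adam's
    two moment estimates. Activations and overhead are not included.
    """
    values_per_param = 4
    return num_params * bytes_per_value * values_per_param / BYTES_PER_GB


num_params = 7_000_000_000  # hypothetical 7-billion-parameter model

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(num_params, bits):.1f} GB")

print(f"full fine-tuning (32-bit, Adam): at least {full_finetune_memory_gb(num_params):.0f} GB")
```

Under these assumptions the weights shrink from 28 GB at 32 bits to 3.5 GB at 4 bits, while full fine-tuning needs at least 112 GB.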

Future of Fine-Tuning in AI

The future of fine-tuning in AI is rapidly evolving, driven by advancements that make the process more efficient and widely applicable. Emerging approaches like Parameter-Efficient Fine-Tuning (PEFT) are enabling models to adapt to new tasks with fewer resources, while meta-learning techniques are paving the way for adaptive fine-tuning that allows models to learn how to learn. Additionally, federated learning is playing a crucial role in enabling privacy-preserving fine-tuning by keeping data decentralized and secure. As AI continues to advance, fine-tuning is expected to become more streamlined, accessible, and essential for tailoring models to domain-specific applications.