- Introduction to Interpolation

- Importance of Interpolation in Data Science

- Types of Interpolation Techniques

- Applications of Interpolation in Real Life

- Interpolation in Machine Learning

- Tools and Libraries for Interpolation

- Best Practices for Accurate Interpolation

- Conclusion

Introduction to Interpolation

Interpolation is a mathematical method for estimating unknown values that lie within the range of a known set of data points. It is a fundamental concept in mathematics, engineering, computer science, and data analysis. By applying interpolation techniques, one can approximate values that are missing or unmeasured between two or more known values, which makes interpolation useful for smooth data representation and function approximation. Common methods include linear, polynomial, and spline interpolation, each offering a different trade-off between accuracy and complexity. For example, linear interpolation connects known data points with straight lines, while spline interpolation joins low-degree polynomial pieces into a smooth curve through the points.

Interpolation has practical applications in signal processing, where it helps reconstruct missing samples; image processing, for tasks like image scaling and enhancement; and data visualization, for creating continuous curves from discrete data points. It is also widely used in scientific computing and numerical simulations. Overall, interpolation is a valuable tool for analyzing data and making informed estimates within a known data range.
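As a small worked illustration of the straight-line case described above, the estimate between two known points $(x_0, y_0)$ and $(x_1, y_1)$ can be written as follows. The symbols and the sample numbers are assumptions chosen for illustration; they do not come from a particular dataset.

$$
y = y_0 + (x - x_0)\,\frac{y_1 - y_0}{x_1 - x_0}, \qquad x_0 \le x \le x_1
$$

For instance, with known points $(1, 10)$ and $(3, 20)$, the estimate at $x = 2$ is $y = 10 + (2 - 1)\cdot\frac{20 - 10}{3 - 1} = 15$.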


Importance of Interpolation in Data Science

- Data Preprocessing: It helps fill missing values in datasets, making them suitable for analysis and machine learning models.

- Function Approximation: Many machine learning models rely on function approximation, where interpolation provides a smooth function representation from discrete data points.

- Time-Series Analysis: In financial and climate modeling, interpolation is used to estimate values between recorded time intervals.

- Image Processing: Image resizing and enhancement rely on interpolation techniques to generate pixel values between known points.

- Scientific Computing: Engineers and scientists use interpolation to analyze experimental data and derive meaningful insights.

- Computer Graphics: Interpolation is used for rendering smooth transitions in animations, shading, and texture mapping.

- Geospatial Analysis: In mapping and GIS, interpolation estimates values like elevation or temperature at unsampled geographic locations.
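The data preprocessing and time-series uses listed above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production routine: the function name `fill_gaps_linear` and the sample readings are hypothetical, and the sketch assumes evenly spaced samples with missing entries marked as `None`.

```python
from typing import List, Optional


def fill_gaps_linear(values: List[Optional[float]]) -> List[Optional[float]]:
    """Fill None entries that lie between two known values by linear interpolation.

    Assumes evenly spaced samples. Leading and trailing gaps stay None,
    because filling them would be extrapolation rather than interpolation.
    """
    filled = list(values)
    known = [i for i, v in enumerate(values) if v is not None]
    # Walk over each pair of neighbouring known samples and fill the gap between them.
    for left, right in zip(known, known[1:]):
        span = right - left
        for i in range(left + 1, right):
            weight = (i - left) / span
            filled[i] = values[left] + weight * (values[right] - values[left])
    return filled


# Hypothetical sensor readings with missing entries.
readings = [None, 10.0, None, 14.0, None, None, None, 22.0, None]
print(fill_gaps_linear(readings))
# [None, 10.0, 12.0, 14.0, 16.0, 18.0, 20.0, 22.0, None]
```

Leaving the outer gaps untouched is a deliberate choice here: interpolation only covers the range between known values.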

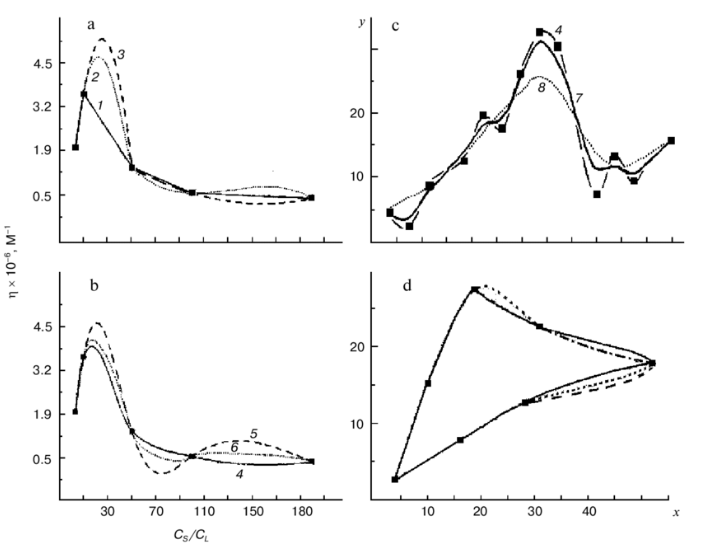

Types of Interpolation Techniques

- Kriging: A geostatistical interpolation method used for spatial data.

- Linear Interpolation: The simplest form, connecting two adjacent data points with a straight line.

- Polynomial Interpolation: Fits a single polynomial through the known data points to approximate the underlying function.

- Spline Interpolation: Uses piecewise polynomials to create a smooth curve through the data points.

- Nearest-Neighbor Interpolation: Assigns the value of the nearest known data point to an unknown point.

- Cubic Interpolation: Uses cubic polynomials between neighboring points, which typically gives a smoother estimate than linear interpolation.

- Bilinear and Bicubic Interpolation: Used in image processing to interpolate data in two dimensions.

- Lagrange Interpolation: A higher-order polynomial interpolation technique that constructs a polynomial passing through all given points.
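To make three of the techniques above concrete, here is a minimal pure-Python sketch of linear, nearest-neighbor, and Lagrange interpolation. The function names and the sample data (points on y = x²) are assumptions chosen for illustration; real projects would normally rely on a numerical library rather than hand-written loops.

```python
from bisect import bisect_right
from typing import Sequence


def linear_interp(x: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Piecewise-linear estimate at x; xs must be strictly increasing."""
    if not xs[0] <= x <= xs[-1]:
        raise ValueError("x lies outside the known range")
    # Index of the interval [xs[i], xs[i + 1]] that contains x.
    i = min(bisect_right(xs, x) - 1, len(xs) - 2)
    t = (x - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + t * (ys[i + 1] - ys[i])


def nearest_interp(x: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Value of the known point whose x-coordinate is closest to x."""
    nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x))
    return ys[nearest]


def lagrange_interp(x: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Evaluate at x the polynomial that passes through every (xs, ys) point."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 1.0, 4.0, 9.0]  # samples of y = x**2

print(linear_interp(1.5, xs, ys))    # 2.5
print(nearest_interp(1.4, xs, ys))   # 1.0
print(lagrange_interp(1.5, xs, ys))  # 2.25
```

On this smooth data the Lagrange polynomial recovers the true value 1.5² = 2.25, the linear estimate is slightly high, and the nearest-neighbor estimate simply copies the closest sample.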

Applications of Interpolation in Real Life

Interpolation plays a vital role in many real-world applications, helping to estimate missing data and improve overall accuracy. In weather forecasting, it is used to estimate temperature, humidity, or pressure values between recorded data points, allowing for more precise and localized predictions. In finance, it fills gaps in time-series data for stock prices, interest rates, and currency exchange rates, which supports trend analysis and decision-making. Medical imaging benefits significantly from interpolation, especially when increasing the resolution of MRI and CT scans by estimating pixel values between known points, leading to clearer diagnostic images. In geographical information systems (GIS), interpolation is used to map terrain elevations and other environmental variables like rainfall or pollution levels in areas where data isn’t directly available.

Audio processing also uses interpolation to improve sound quality and reconstruct lost portions of an audio signal. In robotics, interpolation assists in path planning and motion control by generating smooth transitions between waypoints, enabling robots to move efficiently and accurately. These examples show how interpolation turns incomplete or discrete data into meaningful, continuous information for analysis and practical use.

Interpolation in Machine Learning

In machine learning, interpolation supports model accuracy and reliability by addressing data gaps at several stages of the pipeline. In feature engineering, it is used to fill in missing values so that models are not hindered by incomplete records. In data augmentation, synthetic data points are generated by interpolating between existing samples to expand the training set, which can improve generalization when data is limited or imbalanced. Neural networks can be viewed as function approximators: training adjusts the weights so that the network effectively interpolates between known input-output pairs, which lets it capture intricate patterns. Similarly, regression models estimate values between observed data points to build continuous predictive functions. Overall, interpolation improves robustness by smoothing data distributions and ensuring that missing or sparse data does not disrupt learning.

Tools and Libraries for Interpolation

Python:

- numpy.interp: Simple linear interpolation for numerical arrays.

- pandas: The interpolate() method on Series and DataFrame objects fills missing values.

- scipy.interpolate: Provides functions for linear, spline, and polynomial interpolation.

MATLAB:

- interp1, interp2, and spline functions for different interpolation methods.

R:

- approx() for linear interpolation.

- spline() for spline interpolation.

Excel:

- FORECAST and TREND functions, which fit a linear trend that can be used to estimate intermediate values.

Machine Learning Frameworks:

- TensorFlow and PyTorch provide interpolation-based operations, such as image resizing, that are commonly used for data augmentation.
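As a brief sketch of how one of the Python tools named in this article is used, the snippet below calls `numpy.interp` on a small set of measurements. The hour and temperature values are hypothetical and serve only to show the call.

```python
import numpy as np

# Known measurements (hypothetical values, for illustration only).
hours = np.array([0.0, 2.0, 4.0, 6.0])
temps = np.array([10.0, 14.0, 18.0, 16.0])

# Estimate the temperature at times that were not measured.
query_hours = np.array([1.0, 3.0, 5.0])
estimates = np.interp(query_hours, hours, temps)
print(estimates)  # [12. 16. 17.]
```

Note that `numpy.interp` expects the known x-coordinates in increasing order, and by default it returns the first or last known value for queries outside the measured range rather than extrapolating.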

Best Practices for Accurate Interpolation

To ensure accurate and reliable interpolation, it helps to follow a few best practices. The first step is to choose the interpolation method based on the nature and distribution of the data. For example, linear interpolation works well for simple, densely sampled data, while spline or polynomial methods are better suited to smooth but more complex curves. It is equally important to preprocess the data by removing outliers and reducing noise, as these can distort the interpolation and lead to inaccurate estimates. Another critical consideration is to avoid overfitting, particularly when using higher-degree polynomials, which can oscillate excessively between data points and produce unrealistic estimates. Lower-degree polynomials or smooth splines often yield better results. Additionally, validating the interpolated values against actual data, when available, helps confirm that the method is producing realistic and trustworthy results. Lastly, incorporating domain knowledge can significantly improve the process, as understanding the dataset’s context and expected behavior guides the selection of the most appropriate technique.
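The warning about higher-degree polynomials and the advice to validate against actual data can be illustrated with a classic test case. The sketch below is an assumption-laden demonstration, not a general benchmark: it samples the function 1 / (1 + 25x²) at 11 equally spaced points, then compares a single degree-10 polynomial through all points with simple piecewise-linear interpolation. All function names are hypothetical.

```python
def runge(x: float) -> float:
    """Smooth test function that is known to trouble high-degree polynomial fits."""
    return 1.0 / (1.0 + 25.0 * x * x)


def lagrange_interp(x, xs, ys):
    """Evaluate at x the single polynomial passing through all (xs, ys) points."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def linear_interp(x, xs, ys):
    """Piecewise-linear estimate at x; xs must be strictly increasing."""
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            t = (x - xs[i]) / (xs[i + 1] - xs[i])
            return ys[i] + t * (ys[i + 1] - ys[i])
    raise ValueError("x lies outside the known range")


# 11 equally spaced samples on [-1, 1].
nodes = [-1.0 + k / 5 for k in range(11)]
values = [runge(x) for x in nodes]

# Validate both methods against the true function on a finer grid.
grid = [-1.0 + k / 100 for k in range(201)]
poly_err = max(abs(lagrange_interp(x, nodes, values) - runge(x)) for x in grid)
linear_err = max(abs(linear_interp(x, nodes, values) - runge(x)) for x in grid)

print(f"degree-10 polynomial max error: {poly_err:.3f}")
print(f"piecewise-linear max error:     {linear_err:.3f}")
```

On this example the high-degree polynomial oscillates strongly near the ends of the interval, so its worst-case error comes out above 1, while the piecewise-linear estimate stays below 0.1. Comparing against held-out true values in this way is one simple form of the validation step described above.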


Conclusion

Interpolation is an essential technique in data science, mathematics, and engineering, allowing for the estimation of missing or unknown values within a given dataset. It enables smooth data representation and is crucial for building continuous models from discrete data points. Common interpolation methods include linear, polynomial, and spline interpolation, each suited to different types of data and levels of complexity. These methods are widely used across fields such as finance, where they fill gaps in price and rate series; healthcare, where they improve the resolution of medical images; and machine learning, where they fill data gaps and support model performance. Interpolation can present challenges, such as selecting the appropriate method or avoiding overfitting, but following best practices like data preprocessing, method validation, and applying domain knowledge helps maintain accuracy and reliability. With growing amounts of data and increasing demand for real-time insights, interpolation continues to be a vital tool in data-driven decision-making. As technology evolves, its applications are expected to expand further across many industries.