- Introduction to AI Techniques

- Reinforcement Learning Techniques

- Natural Language Processing (NLP) Techniques

- Computer Vision Techniques

- Neural Networks and Deep Learning

- AI Optimization Techniques

- Transfer Learning in AI

- Feature Engineering and Selection

Introduction to AI Techniques

Artificial Intelligence (AI) techniques are a broad set of methods and algorithms that enable machines to perform tasks associated with human intelligence, such as learning, reasoning, problem-solving, and decision-making. These techniques underpin many modern applications, from voice assistants and image recognition to autonomous vehicles and recommendation systems. They can be broadly grouped into machine learning, deep learning, natural language processing, and computer vision. Machine learning trains algorithms on data so that they identify patterns and make predictions without being explicitly programmed for each case. Deep learning, a subset of machine learning, uses neural networks with multiple layers to model complex patterns, especially in unstructured data like images and text. Natural language processing enables machines to understand, interpret, and generate human language, powering applications like chatbots and language translation. Computer vision lets machines interpret images and video. Reinforcement learning, another branch of machine learning, has agents learn by interacting with environments to maximize rewards.

These techniques often rely on large datasets and powerful computational resources. AI is transforming industries by automating tasks, enhancing decision-making, and creating new opportunities for innovation. Understanding these techniques is essential for leveraging AI’s potential effectively and responsibly in real-world applications.

Reinforcement Learning Techniques

Reinforcement Learning (RL) is a specialized area of machine learning where an agent learns to make decisions by interacting with its environment. Unlike supervised learning, where models learn from labeled data, RL relies on trial and error, guided by rewards and penalties. The agent takes actions in an environment, receives feedback in the form of rewards or penalties, and uses this feedback to improve its future actions. The goal of reinforcement learning is to maximize cumulative reward over time by learning an optimal policy, that is, a strategy that defines the best action to take in each state of the environment. Key techniques in RL include value-based methods like Q-learning, which estimate the value of taking specific actions in specific states, and policy-based methods, which directly optimize the policy.

Another advanced approach is deep reinforcement learning, which combines deep neural networks with RL algorithms to handle complex, high-dimensional environments such as video games or robotics. RL is widely used in applications like autonomous driving, robotics, game playing (e.g., AlphaGo), and recommendation systems. However, it requires significant computational resources and careful tuning to balance exploration (trying new actions) and exploitation (using known strategies). Overall, reinforcement learning offers powerful techniques for solving sequential decision-making problems where explicit programming is difficult.
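To make the value-based idea concrete, here is a minimal sketch of tabular Q-learning with epsilon-greedy exploration. The corridor environment, the function names (`step`, `train_q_learning`), and the hyperparameter values are all assumptions made up for illustration; real problems have far larger state spaces and usually need more careful tuning.

```python
import random

# Hypothetical toy environment: a corridor of 5 states (0..4).
# The agent starts in state 0 and earns reward 1.0 when it reaches state 4.
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = (-1, 1)  # move left, move right

def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

def train_q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # Q-table: one row per state, one estimated value per action.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                # Exploration: try a random action.
                a = rng.randrange(len(ACTIONS))
            else:
                # Exploitation: pick the best known action, breaking ties randomly.
                best = max(q[state])
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move Q(s, a) toward reward + discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q_table = train_q_learning()
# Greedy policy for every non-terminal state.
policy = [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q_table[s][i])]
          for s in range(GOAL)]
print(policy)  # [1, 1, 1, 1] -> always move right, toward the goal
```

The `epsilon` parameter is where the exploration versus exploitation trade-off shows up: a higher value tries more random actions, a lower value leans on what the table already knows.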

Natural Language Processing (NLP) Techniques

- Tokenization: The process of breaking down text into smaller units like words, phrases, or sentences, which serve as the basic building blocks for analysis.

- Part-of-Speech Tagging: Assigns grammatical categories (e.g., noun, verb, adjective) to each token, helping understand the syntactic structure of sentences.

- Named Entity Recognition (NER): Identifies and classifies key entities in text, such as names of people, organizations, locations, dates, and more.

- Sentiment Analysis: Determines the emotional tone or opinion expressed in a piece of text, widely used in social media monitoring and customer feedback analysis.

- Machine Translation: Automatically translates text from one language to another, as in services like Google Translate, typically using neural network models.

- Text Summarization: Produces concise summaries of longer documents, either by extracting key sentences (extractive) or generating new sentences (abstractive).

- Language Modeling: Predicts the next word or sequence of words in a sentence, forming the basis for applications like autocomplete and conversational AI.
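Two of the techniques above, tokenization and language modeling, can be sketched in a few lines. This is a deliberately tiny illustration: the regex tokenizer, the bigram counting approach, the function names, and the three-sentence corpus are all assumptions chosen for brevity, and modern systems use learned subword tokenizers and neural language models instead.

```python
import re
from collections import Counter, defaultdict

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def train_bigram_model(sentences):
    """Count, for every word, which words follow it in the corpus."""
    following = defaultdict(Counter)
    for sentence in sentences:
        tokens = tokenize(sentence)
        for current_word, next_word in zip(tokens, tokens[1:]):
            following[current_word][next_word] += 1
    return following

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    followers = model.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None

# Hypothetical toy corpus.
corpus = [
    "The cat sat on the mat.",
    "The cat chased the mouse.",
    "The dog sat on the rug.",
]
model = train_bigram_model(corpus)

print(tokenize("The cat sat on the mat."))  # ['the', 'cat', 'sat', 'on', 'the', 'mat']
print(predict_next(model, "the"))           # cat
print(predict_next(model, "sat"))           # on
```

Autocomplete works on the same principle, only with much longer context and a far larger vocabulary.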

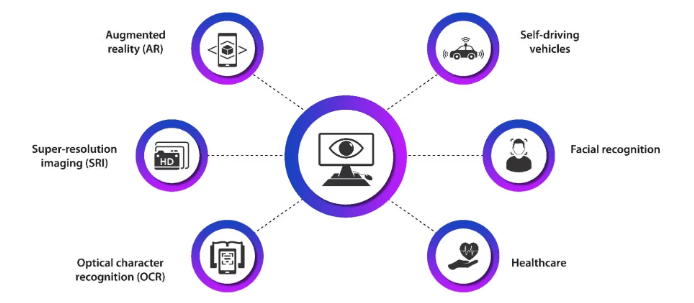

Computer Vision Techniques

- Image Classification: Assigns a label to an entire image, identifying the main object or scene, using algorithms like convolutional neural networks (CNNs).

- Object Detection: Locates and classifies multiple objects within an image by drawing bounding boxes around them. Popular methods include YOLO (You Only Look Once) and Faster R-CNN.

- Image Segmentation: Divides an image into segments or regions to identify objects at the pixel level. Semantic segmentation assigns a class label to every pixel, while instance segmentation also distinguishes between individual objects of the same class.

- Feature Extraction: Detects important visual features such as edges, corners, or textures. Techniques include SIFT (Scale-Invariant Feature Transform) and SURF (Speeded-Up Robust Features).

- Optical Character Recognition (OCR): Converts images of text into machine-readable text, enabling digitization of documents and real-time text recognition.

- 3D Reconstruction: Creates three-dimensional models from two-dimensional images or video streams, useful in augmented reality and robotics.

- Facial Recognition: Identifies or verifies individuals by analyzing facial features using deep learning models and embedding techniques.
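As a rough illustration of low-level feature extraction, the sketch below detects edges with the classic Sobel operator. It is not SIFT or SURF, which are considerably more involved; the nested-list image and the function names are assumptions made so the example runs without any image library.

```python
import math

# Sobel kernels: respond to horizontal and vertical intensity changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def apply_kernel(image, kernel, row, col):
    """Weighted sum of the 3x3 neighbourhood centred on (row, col)."""
    return sum(kernel[i][j] * image[row + i - 1][col + j - 1]
               for i in range(3) for j in range(3))

def sobel_edges(image):
    """Return the gradient magnitude per pixel (border pixels are left at 0)."""
    height, width = len(image), len(image[0])
    edges = [[0.0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            gx = apply_kernel(image, SOBEL_X, r, c)
            gy = apply_kernel(image, SOBEL_Y, r, c)
            edges[r][c] = math.hypot(gx, gy)
    return edges

# Hypothetical 5x6 grayscale image: dark left half, bright right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_edges(image)
print(edges[2])  # [0.0, 0.0, 1020.0, 1020.0, 0.0, 0.0]
```

The strong responses sit exactly on the boundary between the dark and bright regions, which is the kind of signal that later stages (or the early layers of a CNN) build on.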

AI Optimization Techniques

- Regularization: Techniques like L1 (Lasso) and L2 (Ridge) add penalties to the loss function to prevent overfitting by discouraging overly complex models.

- Gradient Descent: A fundamental optimization algorithm that iteratively adjusts model parameters to minimize the loss function by moving in the direction of the steepest descent.

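- Stochastic and Mini-Batch Gradient Descent: Variants of gradient descent that update parameters using a single example or a small random subset of the data at each step. Each update is much cheaper than a full pass over the dataset, and mini-batches smooth out some of the noise of single-example updates.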

- Hyperparameter Tuning: The process of searching for the best model hyperparameters (e.g., learning rate, batch size) using methods such as grid search, random search, or Bayesian optimization to enhance model performance.

- Pruning and Quantization: Methods to reduce model size and computational load by removing unnecessary neurons or reducing precision, enabling faster inference without major accuracy loss.

- Knowledge Distillation: A technique where a smaller “student” model learns to mimic a larger “teacher” model, achieving similar performance with fewer resources.

- Evolutionary Algorithms: Optimization inspired by natural selection, using mechanisms like mutation and crossover to explore the search space and find optimal model parameters.
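Several of the ideas above fit into one short sketch: mini-batch gradient descent on a one-variable linear model, with an optional L2 penalty on the weight. The function name `minibatch_gd`, the toy data, and the hyperparameter values (`lr`, `l2`, `batch_size`) are assumptions for illustration only, not recommended settings.

```python
import random

def minibatch_gd(xs, ys, lr=0.05, l2=0.0, batch_size=4, epochs=500, seed=0):
    """Fit y ~ w * x + b by minimizing mean squared error plus l2 * w**2."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # a fresh random split into mini-batches each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            errors = [(w * xs[i] + b) - ys[i] for i in batch]
            # Gradients of the batch loss with respect to w and b.
            grad_w = 2 * sum(e * xs[i] for e, i in zip(errors, batch)) / len(batch)
            grad_w += 2 * l2 * w  # contribution of the L2 penalty
            grad_b = 2 * sum(errors) / len(batch)
            # Step against the gradient, i.e. in the direction of steepest descent.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Hypothetical noise-free data generated from y = 2x + 1.
xs = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
ys = [2.0 * x + 1.0 for x in xs]

w, b = minibatch_gd(xs, ys)
w_reg, b_reg = minibatch_gd(xs, ys, l2=0.5)
print(round(w, 3), round(b, 3))  # close to the true slope 2 and intercept 1
print(w_reg < w)                 # the L2 penalty shrinks the weight
```

Hyperparameter tuning, in this setting, would amount to calling `minibatch_gd` with different `lr`, `l2`, and `batch_size` values and comparing the results on held-out data.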

Neural Networks and Deep Learning

Neural networks are the core technology of deep learning, loosely inspired by the structure and function of the human brain. They consist of layers of interconnected nodes, called neurons, which process and transmit information. Each neuron computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function to produce an output. Deep learning refers to neural networks with many hidden layers, which lets the model learn complex patterns and representations from large amounts of data. These multi-layered networks excel at handling unstructured data such as images, audio, and text, which traditional algorithms struggle to process effectively. Training a deep neural network means adjusting its weights and biases to reduce the error between predicted and actual outputs: backpropagation computes how much each parameter contributed to that error, and an optimizer such as gradient descent updates the parameters accordingly.

Deep learning powers numerous applications, including image and speech recognition, natural language processing, autonomous vehicles, and medical diagnosis. Advances in hardware, such as GPUs, and the availability of big data have accelerated deep learning research and deployment. Despite their impressive capabilities, neural networks require substantial computational resources and careful tuning to avoid issues like overfitting. Overall, neural networks and deep learning have revolutionized artificial intelligence by enabling machines to perform tasks that previously required human intelligence.
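The neuron computation and the weight updates described above can be sketched with a single sigmoid neuron trained on the logical OR function. This is an assumption-laden toy (one neuron, squared error, hand-picked learning rate), not a deep network; in a real multi-layer network, backpropagation applies the same chain rule layer by layer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(weights, bias, inputs):
    """One neuron: weighted sum of the inputs, plus a bias, through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train_neuron(data, lr=0.5, epochs=2000):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in data:
            output = forward(weights, bias, inputs)
            # Chain rule for the loss 0.5 * (output - target)**2:
            # dLoss/dz = (output - target) * sigmoid'(z),
            # where sigmoid'(z) = output * (1 - output).
            delta = (output - target) * output * (1.0 - output)
            # dz/dw_i = x_i and dz/dbias = 1, so each parameter moves against its gradient.
            weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
            bias -= lr * delta
    return weights, bias

# Truth table of the OR function as (inputs, target) pairs.
OR_GATE = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train_neuron(OR_GATE)
predictions = [round(forward(weights, bias, x)) for x, _ in OR_GATE]
print(predictions)  # [0, 1, 1, 1]
```

A single neuron can only learn linearly separable functions like OR; stacking neurons into hidden layers is what lets deep networks model more complex patterns.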

Transfer Learning in AI

Transfer learning is a technique in artificial intelligence that enables models to leverage knowledge gained from one task to improve performance on a different, but related, task. Instead of training a model from scratch, which can be time-consuming and data-intensive, transfer learning uses a pre-trained model, often trained on a large dataset, as a starting point. The model’s learned features, such as patterns in images or language structures, are then fine-tuned with a smaller, task-specific dataset. This approach is especially useful when there is limited labeled data available for the target task, and it significantly reduces the computational resources and training time required. Transfer learning has been highly successful in fields like computer vision, where models pre-trained on massive image databases like ImageNet can be adapted to recognize specific objects or medical images. It’s also widely used in natural language processing, with models like BERT and GPT being fine-tuned for tasks such as sentiment analysis, translation, or question answering. By building on existing knowledge, transfer learning accelerates AI development, often improves accuracy, and broadens applicability across domains, making it a powerful and efficient tool in modern AI workflows.
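The core mechanic, reusing frozen features and training only a small new part, can be sketched without any deep learning library. Everything here is a stand-in: `FROZEN_WEIGHTS` is a hypothetical placeholder for weights learned on a source task (it does not come from a real pre-trained model), and the eight-point dataset is invented. In practice the frozen part would be a network such as one pre-trained on ImageNet.

```python
import math

# Hypothetical "pre-trained" layer: fixed weights standing in for features
# learned on a large source task. They are never updated below.
FROZEN_WEIGHTS = ((1.0, -1.0), (-1.0, 1.0))

def extract_features(point):
    """Frozen feature extractor: a linear layer followed by ReLU."""
    return [max(0.0, sum(w * x for w, x in zip(row, point)))
            for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(head_weights, head_bias, point):
    features = extract_features(point)
    return sigmoid(sum(w * f for w, f in zip(head_weights, features)) + head_bias)

def fine_tune_head(data, lr=0.5, epochs=500):
    """Train only the new classification head on the small target dataset."""
    head_weights, head_bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for point, label in data:
            features = extract_features(point)
            output = sigmoid(sum(w * f for w, f in zip(head_weights, features)) + head_bias)
            # Gradient of the cross-entropy loss with respect to the pre-activation.
            error = output - label
            head_weights = [w - lr * error * f for w, f in zip(head_weights, features)]
            head_bias -= lr * error
    return head_weights, head_bias

# Hypothetical target task with only eight labeled points:
# label 1 when the two coordinates differ by more than 1.
target_data = [
    ((0.0, 0.0), 0), ((1.0, 1.0), 0), ((2.0, 2.5), 0), ((2.0, 2.0), 0),
    ((3.0, 0.0), 1), ((0.0, 3.0), 1), ((1.0, 4.0), 1), ((4.0, 1.5), 1),
]
head_weights, head_bias = fine_tune_head(target_data)
predictions = [round(predict(head_weights, head_bias, p)) for p, _ in target_data]
print(predictions)  # [0, 0, 0, 0, 1, 1, 1, 1]
```

The target task is not linearly separable in the raw coordinates, but it is in the frozen feature space, which is why a tiny head trained on a handful of examples is enough here. Full fine-tuning would also update the extractor's weights, usually with a small learning rate.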

Feature Engineering and Selection

Feature engineering and selection are critical steps in building effective machine learning models. Feature engineering involves creating new input variables, or features, from raw data to improve a model’s predictive power. This can include transforming existing data, extracting relevant information, handling missing values, or encoding categorical variables into numerical formats. Well-engineered features can reveal hidden patterns and relationships that enhance model accuracy. Feature selection, on the other hand, focuses on identifying the features that contribute most to the model’s performance while removing redundant or irrelevant ones. This helps reduce model complexity, improve training speed, and prevent overfitting. Common feature selection methods include filter techniques (like correlation coefficients), wrapper methods (such as recursive feature elimination), and embedded methods (which perform feature selection during model training, as L1 regularization does). Effective feature engineering and selection not only boost model performance but also make the model more interpretable and easier to maintain. In many real-world applications, these steps require domain expertise to understand which aspects of the data are most relevant. Overall, investing time in thoughtful feature engineering and selection is essential for developing robust, efficient, and accurate AI and machine learning solutions.
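The steps above can be sketched end to end on a toy table: fill in a missing value, one-hot encode a categorical column, then apply a simple correlation filter. The housing-style columns, the helper names, and the 0.5 threshold are all hypothetical choices for illustration; in practice libraries such as pandas and scikit-learn provide these operations.

```python
import math

def impute_mean(values):
    """Replace missing entries (None) with the mean of the known values."""
    known = [v for v in values if v is not None]
    mean = sum(known) / len(known)
    return [mean if v is None else v for v in values]

def one_hot(values):
    """Encode a categorical column as one 0/1 column per category."""
    return {c: [1.0 if v == c else 0.0 for v in values] for c in sorted(set(values))}

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long columns."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0

def select_features(features, target, threshold=0.5):
    """Filter method: keep features whose absolute correlation meets the threshold."""
    return [name for name, column in features.items()
            if abs(pearson(column, target)) >= threshold]

# Hypothetical toy dataset: one numeric column with a gap, one categorical column.
size_m2 = [50.0, None, 80.0, 120.0, 100.0, 60.0]
city = ["A", "B", "A", "B", "A", "B"]
price = [150.0, 210.0, 240.0, 360.0, 300.0, 180.0]

features = {"size_m2": impute_mean(size_m2)}
for category, column in one_hot(city).items():
    features[f"city_{category}"] = column

selected = select_features(features, price)
print(selected)  # ['size_m2']
```

A filter like this looks at each feature in isolation, so it can miss features that only matter in combination; wrapper and embedded methods address that at a higher computational cost.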