- Definition of MLOps

- Need for MLOps in Industry

- ML Lifecycle Management

- Continuous Integration/Deployment (CI/CD)

- Version Control for Models

- Monitoring and Logging

- Tools and Frameworks

- Real-World Use Cases

- Challenges and Solutions

- Summary

Definition of MLOps

MLOps, short for Machine Learning Operations, is a set of practices that combines machine learning (ML), software engineering, and DevOps principles to streamline the deployment, monitoring, and maintenance of ML models in production. DevOps aims to shorten the software development lifecycle while maintaining high quality. MLOps does the same for ML workflows: it automates the ML lifecycle and promotes collaboration between data scientists and operations teams. At its core, MLOps is about deploying ML models reliably, scalably, and efficiently. It covers everything from model training and evaluation to deployment, version control, continuous integration/continuous deployment (CI/CD), monitoring, and retraining.

Need for MLOps in Industry

The rapid growth of machine learning applications has exposed several challenges in moving models from research or development into production. Without a systematic approach, models often fail to scale, degrade in performance, or become difficult to reproduce. To move from development notebooks to scalable, enterprise-grade systems, teams must handle data drift, ensure reproducibility, and monitor performance continuously. Fields like healthcare, finance, and manufacturing are building ML models into their decision-making. As a result, organizations need workflows that let data scientists, engineers, and business stakeholders work together smoothly. Good MLOps frameworks address technical issues such as keeping environments consistent and managing model versions. They also provide the compliance and governance mechanisms needed for traceability, accountability, and regulatory adherence. By adopting effective MLOps practices, companies can reduce the risks tied to model quality and simplify deployment. They can also turn machine learning from an experimental activity into a reliable, predictable business capability.

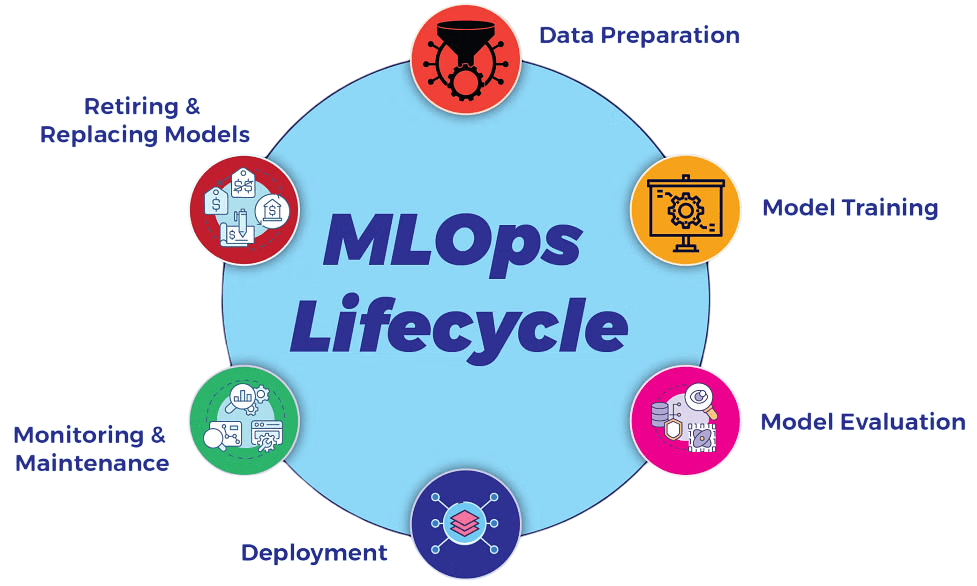

ML Lifecycle Management

MLOps enables comprehensive management of the ML lifecycle, which includes the following stages:

- Data Collection and Preparation: Data is gathered, cleaned, and transformed. Version control and metadata tracking are key at this stage to ensure consistency.

- Model Development: Data scientists build, experiment, and validate models using tools like Jupyter, TensorFlow, PyTorch, or Scikit-learn.

- Model Training and Validation: Training pipelines are created using orchestration tools. Cross-validation, hyperparameter tuning, and metric tracking are essential here.

- Model Evaluation: Trained models are evaluated on validation/test datasets. Model cards or reports help document strengths and weaknesses.

- Model Deployment: The chosen model is deployed to production as a batch job, a REST API, or an edge deployment.

- Monitoring and Feedback: Performance monitoring (accuracy, latency, etc.), data drift detection, and user feedback are incorporated to assess real-world behavior.

- Model Retraining: As data evolves, retraining pipelines automatically update models, ensuring relevance and accuracy over time.

ML Lifecycle Management ensures that each of these stages is automated, reproducible, and monitored to close the loop between experimentation and production.
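The stages above can be sketched as a chain of plain functions ending in a deployment gate. This is a minimal illustration only. It assumes a synthetic one-feature dataset and a least-squares line as the "model", and the function names and the MAE threshold are hypothetical. A real pipeline would run each stage as a step in an orchestrator such as Kubeflow Pipelines or Apache Airflow.

```python
import random
from statistics import mean

def collect_and_prepare(n=200, seed=0):
    """Data collection/preparation: synthetic rows following y = 2x + 1 plus noise."""
    rng = random.Random(seed)  # a fixed seed keeps the run reproducible
    xs = [rng.uniform(0, 10) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.1)) for x in xs]

def split(rows, test_fraction=0.2):
    """Hold out the last part of the data for evaluation."""
    cut = int(len(rows) * (1 - test_fraction))
    return rows[:cut], rows[cut:]

def train(rows):
    """Training: fit slope and intercept by ordinary least squares."""
    xs, ys = zip(*rows)
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in rows) / sum((x - mx) ** 2 for x in xs)
    return {"slope": slope, "intercept": my - slope * mx}

def evaluate(model, rows):
    """Evaluation: mean absolute error on held-out data."""
    return mean(abs(model["slope"] * x + model["intercept"] - y) for x, y in rows)

def run_pipeline(max_mae=0.5, seed=0):
    rows = collect_and_prepare(seed=seed)
    train_rows, test_rows = split(rows)
    model = train(train_rows)
    mae = evaluate(model, test_rows)
    # Deployment gate: only models that meet the quality bar are promoted.
    return {"model": model, "mae": mae, "promoted": mae <= max_mae}
```

Retraining, in this picture, is simply calling `run_pipeline` again on fresh data. The gate at the end keeps a worse model from replacing the one already in production.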

Continuous Integration/Deployment (CI/CD)

CI/CD is a crucial pillar of MLOps. Traditional CI/CD pipelines focus on software code; MLOps extends them to cover data, models, and configurations.

CI (Continuous Integration) in ML

- Code, data pipelines, and model artifacts are automatically tested and validated when changes are made.

- Automated unit tests for data schema validation and model performance checks.

- Integration of testing frameworks such as pytest and Great Expectations, along with MLflow's model evaluation utilities.
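A data-schema check of the kind a CI job might run can be quite small. The function below is a plain-Python sketch, not the Great Expectations API. The schema format (column name mapped to an expected type and an optional range) and the sample columns are assumptions for illustration.

```python
from typing import Any

# Hypothetical schema: column -> (expected type, minimum, maximum); None means unbounded.
SCHEMA = {
    "age": (int, 0, 120),
    "income": (float, 0.0, None),
    "country": (str, None, None),
}

def validate_rows(rows: list[dict[str, Any]], schema=SCHEMA) -> list[str]:
    """Return human-readable schema violations; an empty list means the data is valid."""
    errors = []
    for i, row in enumerate(rows):
        missing = schema.keys() - row.keys()
        if missing:
            errors.append(f"row {i}: missing columns {sorted(missing)}")
        for col, (typ, lo, hi) in schema.items():
            if col not in row:
                continue
            value = row[col]
            if not isinstance(value, typ):
                errors.append(f"row {i}: {col} should be {typ.__name__}, got {type(value).__name__}")
                continue
            if lo is not None and value < lo:
                errors.append(f"row {i}: {col}={value} is below {lo}")
            if hi is not None and value > hi:
                errors.append(f"row {i}: {col}={value} is above {hi}")
    return errors

def test_training_data_matches_schema():
    # pytest collects functions named test_*; any violation fails the CI build.
    sample = [{"age": 34, "income": 52000.0, "country": "IN"}]
    assert validate_rows(sample) == []
```

Returning every violation, instead of stopping at the first one, makes the CI log more useful when a data pipeline change breaks several columns at once.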

CD (Continuous Deployment) in ML

- Once validated, the model is packaged and deployed automatically to a production or staging environment.

- Canary releases, A/B testing, or shadow deployment are used to safely introduce new models.
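A canary release routes a small, stable fraction of traffic to the new model. One common approach, sketched below, hashes a user ID so that each user consistently reaches the same version. The 5% default and the "canary"/"stable" labels are assumptions for illustration.

```python
import hashlib

def choose_model(user_id: str, canary_fraction: float = 0.05) -> str:
    """Deterministically assign a user to the 'canary' or 'stable' model version."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the first 6 bytes (48 bits) of the hash to a float in [0, 1).
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "canary" if bucket < canary_fraction else "stable"
```

Hashing, rather than random sampling per request, keeps each user's experience consistent. It also makes A/B comparisons cleaner. Raising `canary_fraction` step by step widens the rollout without reshuffling users who are already on the canary.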

Tools like GitHub Actions, GitLab CI, Jenkins, and Azure Pipelines, when paired with ML-specific tools (e.g., MLflow, TFX, Seldon), help build robust ML CI/CD workflows.

Version Control for Models

MLOps teams extend version control beyond code to cover datasets, features, model configurations, and trained weights. This is crucial for reproducibility, smoother experiment tracking, and reliable performance analysis. With tools like DVC, MLflow, Weights & Biases, and Pachyderm, data science teams can manage complex machine learning workflows. These tools make it easier to roll back changes after a failure. They also help teams understand how changes in code and data affect model performance. A clear versioning strategy improves technical transparency and simplifies audits, which matters most in regulated industries where compliance and traceability are essential. Together, these practices help organizations keep their machine learning systems high-quality, responsible, and adaptable as technology changes.
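The core idea behind tools like DVC is to identify an artifact by a hash of its content rather than by its filename. The few lines below illustrate that idea as a simplified sketch. They do not reproduce DVC's or MLflow's storage format, and `model_version`, `register`, and the in-memory `registry` are hypothetical.

```python
import hashlib
import json

def content_hash(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def model_version(dataset: bytes, params: dict, code_commit: str) -> str:
    """Derive a version ID from everything that determines the trained model."""
    manifest = {"data": content_hash(dataset), "params": params, "code": code_commit}
    # sort_keys makes the JSON, and therefore the hash, independent of dict ordering.
    blob = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return content_hash(blob)[:12]

registry = {}  # hypothetical registry: version ID -> what produced it and how it scored

def register(dataset: bytes, params: dict, code_commit: str, metrics: dict) -> str:
    version = model_version(dataset, params, code_commit)
    registry[version] = {"params": params, "code": code_commit, "metrics": metrics}
    return version
```

Because the version ID changes whenever the data, hyperparameters, or code commit change, two runs with the same ID are reproducible from the same inputs. Rolling back then means redeploying the artifacts recorded under an earlier ID.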

Monitoring and Logging

Post-deployment monitoring is essential to ensure that machine learning models perform well in real-world settings. Unlike traditional software, ML systems can degrade silently because of data drift (changes in the input distribution) and concept drift (changes in the relationship between inputs and the target). Teams therefore need to watch key metrics closely. Important monitoring parameters include prediction accuracy on live data, shifts in the input data distribution, and system indicators such as latency, throughput, and resource usage. With tools like Prometheus, Grafana, Evidently AI, and Arize AI, organizations can build logging and observability systems that track model behavior, flag anomalies, and help diagnose root causes. Effective monitoring keeps models reliable. It also yields insight into business metrics such as conversion rates and fraud detection accuracy, which helps organizations improve and trust their ML deployments over time.
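A widely used way to quantify a shift in input distribution is the Population Stability Index (PSI). PSI compares binned feature frequencies between a reference sample, such as training data, and live traffic: PSI = Σ (actual_i − expected_i) · ln(actual_i / expected_i). The sketch below is plain Python. The equal-width binning, the smoothing constant `eps`, and the often-quoted alert level of about 0.2 are conventions, not fixed rules. Tools such as Evidently AI provide more complete drift tests.

```python
import math

def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the reference range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps avoids log(0) for bins that one sample never fills.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

In a monitoring job, this would typically run per feature on a schedule, for example raising an alert when `psi(train_values, live_values)` exceeds the chosen threshold.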

Tools and Frameworks

Numerous open-source and commercial tools have emerged to support MLOps workflows:

Experiment Tracking

- MLflow

- Weights & Biases

- Neptune.ai

Data & Feature Management

- DVC

- Feast (Feature Store)

- LakeFS

Model Deployment

- Seldon Core

- BentoML

- KFServing (now KServe)

- TorchServe

Pipeline Orchestration

- Kubeflow Pipelines

- Apache Airflow

- Metaflow

- Dagster

Monitoring

- Evidently AI

- Arize AI

- Prometheus & Grafana

These tools can be used individually or combined to create an end-to-end MLOps platform tailored to specific needs.

Real-World Use Cases

- Netflix: Netflix uses MLOps for recommendation systems, dynamic content generation, and A/B testing. Models are trained and retrained on user behavior data and automatically deployed using CI/CD.

- Uber Michelangelo: Uber’s internal ML platform handles the end-to-end model lifecycle from feature engineering to deployment and monitoring at scale. MLOps practices enable quick model iteration and robust deployment.

- Airbnb: Airbnb uses MLOps to operationalize fraud detection and search ranking models, relying on Apache Airflow and custom tools for workflow orchestration and monitoring.

- Healthcare: In healthcare, AI models assist with diagnostics and patient monitoring. MLOps helps ensure these models remain accurate over time, comply with regulations, and handle sensitive data responsibly.

- Retail (Walmart, Amazon): Retail giants leverage MLOps for demand forecasting, dynamic pricing, and inventory management. With rapid data changes, continuous training and monitoring are essential.

Challenges and Solutions

Model Degradation

- Challenge: Performance drops over time due to data drift.

- Solution: Implement data and concept drift detection; automate retraining.

Scalability

- Challenge: Training large models requires significant computational resources.

- Solution: Use cloud platforms (AWS SageMaker, GCP Vertex AI) and distributed training.

Reproducibility

- Challenge: Inconsistent environments and lack of version control.

- Solution: Use containerization (Docker), virtual environments, and versioning tools (DVC, MLflow).

Security and Compliance

- Challenge: Handling PII and regulatory constraints.

- Solution: Apply access control, encryption, and audit trails, and align with regulations such as HIPAA and GDPR.

Lack of Expertise

- Challenge: Shortage of MLOps-skilled professionals.

- Solution: Invest in training, build cross-functional teams, and adopt low-code MLOps platforms.
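For model degradation, "automate retraining" usually comes down to a rule that compares live performance with a baseline. The sketch below tracks rolling accuracy over labeled feedback and signals when retraining should start. The class name, window size, and tolerance are hypothetical choices. In practice, the signal would trigger a training pipeline in an orchestrator rather than return a boolean.

```python
from collections import deque
from typing import Optional

class DegradationMonitor:
    """Flags retraining when rolling accuracy falls too far below a baseline."""

    def __init__(self, baseline_accuracy: float, window: int = 500, tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # 1 = correct prediction, 0 = wrong

    def record(self, prediction, label) -> None:
        self.outcomes.append(int(prediction == label))

    def rolling_accuracy(self) -> Optional[float]:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def should_retrain(self) -> bool:
        # Wait for a full window so a few early errors do not trigger retraining.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance
```

The fixed-size window means old outcomes age out automatically, so the monitor reflects recent behavior. The tolerance keeps normal noise in accuracy from triggering constant retraining.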

Summary

MLOps is changing how organizations develop, deploy, and maintain machine learning systems. It addresses the challenges of ML workflows that go beyond traditional software development. By focusing on data management, experimentation, reproducibility, and continuous improvement, MLOps helps companies shorten time-to-market for ML products while improving model reliability and maintainability. It also encourages close collaboration among teams and gives organizations a solid framework for scaling AI solutions. Despite the technical and organizational challenges involved, MLOps lets businesses deliver intelligent, data-driven solutions more efficiently and accurately. As AI becomes central to competitive advantage, mastering MLOps is essential for companies that want to realize the full potential of machine learning.