- What is Azure Load Balancer?

- Types of Azure Load Balancers

- How Azure Load Balancer Works

- Setting Up Azure Load Balancer

- Configuring Load Balancer Rules

- Backend Pool and Health Probes

- Traffic Distribution Methods

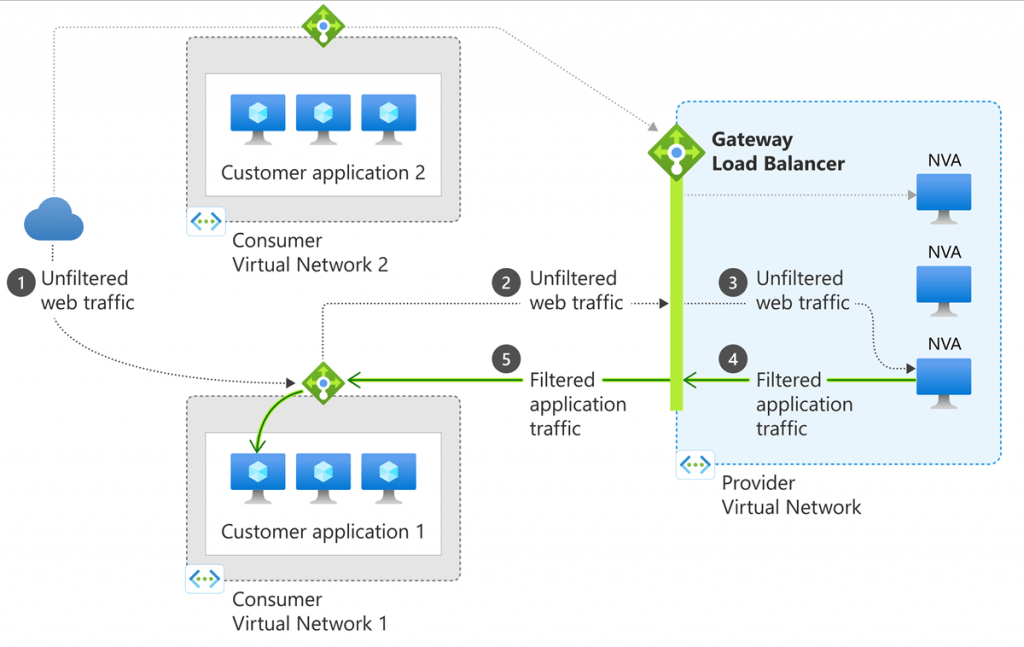

- Security and Firewall Considerations

- Azure Load Balancer vs Other Load Balancers

- Everyday Use Cases for Azure Load Balancer

- Monitoring and Troubleshooting Azure Load Balancer

- Pricing and Cost Optimization

- Conclusion

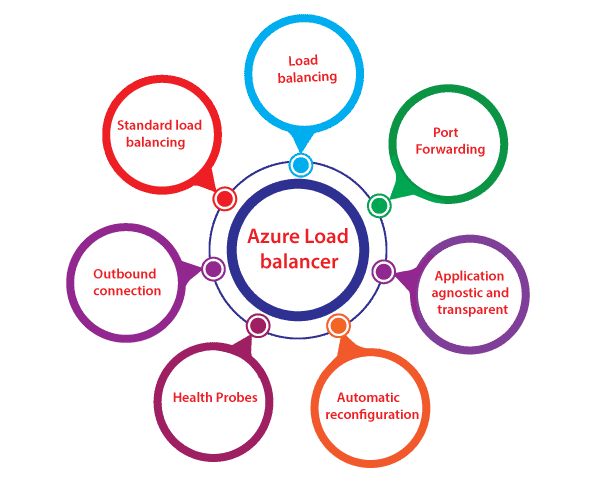

What is Azure Load Balancer?

Azure Load Balancer is a highly available, fully managed service that balances traffic across multiple instances of applications or services deployed in Microsoft Azure. It keeps applications and services available and reliable by distributing incoming network traffic to healthy resources so that no single instance receives too many requests. It can manage traffic for both internal and external workloads. In this guide, we explain Azure Load Balancer’s types, configuration, and use cases, contrast it with other load balancing products, and cover security considerations, monitoring, and cost optimization strategies.

Types of Azure Load Balancers

Azure Load Balancer has two main types:

- Public Load Balancer: A Public Load Balancer directs internet-facing traffic to backend resources. It acts as a gateway to your services, routing incoming traffic from the internet to your virtual machines (VMs) or other resources in your Azure virtual network (VNet). It is highly scalable, handling millions of requests per second with high availability and low latency. It suits use cases such as web applications, APIs, web servers, or any publicly accessible service.

- Internal Load Balancer (ILB): An Internal Load Balancer distributes traffic to internal resources in a private network (e.g., within a VNet). Unlike the Public Load Balancer, the ILB is not internet-facing but routes traffic between VMs or services in the same network. This is the appropriate type for situations where internal applications or microservices, such as databases, backend services, or internal APIs, must scale without exposing them to external users.


How Azure Load Balancer Works

Azure Load Balancer works at Layer 4 (Transport Layer) of the OSI model, distributing traffic based on IP addresses and TCP/UDP ports. It uses hash-based algorithms to balance traffic, with the goals of high availability, fault tolerance, and efficient resource utilization.

Traffic Distribution Mechanism:

- Frontend IP: A Load Balancer has a public or private IP address that acts as the front end for accepting incoming traffic.

- Backend Pool: The backend pool comprises the virtual machines or services to which traffic is routed. Instances can join or leave the pool as the deployment scales, and only those that pass their health checks receive traffic. Additionally, integrating Azure CDN with web apps and storage can improve performance by caching content closer to users, reducing latency and improving scalability.

- Health Probes: Azure Load Balancer runs health checks against the backend resources to determine whether they are available. If a resource fails the health probe, new traffic is sent only to the remaining healthy resources in the backend pool.

- Traffic flow: A client sends a request to the frontend IP of the Load Balancer. The Load Balancer tracks the health of the resources in the backend pool through its probes and forwards the traffic to a healthy resource chosen by the configured load-balancing algorithm.
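The flow above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Azure's actual implementation: the `ProbeTracker` class, the `pick_backend` function, the VM names, and the use of SHA-256 with a modulo are all hypothetical stand-ins. The sketch marks a backend unhealthy after a configurable number of consecutive failed probes, then maps each flow onto one of the healthy backends by hashing either the full 5-tuple or, for source IP affinity, only the client and destination addresses.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """The 5-tuple that identifies one TCP/UDP flow."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


class ProbeTracker:
    """Hypothetical helper: a backend is unhealthy after N consecutive failed probes."""

    def __init__(self, backends, unhealthy_threshold=2):
        self.unhealthy_threshold = unhealthy_threshold
        self.failures = {name: 0 for name in backends}

    def record(self, backend, probe_ok):
        # A successful probe resets the counter; a failed probe increments it.
        self.failures[backend] = 0 if probe_ok else self.failures[backend] + 1

    def healthy(self):
        # Backends whose consecutive-failure count is still below the threshold.
        return [b for b, n in self.failures.items() if n < self.unhealthy_threshold]


def pick_backend(flow, healthy_backends, source_ip_affinity=False):
    """Map a flow onto one healthy backend by hashing its tuple (illustrative hash)."""
    if not healthy_backends:
        return None  # no healthy backend can serve the request
    if source_ip_affinity:
        # 2-tuple: every connection from the same client lands on the same backend.
        key = f"{flow.src_ip}|{flow.dst_ip}"
    else:
        # 5-tuple: packets of the same flow land on the same backend.
        key = f"{flow.src_ip}|{flow.src_port}|{flow.dst_ip}|{flow.dst_port}|{flow.protocol}"
    digest = hashlib.sha256(key.encode()).digest()
    return healthy_backends[int.from_bytes(digest[:8], "big") % len(healthy_backends)]


tracker = ProbeTracker(["vm-a", "vm-b", "vm-c"])
tracker.record("vm-b", probe_ok=False)
tracker.record("vm-b", probe_ok=False)  # second consecutive failure: vm-b is unhealthy
flow = Flow("203.0.113.7", 50123, "20.0.0.4", 80, "tcp")
print(pick_backend(flow, tracker.healthy()))  # "vm-a" or "vm-c", never "vm-b"
```

The same flow always maps to the same backend while the healthy set is unchanged. A simple modulo like this reshuffles flows whenever a backend leaves or rejoins, which a production load balancer would handle more carefully.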

Setting Up Azure Load Balancer

Create Load Balancer

- Go to the Azure portal’s Load Balancer service and click “Create.” Select whether you desire a Public or Internal Load Balancer. Provide minimal information, including the resource group, region, and frontend IP configuration (public or private).

Set up the Backend Pool

- The backend pool consists of virtual machines or services to which the traffic will be routed. You can include several virtual machines (VMs) or other Azure resources in the backend pool.

Set up Health Probes

- Health probes check the availability of resources in the backend pool. Select the probe protocol (TCP, HTTP, or HTTPS) and configure the probe settings, such as the probe interval and the unhealthy threshold.

Set up Load Balancer Rules

- Load Balancer rules determine how the Load Balancer distributes traffic. For each rule, you set the protocol (TCP or UDP), the port, and the backend pool to which traffic is routed. You can define separate rules for different types of traffic, based on port and protocol. Note that Azure Application Gateway provides application-level (Layer 7) load balancing, while Azure Load Balancer operates at the transport layer (Layer 4). Application Gateway offers advanced routing capabilities, SSL termination, and Web Application Firewall (WAF) features, allowing you to create custom rules based on HTTP requests.

Set up Outbound Rules (Optional)

- If needed, set up outbound rules to manage outbound traffic from the backend pool. This is useful when your VMs need to reach external resources, such as an API or a database.

Configuring Load Balancer Rules

Load Balancer rules define how traffic is distributed from the frontend IP address to the backend pool. When you set up a rule, you define the following parameters:

- Protocol: The protocol the rule applies to (TCP or UDP).

- Frontend IP Configuration: The public or private IP address on which traffic arrives.

- Backend Pool: The collection of virtual machines or resources to which traffic is sent.

- Port Mapping: Which port on the frontend IP maps to which port on the backend VM. For example, an HTTP request on port 80 might be mapped to port 80 on a backend VM.

Backend Pool and Health Probes

The backend pool in Azure Load Balancer consists of resources such as virtual machines (VMs), virtual machine scale sets, or IP addresses that handle incoming traffic. Instances are added to or removed from the pool as the deployment scales, and only healthy instances are used to process requests. Health probes monitor the status of these backend resources. If a resource fails its health probe (whether a TCP, HTTP, or HTTPS check), the Load Balancer stops sending new connections to it. Once the probe succeeds again, the resource resumes receiving traffic. This approach keeps traffic flowing to available and responsive resources, maintaining high availability and fault tolerance.

Traffic Distribution Methods

Azure Load Balancer supports several traffic distribution methods that control how traffic is spread among backend resources. The most common include:

- Hash-based Distribution (Default): The Load Balancer computes a hash over five values (source IP address, source port, destination IP address, destination port, and protocol) to decide which backend resource serves the request. Packets that belong to the same flow are consistently routed to the same backend resource, but a new connection from the same client may use a different source port and therefore reach a different backend.

- Source IP Affinity (Session Persistence): This method sends traffic from a particular client IP address to the same backend VM for the duration of the session, giving it persistence. It is helpful when applications store session information in memory and need traffic from the same client to reach the same backend.

- Round Robin: This method, common in other load balancers, distributes incoming requests in turn across all available backend resources, regardless of the client’s IP or session. Azure Load Balancer does not expose round robin as a separate distribution mode; when no session affinity is required, the default hash-based distribution gives a comparably even spread of flows.

Security and Firewall Considerations

When configuring Azure Load Balancer, it’s crucial to implement strong security measures to safeguard your resources from unauthorized access. Network Security Groups (NSGs) manage both incoming and outgoing traffic to resources within a virtual network, so that only permitted traffic reaches the VMs behind the load balancer. Azure Firewall adds another layer of security with more granular traffic filtering and network traffic inspection, helping to block malicious activity. To further enhance protection, Azure DDoS Protection is recommended to defend against distributed denial-of-service (DDoS) attacks. This service provides continuous traffic monitoring and automated mitigation of large-scale attacks, helping your load balancer remain resilient even under heavy, malicious traffic. Together, these features help maintain the integrity and availability of your applications.

Azure Load Balancer vs Other Load Balancers

Azure Load Balancer competes with other load balancing products, including:

- AWS Elastic Load Balancer (ELB): AWS ELB provides both application-level and network-level load balancing, as Azure does across its services. However, Azure Load Balancer focuses on Layer 4 load balancing (TCP/UDP), whereas ELB also offers Layer 7 load balancing for HTTP/HTTPS traffic through its Application Load Balancer.

- Google Cloud Load Balancer: Google Cloud Load Balancer provides both regional and global load balancing, as Azure does. However, Azure Load Balancer is best suited to Layer 4 traffic inside the Azure network, whereas Google Cloud Load Balancer also supports HTTP(S) load balancing.

- F5 BIG-IP: F5 BIG-IP is a software and hardware solution for load balancing and application delivery. It is more complex to configure and manage than Azure Load Balancer but provides more sophisticated features such as SSL offloading, application firewalls, and deep application monitoring.

Everyday Use Cases for Azure Load Balancer

Azure Load Balancer supports a variety of workloads, providing high availability and scalability for different types of applications. For web applications, it balances the TCP connections that carry HTTP/HTTPS traffic, maintaining fault tolerance and minimizing downtime. In microservice-based architectures, it spreads traffic across multiple backend services running on different virtual machines, allowing microservices to scale and stay highly available. It is also effective for balancing traffic to databases hosted on virtual machines and to other backend services, providing redundancy and consistent performance. Additionally, Azure Load Balancer is frequently used to distribute traffic across virtual machines and virtual machine scale sets, so that workloads are evenly balanced and resources are used effectively. This versatility makes it a good fit for diverse cloud-based deployments that require reliable traffic distribution.

Monitoring and Troubleshooting Azure Load Balancer

Azure offers several tools to monitor and troubleshoot Azure Load Balancer:

- Azure Monitor: Azure Monitor collects and tracks Load Balancer metrics, such as traffic flow, health probe status, and backend pool health.

- Azure Network Watcher: Network Watcher offers network diagnostic features to monitor traffic patterns, investigate connectivity issues, and analyze performance metrics on your Load Balancer.

- Log Analytics: Azure Log Analytics lets you query and analyze diagnostic logs to diagnose problems with Load Balancer configurations, health probes, and traffic distribution.


Pricing and Cost Optimization

Azure Load Balancer has a straightforward pricing model. The Standard SKU is billed according to the number of configured load-balancing and outbound rules and the amount of data the Load Balancer processes, while public IP addresses used by a Public Load Balancer are billed separately. The Basic SKU carried no charge but offered fewer features and no SLA, and Microsoft has announced its retirement, so the Standard SKU is the recommended choice for production and large-scale deployments. To optimize costs, consolidate rules where possible and use virtual machine (VM) scaling so that only the required resources are active, which reduces the overall cost of the load-balanced deployment. These cost optimization strategies help maintain both performance and budget efficiency in cloud environments.
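As a rough illustration of how a time-based charge and a traffic-based charge combine, here is a minimal Python sketch. The function name `estimate_monthly_cost` and every rate in it are hypothetical placeholders, not Azure prices. Look up the current rates for your region and SKU before relying on any estimate.

```python
def estimate_monthly_cost(hours, hourly_rate, data_gb, per_gb_rate):
    """Return (fixed, data, total) for one month under the assumed rates."""
    fixed = hours * hourly_rate    # time-based charge (e.g. rules or public IPs)
    data = data_gb * per_gb_rate   # traffic-based charge for data processed
    return fixed, data, fixed + data


# Placeholder rates for illustration only; they are NOT real Azure prices.
fixed, data, total = estimate_monthly_cost(
    hours=730, hourly_rate=0.01, data_gb=500, per_gb_rate=0.002
)
print(f"fixed={fixed:.2f} data={data:.2f} total={total:.2f}")
```

Separating the two components makes it easier to see which one dominates a bill: reducing traffic lowers only the data component, while removing unused hourly-billed resources lowers only the fixed one.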

Conclusion

Azure Load Balancer is an essential service in Microsoft’s cloud ecosystem, offering highly available and scalable load balancing for applications and services deployed in Azure. It handles both internal and external traffic, keeping resources such as virtual machines (VMs) and services accessible and well utilized. With its support for public and internal load balancing, Azure Load Balancer accommodates a wide range of use cases, from web applications to microservices and backend systems. By understanding the two types of load balancer (Public and Internal), how to configure them, the role of health probes, and the available traffic distribution methods, users can tune their deployments for reliability and performance.