- Introduction to Distributed Computing

- Key Characteristics and Benefits

- Distributed Computing Architectures

- Challenges and Security Concerns

- Real-World Applications of Distributed Computing

- Future Trends and Innovations

- Conclusion

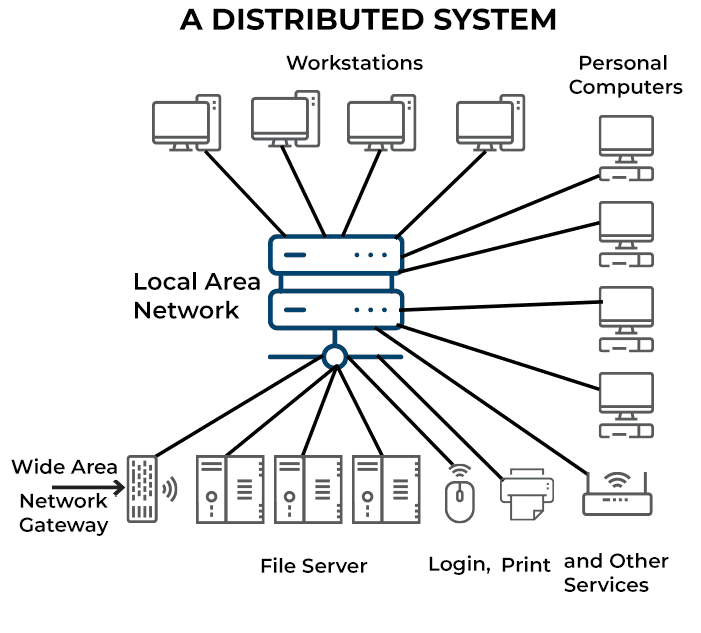

Distributed computing is a model in which multiple independent computers work together to perform complex tasks as a unified system. These interconnected computers, often called nodes, communicate over a network and coordinate their actions to achieve a shared goal. Distributed computing enables resource sharing, scalability, and fault tolerance, making it well suited to large-scale, data-intensive applications. It allows tasks to be executed concurrently across different machines, improving efficiency and performance. Common applications include cloud computing platforms, distributed databases, peer-to-peer networks, and blockchain systems. While it offers significant benefits like flexibility and cost-effectiveness, distributed computing also introduces challenges such as system complexity, data synchronization, and security concerns.

Introduction to Distributed Computing

Distributed computing refers to a system in which computational tasks are spread across multiple interconnected devices, or nodes, working together to perform complex calculations or manage large amounts of data. Unlike traditional centralized computing, where a single machine handles all tasks, distributed computing breaks the workload into smaller, manageable parts and distributes them across a network of computers. The nodes operate independently but collaborate to achieve a shared goal, offering enhanced processing power, fault tolerance, and scalability. Distributed computing systems are essential in today’s technology landscape, powering services like cloud computing, big data processing, and distributed databases. These systems are designed for high availability, reliability, and performance, often processing vast amounts of data across a global network of devices.
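As a rough illustration of breaking a workload into parts and combining the results, the sketch below splits a list of numbers into chunks, hands each chunk to a worker, and sums the partial results. It is a single-machine stand-in: threads play the role of nodes, and the function names (`split_into_chunks`, `sum_of_squares`, `distributed_sum_of_squares`) are hypothetical, chosen only for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, num_chunks):
    """Split a list into num_chunks contiguous pieces of near-equal size."""
    size, remainder = divmod(len(items), num_chunks)
    chunks, start = [], 0
    for i in range(num_chunks):
        # The first `remainder` chunks get one extra item.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The unit of work each simulated node performs on its own chunk."""
    return sum(x * x for x in chunk)


def distributed_sum_of_squares(numbers, num_nodes=4):
    """Scatter chunks to workers, then gather and combine the partial results."""
    chunks = split_into_chunks(numbers, num_nodes)
    # Assumption: threads stand in for nodes. A real system would ship
    # each chunk to a separate machine over the network.
    with ThreadPoolExecutor(max_workers=num_nodes) as pool:
        partial_results = list(pool.map(sum_of_squares, chunks))
    return sum(partial_results)


if __name__ == "__main__":
    print(distributed_sum_of_squares(list(range(1, 11))))  # 385
```

The combine step works here because addition is associative, so partial sums can be merged in any order. Workloads without that property need more coordination between nodes.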

Key Characteristics and Benefits

- Scalability: One of the most significant advantages of distributed computing is its scalability. As demand increases, additional nodes can be added to the network to distribute the computational load. This enables systems to handle growing data volumes or user requests without compromising performance.

- Fault Tolerance and Reliability: Distributed systems are designed to be fault-tolerant, meaning that if one node fails, others can continue operating, preventing system-wide disruptions. Data replication across multiple nodes ensures that the system remains functional even when individual parts fail.

- Parallelism: Tasks can be divided into smaller sub-tasks and processed simultaneously across multiple nodes. This parallel processing improves overall system performance and speeds up computations, making distributed computing ideal for large-scale data analytics and complex simulations.

- Resource Sharing: Distributed computing allows multiple devices to share resources such as storage, processing power, and bandwidth. This optimizes resource usage, ensuring that each device handles only the tasks for which it is best suited, leading to more efficient computing.

- Cost Efficiency: By leveraging a network of low-cost commodity hardware (such as personal computers or cloud resources), distributed computing can offer high processing power at a lower cost than traditional centralized systems.
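To make the fault-tolerance point concrete, here is a minimal in-memory sketch of replication: every write goes to all live replicas, and a read is served by the first live replica that holds the key. The `Node` and `ReplicatedStore` classes are hypothetical and exist only for this illustration. Real systems also handle network failures, partial writes, and re-synchronizing a node that comes back.

```python
class Node:
    """A simulated storage node that can be marked as failed."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.alive = True


class ReplicatedStore:
    """Writes each key to all live replicas; reads from the first live one that has it."""

    def __init__(self, nodes):
        self.nodes = nodes

    def put(self, key, value):
        written = 0
        for node in self.nodes:
            if node.alive:
                node.data[key] = value
                written += 1
        if written == 0:
            raise RuntimeError("no live replicas available for write")
        return written  # number of replicas that accepted the write

    def get(self, key):
        for node in self.nodes:
            if node.alive and key in node.data:
                return node.data[key]
        raise KeyError(key)


if __name__ == "__main__":
    nodes = [Node("a"), Node("b"), Node("c")]
    store = ReplicatedStore(nodes)
    store.put("user:1", "Ada")
    nodes[0].alive = False       # simulate a node failure
    print(store.get("user:1"))   # still served by a surviving replica
```

Note that this sketch does nothing to bring a recovered node up to date. Any write it missed while down stays missing, which is one reason replication and consistency are usually discussed together.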

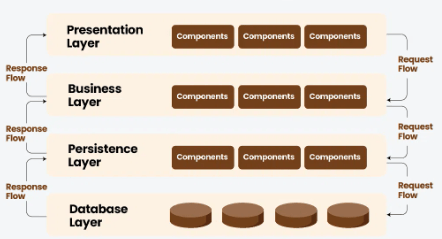

- Client-Server Architecture: In this model, clients (users or applications) request services or resources from a centralized server. The server processes these requests and returns the results to the clients. While this is a simple and widely used architecture, it can suffer from scalability and performance issues as demand increases.

- Peer-to-Peer (P2P) Architecture: In P2P systems, all nodes (peers) are equal, and each can both request and provide services. This decentralized approach helps to distribute the workload more evenly across the system and is commonly used in file-sharing networks or blockchain systems.

- Master-Slave Architecture: This model involves a central node (the master) that controls and manages other worker nodes (the slaves). The master is responsible for task allocation and coordination, while the slaves perform the computations or handle specific tasks. This architecture is often used in parallel computing clusters.

- MapReduce Architecture: Popularized by Hadoop, MapReduce is designed to handle large-scale data processing by breaking down tasks into smaller map and reduce operations. The map function processes data in parallel, and the reduce function aggregates the results. This architecture is widely used in big data processing.

- Service-Oriented Architecture (SOA): SOA is a design principle where distributed services communicate over a network to perform business tasks. Each service is independent and can be accessed through well-defined APIs. SOA allows for modular, flexible, and scalable systems, and it is often used in enterprise applications.
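The map and reduce steps described in the list above can be sketched with a word count, the usual introductory example. This is a single-process illustration of the programming model only, not Hadoop's API. The function names are hypothetical, and the explicit shuffle step (grouping values by key between map and reduce) is handled by the framework in a real deployment.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(mapped_pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: aggregate the values collected for one key."""
    return key, sum(values)


def word_count(documents):
    mapped = []
    for doc in documents:  # in a cluster, each document could be mapped on a different node
        mapped.extend(map_phase(doc))
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["the cat sat", "the dog sat down"]))
```

Because each call to `map_phase` depends only on its own document, and each call to `reduce_phase` only on its own key, both phases can run in parallel without the workers talking to each other.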

- Cloud Computing: Services like AWS, Google Cloud, and Microsoft Azure leverage distributed computing to provide scalable, on-demand computing resources to users worldwide. Cloud platforms distribute workloads across multiple data centers to ensure reliability and scalability.

- Big Data Processing: Distributed computing is crucial for processing large datasets, whether in batch or in near real time. Frameworks like Hadoop and Apache Spark use distributed systems to store and analyze massive amounts of data across a network of nodes.

- Blockchain and Cryptocurrencies: Blockchain technology relies on distributed computing to maintain a decentralized ledger of transactions across a network of nodes. Bitcoin, Ethereum, and other cryptocurrencies use distributed consensus mechanisms to ensure the integrity and security of transactions.

- Distributed Databases: Databases like Cassandra, MongoDB, and Google Spanner are designed to operate in distributed environments, allowing for horizontal scaling and high availability. These databases distribute data across multiple nodes to improve performance and fault tolerance.

- Scientific Simulations: Distributed computing is used in scientific research for running complex simulations, such as climate models, particle physics simulations, and drug discovery. Projects like SETI@home and Folding@home use distributed computing to process large amounts of scientific data.
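One common way to spread data across nodes, as the distributed databases entry above describes, is consistent hashing: nodes and keys are hashed onto the same ring, and each key is stored on the first node found clockwise from its position. The sketch below is a generic illustration of that technique, not the partitioner of any particular database. `HashRing`, `stable_hash`, and the `virtual_nodes` parameter are names assumed for this example.

```python
import bisect
import hashlib


def stable_hash(value):
    """Hash a string to an integer that is stable across runs and machines."""
    return int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Consistent hashing: a key belongs to the first node clockwise from its hash."""

    def __init__(self, nodes, virtual_nodes=100):
        # Each node is placed on the ring many times to even out the load.
        self._ring = sorted(
            (stable_hash(f"{node}#{i}"), node)
            for node in nodes
            for i in range(virtual_nodes)
        )
        self._hashes = [h for h, _ in self._ring]

    def node_for(self, key):
        # Find the first ring position after the key's hash, wrapping around at the end.
        index = bisect.bisect_right(self._hashes, stable_hash(key)) % len(self._ring)
        return self._ring[index][1]


if __name__ == "__main__":
    ring = HashRing(["node-a", "node-b", "node-c"])
    print(ring.node_for("user:42"))
```

The appeal of this scheme for horizontal scaling is that adding a node only moves the keys that now fall to the new node. All other keys stay where they were, which a plain `hash(key) % num_nodes` scheme does not guarantee.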

- Edge Computing: As the number of Internet of Things (IoT) devices grows, edge computing will play an essential role in distributed systems. By processing data closer to the source (at the edge), latency is reduced, and real-time decision-making is enabled.

- Quantum Computing: Quantum computing has the potential to reshape distributed computing by tackling complex problems that traditional systems struggle with. For certain classes of problems, quantum algorithms may offer large speedups, in some cases exponential, over the best known classical approaches.

- 5G Networks: The rollout of 5G networks will enable faster, more reliable communication between distributed nodes, supporting the growth of real-time, data-intensive applications such as autonomous vehicles, smart cities, and industrial automation.

- Artificial Intelligence (AI) and Machine Learning (ML): Distributed computing will be increasingly used in AI and ML applications. Distributed machine learning algorithms can process vast amounts of data in parallel, accelerating model training and improving the performance of AI systems.

- Serverless Architectures: Serverless computing, where developers focus on writing code without worrying about managing infrastructure, is a growing trend. Serverless computing platforms often rely on distributed systems to scale dynamically in response to workload demands.
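As one example of how model training can be parallelized, the sketch below shows data-parallel gradient descent in plain Python: the dataset is split into shards, each worker computes a gradient on its own shard, and the gradients are averaged before the shared weight is updated. The model (`y = w * x`), the function names, and the hyperparameters are assumptions made for this illustration. The workers run sequentially here, whereas a real system would run them on separate machines.

```python
def local_gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def train_data_parallel(shards, learning_rate=0.01, steps=200):
    """Each step: every worker computes a gradient on its shard, then gradients are averaged."""
    w = 0.0
    for _ in range(steps):
        # In a real cluster these gradients would be computed concurrently.
        gradients = [local_gradient(w, shard) for shard in shards]
        w -= learning_rate * sum(gradients) / len(gradients)
    return w


if __name__ == "__main__":
    # Synthetic data generated from y = 3 * x, split into two equal shards.
    shards = [
        [(x, 3 * x) for x in range(1, 5)],
        [(x, 3 * x) for x in range(5, 9)],
    ]
    print(train_data_parallel(shards))  # approaches 3.0
```

Averaging the per-shard gradients with equal weight matches a full-batch gradient only when the shards are the same size, as they are here. Unequal shards would need a weighted average.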

Distributed Computing Architectures

There are several types of architectures in distributed computing, each offering a different approach to task distribution, resource management, and fault tolerance. Key architectures include client-server, peer-to-peer (P2P), master-slave, MapReduce, and service-oriented architecture (SOA).

Challenges and Security Concerns

While distributed computing provides numerous benefits, it also introduces a set of challenges and security concerns:

- Network Latency: Communication between distributed nodes occurs over a network, which introduces latency. This delay can affect system performance, particularly when nodes are geographically distant or traffic is high.

- Data Consistency: Maintaining data consistency across distributed nodes can be difficult, especially when multiple nodes update the same data. Techniques like eventual consistency or distributed consensus algorithms (e.g., Paxos or Raft) are used to handle this challenge.

- Failure Handling and Recovery: Although distributed systems are designed to be fault-tolerant, managing failures and ensuring data recovery after node crashes or network partitions is still a complex issue. Distributed systems must implement robust failure detection and recovery mechanisms to ensure reliability.

- Security: Distributed systems can be vulnerable to various security threats, such as data breaches, unauthorized access, and attacks like denial-of-service (DoS). Ensuring secure communication between nodes, enforcing strong authentication mechanisms, and implementing encryption techniques are essential for protecting sensitive data.

- Management Complexity: Managing a distributed system can be challenging due to its complexity. Coordinating tasks across multiple nodes, handling failures, and monitoring performance require specialized tools and expertise. Automation and orchestration tools like Kubernetes and Apache Mesos help simplify these tasks.
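Failure detection, mentioned above, is often built on heartbeats: each node periodically reports that it is alive, and a node that stays silent for longer than a timeout is treated as suspected. The sketch below shows that idea in its simplest form. `HeartbeatFailureDetector` and its methods are hypothetical names, and the clock is passed in as a parameter so the behaviour can be checked without waiting in real time.

```python
import time


class HeartbeatFailureDetector:
    """Marks a node as suspected if no heartbeat arrived within `timeout` seconds."""

    def __init__(self, timeout, clock=time.monotonic):
        self.timeout = timeout
        self.clock = clock  # injectable so the detector can be tested without sleeping
        self.last_seen = {}

    def heartbeat(self, node_id):
        """Record that node_id reported in just now."""
        self.last_seen[node_id] = self.clock()

    def suspected_nodes(self):
        """Return the nodes whose last heartbeat is older than the timeout."""
        now = self.clock()
        return sorted(
            node for node, seen in self.last_seen.items() if now - seen > self.timeout
        )


if __name__ == "__main__":
    fake_time = [0.0]
    detector = HeartbeatFailureDetector(timeout=5.0, clock=lambda: fake_time[0])
    detector.heartbeat("node-a")
    detector.heartbeat("node-b")
    fake_time[0] = 4.0
    detector.heartbeat("node-a")       # node-a reports again, node-b stays silent
    fake_time[0] = 7.0
    print(detector.suspected_nodes())  # ['node-b']
```

A timeout cannot tell a crashed node from a slow network, so a suspected node may still be running. That ambiguity is part of why recovery and consensus in distributed systems are hard.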

Real-World Applications of Distributed Computing

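Distributed computing has widespread applications across industries. Notable use cases include cloud computing, big data processing, blockchain and cryptocurrencies, distributed databases, and scientific simulations.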

Future Trends and Innovations

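The future of distributed computing is being shaped by several emerging trends and innovations, including edge computing, quantum computing, 5G networks, distributed AI and machine learning, and serverless architectures.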

Conclusion

Distributed computing has transformed the way modern applications are developed, deployed, and scaled. Its ability to harness the power of multiple nodes and distribute computational tasks has revolutionized industries, enabling innovation in cloud services, big data analytics, blockchain, and more. However, to fully leverage the benefits of distributed systems, organizations must address challenges related to security, performance, and complexity. As technology continues to evolve, distributed computing will remain a cornerstone of innovation, driving the future of computing.