- Introduction

- Kubernetes Components Overview

- Kubernetes Control Plane

- Kubernetes Nodes

- Kubernetes Pods

- Kubernetes Services

- Kubernetes Networking

- Kubernetes Volumes, Storage, and Resource Management

- Kubernetes Scheduling and Autoscaling

- Security and Monitoring in Kubernetes

- Kubernetes Deployment Strategies

- Conclusion

Introduction

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Google originally developed it, and it is now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes provides a unified platform for managing distributed applications and services, with a focus on reliability, scalability, and ease of deployment. It is widely used in cloud-native environments to run microservices architectures and handle complex workloads efficiently.

Kubernetes Components Overview

Kubernetes is made up of several key components that work together to run containerized applications. The control plane node (historically called the master node) manages the cluster and runs the control plane components, including the API server, scheduler, and controller manager. Worker nodes are where the containers run; each one hosts the kubelet, kube-proxy, and a container runtime. The control plane tracks the cluster’s state and makes decisions about it, such as scheduling pods and responding to cluster events.

Kubernetes Control Plane

The control plane is the brain of the Kubernetes cluster and is responsible for making decisions about the cluster’s state.

API Server: The API server is the entry point for all REST requests to the cluster. It exposes the Kubernetes API and processes requests from users, cluster components, and other services.

Scheduler: The scheduler is responsible for assigning workloads (pods) to worker nodes based on resource availability and other constraints.

Controller Manager: The controller manager runs controllers that monitor the cluster state and work to keep it at the desired state (e.g., ensuring the correct number of replicas is running).

etcd: etcd is a distributed key-value store used to store the cluster’s configuration data, including the state of all resources. It serves as the source of truth for the Kubernetes cluster.
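The desired-state idea behind the controller manager can be illustrated with a toy reconciliation step. This is a minimal sketch, not the real controller code: the function name `reconcile`, the pod naming scheme, and the action strings are all hypothetical, and a real controller would talk to the API server rather than return strings.

```python
from __future__ import annotations


def reconcile(desired_replicas: int, running_pods: list[str]) -> list[str]:
    """Return the actions that move the observed state toward the desired state."""
    actions: list[str] = []
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: request the missing replicas (hypothetical pod names).
        actions += [f"create pod-{i}" for i in range(len(running_pods), desired_replicas)]
    elif diff < 0:
        # Too many pods: remove the surplus from the end of the list.
        actions += [f"delete {name}" for name in running_pods[desired_replicas:]]
    # diff == 0 means observed state already matches desired state: nothing to do.
    return actions


if __name__ == "__main__":
    print(reconcile(3, ["pod-0"]))
    print(reconcile(1, ["pod-0", "pod-1"]))
```

A controller runs a step like this repeatedly, so the cluster converges on the desired state even after a pod crashes or a node disappears.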

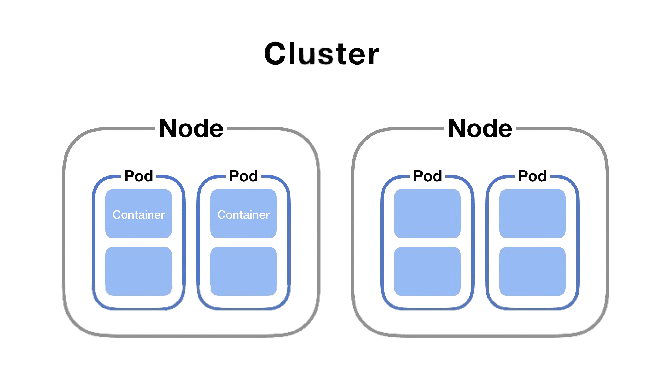

Kubernetes Nodes

Kubernetes nodes are the physical or virtual machines that run the containerized applications.

- Node Components: Each node contains essential components for running containers.

- Kubelet: The kubelet is an agent that runs on each node. It communicates with the API server and manages container lifecycle events so that the containers described in each pod are running.

- Kube Proxy: The kube-proxy maintains network rules on each node so that traffic addressed to a service is routed to the correct pods.

- Container Runtime: This is the software responsible for running the containers, such as Docker or containerd.

- ClusterIP: A service type that exposes the service only within the cluster. This is the default type.

- NodePort: A service type that exposes the service on each node’s IP address at a static port.

- LoadBalancer: A service type that exposes the service externally through a cloud provider’s load balancer.

- ExternalName: A service type that maps the service to an external DNS name.

- EmptyDir: A temporary storage volume that is tied to the pod’s lifecycle. Data in an EmptyDir volume survives container restarts but is lost when the pod is deleted or removed from its node.

- HostPath: A volume type that mounts a file or directory from the host node’s file system into a pod, allowing the pod to access files on the host.

- Persistent Volumes (PVs): Durable, cluster-wide storage resources that exist independently of pods. They provide persistent storage that can be used across pod lifecycles.

- Persistent Volume Claims (PVCs): Requests for storage made by users. PVCs are bound to available Persistent Volumes, and they specify the amount and type of storage needed.

- Storage Classes: Define different tiers or types of storage in Kubernetes, allowing for dynamic provisioning of Persistent Volumes based on user-defined requirements like performance or cost.

- Namespaces: Provide logical isolation within a Kubernetes cluster, allowing resources like pods, services, and Persistent Volume Claims to be grouped and managed separately. Namespaces also help with access control.

- Resource Requests: Define the amount of CPU and memory that a container is expected to need. The scheduler uses these requests to place pods on nodes with enough capacity.

- Resource Limits: Specify the maximum amount of CPU and memory that a container can use. Limits prevent a container from consuming excessive resources, ensuring fair resource distribution.

- Volume Lifecycle: Volumes like EmptyDir are tied to the pod lifecycle. When the pod is deleted, the data stored in EmptyDir is lost, whereas Persistent Volumes provide long-term storage that persists beyond pod lifecycles.

- Dynamic Provisioning: Kubernetes can automatically provision Persistent Volumes based on Storage Classes, making it easier to manage storage allocation as workloads grow.

- Deployment Strategies: Kubernetes supports several deployment strategies for efficient and reliable application rollouts.

- Rolling Updates: Kubernetes allows you to deploy a new version of an application incrementally, with the option to roll back to a previous version if necessary.

- Canary Deployments: A canary release tests a new version of an application on a small subset of users before rolling it out to everyone.

- Blue-Green Deployments: This strategy maintains two identical environments, one (blue) for the live version and the other (green) for staging the new version. Traffic is shifted to the green environment once the new version is validated.

Kubernetes Pods

A pod is the smallest and simplest unit in Kubernetes, acting as a wrapper for one or more containers and representing a single instance of a running process within the cluster. Pods are often used to host a single application or microservice and are essential for managing containerized workloads.

A pod goes through various phases during its lifecycle: Pending, Running, Succeeded, Failed, and Unknown. When a pod has multiple containers, they share the same network namespace and IP address and can mount the same storage volumes, which lets them work closely together. This shared environment allows containers to communicate and coordinate efficiently, making pods a key building block in Kubernetes for managing distributed applications.
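To make the shared-storage point concrete, here is a small sketch that builds a Pod manifest with two containers mounting the same volume. It only constructs and prints JSON (which `kubectl apply -f -` accepts as well as YAML); the helper name `two_container_pod`, the container names, the mount path, and the image tags are illustrative assumptions.

```python
import json


def two_container_pod(name: str) -> dict:
    """Build a Pod manifest whose two containers share one emptyDir volume."""
    shared_mount = {"name": "shared-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # The emptyDir volume exists for as long as the pod does.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.27",  # illustrative image tag
                    "volumeMounts": [shared_mount],
                },
                {
                    "name": "sidecar",
                    "image": "busybox:1.36",  # illustrative image tag
                    "command": ["sleep", "3600"],
                    "volumeMounts": [shared_mount],
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(two_container_pod("demo"), indent=2))
```

Because both containers belong to the same pod, they can also reach each other over `localhost` without any extra configuration.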

Kubernetes Services

Kubernetes services are abstractions that define a logical set of pods and a way to access them. Services enable communication among pods and with external clients through a stable address, so clients do not need to track individual pod IP addresses, which change as pods are created and destroyed.

Service Types: Kubernetes offers four service types: ClusterIP, NodePort, LoadBalancer, and ExternalName.

Load Balancing and Service Discovery: Kubernetes provides built-in load balancing and DNS-based service discovery, making it easier to manage service access and balance traffic across multiple pods.
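A service usually finds its pods through a label selector. The sketch below models that matching in plain Python; the pod records, IP addresses, and the helper names `matches` and `endpoints_for` are hypothetical, and the real endpoint logic also considers pod readiness, which is ignored here.

```python
from __future__ import annotations


def matches(selector: dict, labels: dict) -> bool:
    """A pod matches when it carries every key/value pair in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints_for(service: dict, pods: list[dict]) -> list[str]:
    """Return the IPs of the pods that the service's selector picks out."""
    selector = service["spec"]["selector"]
    return [pod["ip"] for pod in pods if matches(selector, pod["labels"])]


if __name__ == "__main__":
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "web"},
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": "web"},
            "ports": [{"port": 80, "targetPort": 8080}],
        },
    }
    pods = [
        {"ip": "10.0.0.4", "labels": {"app": "web"}},
        {"ip": "10.0.1.7", "labels": {"app": "web", "tier": "frontend"}},
        {"ip": "10.0.2.9", "labels": {"app": "db"}},
    ]
    print(endpoints_for(service, pods))
```

Clients address the service by name, and traffic is spread across whichever pods currently match, so pods can be replaced without clients noticing.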

Kubernetes Networking

Kubernetes networking enables communication between containers across nodes and services. Kubernetes assigns each pod a unique IP address, allowing all pods in a cluster to communicate regardless of the node they run on. Kubernetes also supports network policies, which let users define rules that control pod communication, such as restricting access between certain pods or namespaces. Additionally, cluster DNS automatically resolves service names to IP addresses, simplifying communication between pods and making services easier to manage across the cluster.
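As an illustration of a network policy rule, the following sketch generates a NetworkPolicy manifest that admits ingress to one set of pods only from another. The helper name `allow_only_from`, the `app` label key, and the example names are assumptions; note also that policies are only enforced when the cluster's network plugin supports them.

```python
import json


def allow_only_from(target_app: str, client_app: str, port: int) -> dict:
    """Build a NetworkPolicy allowing TCP ingress to target pods from client pods only."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{target_app}-allow-{client_app}"},
        "spec": {
            # The pods this policy protects.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only pods carrying this label may connect.
                    "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(allow_only_from("db", "api", 5432), indent=2))
```

Once a pod is selected by an ingress policy, any inbound traffic that no rule allows is dropped, which is why a single narrow rule like this one is enough to isolate it.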

Kubernetes Volumes, Storage, and Resource Management

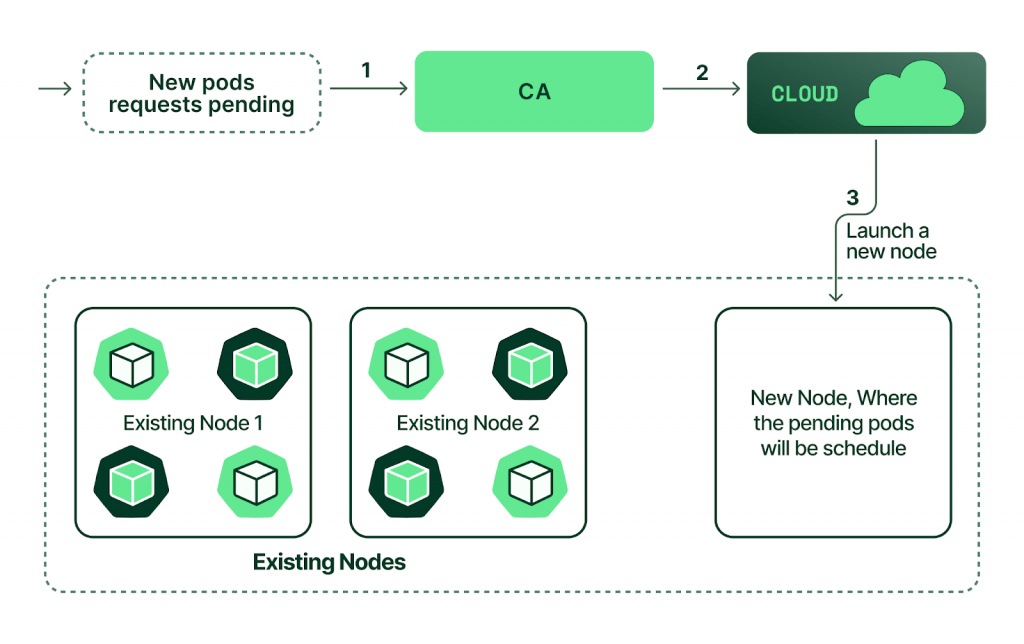
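The relationship between a Persistent Volume Claim, a Storage Class, and a container's resource requests and limits can be sketched as two manifests built in Python. This is an illustrative example only: the helper names, the `standard` storage class (class names depend on the cluster), the mount path, the image tag, and the CPU and memory figures are all assumptions.

```python
import json


def volume_claim(name: str, size: str, storage_class: str) -> dict:
    """Build a PersistentVolumeClaim requesting `size` of storage from a class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_using_claim(name: str, claim_name: str) -> dict:
    """Build a Pod that mounts the claim and declares CPU/memory requests and limits."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim_name}}],
            "containers": [
                {
                    "name": "app",
                    "image": "nginx:1.27",  # illustrative image tag
                    "volumeMounts": [{"name": "data", "mountPath": "/var/lib/app"}],
                    "resources": {
                        # Requests guide scheduling; limits cap actual usage.
                        "requests": {"cpu": "250m", "memory": "128Mi"},
                        "limits": {"cpu": "500m", "memory": "256Mi"},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(volume_claim("app-data", "1Gi", "standard"), indent=2))
    print(json.dumps(pod_using_claim("app", "app-data"), indent=2))
```

The pod refers to the claim by name rather than to a specific volume, which is what lets the data outlive any individual pod.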

Kubernetes Scheduling and Autoscaling

Kubernetes scheduling and autoscaling features are essential for managing the placement and scaling of applications.

Scheduling Strategies: The Kubernetes scheduler selects which node a pod should run on based on resource availability, constraints, and policies.

Horizontal Pod Autoscaling: Kubernetes can automatically scale the number of pod replicas based on CPU usage or other custom metrics.

Cluster Autoscaling: Cluster autoscaling automatically adjusts the size of the cluster by adding or removing nodes based on resource requirements.
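Two of these decisions are easy to sketch in code. `pick_node` is a toy stand-in for the scheduler's filter-then-score approach, with a scoring policy (most free CPU) chosen here purely for illustration. `desired_replicas` follows the replica formula given in the Kubernetes Horizontal Pod Autoscaler documentation, ceil(currentReplicas × currentMetric / targetMetric), while ignoring the tolerance band and min/max bounds that the real autoscaler applies. All names and numbers are hypothetical.

```python
from __future__ import annotations

import math
from typing import Optional


def pick_node(cpu_request_m: int, mem_request_mi: int,
              free: dict[str, tuple[int, int]]) -> Optional[str]:
    """Choose a node for a pod; `free` maps node name -> (free CPU in millicores, free memory in MiB)."""
    # Filter: keep only nodes with enough unreserved CPU and memory.
    feasible = {
        node: (cpu, mem)
        for node, (cpu, mem) in free.items()
        if cpu >= cpu_request_m and mem >= mem_request_mi
    }
    if not feasible:
        return None  # the pod would stay Pending
    # Score: toy policy that prefers the node with the most free CPU.
    return max(feasible, key=lambda node: feasible[node][0])


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float) -> int:
    """Scale the replica count in proportion to how far the metric is from its target."""
    return math.ceil(current_replicas * current_metric / target_metric)


if __name__ == "__main__":
    nodes = {"node-a": (300, 1024), "node-b": (800, 512), "node-c": (2000, 128)}
    print(pick_node(500, 256, nodes))
    print(desired_replicas(4, 90, 60))
```

With 4 replicas averaging 90% CPU against a 60% target, the formula asks for 6 replicas; if the new pods do not fit on any node, that is the point at which cluster autoscaling would add one.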

Security and Monitoring in Kubernetes

Security is a critical aspect of Kubernetes. Role-Based Access Control (RBAC) manages API access by defining roles for users and service accounts, and network policies limit pod communication, reducing exposure. Kubernetes also offers Secrets for storing sensitive data such as passwords and API keys and making it available to pods. For monitoring, the Metrics Server collects resource usage data for autoscaling and monitoring, while Prometheus integrates with Grafana to provide metric collection and visualization. Additionally, Fluentd supports centralized logging by forwarding logs to various destinations, which improves observability.
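An RBAC rule is a pair of objects: a Role that lists permitted verbs on resources, and a RoleBinding that grants the Role to a subject. The sketch below builds both as JSON manifests; the helper names, the `pod-reader` and `read-pods` object names, and the example namespace and service account are hypothetical.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str) -> dict:
    """Build a Role that permits read-only access to pods in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [
            # "" is the core API group, where pods live.
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
        ],
    }


def bind_pod_reader(namespace: str, service_account: str) -> dict:
    """Build a RoleBinding that grants the pod-reader Role to a service account."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "read-pods", "namespace": namespace},
        "subjects": [
            {"kind": "ServiceAccount", "name": service_account, "namespace": namespace}
        ],
        "roleRef": {"apiGroup": RBAC_GROUP, "kind": "Role", "name": "pod-reader"},
    }


if __name__ == "__main__":
    print(json.dumps(pod_reader_role("staging"), indent=2))
    print(json.dumps(bind_pod_reader("staging", "ci-bot"), indent=2))
```

Keeping the verb list to `get`, `list`, and `watch` means the service account can observe pods but cannot create, modify, or delete them.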

Kubernetes Deployment Strategies
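Of the deployment strategies this article covers, the rolling update is the one Kubernetes Deployments support directly. The following is a minimal sketch of a Deployment manifest configured for it; the helper name `rolling_deployment`, the `app` label key, the example image reference, and the surge and unavailability values are illustrative assumptions rather than recommendations.

```python
import json


def rolling_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment that replaces pods one at a time with no capacity drop."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Start at most one extra pod, and never go below the desired count.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(rolling_deployment("web", "example.com/web:2.0", 3), indent=2))
```

Changing the image and re-applying the manifest triggers the incremental rollout, and `kubectl rollout undo deployment/web` returns to the previous revision if the new version misbehaves.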

Conclusion

Kubernetes is a powerful platform for managing containerized applications at scale. Its architecture includes key components like the control plane, nodes, pods, and services, which work together to provide automation, scalability, and reliability. By efficiently managing resources and orchestrating containers, Kubernetes enables organizations to enhance DevOps practices and streamline deployment processes. It improves application reliability, scalability, and flexibility, allowing teams to focus on development rather than infrastructure management. For these reasons, Kubernetes has become a common foundation for modern, large-scale application deployments.