- Introduction to Docker and Its Benefits

- Setting Up a Basic Docker Container

- Docker-Based Web Application Deployment

- Multi-Container Applications Using Docker Compose

- CI/CD Pipeline with Docker and Jenkins

- Monitoring Docker Containers with Prometheus and Grafana

- Kubernetes and Docker Integration

- Building a Secure Docker Container

- Best Practices for Docker in Production

- Future of Docker and Containerization

- Conclusion

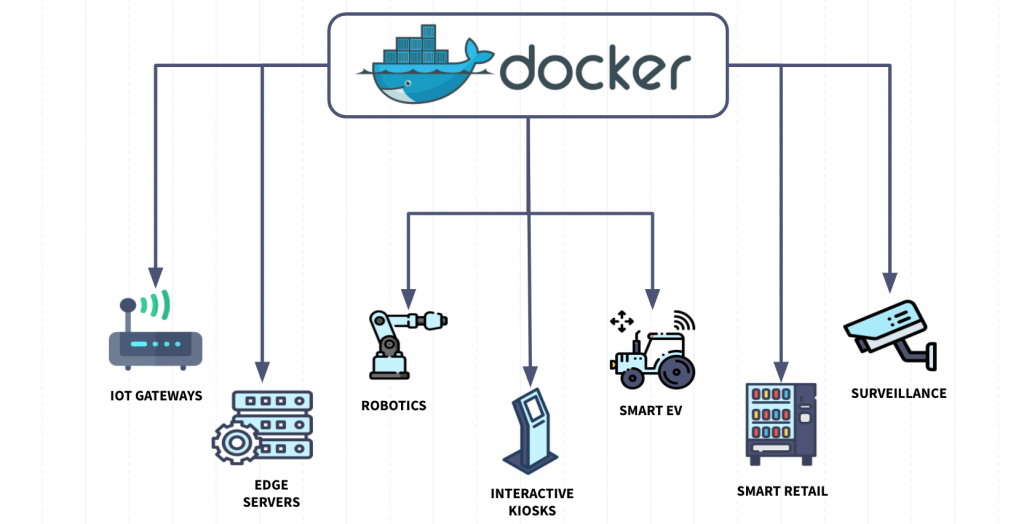

Introduction to Docker and Its Benefits

Docker is an open-source platform that lets developers automate the packaging, distribution, and deployment of applications using containers. Containers are lightweight, isolated environments that bundle an application with its dependencies, so it runs the same way on any machine running Docker. Docker has become a critical tool in modern DevOps workflows because it streamlines development, testing, and deployment. It simplifies building, packaging, and distributing applications, which makes it easier to keep environments consistent across every stage of development and eliminates the “works on my machine” problem. Because one image can be started as many identical containers, Docker also makes applications easier to replicate and scale. Its integration with orchestration tools such as Kubernetes allows containers to be managed efficiently at scale, and its flexible ecosystem has made it a staple of continuous integration and delivery (CI/CD) pipelines.

The key benefits of Docker include:

- Portability: Docker containers encapsulate everything an application needs to run, from the code to system libraries and dependencies. This makes applications portable across different environments, whether a developer’s local machine, a staging environment, or production servers.

- Consistency: Docker ensures that an application behaves the same regardless of where it’s deployed, eliminating the “it works on my machine” problem developers often face.

- Isolation: Each Docker container runs in isolation from others, providing a clean environment for each application. This avoids conflicts between different applications or versions of dependencies.

- Scalability: Docker containers can be easily scaled up or down, which benefits high-traffic environments, especially when combined with orchestration tools such as Kubernetes.

- Efficiency: Containers share the host system’s OS kernel, making them lighter and more resource-efficient than traditional virtual machines.

- Ecosystem: Docker integrates well with various development tools and platforms, including CI/CD tools, Kubernetes, and cloud providers.

Setting Up a Basic Docker Container

Setting up a Docker container is straightforward and involves the following basic steps:

Install Docker:

- On Linux, install Docker Engine with your distribution’s package manager.

- On Windows and macOS, install Docker Desktop.

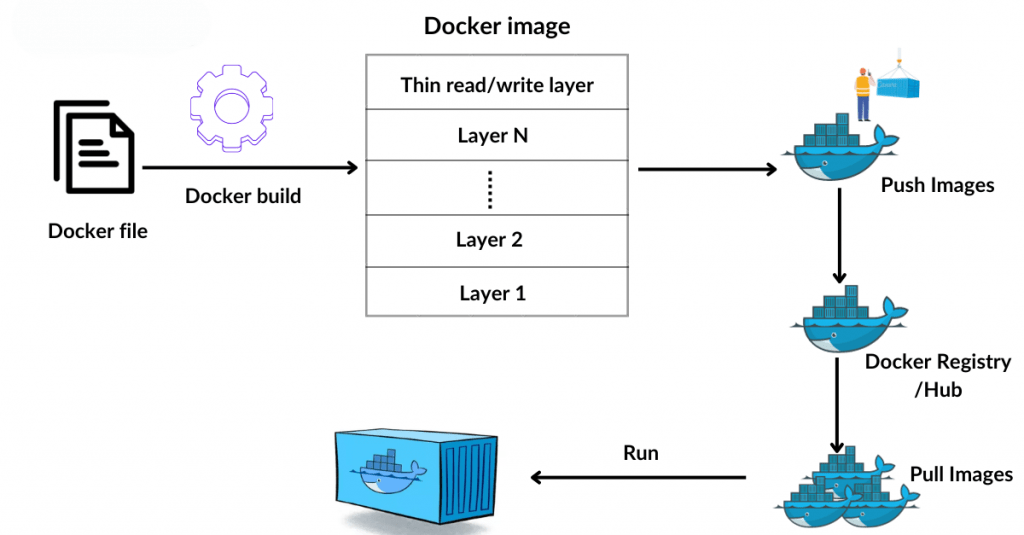

Create a Dockerfile: A Dockerfile is a script containing a series of commands to create a Docker image. Below is an example of a simple Dockerfile that creates a container for a Node.js application:

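```dockerfile
# Use the official Node.js image
# (node:14 is end-of-life; pin a currently supported LTS tag in practice)
FROM node:14

# Set the working directory inside the container
WORKDIR /app

# Copy the dependency manifests first so the npm install layer stays cached
# until package.json or package-lock.json changes
COPY package*.json ./

# Install dependencies
RUN npm install

# Copy the rest of the application code into the container
COPY . .

# Document the port the app listens on (publishing happens with docker run -p)
EXPOSE 3000

# Start the application
CMD ["node", "app.js"]
```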

Build the Docker Image: Once the Dockerfile is created, build the image from the directory that contains it:

```shell
docker build -t my-node-app .
```

Run the Docker Container: After building the image, run it in a container:

```shell
docker run -p 3000:3000 my-node-app
```

This starts the application inside a container and maps port 3000 on the host to port 3000 in the container.

Docker-Based Web Application Deployment

Docker is commonly used to deploy web applications by packaging the app and its dependencies into containers. This process ensures that the application runs consistently across different environments, whether it’s on a local machine, a staging server, or in production. For instance, deploying a Python Flask application using Docker involves creating a Dockerfile to define the environment and steps required to build the container. The Dockerfile outlines the base image to use, copies the necessary application files, installs dependencies, and exposes the correct port for the application to run.

Once the Dockerfile is prepared, build the Docker image with the following command:

```shell
docker build -t flask-app .
```

After building the image, run the application with:

```shell
docker run -p 5000:5000 flask-app
```

To deploy the container on a cloud platform, push the image to a container registry such as Docker Hub or Amazon Elastic Container Registry (ECR). From there, you can run it on services such as Amazon ECS, Google Kubernetes Engine (GKE), or Azure App Service, which handle scaling and management of the application in production. Running the same image in every environment keeps deployments consistent and makes scaling and orchestration simpler.
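The `docker run -p 5000:5000 flask-app` command only reaches the app if the process inside the container listens on all interfaces (0.0.0.0) rather than only on localhost. This is a common pitfall, because Flask's development server defaults to 127.0.0.1. The sketch below is not the Flask app itself. It uses Python's standard-library `http.server` as a stand-in so it runs without extra packages. The `PORT` override and the `serve()` entry point are hypothetical names chosen for illustration.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def bind_address() -> tuple[str, int]:
    """Return the (host, port) to listen on inside a container.

    "0.0.0.0" accepts connections on every interface, so traffic forwarded by
    `docker run -p 5000:5000` reaches the app; "127.0.0.1" would only accept
    connections from inside the container. PORT is a hypothetical override.
    """
    return "0.0.0.0", int(os.environ.get("PORT", "5000"))


class HelloHandler(BaseHTTPRequestHandler):
    """Stand-in for the Flask app: answers every GET with a short text body."""

    def do_GET(self) -> None:
        body = b"Hello from a container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve() -> None:
    """Entry point a container command would run; blocks until interrupted."""
    HTTPServer(bind_address(), HelloHandler).serve_forever()
```

A Flask app gets the same effect from `app.run(host="0.0.0.0", port=5000)` or `flask run --host=0.0.0.0`.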

Multi-Container Applications Using Docker Compose

Docker Compose allows you to define and run multi-container applications. This is particularly useful when your application has multiple services, such as a web app and a database. With Docker Compose, you define the configuration of these services in a docker-compose.yml file, which makes complex environments easier to manage. Compose creates a shared network so containers can reach each other by service name, starts services in dependency order based on their depends_on entries, and lets you stop or scale them with a single command. Note that depends_on only controls start order by default: it does not wait for a dependency such as a database to be ready to accept connections.
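Conceptually, the start order comes from depends_on: a service starts only after the services it depends on have been started. The sketch below reproduces that ordering with Python's `graphlib.TopologicalSorter` (Python 3.9+), using a dictionary that mirrors the web app and database example in this section. It illustrates the idea only and is not how Docker Compose is implemented. The `cache` service is hypothetical and is there only to make the ordering visible.

```python
from graphlib import TopologicalSorter


def start_order(depends_on: dict[str, list[str]]) -> list[str]:
    """Return one valid start order for services given their depends_on lists.

    Each key is a service name and its value lists the services that must be
    started first. Raises graphlib.CycleError on circular dependencies,
    which Compose also rejects.
    """
    return list(TopologicalSorter(depends_on).static_order())


# Mirrors the example: the web app depends on the database.
# "cache" is a hypothetical extra service used only to illustrate ordering.
services = {
    "web": ["db", "cache"],
    "db": [],
    "cache": [],
}
order = start_order(services)
print(order)
```

Any order that puts `db` and `cache` before `web` is valid. That is why Compose may start independent services in either order.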

Create a docker-compose.yml File: Below is an example docker-compose.yml file for a web application that depends on a MySQL database:

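```yaml
version: "3"  # ignored by recent Docker Compose releases; needed only by legacy docker-compose v1

services:
  web:
    image: my-web-app
    ports:
      - "5000:5000"
    depends_on:
      - db
  db:
    image: mysql:5.7
    environment:
      MYSQL_ROOT_PASSWORD: example  # demo value only; never hard-code real credentials
```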

Start the Application: To start the application, run:

```shell
docker-compose up
```

This creates and starts the web and database containers, starting them in the order their dependencies require. With Compose v2, the equivalent command is `docker compose up`.
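Because short-form depends_on only orders container startup, the web container can start before MySQL is ready to accept connections. One common workaround is for the application to retry its first connection. The sketch below polls a TCP port with Python's standard `socket` module. The host name `db` (the database service's name) and port 3306 (MySQL's default port) are assumptions. Compose can also wait on a `healthcheck` by using the long depends_on syntax with `condition: service_healthy`.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 1.0) -> bool:
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass.

    Returns True once the port accepts connections, False on timeout.
    An open port does not guarantee MySQL has finished initializing, but it
    is usually a useful first readiness signal.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused, name not resolvable yet, or timed out: retry.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Inside the web container, "db" would resolve to the database service on the
# Compose network; 3306 is MySQL's default port. Both are assumptions here.
# if not wait_for_port("db", 3306):
#     raise SystemExit("database did not become reachable in time")
```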

Stopping and Removing Containers: To stop and remove the containers and networks created by `docker-compose up`, run the command below. Add the `--volumes` flag to remove its volumes as well.

```shell
docker-compose down
```

CI/CD Pipeline with Docker and Jenkins

Docker and Jenkins can be combined to create a robust CI/CD pipeline. Jenkins can build Docker images automatically whenever there are changes in the code repository.

- Install Jenkins: Install Jenkins on a server or use a Docker container to run Jenkins.

- Install the Docker Pipeline Plugin: The Docker Pipeline plugin lets pipelines build and run Docker images through the `docker` global variable. Install it from the Jenkins plugin manager, and make sure the Jenkins agent has the Docker CLI and access to a Docker daemon.

- Create a Jenkins Pipeline: Create a Jenkins pipeline to automate building, testing, and deploying Docker images whenever code is pushed to the repository.

- Configure Webhooks for Automation: Set up webhooks between Jenkins and your version control system (e.g., GitHub) so that Jenkins automatically triggers a build when there are changes in the code repository.

- Add Docker Build and Deployment Steps: In the pipeline, define steps to build the Docker image, run tests, and deploy the image to a staging or production environment.

- Monitor Builds: Use Jenkins’ built-in tools to monitor the status of builds and deployments, and set up notifications for failed builds or deployments.

Create a Jenkins Pipeline: A Jenkins pipeline defines the steps to automate the build and deployment process. Below is an example pipeline that builds and deploys a Docker image:

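```groovy
pipeline {
    agent any  // the agent needs Docker installed and the Docker Pipeline plugin

    stages {
        stage('Build') {
            steps {
                script {
                    // Build an image from the Dockerfile in the workspace root
                    docker.build("my-web-app")
                }
            }
        }
        stage('Deploy') {
            steps {
                script {
                    // Start a detached container publishing port 5000.
                    // A later run fails while an earlier container still holds host
                    // port 5000, so stop or remove the previous container first.
                    docker.image("my-web-app").run("-d -p 5000:5000")
                }
            }
        }
    }
}
```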
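The pipeline above builds and runs `my-web-app` without an explicit tag, so every build overwrites `latest`. As a result, a running container cannot easily be traced back to a commit. A common refinement is to tag each image with the Jenkins build number and the Git commit. Jenkins exposes `BUILD_NUMBER` to every build, and the Git plugin sets `GIT_COMMIT`. The helper below derives a tag from those variables; it is a hypothetical script, not part of any Jenkins API. A pipeline could pass its result to `docker.build("my-web-app:" + tag)`.

```python
import os
import re
from collections.abc import Mapping

# Docker tags may contain letters, digits, underscores, periods and dashes,
# must not start with a period or dash, and are at most 128 characters long.
_TAG_RE = re.compile(r"^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$")


def image_tag(env: Mapping[str, str]) -> str:
    """Build a tag like '42-1a2b3c4' from Jenkins build variables.

    BUILD_NUMBER is set by Jenkins; GIT_COMMIT is set by the Git plugin.
    Returns 'dev' for local runs outside Jenkins (an assumed convention).
    """
    build = env.get("BUILD_NUMBER")
    commit = env.get("GIT_COMMIT", "")
    if not build:
        return "dev"
    tag = f"{build}-{commit[:7]}" if commit else build
    if not _TAG_RE.match(tag):
        raise ValueError(f"invalid Docker tag: {tag!r}")
    return tag


if __name__ == "__main__":
    print(f"my-web-app:{image_tag(os.environ)}")
```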

Configure Webhooks: To automatically trigger builds when new changes are pushed to your code repository (e.g., GitHub), configure a webhook to notify Jenkins.

Monitoring Docker Containers with Prometheus and Grafana

Prometheus and Grafana are widely used together to collect and visualize Docker container metrics.

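Set Up Prometheus: Prometheus collects metrics by scraping HTTP /metrics endpoints, such as the one exposed by cAdvisor (a container metrics exporter from Google) or by other exporters.

Example prometheus.yml configuration:

```yaml
scrape_configs:
  - job_name: "docker"
    static_configs:
      # docker_host is a placeholder for the host running cAdvisor,
      # which serves its metrics on port 8080 by default
      - targets: ["docker_host:8080"]
```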

Set Up Grafana: Grafana visualizes the metrics collected by Prometheus. You can use pre-built dashboards for Docker metrics or create custom dashboards.

Monitor Containers: Once Prometheus and Grafana are configured, you can visualize metrics such as CPU usage, memory consumption, and network I/O for each container.
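cAdvisor reports container-level metrics such as CPU and memory. To expose application-level metrics as well, a containerized service can serve its own `/metrics` endpoint in the Prometheus text exposition format. Prometheus can then scrape it as another target under `scrape_configs`. The sketch below renders a single counter using only the standard library. The metric name `app_requests_total` is hypothetical, and in practice the official `prometheus_client` Python library handles the format and the HTTP endpoint for you.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical request counter, guarded by a lock so the sketch also works
# with ThreadingHTTPServer, where handlers run in separate threads.
_lock = threading.Lock()
_requests_total = 0


def record_request() -> None:
    global _requests_total
    with _lock:
        _requests_total += 1


def render_metrics() -> str:
    """Render the counter in the Prometheus text exposition format."""
    with _lock:
        value = _requests_total
    return (
        "# HELP app_requests_total Total HTTP requests handled.\n"
        "# TYPE app_requests_total counter\n"
        f"app_requests_total {value}\n"
    )


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path == "/metrics":
            body = render_metrics().encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        else:
            # Any other path counts as an application request.
            record_request()
            body = b"ok\n"
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

Once this runs in a container, add its host and port as another target so Prometheus scrapes it. Grafana can then chart the counter next to the cAdvisor metrics.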

Kubernetes and Docker Integration

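Kubernetes is an open-source platform for orchestrating containerized applications. It runs images built with Docker and manages the deployment, scaling, and operation of those containers in production. Since version 1.24, Kubernetes no longer uses Docker Engine directly as its runtime; it runs Docker-built images through CRI-compatible runtimes such as containerd.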

Kubernetes Setup: First, set up a Kubernetes cluster. This can be done on-premises or on cloud platforms like AWS (EKS), Google Cloud (GKE), or Azure (AKS).

Deploy Docker Containers in Kubernetes: Kubernetes runs containers inside Pods. A Kubernetes Deployment defines the Pod template and how many identical Pods (replicas) should run.

Example Kubernetes Deployment YAML file for a Docker container:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-web-app
spec:
  replicas: 3  # example value; set the number of Pods you need
  selector:
    matchLabels:
      app: my-web-app
  template:
    metadata:
      labels:
        app: my-web-app  # must match spec.selector.matchLabels
    spec:
      containers:
        - name: my-web-app
          # The image must be pullable from a registry the cluster can reach
          image: my-web-app:latest
          ports:
            - containerPort: 5000
```

Manage Docker Containers with Kubernetes: Kubernetes handles rolling updates, scaling, and self-healing for your containers, making it ideal for large-scale production environments.
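During a rolling update, the Deployment settings `strategy.rollingUpdate.maxSurge` and `maxUnavailable` limit how many extra Pods may exist and how many may be missing at once. Both default to 25%. When converting percentages to Pod counts, Kubernetes rounds `maxSurge` up and `maxUnavailable` down. The sketch below reproduces that arithmetic. It assumes a replica count of 3 for the example Deployment and uses a hypothetical helper name.

```python
import math


def rolling_update_bounds(replicas: int, max_surge_pct: float = 25.0,
                          max_unavailable_pct: float = 25.0) -> tuple[int, int]:
    """Return (max_pods, min_available) during a rolling update.

    Follows the documented rounding for percentage values:
    maxSurge rounds up, maxUnavailable rounds down. Both default to 25%.
    """
    surge = math.ceil(replicas * max_surge_pct / 100)
    unavailable = math.floor(replicas * max_unavailable_pct / 100)
    return replicas + surge, replicas - unavailable


for n in (1, 3, 10):
    max_pods, min_available = rolling_update_bounds(n)
    print(f"replicas={n}: up to {max_pods} pods, at least {min_available} available")
```

With 3 replicas, Kubernetes can add one new Pod at a time while never dropping below 3 available Pods. This is how it updates without downtime.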

Building a Secure Docker Container

Security is a top concern when working with Docker containers, because a vulnerable container can expose the application and, in some cases, the host. To build a secure Docker container, follow several best practices. First, use trusted base images, preferably official or verified images from reputable sources, and avoid outdated or untrusted images that may contain known vulnerabilities. Second, keep the image small by using a minimal base image (e.g., Alpine Linux), which reduces the attack surface by leaving out unnecessary packages. Third, apply the principle of least privilege: run containers as a non-root user (for example, with the USER instruction in the Dockerfile) unless root is absolutely necessary, to limit the impact of a potential breach. It is also crucial to scan images for vulnerabilities with tools such as Docker Scout, Trivy, or Clair, which help identify issues early in the development process. Keep sensitive data such as passwords and API keys out of images and plain environment variables; use Docker secrets or a similar secret-management mechanism instead. Rebuild images regularly so they include the latest security patches. Finally, isolate containers from each other and from the host system to limit the spread of any security issue that does arise.
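With Docker secrets, each secret is mounted into the container as a file, by default under `/run/secrets/<name>`, instead of being passed as a plain environment variable. Several official images, including `mysql`, also accept a `_FILE` variant of a variable (such as `MYSQL_ROOT_PASSWORD_FILE`) that points at such a file. The sketch below applies the same convention in application code. The helper name, the lowercase file-name mapping, and the fallback order are assumptions, not a Docker API.

```python
import os
from pathlib import Path
from typing import Optional


def read_secret(name: str, secrets_dir: str = "/run/secrets") -> Optional[str]:
    """Return a secret value, preferring files over plain environment variables.

    Lookup order (an illustrative convention):
      1. the file named by the NAME_FILE environment variable, if set;
      2. the Docker secret file <secrets_dir>/<name lowercased>;
      3. the NAME environment variable, as a last resort for local development.
    Returns None if the secret is not found anywhere.
    """
    file_var = os.environ.get(f"{name}_FILE")
    candidates = [Path(file_var)] if file_var else []
    candidates.append(Path(secrets_dir) / name.lower())
    for path in candidates:
        if path.is_file():
            # Strip the trailing newline that editors and `echo` usually add.
            return path.read_text(encoding="utf-8").rstrip("\n")
    return os.environ.get(name)
```

For example, `read_secret("DB_PASSWORD")` reads `/run/secrets/db_password` when that secret is mounted. The plain `DB_PASSWORD` variable is only a fallback for local runs.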

Best Practices for Docker in Production

- Use Multi-Stage Builds: Multi-stage builds create smaller, optimized images by separating the build and runtime stages in the Dockerfile.

- Monitor and Log Containers: Implement centralized logging and monitoring for your Docker containers using tools like the ELK stack, Prometheus, or Grafana.

- Use Docker Swarm or Kubernetes for Orchestration: For scaling and managing containers in production, use orchestration tools like Docker Swarm or Kubernetes.

- Regularly Update Containers: Rebuild images on patched base images and redeploy your containers regularly to avoid known security vulnerabilities.

- Use Version Control for Dockerfiles: It’s essential to store and version control your Dockerfiles alongside your application’s source code. This ensures that changes to the Dockerfile are tracked, and you can easily roll back to previous versions if necessary.

- Limit Container Resource Usage: Set resource limits (CPU and memory) for your containers so they don’t consume excessive resources and affect other services running on the same host. Docker lets you specify these limits with `docker run` flags such as `--cpus` and `--memory`, or in the Docker Compose file.

- Enable Container Networking: Use Docker’s networking features to enable secure communication between containers. Set up custom networks for isolating different services and reducing the risk of unauthorized access or vulnerabilities between containers.

- Implement Automated Testing: Integrate automated testing into your CI/CD pipeline to ensure that Docker containers are built and tested properly before deployment. This can help catch bugs or issues early in the development lifecycle, saving time and reducing errors in production.
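For CPU limits, Docker documents `--cpus` as shorthand for a CFS quota over a scheduling period. With the default 100000-microsecond period, `--cpus="1.5"` is equivalent to `--cpu-period=100000 --cpu-quota=150000`. The sketch below performs that conversion to make the arithmetic concrete. The helper name is hypothetical, and Docker performs the real conversion itself.

```python
DEFAULT_CPU_PERIOD_US = 100_000  # Docker's default CFS period (100 ms)


def cpus_to_quota(cpus: float, period_us: int = DEFAULT_CPU_PERIOD_US) -> tuple[int, int]:
    """Convert a --cpus value into the equivalent (--cpu-period, --cpu-quota) pair.

    The quota is the CPU time in microseconds the container may use per
    period, summed across all cores, so 1.5 CPUs -> 150000 us per 100000 us.
    """
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return period_us, round(cpus * period_us)


for value in (0.5, 1.5, 2):
    period, quota = cpus_to_quota(value)
    print(f"--cpus={value} ~ --cpu-period={period} --cpu-quota={quota}")
```

A quota larger than the period (as with `--cpus=2`) simply lets the container use more than one core's worth of time in each period.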

Future of Docker and Containerization

Docker has become a central part of modern application development, and its adoption is expected to keep growing as more companies embrace cloud-native technologies. Its integration with container orchestration platforms like Kubernetes is likely to deepen, with new features aimed at security, scalability, and management. The containerization ecosystem will likely expand to support serverless computing, AI/ML workloads, and edge computing, making it easier to deploy resource-intensive applications. As microservices architectures and distributed systems become the standard, Docker’s role in enabling portable, efficient deployment should only increase, with more advanced container-management tooling and better support for multi-cloud environments. Community-driven development will continue to drive innovation and help keep Docker a key player in modern software engineering.

Conclusion

Docker has revolutionized the way we build, deploy, and manage applications, offering a powerful solution for containerization. With its ability to create portable, consistent, and isolated environments, Docker has become a cornerstone of modern DevOps practices. From simplifying development workflows to enabling seamless scaling, it makes application deployment faster and more efficient. Integration with orchestration tools like Kubernetes and monitoring systems such as Prometheus and Grafana further extends its capabilities for production environments. As organizations continue to embrace containerization and cloud-native technologies, Docker’s role in application deployment, security, and scalability will only grow.