- Introduction

- What is Docker Redis?

- Advantages of Using Redis with Docker

- Best Practices for Using Redis with Docker

- How to Install and Run Redis in Docker

- Conclusion

Docker Redis is an efficient and powerful solution for building scalable applications, combining the flexibility of Docker containers with the high performance of Redis. Redis, an in-memory data store, is widely used for caching, session management, and real-time data processing. Running it in Docker containers makes Redis easy to deploy, scale, and manage across environments. This combination lets developers streamline application deployment, keep behavior consistent across environments, and scale quickly as demand grows, while retaining the speed and reliability Redis is known for.

Introduction

Docker has changed how modern software is developed and deployed by offering a streamlined, efficient way to manage applications across environments. It lets developers package an application and all of its dependencies into isolated, portable containers that run the same way on a local machine, a testing server, or in production. The container carries its own dependencies and configuration, so developers no longer need to reconcile differences in operating systems, configurations, and software versions on each target machine. This is a large part of why Docker has become one of the most widely adopted tools in contemporary development.

Docker is especially useful alongside high-performance services such as Redis. Redis is an open-source, in-memory data structure store known for its speed and reliability. It is frequently used for caching, real-time data processing, session management, message brokering, and other workloads where latency matters. Running Redis in Docker containers combines Redis's performance with Docker's flexibility, which enables rapid deployment, straightforward scaling, and efficient resource management.

What is Docker Redis?

Docker Redis refers to running the Redis data store inside a Docker container. Redis is an open-source, in-memory key-value database known for very fast reads and writes. That speed makes it a good fit for applications that need low-latency data access. Because it handles high-throughput workloads efficiently, Redis is a common choice for real-time data processing, session management, caching, and pub/sub messaging. As a result, it often sits in the critical path of web applications, mobile apps, and other high-performance systems.

Running Redis inside a Docker container brings several key advantages, particularly in terms of deployment flexibility, scalability, and ease of management. Docker containers provide an isolated environment where Redis runs alongside all of its dependencies, ensuring consistency and portability across multiple environments. Whether you’re working in a local development environment, testing a staging setup, or deploying Redis to a production cloud infrastructure, Docker containers allow Redis to function uniformly with minimal configuration. The containerization of Redis significantly simplifies the setup process, eliminating the need for complex dependency management and ensuring that Redis behaves the same way no matter where it’s deployed.

Advantages of Using Redis with Docker

There are numerous significant benefits to running Redis inside Docker containers:

- Portability: Docker containers allow Redis to be deployed consistently across a variety of platforms. Whether you’re developing locally on your machine, testing in a staging environment, or deploying to a cloud-based production platform, Docker ensures that Redis operates uniformly across these different systems. This portability is a significant benefit, as it eliminates the need for complex configurations and reduces the potential for errors that can arise from inconsistent environments. Additionally, Docker’s compatibility with various orchestration tools, like Kubernetes, further streamlines the management and scaling of Redis instances.

- Isolation: Docker runs each application in its own container, so Redis runs separately from the host system and from other applications. Redis's dependencies live inside the container, so they cannot conflict with software installed on the host, and other services cannot interfere with its files or processes. This isolation also makes it easy to run several Redis instances on the same host, each in its own container with its own data. It also helps contain faults: a crash inside one container does not take down other containers. Containers share the host's kernel, however, so isolation is not a complete security boundary. A container without resource limits can also still exhaust the host's memory.

- Simplified Configuration and Deployment: Docker lets you describe a Redis setup in a single, easily shared file, such as a Dockerfile or a Docker Compose file. This makes Redis instances easier to manage, especially when running several of them across a distributed system. It is also a common approach in cloud-native applications, where scalability, flexibility, and efficient resource management matter. With Docker Compose, for example, you can define a multi-container application that includes Redis along with the other services your application needs, and start everything with one command. Keeping the configuration in files also reduces the risk of configuration drift between environments.

- Fast Deployment: Docker offers an incredibly fast deployment process. By pulling the official Redis Docker image, developers can quickly launch Redis containers with minimal effort. This is especially useful in scenarios where rapid prototyping or testing is needed. With just a few commands, you can deploy Redis in minutes, which accelerates your application development lifecycle and reduces the time spent on manual configuration and deployment tasks. Moreover, Docker’s caching mechanisms ensure that subsequent deployments are even faster, as it reuses existing layers, making Redis deployment more efficient.

- Scalability: Container orchestration tools such as Docker Compose and Kubernetes make it easy to start additional Redis containers as your application grows, including across different nodes. Extra containers do not share a data set on their own, though. To spread load, they are typically configured either as replicas of a primary (to scale reads) or as a Redis Cluster (to shard data). Containers are lightweight, so adding instances avoids the overhead typically associated with virtual machines. Docker's networking features also make it straightforward to connect Redis instances to one another and to your application.

- Resource Efficiency: Containers use fewer resources than virtual machines because they share the host system's kernel instead of each running a full operating system. This makes Docker a good fit for deploying Redis in constrained environments, such as edge devices or small cloud instances. It also lets you run several Redis containers on one host with little overhead beyond the memory each data set needs. Docker lets you set, and later adjust, CPU and memory limits per container, so you can fine-tune resource usage even in resource-constrained environments.
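The Isolation point above notes that several Redis instances can share one host. Here is a minimal sketch of that. Each call starts a separate container and maps it to its own host port. The helper name `start_redis_instance`, the container names, and the ports are hypothetical. The `DOCKER` variable defaults to `docker` and exists only so the command can be swapped, for example with `echo` for a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start one isolated Redis container.
#   $1 = container name
#   $2 = host port, mapped to Redis's default port 6379 inside the container
# DOCKER defaults to "docker"; set DOCKER=echo to print the command instead of running it.
start_redis_instance() {
  local name="$1" host_port="$2"
  "${DOCKER:-docker}" run -d --name "$name" -p "${host_port}:6379" redis
}
```

For example, run `start_redis_instance cache-a 6380` and then `start_redis_instance cache-b 6381`. This gives two independent Redis servers on host ports 6380 and 6381, each with its own data set.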
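The Simplified Configuration point mentions Docker Compose. The script below illustrates it by writing a minimal `docker-compose.yml` with a Redis service and a hypothetical application service. The `app` service, its `my-app:latest` image, the `REDIS_URL` variable, and the `redis:7` tag are all assumptions chosen for illustration; adapt them to your own stack.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Write a minimal Compose file: one Redis service plus a hypothetical app that uses it.
cat > docker-compose.yml <<'EOF'
services:
  redis:
    image: redis:7
    # Turn on append-only-file persistence so writes are saved to the volume.
    command: ["redis-server", "--appendonly", "yes"]
    ports:
      - "127.0.0.1:6379:6379"   # publish on the loopback interface only
    volumes:
      - redis-data:/data        # the official image keeps its data in /data
    restart: unless-stopped

  app:
    image: my-app:latest        # hypothetical application image
    environment:
      REDIS_URL: redis://redis:6379   # hypothetical variable; "redis" is the service name
    depends_on:
      - redis

volumes:
  redis-data:
EOF

echo "Wrote docker-compose.yml"
```

Running `docker compose up -d` in the same directory then starts both services. Inside the Compose network, the application reaches Redis at the host name `redis`, which is the service name.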
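As the Scalability point notes, simply starting more Redis containers does not spread one data set across them. One common way to scale reads is primary/replica replication. The sketch below starts one primary and N replicas that follow it via `--replicaof`. The network name `redis-net`, the container names, and the helper name `start_redis_replicas` are hypothetical. It relies on containers on the same user-defined Docker network being able to reach each other by container name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: one Redis primary plus N replicas on a user-defined network.
#   $1 = number of replicas (default 2)
# DOCKER defaults to "docker"; set DOCKER=echo for a dry run.
start_redis_replicas() {
  local replicas="${1:-2}"
  local docker="${DOCKER:-docker}"
  local i
  # A user-defined network gives containers DNS names equal to their container names.
  "$docker" network create redis-net
  "$docker" run -d --name redis-primary --network redis-net redis
  for i in $(seq 1 "$replicas"); do
    # Each replica copies the primary's data set and stays in sync with it.
    "$docker" run -d --name "redis-replica-$i" --network redis-net \
      redis redis-server --replicaof redis-primary 6379
  done
}
```

Writes must still go to `redis-primary`, because replicas are read-only by default. Automatic failover requires Redis Sentinel or Redis Cluster, which this sketch does not set up. Running it a second time fails at `network create` because the network already exists.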
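The Resource Efficiency point mentions adjusting resources. Docker's `docker update` command can change a running container's memory limit. Redis is not told about the new limit, though, so its own `maxmemory` setting may need to change as well. The sketch below handles both. It assumes the container was started without a password, so `redis-cli` needs no authentication. The helper name `resize_redis` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: change a running Redis container's memory limit and keep
# Redis's own maxmemory below it, so Redis evicts keys or rejects writes before
# the container hits its hard memory limit.
#   $1 = container name, $2 = Docker memory limit (e.g. 1g), $3 = Redis maxmemory (e.g. 800mb)
# DOCKER defaults to "docker"; set DOCKER=echo for a dry run.
resize_redis() {
  local name="$1" limit="$2" maxmem="$3"
  local docker="${DOCKER:-docker}"
  # Setting --memory-swap to the same value disables swap for the container and avoids
  # rejection when the new memory limit exceeds a previously set swap limit.
  "$docker" update --memory "$limit" --memory-swap "$limit" "$name"
  # Update Redis's limit at runtime (assumes no password is required).
  "$docker" exec "$name" redis-cli CONFIG SET maxmemory "$maxmem"
}
```

`CONFIG SET` changes only the running server. The new `maxmemory` value is lost on restart unless you also add it to the container's startup command or config file.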

Best Practices for Using Redis with Docker

When using Redis in Docker, following a few best practices helps keep deployments efficient, secure, and scalable.

Use Docker volumes for persistent data. Containers are ephemeral by default: data written inside a container is lost when the container is removed. Storing Redis data on a volume keeps it across container restarts and removals.

Limit resource usage. Redis can be resource-intensive, especially under high-throughput workloads. Docker lets you set CPU and memory limits on a container, which keeps Redis from consuming excessive resources and affecting other containers or the host system.

Use Docker Compose for multi-service setups. When Redis needs to interact with other services, Docker Compose manages the multi-container application and makes sure Redis is correctly connected to components such as web servers and databases.

Secure Redis. Authentication is not enabled by default, so configure it explicitly, for example by setting a password. Also restrict network access with firewalls or by publishing the Redis port only where it is needed. Running Redis inside a container does not make it secure on its own, so these additional measures are necessary.
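The practices above can be combined in a single `docker run` command. The sketch below uses a named volume, container CPU and memory limits, and a Redis `maxmemory` set below the container limit. It also reads a password from the `REDIS_PASSWORD` environment variable and publishes the port on `127.0.0.1` only. The helper name, the volume name `redis-data`, and the specific limits are hypothetical. The `allkeys-lru` eviction policy suits Redis used as a cache; for data you must not lose, the default `noeviction` policy is the safer choice. Passing the password as an argument leaves it visible in `docker inspect` output, so a mounted config file or a secrets mechanism is preferable in production.

```shell
#!/usr/bin/env bash
# Hypothetical helper that applies the best practices above in one docker run command.
#   $1 = container name (default redis-container)
# Requires REDIS_PASSWORD to be set. DOCKER defaults to "docker"; set DOCKER=echo for a dry run.
start_redis_hardened() {
  local name="${1:-redis-container}"
  if [ -z "${REDIS_PASSWORD:-}" ]; then
    echo "set REDIS_PASSWORD first" >&2
    return 1
  fi
  # Container limits: at most 512 MiB of memory and one CPU.
  # Port published on loopback only. Named volume keeps /data across container removals.
  # Redis settings: password, maxmemory below the container limit, LRU eviction, AOF persistence.
  "${DOCKER:-docker}" run -d --name "$name" \
    --memory 512m --cpus 1 \
    -p 127.0.0.1:6379:6379 \
    -v redis-data:/data \
    redis redis-server \
      --requirepass "$REDIS_PASSWORD" \
      --maxmemory 400mb \
      --maxmemory-policy allkeys-lru \
      --appendonly yes
}
```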

How to Install and Run Redis in Docker

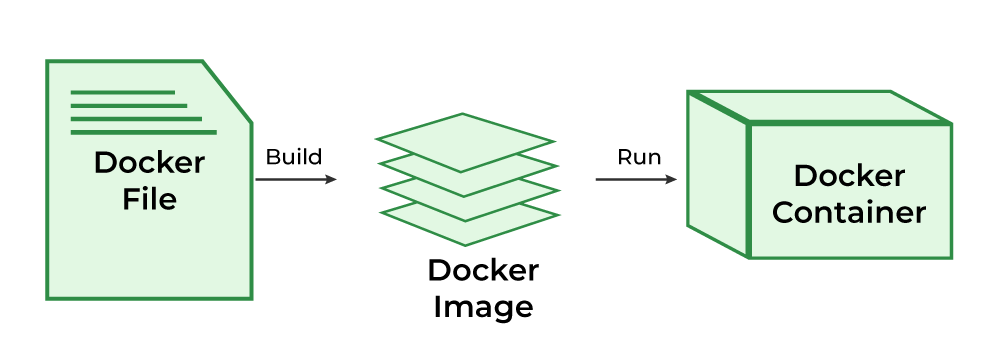

Running Redis in a Docker container takes only a few steps. For cost-sensitive workloads, Redis containers can also run on AWS EC2 Spot Instances. These offer discounted compute capacity but can be reclaimed at short notice, so they suit caches better than data you cannot afford to lose. Here is a simple guide to getting started:

Step 1: Install Docker: Before you can run Redis in Docker, you must have Docker installed. Download Docker from the official Docker website and follow the installation instructions for your operating system.

Step 2: Pull the Official Redis Docker Image: After installing Docker, pull the official Redis image from Docker Hub. The official image includes everything needed to run Redis inside a container.

Launch a terminal or command prompt, then type the following to pull the Redis image:

- docker pull redis

Step 3: Run a Redis Container: Once the image has finished downloading, start a container:

- docker run -d --name redis-container redis

Let’s dissect the command:

- --name redis-container: Gives the container the name redis-container, so later commands can refer to it.

- -d: This option launches the container in the background, or detached mode.

- redis: The image to run, in this case the official Redis image.

This command launches a Redis instance inside a Docker container.

To confirm that the Redis container is running, list the active containers:

- docker ps
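`docker ps` shows that the container process is running, but Redis may still be starting up or loading its data set. A more direct readiness check polls the server with `redis-cli ping` until it answers `PONG`. The sketch below does this. It assumes no password is set, since with a password `ping` returns an authentication error instead. The helper name `wait_for_redis` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until Redis inside a container answers PING with PONG.
#   $1 = container name (default redis-container)
#   $2 = number of attempts, one second apart (default 10)
# DOCKER defaults to "docker"; override it to use a compatible CLI.
wait_for_redis() {
  local name="${1:-redis-container}" attempts="${2:-10}" reply i
  for i in $(seq 1 "$attempts"); do
    # Any failure (container not up, Redis still loading) leaves reply empty or non-PONG.
    reply=$("${DOCKER:-docker}" exec "$name" redis-cli ping 2>/dev/null) || reply=""
    if [ "$reply" = "PONG" ]; then
      echo "Redis in $name is ready"
      return 0
    fi
    sleep 1
  done
  echo "Redis in $name did not answer PING after $attempts attempts" >&2
  return 1
}
```

In a script, `wait_for_redis redis-container` placed right after `docker run` lets later steps assume Redis is accepting commands.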

Step 4: Connect to Redis: To open the Redis command-line interface inside the running container, use:

- docker exec -it redis-container redis-cli

This opens the Redis command-line interface (CLI). There you can run Redis operations, such as setting and getting keys, just as you would against a standard Redis server.
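For one-off commands, you do not need an interactive session: `docker exec` can run `redis-cli` with the command as arguments. The sketch below wraps this in a hypothetical helper, `redis_cmd`. It assumes the container name used in this guide, which you can override with the hypothetical `REDIS_CONTAINER` variable.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run one redis-cli command inside the container, non-interactively.
# REDIS_CONTAINER defaults to "redis-container"; DOCKER defaults to "docker".
redis_cmd() {
  "${DOCKER:-docker}" exec "${REDIS_CONTAINER:-redis-container}" redis-cli "$@"
}

# Example usage against the running container:
#   redis_cmd SET greeting "hello"   # store a key
#   redis_cmd GET greeting           # read it back
#   redis_cmd DEL greeting           # delete it
```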

Step 5: Persist Data: Docker containers are ephemeral by default, so any data stored inside a container is erased when the container is removed. One way to preserve Redis data is to mount a host directory into the container (a bind mount). If a container named redis-container already exists, remove it first or choose another name:

- docker run -d --name redis-container -v /path/to/host/directory:/data redis

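The official image stores Redis's data in the /data directory inside the container, and this command mounts the given host directory there. Anything Redis persists to disk (by default, periodic RDB snapshots) therefore remains on the host even if the container is stopped or deleted. Writes made after the most recent snapshot, however, are not yet on disk.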
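To narrow the window of unsaved writes, you can turn on Redis's append-only file (AOF). The script below writes a small `redis.conf` that enables AOF. The directory name `redis-conf` is a hypothetical choice. Note that redis.conf does not allow comments on the same line as a directive, so each comment sits on its own line.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Write a minimal redis.conf enabling append-only-file persistence.
mkdir -p redis-conf
cat > redis-conf/redis.conf <<'EOF'
# Log every write to the append-only file, in addition to RDB snapshots.
appendonly yes
# fsync the AOF once per second, so at most about one second of writes can be lost.
appendfsync everysec
# Keep persistence files in /data, the directory mounted from the host.
dir /data
EOF

echo "Wrote redis-conf/redis.conf"
```

To use it, mount both the config directory and the data directory and pass the config path to `redis-server`:

`docker run -d --name redis-container -v "$PWD/redis-conf":/usr/local/etc/redis -v /path/to/host/directory:/data redis redis-server /usr/local/etc/redis/redis.conf`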

Step 6: Stop and Remove the Container: To stop the Redis container, run:

- docker stop redis-container

To remove the container, run:

- docker rm redis-container

Conclusion

For developers who want Redis's speed and efficiency together with the convenience, flexibility, and portability of Docker containers, Docker Redis is a powerful combination. Modern applications demand consistent, scalable, and manageable environments, and running Redis in Docker meets these needs well. It balances performance and flexibility, helping developers build responsive, robust applications that can be deployed across diverse environments. Docker also streamlines setting up and managing Redis instances, reducing the complexity usually associated with configuration and deployment. You might use Redis for real-time data processing, large-scale caching, or session management in web applications; in each case, Docker simplifies the process and shortens the development cycle. Its portability lets you move Redis containers from local development machines to production servers without worrying about inconsistencies or compatibility issues.