- Introduction to Data Integration and Azure Data Factory

- What is Azure Data Factory?

- Key Concepts in Azure Data Factory

- Setting Up Your Azure Data Factory Environment

- Creating a Data Pipeline in Azure Data Factory

- Transforming Data in Azure Data Factory

- Monitoring and Managing Data Pipelines

- Best Practices and Use Cases for Azure Data Factory

- Conclusion

Introduction to Data Integration and Azure Data Factory

In today’s data-driven world, businesses face the challenge of managing vast amounts of data from multiple sources. Integrating this data into a usable format and moving it to storage or analytics platforms is critical for making informed decisions. Data integration combines data from different sources to provide a unified view for better insights and analysis. Azure Data Factory (ADF) is a cloud-based data integration service offered by Microsoft Azure, designed to help automate and orchestrate the movement and transformation of data across various systems. It enables organizations to collect, integrate, and analyze data from disparate sources, making it easier to leverage the full potential of their data.

This guide will walk you through using Azure Data Factory for data integration. From setting up the service to executing data workflows, we will cover the key steps and best practices for a smooth and efficient data integration process. Whether you’re looking to automate ETL (Extract, Transform, Load) workflows or manage complex data pipelines, Azure Data Factory provides a robust and scalable solution.

What is Azure Data Factory?

Azure Data Factory (ADF) is a fully managed, serverless data integration service designed to facilitate data movement and transformation. It enables you to build, manage, and monitor data workflows that move data between on-premises and cloud-based sources. Azure Data Factory provides several features, including:

- Data Movement: Moving data from various sources to cloud storage or other databases.

- Data Transformation: Data can be transformed using various activities (like Data Flows) before being loaded into a target system.

- Pipeline Orchestration: Organizing data workflows across different data sources, including SQL databases, data lakes, and external services.

- Monitoring and Management: ADF provides tools to monitor the success and failure of data pipelines and debug issues in the data integration process.

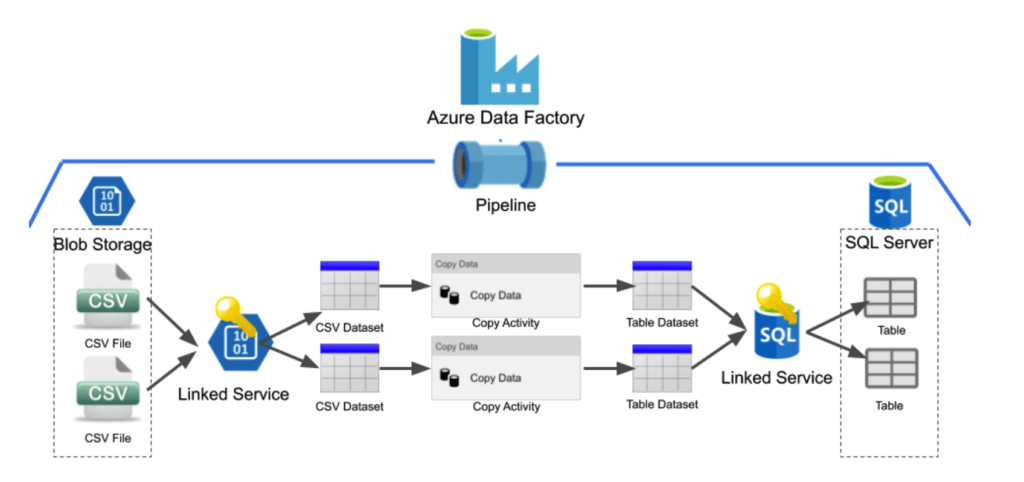

- Pipeline: In ADF, a pipeline is a logical grouping of activities that together perform a task. A pipeline can include multiple steps, such as data movement, transformation, and orchestration.

- Dataset: A dataset represents the data structure that you will work with. It defines the shape of the data, such as the file format or the SQL Database table structure.

- Linked Service: A linked service defines the connection information to your data sources and destinations. For example, it includes the connection string to your Azure SQL Database or Azure Blob Storage.

- Activity: An activity defines a step in the pipeline. It can be anything from moving data to transforming it. Some common activities include Copy Data, Data Flow, and Execute Pipeline.

- Triggers: Triggers start pipelines automatically based on certain conditions, such as time-based schedules or event-based actions.

- Integration Runtime: The integration runtime (IR) is the compute infrastructure ADF uses to perform data movement and transformation tasks. Depending on your data source location, it can be either cloud-based or self-hosted.

- If you don’t already have an Azure account, visit the Azure portal and sign up. You may be eligible for free credits when you sign up for the first time.

- Log in to the Azure portal.

- In the left sidebar, click on Create a resource.

- In the Search the Marketplace box, type Data Factory and select Azure Data Factory.

- Click Create, and you will be prompted to fill in the following details:

  - Subscription: Choose the appropriate Azure subscription.

  - Resource Group: Select an existing resource group or create a new one.

  - Region: Select the region where the ADF instance will reside.

  - Name: Give a name to your Azure Data Factory instance.

- After filling in the necessary details, click Review + Create and then Create.

- Next, you need to define connections to your data sources.

- In your Azure Data Factory instance, click on Author & Monitor.

- Click on the Manage tab in the ADF UI, then Linked Services.

- Click New to create a new linked service.

- Choose your data source type (e.g., Azure Blob Storage, SQL Database).

- Provide details like connection strings, authentication methods, and other necessary credentials.

- You may need an Integration Runtime (IR) to perform data integration tasks. The default Azure IR should be sufficient for cloud-based data, while a self-hosted IR will be needed for on-premises data.

- In the Manage tab, go to Integration Runtimes.

- Click New, and select the appropriate IR type.

- For a cloud-based IR, follow the prompts. For a self-hosted IR that reaches on-premises data, also install the necessary agent on a machine in your network.

- In the Author tab, click on Pipelines and then New Pipeline.

- Give your pipeline a name.

- From the activities pane on the left, drag an activity to the pipeline canvas.

- For instance, the Copy Data activity can copy data from one location to another.

- Configure the source and destination datasets by selecting the corresponding linked services.

- Define additional settings like mapping, file formats, and data transformations (if necessary).

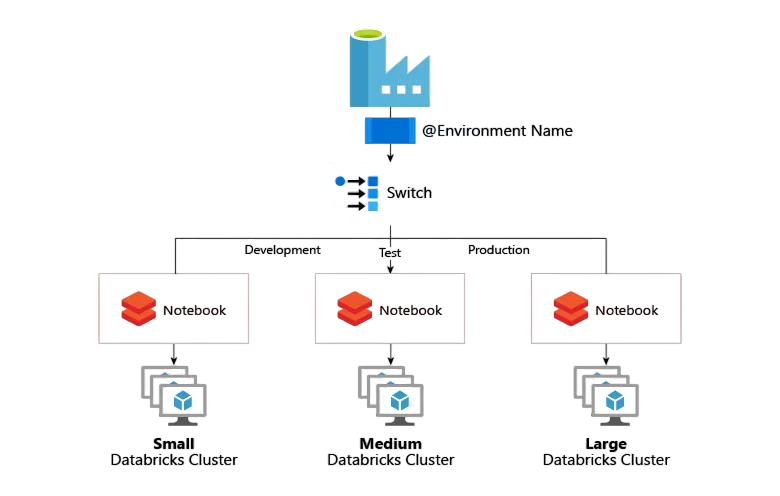

- You can configure triggers to automate the pipeline execution based on time or event.

- In the pipeline editor, click on Add Trigger.

- Choose to trigger the pipeline on a schedule or in response to an event.

- In the ADF UI, go to the Monitor tab.

- Here, you can see the status of your pipeline runs (Succeeded, Failed, or In Progress).

- For failed runs, click on the error message to troubleshoot the issue.

- Azure Data Factory provides detailed logs for each activity in a pipeline, allowing you to trace errors and debug issues.

- Click on a pipeline run to see detailed logs.

- Logs include activity-level diagnostics, such as data movement progress, transformation status, and failure messages.

- Use Parameterization: Make your pipelines, datasets, and linked services reusable and flexible by using parameters.

- Monitor Pipelines Regularly: Set up alerts to notify you of failures or anomalies in data pipelines.

- Version Control: Keep track of pipeline versions and configurations for traceability and rollback capabilities.

- ETL (Extract, Transform, Load): Moving and transforming data from multiple sources to a data warehouse for analytics.

- Data Migration: Migrating on-premises data to cloud platforms like Azure Data Lake Storage or Azure Synapse Analytics.

- Real-time Data Integration: Using ADF to manage real-time data pipelines for near-instantaneous data processing.

Key Concepts in Azure Data Factory

Before you dive into using Azure Data Factory, it’s essential to understand its key concepts: pipelines, datasets, linked services, activities, triggers, and integration runtimes.
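To see how these concepts reference one another, the sketch below models a linked service, a dataset, and a pipeline as plain Python dictionaries shaped like ADF's JSON definitions. All resource names (`BlobStorageLS`, `SalesCsv`, `LoadSales`, and so on) are hypothetical, and `linked_services_used` is an illustrative helper, not part of any Azure SDK.

```python
# Hypothetical resources, written as dicts that mirror ADF's JSON layout.
linked_services = {
    "BlobStorageLS": {"type": "AzureBlobStorage"},
    "SqlDatabaseLS": {"type": "AzureSqlDatabase"},
}

datasets = {
    "SalesCsv": {
        "type": "DelimitedText",
        "linkedServiceName": {"referenceName": "BlobStorageLS", "type": "LinkedServiceReference"},
    },
    "SalesTable": {
        "type": "AzureSqlTable",
        "linkedServiceName": {"referenceName": "SqlDatabaseLS", "type": "LinkedServiceReference"},
    },
}

pipeline = {
    "name": "LoadSales",
    "properties": {
        "activities": [
            {
                "name": "CopySales",
                "type": "Copy",
                "inputs": [{"referenceName": "SalesCsv", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "SalesTable", "type": "DatasetReference"}],
            }
        ]
    },
}


def linked_services_used(pipeline, datasets, linked_services):
    """Return the sorted names of linked services a pipeline reaches through its datasets."""
    names = set()
    for activity in pipeline["properties"]["activities"]:
        for ref in activity.get("inputs", []) + activity.get("outputs", []):
            dataset = datasets[ref["referenceName"]]
            service_name = dataset["linkedServiceName"]["referenceName"]
            if service_name not in linked_services:
                raise KeyError(f"unknown linked service: {service_name}")
            names.add(service_name)
    return sorted(names)


print(linked_services_used(pipeline, datasets, linked_services))
```

The chain runs in one direction: an activity points at datasets, and each dataset points at the linked service that holds its connection details.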

Setting Up Your Azure Data Factory Environment

To begin using Azure Data Factory, you must set up the environment. Here’s how you can get started:

Step 1: Create an Azure Account

Step 2: Create an Azure Data Factory Instance

Step 3: Set Up a Linked Service
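The details entered in the linked service form end up in a JSON definition. As a rough sketch, the snippet below builds such a definition for Azure Blob Storage as a Python dictionary. The helper name `blob_linked_service`, the resource name `BlobStorageLS`, and the environment variable `ADF_STORAGE_CONNECTION_STRING` are assumptions made for illustration.

```python
import os


def blob_linked_service(name, connection_string):
    """Build an Azure Blob Storage linked service definition as a dict."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


# Read the secret from the environment instead of hard-coding it.
# The variable name is hypothetical; the fallback is only a placeholder.
conn = os.environ.get("ADF_STORAGE_CONNECTION_STRING", "<connection-string>")
definition = blob_linked_service("BlobStorageLS", conn)

# Print only non-secret fields.
print(definition["name"], definition["properties"]["type"])
```

Keeping the connection string out of source code means the same definition can be reused across environments without exposing credentials.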

Step 4: Set Up the Integration Runtime

Creating a Data Pipeline in Azure Data Factory

Once the environment is set up, you can build your first data pipeline. A pipeline is where you define your data integration workflow.

Step 1: Create a New Pipeline

Step 2: Add Activities to the Pipeline
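For reference, a pipeline built on the canvas with a single Copy Data activity corresponds roughly to the definition sketched below. The helper `copy_pipeline` and the dataset names are hypothetical, and the source and sink types assume a delimited text file being copied into Azure SQL Database. Other stores use different type names.

```python
import json


def copy_pipeline(name, source_dataset, sink_dataset):
    """Build a pipeline definition containing a single Copy activity."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopySourceToSink",
                    "type": "Copy",
                    # Datasets are referenced by name, not embedded.
                    "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
                    "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
                    "typeProperties": {
                        "source": {"type": "DelimitedTextSource"},
                        "sink": {"type": "AzureSqlSink"},
                    },
                }
            ]
        },
    }


definition = copy_pipeline("LoadSales", "SalesCsv", "SalesTable")
print(json.dumps(definition, indent=2))
```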

Step 3: Add a Trigger (Optional)
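A time-based trigger can be pictured as a small definition that names a recurrence and the pipeline to start. The sketch below builds a daily schedule trigger as a Python dictionary. The helper `daily_schedule_trigger` and the names `DailyLoad` and `LoadSales` are hypothetical.

```python
from datetime import datetime, timezone


def daily_schedule_trigger(name, pipeline_name, start):
    """Build a schedule trigger definition that runs a pipeline once per day."""
    start_utc = start.astimezone(timezone.utc)
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_utc.strftime("%Y-%m-%dT%H:%M:%SZ"),
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {"pipelineReference": {"referenceName": pipeline_name, "type": "PipelineReference"}}
            ],
        },
    }


trigger = daily_schedule_trigger(
    "DailyLoad", "LoadSales", datetime(2025, 1, 1, 6, 0, tzinfo=timezone.utc)
)
print(trigger["properties"]["typeProperties"]["recurrence"]["startTime"])
```

Event-based triggers follow the same idea but describe a storage event rather than a recurrence.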

Transforming Data in Azure Data Factory

ADF provides various ways to transform data before it’s loaded into the destination system. Here are a few options.

Data flows in ADF are visually designed components that allow you to transform data. You can perform various operations like filtering, aggregating, and joining data within the flow.

- In the pipeline editor, select Data Flow from the activity list.

- Design your data flow by adding transformations like Source, Sink, Join, and Aggregate.

- Link your source and sink datasets to the data flow activity and configure any transformations.

To check your transformations, ADF offers a debugging feature to preview the data as it passes through the transformation steps.

- In the Data Flow editor, click Debug.

- Review the output and adjust transformations accordingly.
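To make the filter, join, and aggregate operations concrete, here is what they do to a small data set, written in plain Python. This is a conceptual analogy only, not ADF code. Data flows are designed visually and run on ADF-managed compute, and the sample records below are made up.

```python
from collections import defaultdict

# Hypothetical source data.
orders = [
    {"order_id": 1, "customer_id": "c1", "amount": 120.0},
    {"order_id": 2, "customer_id": "c2", "amount": 15.0},
    {"order_id": 3, "customer_id": "c1", "amount": 80.0},
]
customers = [
    {"customer_id": "c1", "region": "EU"},
    {"customer_id": "c2", "region": "US"},
]

# Filter: keep orders with an amount of at least 50.
large_orders = [o for o in orders if o["amount"] >= 50]

# Join: attach each order's customer region (inner join on customer_id).
region_by_customer = {c["customer_id"]: c["region"] for c in customers}
joined = [
    {**o, "region": region_by_customer[o["customer_id"]]}
    for o in large_orders
    if o["customer_id"] in region_by_customer
]

# Aggregate: total amount per region.
totals = defaultdict(float)
for row in joined:
    totals[row["region"]] += row["amount"]

print(dict(totals))
```

Previewing intermediate results such as `large_orders` and `joined` plays the same role as the Debug preview in the Data Flow editor.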

Monitoring and Managing Data Pipelines

Once you’ve created and deployed your data pipeline, monitoring its execution is key to ensuring the success of your data integration tasks.

Step 1: Monitor Pipeline Runs

Step 2: Viewing Logs and Debugging
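The same status information shown in the Monitor tab can also be checked from a script. The sketch below polls a run until it finishes. `wait_for_run` is a hypothetical helper that takes any function returning the current status string, so the actual API call is left to the caller. The status values are assumed to be `Queued`, `InProgress`, `Succeeded`, `Failed`, and `Cancelled`.

```python
import time

# Statuses after which a run will not change again (assumed values).
TERMINAL_STATUSES = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(get_status, poll_seconds=15.0, timeout_seconds=3600.0, sleep=time.sleep):
    """Poll get_status() until the run reaches a terminal status or the timeout expires."""
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"run still {status!r} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Usage with a fake status source standing in for a real API call.
fake_statuses = iter(["Queued", "InProgress", "InProgress", "Succeeded"])
result = wait_for_run(lambda: next(fake_statuses), poll_seconds=0.0, sleep=lambda s: None)
print(result)
```

Passing the status function in as an argument keeps the loop testable without an Azure connection. In a real script it would wrap whichever client call returns the run status.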

Best Practices and Use Cases for Azure Data Factory
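Parameterization is easiest to see in a pipeline definition. In the sketch below, a pipeline declares a `sourceFolder` parameter and passes it to a dataset through an expression. The pipeline, dataset, and parameter names are hypothetical, and `undeclared_parameters` is an illustrative check rather than an ADF feature.

```python
import json
import re

pipeline = {
    "name": "CopyFolder",
    "properties": {
        # Parameters declared once on the pipeline.
        "parameters": {
            "sourceFolder": {"type": "String", "defaultValue": "incoming"},
        },
        "activities": [
            {
                "name": "CopyFolderContents",
                "type": "Copy",
                "inputs": [
                    {
                        "referenceName": "FolderDataset",
                        "type": "DatasetReference",
                        # The dataset receives the value through an expression.
                        "parameters": {
                            "folder": {
                                "value": "@pipeline().parameters.sourceFolder",
                                "type": "Expression",
                            }
                        },
                    }
                ],
            }
        ],
    },
}

PARAM_REF = re.compile(r"@pipeline\(\)\.parameters\.(\w+)")


def undeclared_parameters(pipeline):
    """Return parameter names referenced in expressions but not declared on the pipeline."""
    declared = set(pipeline["properties"].get("parameters", {}))
    referenced = set(PARAM_REF.findall(json.dumps(pipeline)))
    return sorted(referenced - declared)


print(undeclared_parameters(pipeline))
```

With this pattern, one pipeline can serve many folders by supplying a different `sourceFolder` value for each run.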

Conclusion

Azure Data Factory (ADF) is a powerful and flexible tool that enables you to automate and orchestrate data integration tasks in the cloud. Its intuitive interface and robust monitoring and transformation capabilities make it an efficient solution for managing complex data workflows. By leveraging ADF, you can easily set up and manage data pipelines, transform data as needed, and integrate it with various cloud services to support advanced analytics and data-driven decision-making. This guide is designed to help you make the most out of Azure Data Factory, whether you’re just starting or an experienced user. Step-by-step, you’ll learn how to create and configure data pipelines, connect to different data sources, and apply transformations to meet your business needs. Additionally, you’ll gain an understanding of how ADF can work alongside other Azure services to streamline your data integration processes. With its scalability and wide range of features, Azure Data Factory provides an ideal platform for organizations looking to harness the power of their data. By following this guide, you’ll gain the knowledge and skills to effectively manage your data integration workflows and unlock valuable insights from your data.