- Introduction to Kubernetes

- Key Benefits of Kubernetes

- Security in Kubernetes

- Core Components of Kubernetes

- Networking in Kubernetes

- Kubernetes Architecture Explained

- Worker Nodes

- How Kubernetes Works

- Persistent Storage in Kubernetes

- Kubernetes Use Cases

- Kubernetes vs. Traditional Deployment Models

- Kubernetes Ecosystem and Tooling

- Conclusion

Introduction to Kubernetes

Kubernetes has become one of the most influential tools for managing containerized applications, changing the way applications are deployed, managed, and scaled. If you’re new to Kubernetes or looking to deepen your understanding, this blog gives an overview of what Kubernetes is, its core concepts, and how it works, without diving into complex code. In the era of cloud-native computing and microservices, managing applications efficiently has become a challenge for developers and IT operations teams, and this is where Kubernetes comes into play. Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications so they run reliably and efficiently across a cluster of machines. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). Containers, such as those built and run with Docker, let applications run in isolated environments; Kubernetes automates their deployment, scaling, and monitoring.


Key Benefits of Kubernetes

Kubernetes is a powerful platform that offers numerous benefits, especially for businesses running complex applications in dynamic environments. Here are some key advantages:

- Automation: Kubernetes automates the deployment, scaling, and management of containerized applications, reducing manual intervention and operational overhead.

- Scalability: Applications can be scaled up or down as resource requirements change. With autoscaling configured, Kubernetes adjusts the number of pod replicas based on observed demand (for example, CPU utilization), helping maintain availability without wasting resources.

- High Availability: If a node fails, Kubernetes automatically reschedules the affected pods onto healthy nodes, so the application keeps running with minimal disruption.

- Efficient Resource Utilization: Kubernetes optimizes resource allocation by managing compute, storage, and network resources across the cluster and placing workloads on nodes according to their resource requests.

- Portability: Kubernetes abstracts the underlying infrastructure, allowing applications to be moved easily across different cloud providers or on-premises data centers.

- Self-healing: Kubernetes automatically detects failures and restarts or replaces containers as necessary to keep your application running.
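Automatic scaling is easier to reason about with numbers. The Horizontal Pod Autoscaler documentation describes its core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no action taken when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below applies that rule plus min/max replica bounds; the function name and default bounds are illustrative assumptions, and real autoscaling also involves stabilization windows, pod readiness, and multiple metrics, which are left out here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified sketch of the HPA core scaling rule (hypothetical helper).

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 200 millicores against a 100-millicore target: scale out.
print(desired_replicas(4, 200, 100))  # -> 8
# 3 pods at 105% of target: within tolerance, no change.
print(desired_replicas(3, 105, 100))  # -> 3
# 2 pods at half the target: scale in.
print(desired_replicas(2, 50, 100))   # -> 1
```

Clamping matters as much as the formula: without `max_replicas`, a sudden spike could request far more pods than the cluster can hold.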

Security in Kubernetes

Security is a critical aspect of any application deployment, and Kubernetes provides several built-in features to help secure workloads. Role-Based Access Control (RBAC) grants users and service accounts only the permissions they need, preventing unauthorized access to the Kubernetes API. Network policies let administrators control which pods can communicate with each other, limiting exposure to potential threats; they are enforced by the cluster's network plugin, so that plugin must support them. Secrets keep sensitive data such as API keys and credentials out of container images and application code. By default, however, Secrets are stored unencrypted in etcd (their values are only base64-encoded), so enabling encryption at rest is recommended. Kubernetes also supports Linux security features such as seccomp and AppArmor, which limit what actions a containerized application can perform. Continuous monitoring and logging, using tools like Prometheus and Fluentd, help detect and respond to security threats in real time, improving overall system resilience.
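To make RBAC concrete, here is a minimal sketch, assuming a hypothetical namespace `dev` and user `jane`, that builds a Role allowing read-only access to pods and a RoleBinding that grants it. Kubernetes accepts manifests as JSON as well as YAML, so the printed output should be usable with `kubectl apply -f`; the helper functions are illustrative and not part of any Kubernetes library.

```python
import json


def read_only_pod_role(namespace: str) -> dict:
    """Role granting read-only access to pods in one namespace (hypothetical helper)."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group, where pods live
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],  # no create/update/delete
        }],
    }


def bind_role_to_user(role: dict, user: str) -> dict:
    """RoleBinding granting `role` to a single user in the role's namespace."""
    namespace = role["metadata"]["namespace"]
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"read-pods-{user}", "namespace": namespace},
        "subjects": [{
            "kind": "User",
            "name": user,
            "apiGroup": "rbac.authorization.k8s.io",
        }],
        "roleRef": {
            "kind": "Role",
            "name": role["metadata"]["name"],
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }


# Hypothetical namespace and user, for illustration only.
role = read_only_pod_role("dev")
binding = bind_role_to_user(role, "jane")

# Wrap both objects in a v1 List so they can live in one JSON file.
print(json.dumps({"apiVersion": "v1", "kind": "List",
                  "items": [role, binding]}, indent=2))
```

Keeping the Role and the RoleBinding separate is the usual RBAC design: the same Role can be bound to many users or service accounts without being redefined.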

Core Components of Kubernetes

To understand how Kubernetes works, it’s crucial to familiarize yourself with its core components. These components are designed to ensure that applications are deployed, managed, and scaled properly in a distributed environment. Here’s a breakdown:

- Pods: A pod is the smallest and most basic unit in Kubernetes. It consists of one or more containers that share the same network namespace. Pods enable containers to run together as a single unit and share resources like storage and networking.

- Nodes: A node is a physical or virtual machine in a Kubernetes cluster that runs containerized applications. Each node runs the components Kubernetes needs there: the kubelet, a container runtime (such as containerd or CRI-O), and kube-proxy.

- Kubernetes Master: The Kubernetes Master (now usually called the control plane) is responsible for managing the cluster. It consists of several components, including the API server, controller manager, scheduler, and etcd.

- Services: A Kubernetes service is an abstraction layer that defines a logical set of pods and provides a way to access them. Services enable communication between different components within a Kubernetes cluster, offering stability in accessing pods even if they are dynamically created and destroyed.

- Deployments: Deployments provide a way to manage and update the applications running in the Kubernetes cluster. They ensure that the specified number of replicas of a pod are running at all times and facilitate easy rollouts and rollbacks of application updates.

- ReplicaSets: A ReplicaSet ensures that a specified number of pod replicas are running at all times. Deployments create and manage ReplicaSets for you, so you rarely need to create them directly.

- ConfigMaps & Secrets: ConfigMaps and Secrets store configuration data and sensitive information (like passwords and API keys) for containers, making it easier to manage configurations across different environments.

- API Server (kube-apiserver): The API server is the entry point for all REST requests and acts as the bridge between users and the cluster. It validates and processes API requests, including those sent by kubectl, the Kubernetes CLI.

- Controller Manager (kube-controller-manager): This component manages controllers that handle the overall state of the system, such as the replication controller and the deployment controller. It ensures that the desired state of the application is maintained.

- Scheduler (kube-scheduler): The scheduler assigns pods to nodes based on resource availability and other factors like affinity, taints, and tolerations.

- etcd: etcd is a highly available, consistent, and distributed key-value store that stores all the cluster’s data, such as configurations and state information.

- Kubelet: The kubelet is an agent that runs on each worker node. It ensures that the containers are running as expected and communicates with the API server.

- Kube Proxy (kube-proxy): kube-proxy runs on each node and maintains the network rules that implement Services, so requests sent to a Service's IP and port are routed to one of its backing pods.

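- Container Runtime: The container runtime is responsible for running containers. Docker was traditionally used, but Kubernetes now talks to runtimes through the Container Runtime Interface (CRI), with containerd and CRI-O as common choices; built-in Docker Engine support (dockershim) was removed in Kubernetes 1.24.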

Once these components are in place, an application moves through the cluster as follows:

- Deploying Applications: To deploy an application, the user submits a configuration file (a manifest in YAML or JSON format) to the API server, typically with kubectl. The manifest defines the desired state of the application: the containers, services, and resources it needs. Kubernetes records this desired state, and its controllers create the necessary resources in the cluster.

- Scheduling Pods: The scheduler assigns each new pod to a node based on factors like available resources, node affinity, and taints, choosing the node that best satisfies the pod's requirements.

- Managing Resources: Kubernetes continuously compares the actual state of the cluster with the desired state and corrects any drift. Pods are not live-migrated: if a node runs short of resources, the kubelet may evict pods, and their controller creates replacements that the scheduler places elsewhere. With autoscaling configured, Kubernetes can also scale the pod count up or down based on demand.

- Load Balancing: Kubernetes provides built-in load balancing so that traffic sent to a Service is distributed across the pods behind it. Services give pods a stable way to reach each other, and Kubernetes keeps traffic routed correctly even as pods are created or destroyed.

- Self-healing: Kubernetes actively monitors the health of workloads. If a container fails, the kubelet restarts it; if a pod or its node fails, the controller that owns the pod replaces it, and the scheduler places the replacement on a healthy node.
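The desired-state idea behind these steps can be illustrated with a toy control loop. This Python sketch is a simplified stand-in for what a ReplicaSet controller does, not its actual implementation: it drops failed pods, creates replacements until the desired count is reached, and trims extras. The pod records and the `reconcile` helper are hypothetical, and the real controller applies its own ranking when choosing which surplus pods to delete.

```python
import itertools

# Hypothetical counter used to give each new pod a unique name suffix.
_ids = itertools.count(1)


def new_pod(app: str) -> dict:
    """Hypothetical pod record; real pods carry far more state."""
    return {"name": f"{app}-{next(_ids)}", "phase": "Running"}


def reconcile(desired: int, pods: list, app: str) -> list:
    """One pass of a simplified ReplicaSet-style control loop.

    Failed pods are dropped, missing replicas are created, and surplus
    replicas are trimmed, so the observed state converges on `desired`.
    """
    healthy = [p for p in pods if p["phase"] == "Running"]
    while len(healthy) < desired:
        healthy.append(new_pod(app))
    return healthy[:desired]


pods = [new_pod("web") for _ in range(3)]  # web-1, web-2, web-3
pods[1]["phase"] = "Failed"                # simulate a crashed pod or lost node
pods = reconcile(3, pods, "web")
print([p["name"] for p in pods])           # -> ['web-1', 'web-3', 'web-4']
```

Running the same function repeatedly is harmless once the state matches, which is why controllers can simply loop forever instead of tracking individual events.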

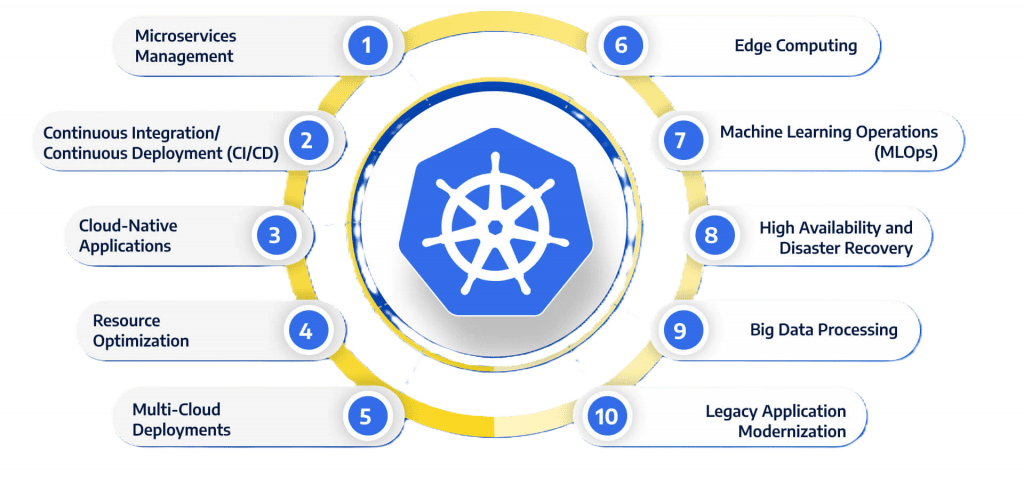

Common Kubernetes use cases include:

- Microservices Architecture: Kubernetes simplifies the management of microservices-based applications by providing features like automated scaling, self-healing, and distributed networking.

- DevOps and CI/CD Pipelines: Kubernetes can be integrated into DevOps workflows to automate application deployment, testing, and updates. Its ability to manage resources dynamically is ideal for Continuous Integration and Continuous Deployment (CI/CD) pipelines.

- Hybrid and Multi-cloud Deployments: Kubernetes enables organizations to deploy applications consistently across public and private clouds, offering greater flexibility and redundancy.

- Big Data Processing: Kubernetes is increasingly being used for managing large-scale big data applications by orchestrating distributed containers that run machine learning models or large data pipelines.

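Compared with traditional deployment models, Kubernetes offers:

- Resource Efficiency: Kubernetes improves resource utilization by running multiple containers on the same machine, avoiding the overhead of a full guest operating system per application that virtual machines impose.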

- Automation: Unlike traditional methods, which often require manual intervention, Kubernetes automates many operational tasks, reducing the likelihood of human error and operational overhead.

- Scalability: Scaling applications in traditional environments often requires manual configuration and provisioning of resources. In contrast, Kubernetes can scale workloads automatically based on demand once autoscaling is configured.

- Portability: Kubernetes provides a consistent environment across various cloud providers and on-premises data centers, making it easy to move workloads between environments.


Networking in Kubernetes

Kubernetes provides a networking model that simplifies communication between containers, pods, and external systems. Each pod is assigned its own cluster-wide IP address, and every pod can reach every other pod directly without network address translation (NAT), so applications do not need explicit port mappings. This model is implemented by network plugins that follow the Container Network Interface (CNI) specification. Kubernetes also provides service discovery and load balancing through Services, so applications remain reachable even when individual pods are created or removed. Ingress controllers manage external HTTP(S) access to Services, adding features such as TLS termination and path-based routing. Network plugins such as Calico, Flannel, and Cilium supply the pod network itself, and some of them (Calico and Cilium, for example) also enforce network policies.
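The sketch below, assuming hypothetical pods and IP addresses, shows how a Service's label selector turns a set of pods into endpoints: only pods whose labels match the selector and that report ready receive traffic. Round-robin rotation is used purely for illustration; how kube-proxy actually picks a backend depends on its proxy mode.

```python
import itertools

# Hypothetical pods: each has its own cluster-wide IP, labels, and readiness.
pods = [
    {"ip": "10.244.1.5", "labels": {"app": "web", "tier": "frontend"}, "ready": True},
    {"ip": "10.244.2.7", "labels": {"app": "web", "tier": "frontend"}, "ready": True},
    {"ip": "10.244.2.9", "labels": {"app": "web", "tier": "frontend"}, "ready": False},
    {"ip": "10.244.3.4", "labels": {"app": "db"}, "ready": True},
]


def matches(selector: dict, labels: dict) -> bool:
    """Equality-based selector: every key/value in the selector must be present."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict, pods: list) -> list:
    """IPs of ready pods matching the selector; not-ready pods get no traffic."""
    return [p["ip"] for p in pods if p["ready"] and matches(selector, p["labels"])]


web_endpoints = endpoints({"app": "web"}, pods)

# Illustrative round-robin over the endpoints (not kube-proxy's exact policy).
rotation = itertools.cycle(web_endpoints)
for _ in range(4):
    print(next(rotation))  # alternates between 10.244.1.5 and 10.244.2.7
```

Because clients talk to the Service rather than to pod IPs, pods can come and go (or fail readiness checks) without callers noticing.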

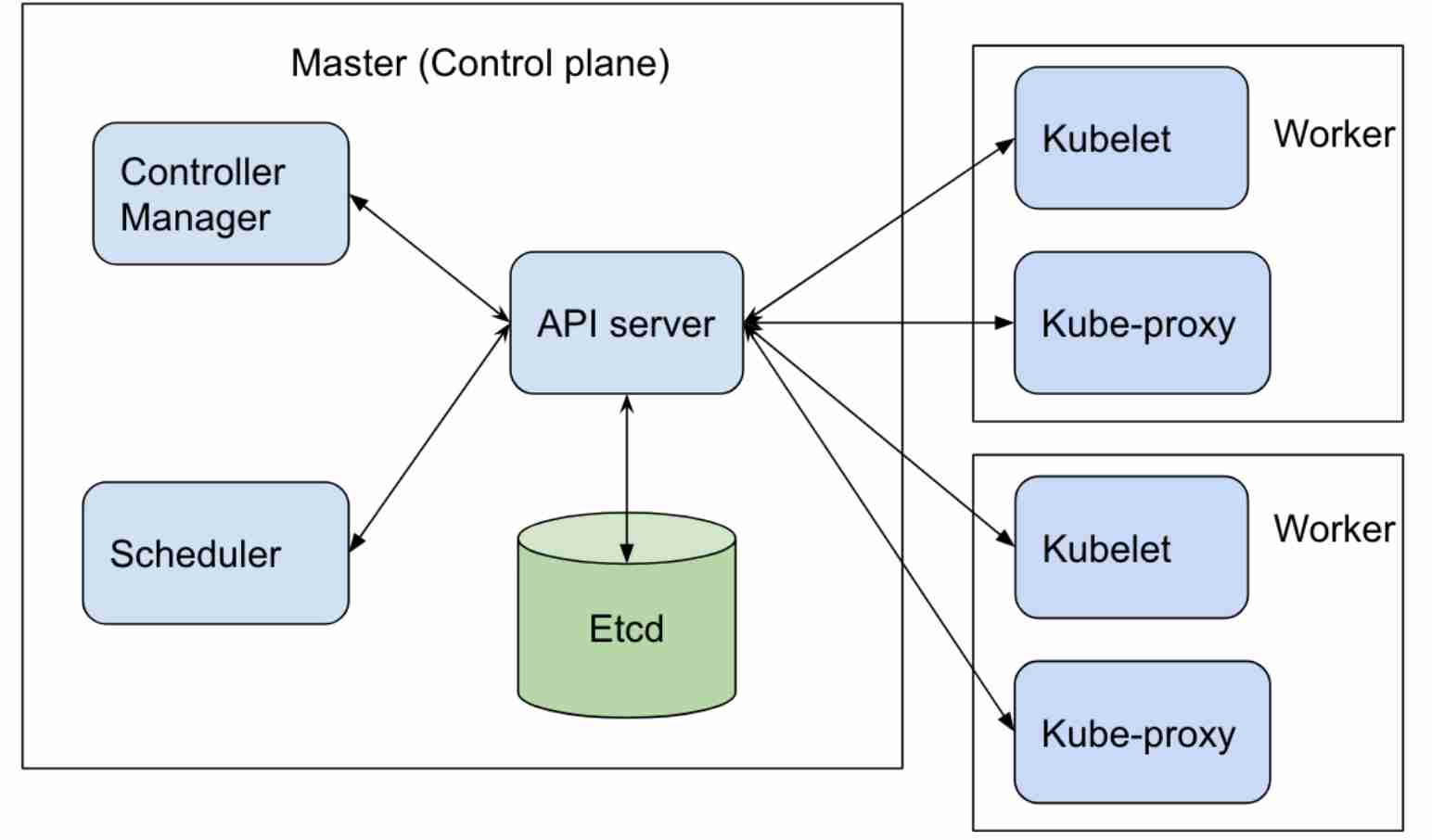

Kubernetes Architecture Explained

Kubernetes architecture follows a control plane/worker model (historically described as master-worker): the control plane manages the cluster, and worker nodes run the application workloads.

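Control Plane (Master Node): The control plane is responsible for managing the Kubernetes cluster. It makes global decisions about the cluster (such as scheduling) and monitors the state of the system. Its key components are the API server (kube-apiserver), the entry point for every request; the controller manager (kube-controller-manager), which runs the controllers that keep the actual state in line with the desired state; the scheduler (kube-scheduler), which assigns pods to nodes; and etcd, the key-value store that holds all cluster data.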
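The scheduler's decision can be pictured as two phases: filtering out nodes that cannot run the pod, then scoring the rest. This Python sketch is loosely modeled on that filter-and-score design and on a least-allocated scoring strategy; the node data, the taint strings, and the simple "every taint must be tolerated" rule are simplifying assumptions, and the real kube-scheduler runs many more plugins and matches tolerations field by field.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu: int      # allocatable millicores not yet requested by pods
    free_mem: int      # allocatable MiB not yet requested by pods
    capacity_cpu: int
    capacity_mem: int
    # Hypothetical "key=value:Effect" strings, treated as hard constraints.
    taints: frozenset = field(default_factory=frozenset)


def feasible(node: Node, cpu: int, mem: int, tolerations: frozenset) -> bool:
    """Filter phase: the pod's requests must fit and every taint must be tolerated."""
    return node.free_cpu >= cpu and node.free_mem >= mem and node.taints <= tolerations


def score(node: Node, cpu: int, mem: int) -> float:
    """Score phase: average fraction of CPU and memory left free after placement."""
    cpu_left = (node.free_cpu - cpu) / node.capacity_cpu
    mem_left = (node.free_mem - mem) / node.capacity_mem
    return (cpu_left + mem_left) / 2


def schedule(nodes: list, cpu: int, mem: int,
             tolerations: frozenset = frozenset()) -> Optional[str]:
    candidates = [n for n in nodes if feasible(n, cpu, mem, tolerations)]
    if not candidates:
        return None  # no node fits: the pod would stay Pending
    return max(candidates, key=lambda n: score(n, cpu, mem)).name


nodes = [
    Node("node-a", free_cpu=500, free_mem=1024, capacity_cpu=2000, capacity_mem=4096),
    Node("node-b", free_cpu=1500, free_mem=3072, capacity_cpu=2000, capacity_mem=4096),
    Node("node-c", free_cpu=4000, free_mem=8192, capacity_cpu=4000, capacity_mem=8192,
         taints=frozenset({"gpu=true:NoSchedule"})),
]

print(schedule(nodes, cpu=250, mem=512))  # -> node-b (node-c is tainted)
print(schedule(nodes, cpu=250, mem=512,
               tolerations=frozenset({"gpu=true:NoSchedule"})))  # -> node-c
print(schedule(nodes, cpu=5000, mem=512))  # -> None
```

Separating filtering from scoring keeps hard constraints (does it fit? is it allowed?) apart from soft preferences (which feasible node is best?).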

Worker Nodes

Worker nodes are the machines where the actual applications (containers) run. Each worker node runs the kubelet, an agent that makes sure the containers described in its pods are running and reports back to the API server; kube-proxy, which maintains the network rules that route Service traffic to pods; and a container runtime, such as containerd or CRI-O, that actually runs the containers.
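One visible piece of kubelet behavior is how it restarts a crashing container. The Kubernetes documentation describes an exponential back-off (10s, 20s, 40s, and so on) capped at five minutes, which shows up as a CrashLoopBackOff status. This small sketch computes that schedule under those assumptions; the helper name is hypothetical, it ignores the reset that happens after a container runs cleanly for a while, and exact behavior may differ between Kubernetes versions.

```python
def crash_loop_delays(restarts: int, base: int = 10, cap: int = 300) -> list:
    """Seconds the kubelet waits before each successive container restart.

    Sketch of the documented back-off: start at `base` seconds, double
    after every failed restart, never exceed `cap` (five minutes).
    """
    return [min(base * 2 ** i, cap) for i in range(restarts)]


print(crash_loop_delays(7))  # -> [10, 20, 40, 80, 160, 300, 300]
```

The cap keeps a permanently broken container from being retried too aggressively while still letting a transient failure recover without human intervention.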

How Kubernetes Works

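At its core, Kubernetes works as a set of control loops: you declare the desired state of an application, and Kubernetes continuously works to make the actual state of the cluster match it. In practice, this means accepting configuration through the API server, scheduling pods onto nodes, managing resources, load-balancing traffic through Services, and healing failures automatically.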
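Deploying an application starts with a manifest describing its desired state. This Python sketch, assuming a hypothetical app named `web` and the public `nginx:1.25` image as a placeholder, builds a Deployment that asks for three replicas and a Service that load-balances across them by label. Because Kubernetes accepts JSON manifests, the printed output should be usable with `kubectl apply -f`; the helper functions themselves are illustrative only.

```python
import json


def deployment(name: str, image: str, replicas: int, port: int) -> dict:
    """Hypothetical helper building an apps/v1 Deployment manifest."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                 # desired state: how many pods
            "selector": {"matchLabels": labels},  # which pods this Deployment owns
            "template": {
                "metadata": {"labels": labels},   # must match the selector
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Requests are what the scheduler uses to pick a node.
                        "resources": {"requests": {"cpu": "100m", "memory": "128Mi"}},
                    }],
                },
            },
        },
    }


def service(name: str, port: int, target_port: int) -> dict:
    """Hypothetical helper building a v1 Service that selects pods by label."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},  # routes to pods carrying this label
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


dep = deployment("web", "nginx:1.25", replicas=3, port=80)
svc = service("web", port=80, target_port=80)
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [dep, svc]}, indent=2))
```

The label `app: web` is the only link between the two objects: the Deployment stamps it onto every pod, and the Service uses it to find them.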


Persistent Storage in Kubernetes

While containers are typically ephemeral, many applications need persistent storage that retains data across pod restarts, so that data stays available even in dynamic environments. Kubernetes provides this through Persistent Volumes (PVs) and Persistent Volume Claims (PVCs), which abstract the underlying storage and let applications request storage without knowing how it is provided.

Kubernetes supports various storage backends, including NFS, Ceph, AWS EBS, Google Persistent Disks, and Azure Disks, offering flexibility across different environments. StatefulSets ensure stable network identities and persistent storage for stateful applications, such as databases and distributed systems. Kubernetes also enables dynamic provisioning, automatically allocating storage resources based on predefined Storage Classes, reducing the need for manual intervention and ensuring efficient storage management.
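How a claim finds a volume can be sketched in a few lines. The Python example below, assuming hypothetical volumes and claims, matches on storage class, access modes, and size, and picks the smallest suitable unbound volume, which roughly mirrors how Kubernetes binds a PVC to a pre-provisioned PV. When no volume matches and the StorageClass supports dynamic provisioning, a real cluster would create a new volume instead; that part is not modeled here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersistentVolume:
    name: str
    capacity_gi: int
    access_modes: frozenset
    storage_class: str
    bound: bool = False


@dataclass
class Claim:
    request_gi: int
    access_modes: frozenset
    storage_class: str


def bind(claim: Claim, volumes: list) -> Optional[str]:
    """Bind the claim to the smallest unbound PV that satisfies it (simplified)."""
    candidates = [
        pv for pv in volumes
        if not pv.bound
        and pv.storage_class == claim.storage_class
        and claim.access_modes <= pv.access_modes
        and pv.capacity_gi >= claim.request_gi
    ]
    if not candidates:
        return None  # claim stays Pending (dynamic provisioning not modeled)
    best = min(candidates, key=lambda pv: pv.capacity_gi)
    best.bound = True
    return best.name


# Hypothetical pre-provisioned volumes.
volumes = [
    PersistentVolume("pv-small", 5, frozenset({"ReadWriteOnce"}), "standard"),
    PersistentVolume("pv-large", 100, frozenset({"ReadWriteOnce"}), "standard"),
    PersistentVolume("pv-shared", 50, frozenset({"ReadWriteMany"}), "nfs"),
]

print(bind(Claim(10, frozenset({"ReadWriteOnce"}), "standard"), volumes))  # -> pv-large
print(bind(Claim(20, frozenset({"ReadWriteMany"}), "nfs"), volumes))       # -> pv-shared
```

Choosing the smallest sufficient volume avoids tying up a large disk for a small request, which is why `pv-small` remains available for a later, smaller claim.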

Kubernetes Use Cases

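Kubernetes has numerous use cases that benefit organizations across various industries. Key scenarios include microservices architectures, DevOps and CI/CD pipelines, hybrid and multi-cloud deployments, and big data processing.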

Kubernetes vs. Traditional Deployment Models

Kubernetes provides several advantages over traditional deployment methods, such as virtual machines (VMs) or manual container management, chiefly in resource efficiency, automation, scalability, and portability.


Kubernetes Ecosystem and Tooling

The Kubernetes ecosystem is vast, with numerous tools and integrations that extend its functionality. Helm, a package manager for Kubernetes, simplifies application deployment by managing Kubernetes manifests as reusable charts. Service meshes like Istio and Linkerd enhance microservices networking by providing traffic control, security, and observability. Kubernetes-native CI/CD tools like ArgoCD and Tekton streamline automated application delivery, ensuring faster and more reliable deployments. Monitoring and logging solutions such as Prometheus, Grafana, and Fluentd help administrators track application performance and troubleshoot issues effectively. Additionally, serverless frameworks like Knative allow developers to run event-driven applications on Kubernetes without managing the underlying infrastructure, further expanding Kubernetes’ capabilities in cloud-native environments.

Conclusion

Kubernetes has transformed the way organizations deploy, manage, and scale their applications. With its powerful features and automation capabilities, it has become the de facto platform for managing containerized applications. Understanding the core components, architecture, and functionality of Kubernetes is essential for leveraging its full potential. Whether you’re building microservices, running large-scale applications, or optimizing infrastructure, Kubernetes provides the flexibility and scalability needed for modern software development and deployment.