- Introduction to DP-201 Exam

- Eligibility and Prerequisites

- Exam Format and Structure

- Key Domains and Objectives

- Data Storage Solutions in Azure

- Designing for Data Security and Compliance

- Monitoring and Optimization Strategies

- Recommended Study Resources

- Career Benefits of DP-201 Certification

- Conclusion

Introduction to DP-201 Exam

The DP-201 certification exam, formally Exam DP-201: Designing an Azure Data Solution, is part of Microsoft’s professional certification route for candidates who want to prove their ability to design and implement data solutions on Microsoft Azure. The exam is aimed at data platform, data architecture, and data management professionals, and it evaluates the ability to design and manage scalable, secure data solutions with Azure services. DP-201 is one of the two exams (the other being DP-200) required for the Microsoft Certified: Azure Data Engineer Associate certification. As companies and organizations move more data workloads to the cloud, Azure Data Engineers are in high demand. Passing the DP-201 exam demonstrates your skills in planning, deploying, and managing multiple Azure data services.

Eligibility and Prerequisites

While there are no strict prerequisites for taking the DP-201 exam, Microsoft recommends that candidates have prior experience with the following:

- Experience with Data Engineering: Candidates should have hands-on experience designing and managing data solutions, including using Azure data services to process and manage large datasets.

- Knowledge of Azure: Familiarity with Microsoft Azure services, particularly Azure storage solutions, Azure Key Vault, and security features, is crucial. A working understanding of cloud concepts and services such as virtual machines, networking, and Azure SQL Database is beneficial.

- Completion of DP-200: It is also recommended, though not mandatory, to complete Exam DP-200 (Implementing an Azure Data Solution), as this exam focuses more on the implementation aspects, whereas DP-201 focuses more on design and planning.

- Hands-on Experience: Practical experience using Azure data solutions is critical. Candidates should have at least 6-12 months of hands-on experience with Azure’s data services, such as Azure Data Factory, Azure SQL, and Azure Synapse Analytics.

Exam Format and Structure

The DP-201 exam follows a largely multiple-choice format designed to test your ability to design Azure data solutions. The exam typically consists of 40-60 questions, though the number may vary. The questions are primarily multiple-choice but may include other formats, such as scenario-based questions in which you must apply your knowledge to design a solution, as well as the following:

- Drag-and-Drop: Sorting or matching tasks to demonstrate your understanding.

- Hot Area: Selecting areas in diagrams or images to indicate solutions.

- Simulations: Some practical scenarios where you’ll select the right solution or complete a configuration.

You have 180 minutes (3 hours) to complete the exam. Microsoft reports scores on a scale of 1 to 1000, and a score of 700 or higher is required to pass. The exam is available in multiple languages, and you can take it at an official Pearson VUE test center or remotely online.

Key Domains and Objectives

The DP-201 exam assesses your ability to design solutions across several domains. These domains are:

Designing Data Storage Solutions (40-45%)

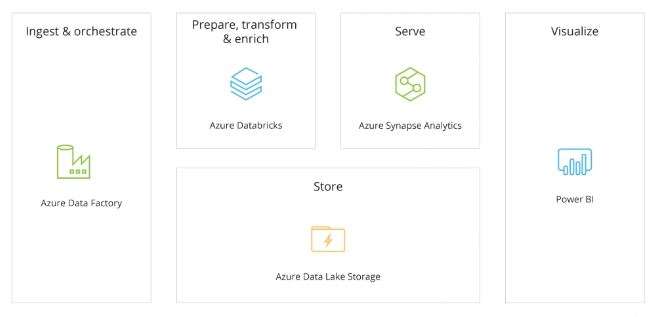

- Design for Data Partitioning and Indexing: Knowledge of partitioning and indexing data for large-scale systems.

- Design for Data Security: Implementing security measures such as encryption, access control, and data masking for Azure data solutions.

- Design for Data Recovery and Backup: Solutions for ensuring business continuity and disaster recovery.

- Design for Data Integration: Integrating data across Azure services like Azure Data Lake, Blob Storage, and SQL Database.
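
To make the partitioning objective more concrete, here is a minimal Python sketch of hash partitioning: records that share a partition key always land in the same partition, and keys are spread roughly evenly across partitions. The function names, the `customer_id` field, and the sample orders are hypothetical and chosen for illustration; this is not how any particular Azure service implements partitioning internally.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, partition_count: int) -> int:
    """Map a partition key to a stable partition index in [0, partition_count)."""
    # A cryptographic hash is used only because it is stable across runs;
    # Python's built-in hash() is randomized per process for strings.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def distribute(records, key_field: str, partition_count: int) -> dict:
    """Group records by the partition their key hashes to."""
    partitions = defaultdict(list)
    for record in records:
        index = partition_for(record[key_field], partition_count)
        partitions[index].append(record)
    return dict(partitions)


# Hypothetical sample data: orders keyed by customer.
orders = [
    {"customer_id": "c-001", "amount": 40},
    {"customer_id": "c-002", "amount": 15},
    {"customer_id": "c-001", "amount": 99},
    {"customer_id": "c-003", "amount": 7},
]

layout = distribute(orders, "customer_id", partition_count=4)
for index, rows in sorted(layout.items()):
    print(index, rows)
```

The design point for the exam is the choice of key: a key with many distinct values that matches the dominant query filter keeps partitions balanced and lets most queries touch a single partition.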

Designing Data Processing Solutions (25-30%)

- Design for Batch Processing: Designing batch-processing architectures using Azure Data Factory, HDInsight, or Databricks.

- Design for Real-Time Processing: Designing event-based data processing solutions using Azure Stream Analytics or Azure Databricks.

- Design for Data Flow and Data Pipeline: Designing data flows and pipelines that integrate data from various sources and enable transformation.

Designing for Data Security and Compliance (10-15%)

- Design for Security: Applying security principles and frameworks to ensure data is safe and compliant with regulatory requirements.

- Design for Compliance: Ensuring the design adheres to regulations like GDPR, HIPAA, or industry-specific standards.

- Designing Auditing and Logging: Implementing audit trails for security and regulatory requirements.

Monitoring and Optimization Strategies (15-20%)

- Design for Monitoring: Implementing logging, monitoring, and alerts for tracking the performance of data systems.

- Design for Performance Tuning: Optimizing the performance of data pipelines, databases, and queries.

- Cost Optimization: Ensuring the designed solutions are cost-effective by using appropriate resources and scaling.

Data Storage Solutions in Azure

Azure provides a variety of data storage solutions, and the DP-201 exam requires a deep understanding of the following:

- Azure Blob Storage: A scalable solution for storing unstructured data, including text and binary data, often used for data lakes.

- Azure Data Lake Storage: A storage service for big data analytics that supports building large-scale data lakes.

- Azure SQL Database: A fully managed relational database (DBaaS) service suitable for structured data and transactional workloads.

- Azure Cosmos DB: A NoSQL database offering low-latency and scalable solutions for global data distribution.

- Azure Synapse Analytics: A cloud-based analytics platform that integrates big data and data warehousing.

Designing the appropriate storage solution depends on the nature of the data, its volume, and its access patterns.
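
As a study aid, the service descriptions above can be condensed into a rough rule of thumb. The sketch below is a deliberately simplified Python helper: the `Workload` fields and the `suggest_storage` function are hypothetical, and the ordering of the rules is an assumption made for illustration. Real designs weigh many more factors (volume, latency targets, cost, consistency requirements) than four boolean flags.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """A coarse, hypothetical description of a data workload."""
    structured: bool            # data has a fixed relational schema
    transactional: bool         # many small reads/writes (OLTP-style)
    analytical: bool            # large scans and aggregations
    globally_distributed: bool  # low-latency access from multiple regions


def suggest_storage(w: Workload) -> str:
    """Return a first-guess Azure service for the workload (rule of thumb only)."""
    if w.globally_distributed and not w.structured:
        return "Azure Cosmos DB"
    if w.structured and w.transactional:
        return "Azure SQL Database"
    if w.structured and w.analytical:
        return "Azure Synapse Analytics"
    if w.analytical:
        return "Azure Data Lake Storage"
    return "Azure Blob Storage"


# Example: raw, unstructured files kept for later big data analytics.
raw_logs = Workload(structured=False, transactional=False,
                    analytical=True, globally_distributed=False)
print(suggest_storage(raw_logs))
```

Treat the output as a starting point for discussion, not a decision; exam case studies usually add constraints that override a simple mapping like this one.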

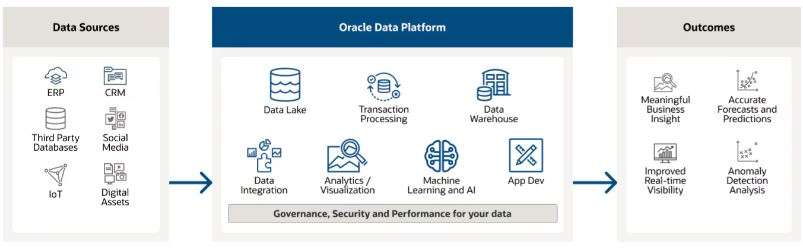

Data Processing and Security in Azure

Efficient data processing and strong security measures are essential components of any modern Azure-based data solution. Organizations rely on robust data processing frameworks to handle large-scale data workloads while ensuring security and compliance with industry standards.

Data Processing Solutions in Azure

Processing data efficiently is critical for organizations dealing with high volumes of structured and unstructured data. Azure provides several data processing solutions designed for batch and real-time processing, enabling organizations to transform, analyze, and integrate data seamlessly.

- Azure Data Factory: A cloud-based ETL (Extract, Transform, Load) service that allows users to create, schedule, and orchestrate complex data pipelines. It is used to automate the movement and transformation of data across different storage and processing systems. Azure Data Factory is primarily batch-oriented, with schedule-based and event-based triggers, which makes it an essential orchestration tool for enterprise-level data management.

- Azure Stream Analytics: A real-time data processing service that enables users to analyze streaming data from sources such as IoT devices, application logs, and social media feeds. This service allows organizations to detect anomalies, generate alerts, and gain actionable insights with minimal configuration.

- Azure Databricks: A fast, scalable, and collaborative Apache Spark-based platform designed for big data analytics and machine learning. It integrates with Azure services and supports a wide range of data processing use cases, including ETL workflows, predictive analytics, and AI-powered solutions.

- Azure HDInsight: A fully managed cloud service for running open-source frameworks such as Hadoop, Spark, and Hive. It enables organizations to process massive datasets efficiently using distributed computing, making it suitable for large-scale analytics, reporting, and business intelligence applications.

Designing an optimal data processing solution requires a clear understanding of batch processing versus real-time processing. Batch processing handles large volumes of data at scheduled intervals, making it ideal for data aggregation, reporting, and historical analysis. Real-time processing, on the other hand, deals with continuous data streams, enabling immediate insights and decision-making. A well-architected solution integrates both approaches, leveraging the right Azure services for each scenario.
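
The difference between the two models can be shown in a few lines of plain Python. This is a conceptual sketch, not code for any Azure service: the event tuples, the function names, and the 10-second window are assumptions made for illustration. The batch function sees the complete dataset before producing one result, while the streaming function emits a result each time a fixed (tumbling) time window closes.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Hypothetical event shape: (timestamp_seconds, sensor_id, value)
Event = tuple[int, str, float]


def batch_totals(events: Iterable[Event]) -> dict[str, float]:
    """Batch: read the whole dataset, then return one aggregate per sensor."""
    totals: dict[str, float] = defaultdict(float)
    for _, sensor, value in events:
        totals[sensor] += value
    return dict(totals)


def tumbling_window_totals(
    events: Iterable[Event], window_seconds: int
) -> Iterator[tuple[int, dict[str, float]]]:
    """Streaming: yield (window_start, totals) as soon as each window closes.

    Assumes events arrive in timestamp order.
    """
    current_start = None
    totals: dict[str, float] = defaultdict(float)
    for ts, sensor, value in events:
        window_start = ts - ts % window_seconds
        if current_start is None:
            current_start = window_start
        elif window_start != current_start:
            # The previous window is complete, so emit it and start a new one.
            yield current_start, dict(totals)
            totals = defaultdict(float)
            current_start = window_start
        totals[sensor] += value
    if current_start is not None:
        yield current_start, dict(totals)


events = [(0, "a", 1.0), (5, "b", 2.0), (12, "a", 3.0), (19, "a", 1.5), (21, "b", 4.0)]

print(batch_totals(events))
for window in tumbling_window_totals(events, window_seconds=10):
    print(window)
```

The batch result is available only after all input is read, whereas the windowed version produces partial answers continuously, which is the trade-off between completeness and latency that the design questions probe.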

Designing for Data Security and Compliance

Ensuring data security and compliance is paramount when designing Azure data solutions. Organizations must implement best practices to protect sensitive data, maintain regulatory compliance, and safeguard against security threats.

Protecting data at rest and in transit is a critical security measure. Azure offers built-in services such as Azure Key Vault, which provides secure key management and supports encryption. Encrypting sensitive data helps keep it unreadable to unauthorized parties, even in the event of a data breach.

Implementing fine-grained access control mechanisms is essential for protecting data assets. Azure Active Directory (Azure AD) and Azure Role-Based Access Control (RBAC) allow organizations to define user permissions, restrict data access, and enforce authentication protocols. These access control policies help prevent unauthorized data exposure.

Regulatory compliance is a major concern for businesses operating in various industries. Microsoft Azure provides built-in compliance tools to help organizations adhere to standards such as GDPR, HIPAA, and SOC. Implementing audit trails and logging mechanisms allows organizations to track data access, monitor security events, and generate compliance reports as needed.

Protecting personally identifiable information (PII) and sensitive business data is crucial for maintaining privacy and security. Azure SQL offers Dynamic Data Masking, which obfuscates sensitive data in query results to prevent unauthorized viewing. Anonymization techniques further enhance data security by ensuring that datasets used for analytics and testing do not expose confidential information.

By integrating advanced security measures and compliance frameworks into Azure data solutions, organizations can mitigate risks, protect critical assets, and maintain trust with customers and stakeholders. Combining efficient data processing capabilities with strong security practices ensures the development of scalable, reliable, and secure data solutions on the Azure platform.
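
The idea behind data masking can be illustrated with a short Python sketch. It does not reproduce Azure SQL's Dynamic Data Masking functions or their exact output formats; the mask rules, field names, and the `can_unmask` flag are hypothetical stand-ins showing the general pattern: stored data is unchanged, and what a caller sees depends on their permissions.

```python
def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        # Not a recognizable address, so hide everything.
        return "*" * len(email)
    return local[0] + "*" * (len(local) - 1) + "@" + domain


def mask_last4(value: str) -> str:
    """Hide all but the last four characters."""
    visible = value[-4:]
    return "*" * (len(value) - len(visible)) + visible


# Hypothetical mapping from sensitive field name to its masking rule.
MASKS = {"email": mask_email, "card_number": mask_last4}


def view_record(record: dict, can_unmask: bool) -> dict:
    """Return the record as a given caller should see it."""
    if can_unmask:
        return dict(record)
    return {
        field: MASKS[field](value) if field in MASKS else value
        for field, value in record.items()
    }


customer = {
    "name": "Alice",
    "email": "alice@example.com",
    "card_number": "4111111111111111",
}

print(view_record(customer, can_unmask=False))
print(view_record(customer, can_unmask=True))
```

Note that masking of this kind only limits what is displayed; it is a complement to encryption and access control rather than a replacement for them.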

Monitoring and Optimization Strategies

Effective monitoring and optimization of Azure data solutions are essential to ensure that systems are running efficiently and cost-effectively:

- Monitoring Solutions: Leverage Azure Monitor, Log Analytics, and Application Insights to collect metrics and logs so data solutions work optimally.

- Performance Optimization: Apply methods to enhance performance, including query optimization, data partitioning, and scaling resources.

- Cost Optimization: Create solutions that balance performance and cost by using cost-efficient storage tiers, selecting appropriately sized compute resources, and tuning data pipelines so they consume only the resources they need.
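
To illustrate what an alert on a performance metric does conceptually, here is a small Python sketch of a rolling-average threshold rule. It is not the Azure Monitor API: the `LatencyAlert` class, the 100 ms threshold, and the sample values are all hypothetical. The point is the design choice of alerting on an average over a window rather than on a single sample, which reduces noise from one-off spikes.

```python
from collections import deque


class LatencyAlert:
    """Fire when the average of the last `window` samples exceeds a threshold."""

    def __init__(self, threshold_ms: float, window: int):
        self.threshold_ms = threshold_ms
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically

    def observe(self, latency_ms: float) -> bool:
        """Record one sample and return True if the alert should fire."""
        self.samples.append(latency_ms)
        window_full = len(self.samples) == self.samples.maxlen
        average = sum(self.samples) / len(self.samples)
        # Wait for a full window so a single early spike cannot trigger the alert.
        return window_full and average > self.threshold_ms


alert = LatencyAlert(threshold_ms=100, window=3)
readings = [90, 95, 130, 140, 20]
fired = [alert.observe(value) for value in readings]
print(fired)
```

Choosing the window size is a trade-off: a longer window suppresses more noise but delays detection of a genuine degradation.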

Recommended Study Resources

Preparing for the DP-201 exam requires a combination of hands-on experience and study. Here are some recommended resources:

- Microsoft learning paths: Microsoft offers free learning paths tailored for the DP-201 exam, covering each domain in detail.

- Official study guide: The official study guide provides in-depth knowledge of the exam objectives.

- Online courses: Platforms like Pluralsight, Udemy, and LinkedIn Learning offer courses that cover Azure Data Engineering topics.

- Azure documentation: The Azure documentation is an invaluable resource for understanding the features and capabilities of Azure services.

Career Benefits of DP-201 Certification

Obtaining the Microsoft Certified: Azure Data Engineer Associate certification demonstrates your expertise in designing data solutions in Azure, which can open up many career opportunities:

- Increased Job Opportunities: With the rise of cloud technologies, businesses seek qualified Azure professionals to manage their data workloads.

- Higher Earning Potential: Certified professionals often earn higher salaries than their non-certified counterparts.

- Skill Recognition: The certification is recognized globally and validates your skills in designing, implementing, and managing Azure data solutions.

Conclusion

The DP-201 exam thoroughly assesses your ability to design and deploy Azure data solutions. It examines your knowledge of critical areas like data storage, integration, and security in the Azure environment. By preparing well, you will learn how to use different Azure services and tools to solve business problems. Successful candidates need to prove they can plan, design, and execute data solutions that are secure and efficient. This involves data model creation, data processing implementation, and data security management within Azure environments.

Familiarity with foundational services like Azure SQL Database, Azure Synapse Analytics, and Azure Blob Storage is essential to your study. Moreover, knowledge of best practices, design patterns, and Azure security features will help you pass the exam and become proficient in developing scalable and dependable data solutions on the Azure platform. Hands-on lab practice and exam study materials will increase your preparedness. Knowing the exam’s objectives and making time to study will allow you to sit for the DP-201 exam with confidence and earn the Azure certification.