- Introduction

- Setting Up Apache Kafka on AWS MSK

- MSK Cluster Configuration and Management

- Security and Access Control in MSK

- Integrating MSK with AWS Lambda and Other Services

- Performance Tuning and Best Practices

- Conclusion

AWS Managed Streaming for Apache Kafka (MSK) is a fully managed service that simplifies the deployment and management of Kafka clusters, enabling real-time data streaming applications. Setting up Kafka on MSK involves creating a cluster, configuring networking, and monitoring performance using AWS CloudWatch. Effective cluster management includes topic creation, partitioning, and scaling to optimize throughput and reliability, all of which are key topics covered in AWS Training to ensure a robust and scalable cloud infrastructure. Security is ensured through encryption, IAM-based access control, VPC configurations, and audit logging. MSK seamlessly integrates with AWS Lambda, S3, Redshift, and other services for efficient data processing. Performance tuning involves optimizing broker configurations, scaling strategies, and consumer lag management. By following best practices, organizations can ensure high availability and efficient streaming workflows. MSK reduces operational complexities while offering scalability, security, and seamless integration with AWS ecosystems.

To Earn Your AWS Certification, Gain Insights From Leading AWS Experts And Advance Your Career With ACTE’s AWS Certification Training Today!

Introduction

Amazon Managed Streaming for Apache Kafka (MSK) is a fully managed service provided by AWS that simplifies the process of setting up, managing, and scaling Apache Kafka clusters. Kafka is a distributed streaming platform widely used for building real-time data pipelines and streaming applications. MSK allows developers to focus on building their applications without having to manage the underlying infrastructure for Kafka clusters, a concept that can be applied in Mastering Snowflake Architecture & Integration for seamless integration of data pipelines without infrastructure complexities. It offers a fully managed solution, ensuring that users can leverage Kafka’s powerful streaming capabilities with ease while benefiting from high availability, automatic scaling, and seamless integration with other AWS services. With AWS MSK, you get all the benefits of Kafka’s distributed event streaming capabilities along with the added convenience of a managed service, including automated patching, monitoring, and cluster scaling. It also integrates well with other AWS services, making it a robust solution for organizations building large-scale, data-driven applications.

Setting Up Apache Kafka on AWS MSK

Setting up Apache Kafka on AWS MSK is relatively straightforward due to the fully managed nature of the service. Here’s an outline of the process for setting up Kafka on MSK.

- Create an MSK Cluster: Start by creating an MSK cluster through the AWS Management Console, AWS CLI, or AWS SDKs. Specify the desired number of broker nodes, storage, and other configuration options. You can choose between different instance types depending on your workload requirements.

- Cluster Configuration: Choose a Kafka version and configure cluster settings such as encryption at rest, VPC settings, and public accessibility, which are crucial steps in Key Artifacts in DevOps for Efficient Delivery to ensure secure and optimized infrastructure management.

- Broker and Zookeeper Setup: MSK automatically sets up and manages Kafka brokers and Zookeeper nodes. Kafka brokers are responsible for storing and distributing the streams, while Zookeeper is used for managing metadata and coordinating the Kafka brokers.

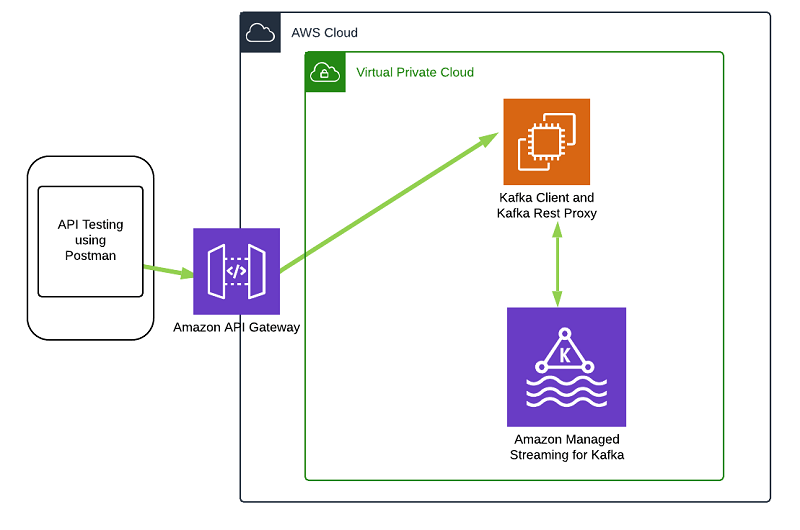

- Networking Configuration: Define the VPC, subnet, and security group configurations for the Kafka brokers. The brokers can either be private or publicly accessible, depending on the configuration.

- Monitoring and Metrics: Once the cluster is set up, you can monitor the health and performance of the cluster through AWS CloudWatch. MSK integrates with CloudWatch for monitoring key metrics such as topic and partition usage, disk space, and throughput.

- Encryption: MSK supports encryption at rest and in transit. By default, Kafka data is encrypted at rest using AWS Key Management Service (KMS). You can also enable encryption in transit, which uses TLS (Transport Layer Security) to encrypt data as it moves between clients and brokers, ensuring secure communication.

- Access Control Using IAM: MSK uses AWS Identity and Access Management (IAM) for access control. IAM policies can be used to restrict access to MSK clusters and resources. You can define roles and permissions to control who can perform specific actions, such as creating or deleting topics and managing consumer groups.

- VPC and Security Groups: MSK clusters can be deployed within a VPC, allowing you to control network traffic and access to the cluster. Security groups can be used to define inbound and outbound traffic rules, ensuring that only authorized traffic can reach your MSK cluster.

- Authentication: MSK integrates with AWS Secrets Manager to manage and retrieve credentials for Kafka clients securely. Additionally, Kafka clients can use SSL certificates for authentication and authorization, ensuring that only authorized users can interact with the Kafka brokers.

- Audit Logging: AWS CloudTrail can be used to log API calls made to MSK. This allows you to track access and changes to your Kafka clusters, helping to identify potential security risks or unauthorized actions.

- Data Access Policies: MSK integrates with AWS Lake Formation and other AWS data access management tools to help enforce data access policies, allowing you to manage who can access sensitive data streams.

- Optimize Broker Configuration: Proper configuration of the Kafka brokers is crucial for performance. Set the appropriate replication factor, partition size, and number of partitions based on the expected workload. Consider factors like network bandwidth, disk throughput, and memory capacity when selecting instance types for your brokers.

- Monitor Cluster Health: Regularly monitor key metrics such as broker CPU usage, disk space, and replication lag. Use AWS CloudWatch to set up alarms for important metrics, and ensure you receive notifications when performance drops below a certain threshold.

- Scale as Needed: MSK allows you to scale your cluster by adding or removing brokers. Scale your cluster based on the number of partitions, throughput requirements, and consumer load, a key concept in Cloud Technologies for optimizing performance and resource management in scalable cloud environments. Ensure that scaling is done incrementally to avoid potential disruptions.

- Implement Data Retention Policies: Configure appropriate data retention policies for your topics to ensure that old data is deleted in a timely manner. This helps optimize storage usage and ensures that your cluster remains performant.

- Use Compression: Enable message compression in Kafka to reduce the amount of data transferred across the network and to optimize storage usage. Kafka supports various compression algorithms such as GZIP, Snappy, and LZ4.

- Tune Kafka Consumer Lag: Ensure that Kafka consumers are consuming data at a rate that matches the production rate. Monitor consumer lag and optimize consumers to ensure that they keep up with the flow of data. Consider implementing auto-scaling for consumers if the load is high.

To Explore AWS in Depth, Check Out Our Comprehensive AWS Certification Training To Gain Insights From Our Experts!

MSK Cluster Configuration and Management

Once your MSK cluster is created, you will need to manage and configure it to ensure optimal performance and reliability. This includes configuring topics, partitions, replication, and managing consumer groups. Here are the key configuration and management tasks Creating and Configuring Topics in Kafka are logical channels that organize data streams. MSK allows you to easily create and manage topics for your application. You can specify the number of partitions and replication factors based on your requirements for throughput and fault tolerance, a concept that can also be applied when connecting a Raspberry Pi to the Internet for managing data and ensuring reliability in IoT projects. Replication and Partitioning Kafka provides data replication and partitioning, ensuring high availability and fault tolerance. MSK allows you to configure the replication factor for each topic. By default, Kafka replicates data across multiple brokers, and MSK ensures that this is handled automatically. Managing Consumer Groups Consumer groups are a mechanism for parallel processing of data streams. MSK allows you to manage consumer groups and assign consumers to specific topics. Properly managing consumer groups ensures that data processing is done in an efficient and scalable manner. Scaling the Cluster MSK allows you to scale your Kafka cluster by adding or removing broker nodes to meet the needs of your application. It automatically handles the scaling process, ensuring that partitions are redistributed and data replication is maintained. Monitoring and Performance Metrics MSK provides detailed performance metrics such as broker health, partition counts, and throughput. These metrics can be tracked in real-time using CloudWatch, which helps in managing and troubleshooting Kafka clusters.

Security and Access Control in MSK

Security is a critical aspect when working with AWS MSK, especially when handling sensitive data, and is an essential focus in AWS Training to ensure secure data management and compliance in cloud environments. AWS MSK integrates with various AWS security features to provide comprehensive security and access control.

Are You Considering Pursuing a Cloud Computing Master’s Degree? Enroll For Cloud Computing Masters Course Today!

Integrating MSK with AWS Lambda and Other Services

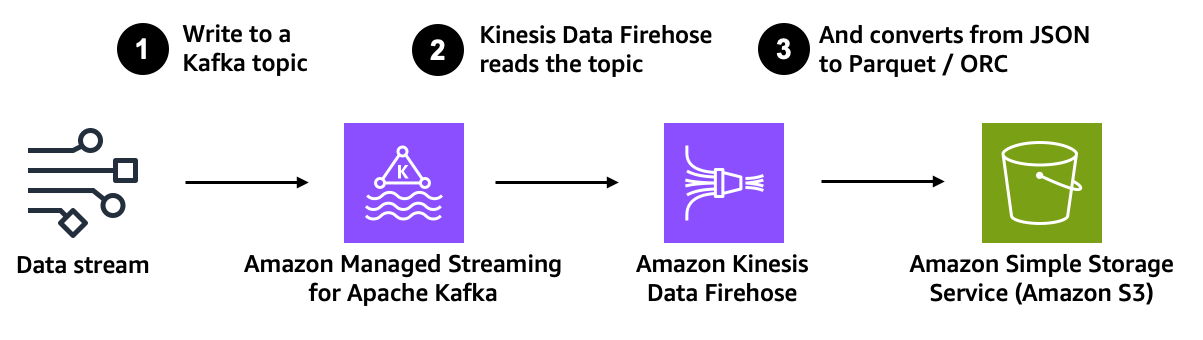

AWS MSK is highly integrated with various AWS services, making it easier to build data-driven, event-driven applications. One of the key integrations is with AWS Lambda. Here’s how you can integrate MSK with Lambda and other AWS services AWS Lambda Integration You can use AWS Lambda to process data from Kafka topics in real-time. By subscribing a Lambda function to an MSK topic, you can automatically trigger the Lambda function whenever a new message is published to the topic, a concept that aligns with Understanding Grid Computing for distributed processing and real-time event handling. This integration is ideal for processing real-time data streams and automating workflows based on Kafka events. Amazon S3 MSK can be integrated with Amazon S3 to persist Kafka streams. You can use AWS Lambda to consume Kafka records and then write the processed data to an S3 bucket. This allows you to create long-term storage for your streaming data. Amazon Redshift MSK can be used to stream data into Amazon Redshift for analytics and reporting. By consuming Kafka topics in real-time, you can push the data into Redshift and then use SQL queries for analytics purposes. AWS Glue AWS Glue can be used in conjunction with MSK to perform ETL (extract, transform, load) operations on data streams. You can use Glue jobs to process data from Kafka topics and load it into other data stores like Amazon S3, Redshift, or RDS. Amazon Elasticsearch Service You can stream data from Kafka topics into Amazon Elasticsearch for real-time log analytics and search functionality. This is useful for monitoring and analyzing data in real-time, such as logs, metrics, or social media feeds. AWS Kinesis MSK can also integrate with Amazon Kinesis, enabling you to use MSK for streaming data into Kinesis Data Streams or Kinesis Firehose for further processing, transformation, or delivery to downstream systems.

Performance Tuning and Best Practices

To ensure that your MSK cluster operates at optimal performance, it’s important to follow best practices for configuration, scaling, and monitoring. Here are some key performance tuning tips and best practices

Want to Learn About AWS? Explore Our AWS Interview Questions and Answers Featuring the Most Frequently Asked Questions in Job Interviews.

Conclusion

AWS Managed Streaming for Apache Kafka (MSK) is a powerful, fully managed service designed to eliminate the complexities of deploying, maintaining, and scaling Apache Kafka clusters. It provides enterprises with a reliable, scalable, and cost-efficient streaming solution, allowing them to build and manage real-time data pipelines effortlessly. MSK seamlessly integrates with AWS services like Lambda for event-driven computing, S3 for long-term storage, Redshift for data warehousing, and Elasticsearch for log analytics and full-text search, concepts that are extensively covered in AWS Training to build scalable and efficient cloud architectures. Security is a top priority, with built-in encryption at rest and in transit, IAM-based fine-grained access control, VPC isolation for secure networking, and AWS CloudTrail for audit logging and compliance tracking. Businesses can optimize performance by configuring brokers effectively, fine-tuning partitioning and replication settings, monitoring key performance indicators through CloudWatch, and setting appropriate data retention policies to manage storage efficiently. MSK automates essential maintenance tasks such as patching, scaling, and monitoring, reducing operational overhead and enabling teams to focus on application development rather than infrastructure management.