- Introduction to Snowflake Development

- Snowflake Architecture Overview

- Key Features of Snowflake for Developers

- Working with Snowflake Databases

- Writing SQL Queries in Snowflake

- Data Loading and Unloading in Snowflake

- Snowflake Security and Access Control

- Performance Optimization in Snowflake

- Integrating Snowflake with Other Tools

- Conclusion

Introduction to Snowflake Development

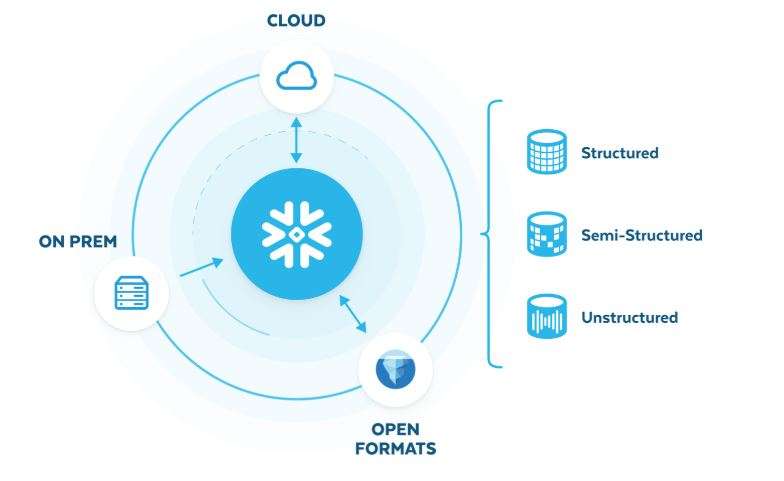

Snowflake is a cloud-based data warehousing platform that provides an innovative approach to data storage and analysis. Its architecture is designed to support data integration, sharing, and analytics at scale. Unlike traditional data warehouses, Snowflake is built on cloud-native infrastructure, which enables it to provide seamless scalability, real-time data sharing, and the ability to handle diverse workloads. Snowflake’s multi-cloud support allows developers to run on AWS, Azure, or Google Cloud, offering flexibility across platforms. Developers can run complex SQL queries, process structured and semi-structured data, and integrate Snowflake with a wide variety of other tools. Snowflake is designed for ease of use, with a simple interface for both developers and data analysts. Its auto-scaling capabilities allow compute resources to be scaled up or down based on demand, which keeps resource usage efficient. Snowflake also provides a secure environment for data storage and processing, supporting encryption, access controls, and data masking.

Snowflake Architecture Overview

Snowflake’s architecture is composed of three main layers: Storage, Compute, and Cloud Services.

- Storage Layer: In Snowflake, all data is stored in a columnar format in the storage layer. This layer is completely decoupled from the compute layer, allowing for efficient scaling of both storage and compute independently. Snowflake uses a cloud-optimized format to store data, providing efficient data retrieval.

- Compute Layer: The compute layer is where data processing happens. This layer consists of virtual warehouses, which are scalable clusters of compute resources that run queries and perform other data processing tasks. Virtual warehouses in Snowflake can be scaled up or down depending on the workload, providing flexibility and cost efficiency. Multiple virtual warehouses can operate on the same dataset simultaneously without competing for compute resources.

- Cloud Services Layer: This layer handles management, security, metadata, query optimization, and access control. It provides a centralized platform for handling user requests, session management, and transaction management. Snowflake’s cloud services layer is responsible for managing caching, query optimization, and coordination of operations across compute and storage layers.
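Because compute is decoupled from storage, a virtual warehouse can be created and resized with plain SQL. The following is a minimal sketch, not a definitive setup: the helper names, the warehouse name `reporting_wh`, and the reduced list of sizes are assumptions made for illustration, and the functions only build statements rather than run them.

```python
import re

# Assumed subset of warehouse sizes, kept short for illustration.
WAREHOUSE_SIZES = ("XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE")
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def _check(name: str, size: str) -> str:
    """Validate the identifier and return the normalized size."""
    if not _IDENTIFIER.match(name):
        raise ValueError(f"unsafe warehouse name: {name!r}")
    size = size.upper()
    if size not in WAREHOUSE_SIZES:
        raise ValueError(f"unknown warehouse size: {size!r}")
    return size


def create_warehouse_sql(name: str, size: str = "XSMALL",
                         auto_suspend_seconds: int = 60) -> str:
    """Build a CREATE WAREHOUSE statement that suspends when idle."""
    size = _check(name, size)
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {name} "
        f"WAREHOUSE_SIZE = '{size}' "
        f"AUTO_SUSPEND = {auto_suspend_seconds} "
        "AUTO_RESUME = TRUE"
    )


def resize_warehouse_sql(name: str, size: str) -> str:
    """Build an ALTER WAREHOUSE statement; stored data is not touched."""
    size = _check(name, size)
    return f"ALTER WAREHOUSE {name} SET WAREHOUSE_SIZE = '{size}'"


print(create_warehouse_sql("reporting_wh", "small"))
print(resize_warehouse_sql("reporting_wh", "large"))
```

Resizing changes only the compute cluster, which is the practical consequence of the storage and compute layers being independent.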

Key Features of Snowflake for Developers

Snowflake offers several features that make it an appealing choice for developers working with large datasets:

- Independent Scaling of Compute and Storage: Scaling compute and storage independently allows for efficient resource management. Storage can be expanded without impacting compute performance, and compute power can be increased as needed to handle high query volumes.

- Structured and Semi-Structured Data: Snowflake can store both structured (relational) data and semi-structured data (JSON, XML, Parquet, etc.) without the need for special configurations. This allows developers to work with a wide variety of data types.

- Auto-Scaling and Concurrency: Snowflake’s virtual warehouses can be automatically scaled up or down depending on demand, so that queries keep running efficiently. The platform allows for high concurrency, meaning multiple users or processes can query the same data without impacting each other’s performance.

- Time Travel and Fail-safe: Time Travel allows developers to query historical versions of data, which can be helpful for auditing or recovering deleted data. Fail-safe provides data recovery in the event of a failure, giving developers peace of mind when working with critical data.

- Managed Maintenance: Snowflake manages all maintenance tasks, including software updates, patches, and hardware management. This eliminates the need for developers to worry about the infrastructure, allowing them to focus on building applications and analytics solutions.

- Integrations: Snowflake integrates with a variety of third-party tools, such as ETL platforms, BI tools, and machine learning frameworks. It also supports a rich set of connectors and APIs for easy integration with other data platforms.
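Two of these features are easiest to understand from the SQL they expose. The sketch below builds a Time Travel query using the `AT(OFFSET => ...)` clause and shows a path expression over a semi-structured column. The table names `orders` and `events`, the `payload` column, and the helper names are hypothetical, and how far back Time Travel can reach depends on the retention period configured for the table.

```python
def time_travel_sql(table: str, minutes_ago: int) -> str:
    """Query a table as it looked `minutes_ago` minutes in the past."""
    if minutes_ago <= 0:
        raise ValueError("minutes_ago must be positive")
    offset_seconds = -60 * minutes_ago  # OFFSET is expressed in seconds
    return f"SELECT * FROM {table} AT(OFFSET => {offset_seconds})"


def undrop_table_sql(table: str) -> str:
    """Restore a dropped table while it is still within Time Travel retention."""
    return f"UNDROP TABLE {table}"


# Path syntax for a VARIANT column holding JSON such as
# {"customer": {"name": "..."}}; the column and keys are assumptions.
SEMI_STRUCTURED_SQL = (
    "SELECT payload:customer.name::STRING AS customer_name FROM events"
)

print(time_travel_sql("orders", 5))
print(undrop_table_sql("orders"))
print(SEMI_STRUCTURED_SQL)
```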

Working with Snowflake Databases

To start working with Snowflake, developers must first create a database and load data into it. Snowflake databases can contain multiple schemas, which group related tables and views. Each schema can include a range of objects, including tables, views, file formats, and stored procedures.

- Creating a Database: The CREATE DATABASE SQL command creates a database in Snowflake. After creating the database, developers can create schemas within it to organize data.

- Schemas and Tables: Snowflake databases are divided into schemas. Each schema contains tables, views, and other database objects. Tables can be defined using the CREATE TABLE command, and Snowflake supports both structured (SQL) and semi-structured (JSON, Avro, Parquet) data types.

- Data Sharing: One of Snowflake’s key features is data sharing. Developers can share data between Snowflake accounts without moving or copying the data, allowing for real-time collaboration with partners or departments within the organization.

- Database Management: Snowflake allows for easy database management using the web interface or SQL commands. Developers can perform operations such as renaming tables, dropping columns, or cloning databases for backup and recovery.

- Loading Data: Developers can load data into Snowflake using the COPY INTO command. Data can be loaded from various sources such as local files, cloud storage (Amazon S3, Azure Blob Storage, or Google Cloud Storage), and other external stages. Snowflake supports loading structured and semi-structured data formats, such as CSV, JSON, and Parquet.

- Unloading Data: Developers can unload data from Snowflake to external cloud storage using the COPY INTO command with a stage location as the target and the source table or query in the FROM clause. This is useful for creating backups or sharing data with other systems. Snowflake supports unloading data into multiple formats, such as CSV, JSON, or Parquet.

- Snowpipe for Continuous Data Loading: Snowpipe is an automated data loading feature that continuously loads data into Snowflake as files arrive in an external stage. This is especially useful for near-real-time analytics applications that need timely data ingestion.

- File Formats: Snowflake uses file formats (CSV, JSON, Avro, etc.) to define how data should be read when loading and unloading. Developers can create and manage file formats to specify options like delimiter, encoding, and compression type.

- Role-Based Access Control (RBAC): Snowflake uses a role-based access control system to define who can access data and what actions they can perform. Developers can create roles, assign them to users, and control permissions for various database objects.

- Encryption: Snowflake automatically encrypts all data at rest and in transit. The platform uses strong encryption algorithms (AES-256) to protect sensitive data. Developers can also apply custom encryption keys for additional security.

- Data Masking: Snowflake supports dynamic data masking, allowing developers to apply masking policies to sensitive data (e.g., credit card numbers or personal information). Masking policies can be defined at the column level.

- Multi-Factor Authentication (MFA): Snowflake supports multi-factor authentication, adding an extra layer of security when users log in to the platform. This helps prevent unauthorized access to sensitive data.

- Audit Logging: Snowflake provides detailed audit logs that track all user activity. Developers can review logs for compliance purposes and monitor access to sensitive data.
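The database, schema, table, and cloning steps from the list above can be sketched as a short script. This is an illustration under assumptions: the object names (`sales_db`, `raw`, `events`) are made up, and `RecordingCursor` is a stand-in that only records statements so the sketch runs without a Snowflake account. Any DB-API style cursor with an `execute` method could be passed instead.

```python
# Statements covering database creation, a schema, a table with a
# semi-structured column, a rename, and a clone used as a backup.
SETUP_STATEMENTS = [
    "CREATE DATABASE IF NOT EXISTS sales_db",
    "CREATE SCHEMA IF NOT EXISTS sales_db.raw",
    "CREATE TABLE IF NOT EXISTS sales_db.raw.events (id NUMBER, payload VARIANT)",
    "ALTER TABLE sales_db.raw.events RENAME TO sales_db.raw.app_events",
    "CREATE DATABASE sales_db_backup CLONE sales_db",
]


class RecordingCursor:
    """Dry-run cursor: stores statements instead of sending them anywhere."""

    def __init__(self):
        self.statements = []

    def execute(self, sql):
        self.statements.append(sql)


def run_statements(cursor, statements):
    """Execute each statement in order and return how many were run."""
    executed = 0
    for sql in statements:
        cursor.execute(sql)
        executed += 1
    return executed


cursor = RecordingCursor()
count = run_statements(cursor, SETUP_STATEMENTS)
print(f"{count} statements prepared")
for sql in cursor.statements:
    print(sql + ";")
```

The clone in the last statement shares the underlying storage with the source at creation time, which is why it is a cheap way to take a backup before a risky change.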

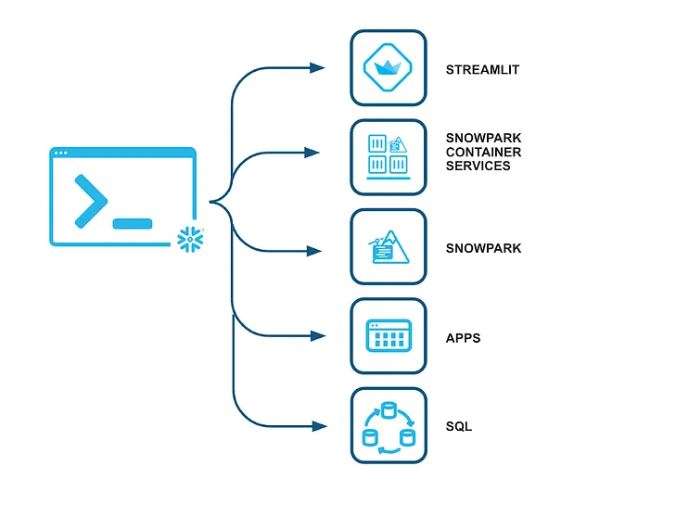

- ETL Tools: Snowflake can be integrated with ETL tools like Talend, Informatica, Apache NiFi, and Fivetran for automated data extraction, transformation, and loading.

- Business Intelligence (BI) Tools: Snowflake integrates with popular BI tools like Tableau, Power BI, Looker, and Qlik for creating interactive data visualizations and reports.

- Machine Learning Tools: Snowflake supports integration with machine learning tooling such as Python (via Snowflake’s Python connector) and DataRobot. This enables developers to perform data science tasks such as predictive modeling on data held in Snowflake.

- Data Integration with Other Databases: Snowflake allows seamless integration with other databases, such as PostgreSQL, MySQL, and SQL Server, allowing data to be shared and synchronized across platforms.

- Cloud Services: Snowflake’s native integration with AWS, Azure, and GCP provides developers with easy access to cloud storage, data lakes, and other cloud-based services.
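As an example of the Python integration mentioned in the list above, here is a minimal sketch that uses the `snowflake-connector-python` package. The `SNOWFLAKE_*` environment variable names and both helper functions are assumptions made for this example, not a convention of the connector. The third-party import happens inside `fetch_rows`, so the parameter handling can be exercised without the package installed.

```python
import os

REQUIRED = ("account", "user", "password")
OPTIONAL = ("warehouse", "database", "schema")


def connection_params(env):
    """Map hypothetical SNOWFLAKE_* variables to connect() keyword arguments."""
    params = {}
    for key in REQUIRED:
        value = env.get(f"SNOWFLAKE_{key.upper()}")
        if not value:
            raise KeyError(f"missing SNOWFLAKE_{key.upper()}")
        params[key] = value
    for key in OPTIONAL:
        value = env.get(f"SNOWFLAKE_{key.upper()}")
        if value:
            params[key] = value
    return params


def fetch_rows(sql, env=None):
    """Run one query and return all rows; needs the connector installed."""
    import snowflake.connector  # pip install snowflake-connector-python

    params = connection_params(os.environ if env is None else env)
    conn = snowflake.connector.connect(**params)
    try:
        cur = conn.cursor()
        try:
            cur.execute(sql)
            return cur.fetchall()
        finally:
            cur.close()
    finally:
        conn.close()


example_env = {
    "SNOWFLAKE_ACCOUNT": "my_account",
    "SNOWFLAKE_USER": "dev_user",
    "SNOWFLAKE_PASSWORD": "not-a-real-secret",
    "SNOWFLAKE_WAREHOUSE": "reporting_wh",
}
print(sorted(connection_params(example_env)))
```

With real credentials in the environment, a call such as `fetch_rows("SELECT CURRENT_VERSION()")` would return the rows as a list of tuples.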

Writing SQL Queries in Snowflake

SQL is the primary language used to interact with Snowflake, and developers can write SQL queries to perform operations like selecting, filtering, and aggregating data. Developers can perform basic SQL operations such as SELECT, INSERT, UPDATE, and DELETE in Snowflake. Snowflake supports standard SQL operations as well as advanced features such as window functions, common table expressions (CTEs), and subqueries. It also supports a wide range of SQL join operations, including inner joins, outer joins, and cross joins, which developers can use to join large tables efficiently.

Snowflake offers a rich set of built-in SQL functions for data manipulation, such as TO_DATE(), TO_TIMESTAMP(), SUM(), and COUNT(), which are commonly combined with clauses like GROUP BY. Snowflake also supports user-defined functions and stored procedures. Snowflake’s query optimizer plans queries to run as efficiently as possible, and developers can rely on techniques like partition pruning and clustering to improve query performance on large datasets.
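To make the CTE and window function features concrete, the sketch below ranks customers by total spend within each region. It runs against Python's built-in `sqlite3` module purely so that it is executable without a Snowflake account (SQLite 3.25 or newer is needed for window functions). The query uses only standard SQL, so it is expected to work unchanged in Snowflake, but that is an assumption rather than something tested here. The `orders` table and its columns are hypothetical.

```python
import sqlite3

# A CTE aggregates per customer, then a window function ranks
# customers inside each region by their total.
QUERY = """
WITH customer_totals AS (
    SELECT region, customer, SUM(amount) AS total
    FROM orders
    GROUP BY region, customer
)
SELECT region,
       customer,
       total,
       RANK() OVER (PARTITION BY region ORDER BY total DESC) AS region_rank
FROM customer_totals
ORDER BY region, region_rank
"""


def rank_customers(rows):
    """Load (region, customer, amount) rows and return the ranked totals."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE orders (region TEXT, customer TEXT, amount INTEGER)"
        )
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
        return conn.execute(QUERY).fetchall()
    finally:
        conn.close()


sample = [
    ("east", "ann", 100),
    ("east", "ann", 50),
    ("east", "bob", 120),
    ("west", "cy", 70),
]
for row in rank_customers(sample):
    print(row)
```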

Data Loading and Unloading in Snowflake

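Snowflake provides several methods for loading and unloading data efficiently, including bulk loading and unloading with COPY INTO, continuous loading with Snowpipe, and reusable file formats.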
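A minimal sketch of the corresponding statements follows. The helper names, the stage path `my_stage/orders/`, and the object names are hypothetical, and the functions only assemble SQL text. The stage itself is assumed to exist already.

```python
def create_csv_format_sql(name, delimiter=",", skip_header=1):
    """Named file format describing how CSV files should be parsed."""
    return (
        f"CREATE FILE FORMAT IF NOT EXISTS {name} "
        f"TYPE = 'CSV' FIELD_DELIMITER = '{delimiter}' SKIP_HEADER = {skip_header}"
    )


def load_sql(table, stage_path, file_format):
    """Bulk load: COPY INTO a table FROM a stage location."""
    return (
        f"COPY INTO {table} FROM @{stage_path} "
        f"FILE_FORMAT = (FORMAT_NAME = '{file_format}')"
    )


def unload_sql(stage_path, table, file_type="PARQUET"):
    """Unload: COPY INTO a stage location FROM a table."""
    return (
        f"COPY INTO @{stage_path} FROM {table} "
        f"FILE_FORMAT = (TYPE = '{file_type}')"
    )


def snowpipe_sql(pipe, table, stage_path, file_format):
    """Snowpipe wraps a COPY INTO statement that runs as new files arrive."""
    return (
        f"CREATE PIPE IF NOT EXISTS {pipe} AUTO_INGEST = TRUE AS "
        + load_sql(table, stage_path, file_format)
    )


print(create_csv_format_sql("orders_csv"))
print(load_sql("orders", "my_stage/orders/", "orders_csv"))
print(unload_sql("my_stage/export/", "orders"))
print(snowpipe_sql("orders_pipe", "orders", "my_stage/orders/", "orders_csv"))
```

Note that the direction of a COPY INTO statement is determined by its target: a table name loads data, while a stage location unloads it.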

Snowflake Security and Access Control

Snowflake offers robust security features that help developers protect data and manage user access.
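Role-based access control and column-level masking are both configured through SQL. The sketch below assembles the statements for a read-only role and a simple masking policy. All role, user, table, and policy names are hypothetical, the helper functions are illustrative, and the availability of masking policies may depend on the Snowflake edition in use.

```python
def read_only_role_sql(role, database, schema, user):
    """Grants that let `user` read every existing table in one schema."""
    target = f"{database}.{schema}"
    return [
        f"CREATE ROLE IF NOT EXISTS {role}",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role}",
        f"GRANT USAGE ON SCHEMA {target} TO ROLE {role}",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {target} TO ROLE {role}",
        f"GRANT ROLE {role} TO USER {user}",
    ]


def masking_policy_sql(policy, table, column, unmasked_role):
    """Create a policy that hides a string column from all but one role."""
    create = (
        f"CREATE MASKING POLICY IF NOT EXISTS {policy} "
        "AS (val STRING) RETURNS STRING -> "
        f"CASE WHEN CURRENT_ROLE() = '{unmasked_role.upper()}' "
        "THEN val ELSE '****' END"
    )
    attach = (
        f"ALTER TABLE {table} MODIFY COLUMN {column} "
        f"SET MASKING POLICY {policy}"
    )
    return [create, attach]


statements = read_only_role_sql("analyst", "sales_db", "public", "jane")
statements += masking_policy_sql("mask_card", "customers", "card_number", "pii_admin")
for sql in statements:
    print(sql + ";")
```

In practice the role would also need USAGE on a virtual warehouse before its users can run queries; that grant is left out to keep the sketch short.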

Performance Optimization in Snowflake

To ensure high performance in Snowflake, developers can employ several optimization techniques:

- Result Caching: Snowflake caches query results. If the same query is executed again and the underlying data has not changed, Snowflake can return the cached result, reducing processing time.

- Clustering Keys: Developers can create clustering keys on tables to improve query performance for large datasets. Clustering organizes how data is stored and speeds up queries by reducing the amount of data scanned.

- Materialized Views: Materialized views store precomputed results of queries, allowing for faster retrieval of complex data. They can be used to optimize frequently accessed data.

- Auto-Scaling: Snowflake’s auto-scaling feature adjusts the compute resources used for processing queries based on demand, so that queries are processed quickly even during peak usage.

- Query Profiling: Developers can use query profiling tools to analyze and optimize slow-running queries, complementing the work already done by Snowflake’s query optimizer.
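Several of these techniques map directly to SQL statements. The sketch below gathers them into one tuning plan. The function name and the `orders`, `order_date`, `amount`, and `reporting_wh` identifiers are hypothetical, and features such as materialized views and multi-cluster warehouses may depend on the Snowflake edition, so treat this as an outline rather than a recipe.

```python
def tuning_plan(table, cluster_column, measure_column, warehouse, max_clusters=3):
    """Return illustrative statements for clustering, a materialized view,
    and multi-cluster auto-scaling, keyed by technique."""
    if max_clusters < 1:
        raise ValueError("max_clusters must be at least 1")
    return {
        # Co-locate rows with similar values so scans can skip data.
        "clustering_key": f"ALTER TABLE {table} CLUSTER BY ({cluster_column})",
        # Inspect how well the table is clustered on that column.
        "clustering_info": (
            f"SELECT SYSTEM$CLUSTERING_INFORMATION('{table}', '({cluster_column})')"
        ),
        # Precompute an aggregate that dashboards query repeatedly.
        "materialized_view": (
            f"CREATE MATERIALIZED VIEW {table}_by_{cluster_column} AS "
            f"SELECT {cluster_column}, SUM({measure_column}) AS total "
            f"FROM {table} GROUP BY {cluster_column}"
        ),
        # Let the warehouse add clusters under concurrent load.
        "auto_scaling": (
            f"ALTER WAREHOUSE {warehouse} SET "
            f"MIN_CLUSTER_COUNT = 1 MAX_CLUSTER_COUNT = {max_clusters}"
        ),
    }


plan = tuning_plan("orders", "order_date", "amount", "reporting_wh")
for technique, sql in plan.items():
    print(f"-- {technique}")
    print(sql + ";")
```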

Integrating Snowflake with Other Tools

Snowflake integrates with a wide range of third-party tools and services, enabling developers to build comprehensive data processing and analytics workflows.

Conclusion

Mastering Snowflake development equips professionals with a powerful skillset for building and managing data-intensive applications in the modern cloud ecosystem. Snowflake’s architecture, with its separation of compute and storage, offers scalability and flexibility that allow organizations to process large amounts of data efficiently. Its features, such as zero-copy cloning, automatic scaling, and integration with a wide range of tools and platforms, make it an attractive choice for developers and businesses alike. As organizations continue to prioritize data-driven decision-making, mastering Snowflake will provide a competitive edge in leveraging the full potential of their data. By understanding the core concepts of Snowflake architecture, its key features, and how to integrate it with other technologies, developers can build solutions that streamline data workflows and enhance business insights while maintaining high performance and cost efficiency in the cloud.

In summary, Snowflake development expertise is invaluable in today’s data-centric landscape, and by mastering its features and integration strategies, developers can drive innovation and help businesses succeed in their digital transformation journey.