- Overview

- In Linux, What is Docker?

- Why Make Use of Docker?

- System Prerequisites for Linux Docker Installation

- How is Docker installed on Linux?

- Building a Container in Docker for Linux

- Conclusion

Overview

Managing dependencies, keeping environments consistent, and deploying applications can be difficult in today’s fast-paced software development. Docker has become a widely used tool for building and running software on Linux. It streamlines these tasks so that developers can spend less time on configuration and more on code. In this blog, we’ll go over what Docker is, why you should use it, how to install it, and how to create a container on Linux. With Docker, you can isolate applications, manage multiple versions side by side, and avoid the “it works on my machine” problem. It enables faster development cycles and better collaboration across teams. Whether you’re building microservices or managing legacy applications, Docker provides the flexibility needed to scale your projects efficiently. Let’s explore how Docker simplifies software development and deployment.


In Linux, What is Docker?

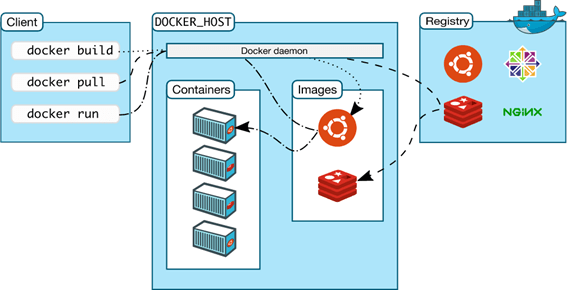

Docker is an open-source platform that packages an application together with its dependencies into a container, an isolated process that shares the host’s Linux kernel. Docker is also well suited to continuous integration and continuous delivery (CI/CD) pipelines, where developers can quickly build, test, and deploy applications across various environments. Because every stage runs the same image, Docker helps avoid the “it works on my machine” issue. Docker images, which are templates for creating containers, can be stored and shared through Docker Hub or private registries, making it easy to reuse and distribute application environments. Docker Compose, a tool that works alongside Docker, lets users define and run multi-container applications, simplifying complex deployments. Containers are lightweight: unlike traditional virtual machines, they do not each need their own operating system, which means less overhead, faster scaling, and better resource utilization. In terms of security, Docker isolates containers from one another and from the host, which helps prevent one container from affecting others. However, users must still follow sound configuration and security practices, as misconfigurations can lead to vulnerabilities.
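The isolation between containers described above is built on Linux kernel namespaces. On a Linux host, the namespaces a process belongs to are visible under /proc/PID/ns. The following is a minimal sketch for listing them; the function name `list_namespaces` and its optional directory argument are illustrative and not part of Docker.

```shell
#!/usr/bin/env bash
# Sketch: list the namespace types a process belongs to.
# Each entry under /proc/<pid>/ns is one namespace (for example pid, net, mnt).
# The function name and the optional directory argument are illustrative.
list_namespaces() {
    local ns_dir="${1:-/proc/self/ns}"
    if [ ! -d "$ns_dir" ]; then
        echo "no namespace directory at $ns_dir" >&2
        return 1
    fi
    ls "$ns_dir"
}
```

Calling `list_namespaces` on the host and again inside a container should list the same namespace types. Comparing the output of `readlink /proc/self/ns/pid` in both places will typically show different identifiers, because a container normally gets its own PID namespace.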

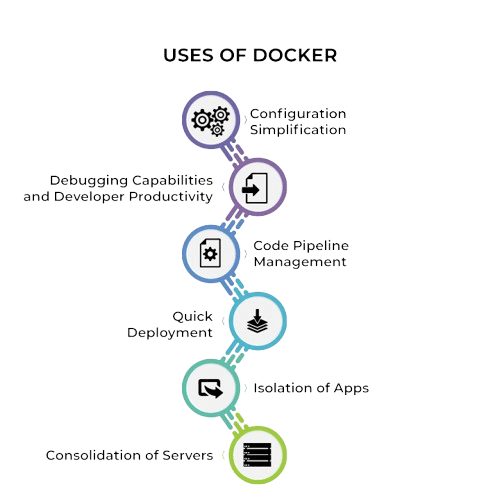

Why Make Use of Docker?

Developers favor Docker for Linux-based software development for a variety of reasons. Among the main advantages are:

- Consistency Across Environments: Docker containers help ensure that your program behaves on your development computer just as it does in production. This addresses the “it works on my machine” issue that frequently occurs when moving software between environments.

- Simplified Dependency Management: Docker allows you to bundle all of your software dependencies into a single container, including configuration files and libraries. This makes management easier and guarantees that your program is portable and self-contained.

- Improved Efficiency and Performance: Compared to virtual machines (VMs), Docker containers are smaller and use fewer system resources. This makes them a good fit for lightweight deployments on low-power devices such as the Raspberry Pi. Thanks to quicker startup times and more efficient use of system resources, you can run several containers on a single host without sacrificing speed.

- Scalability: Docker makes it simple and quick to scale apps. With minimal overhead, you can spin up more containers or modify existing ones as your application grows. To further improve scalability, Docker also integrates with orchestration technologies like Kubernetes.

- Simplified Continuous Integration and Continuous Delivery (CI/CD): Docker streamlines the CI/CD process by ensuring that the same containerized application can be built, tested, and deployed across the stages of the development pipeline. This reduces the chances of errors and speeds up the release cycle.

- Isolation and Security: Docker provides isolated environments for each application, which helps prevent conflicts between different services or dependencies. This isolation also enhances security by helping to contain a potential breach within the container rather than exposing the entire host system.

- Version Control and Rollbacks: With Docker, you can version your container images, which means you can easily roll back to previous versions of your application if something goes wrong. This feature makes managing updates or bug fixes more predictable and safe.

- Simplified Collaboration: Docker simplifies collaboration among teams by providing a consistent environment. Developers can share container images that can be run on any machine, ensuring that everyone is working in the same environment, regardless of operating systems or machine configurations.

- Cross-Platform Compatibility: Docker containers can run on any platform that supports Docker, including Linux, Windows, and macOS. This cross-platform compatibility means developers can build and test their applications on different platforms without having to worry about discrepancies in behavior.

- Large Ecosystem and Community: Docker has a robust ecosystem, with a wide range of tools, pre-built images, and support for various programming languages. The large Docker community also provides a wealth of knowledge, best practices, and resources, making it easier for developers to troubleshoot issues and improve their workflows.

Together, these points highlight why Docker has become a favored tool for Linux-based software development, offering benefits ranging from portability and scalability to enhanced security and collaboration.

System Prerequisites for Linux Docker Installation

Make sure your machine satisfies the following requirements before installing Docker:

- A 64-bit version of Linux (Debian, Fedora, CentOS, or Ubuntu)

- At least 1GB of RAM

- A supported Linux kernel version (3.10 or later)

- Access to a terminal with root privileges

- Swap memory: Docker does not require a particular swap configuration. Disabling swap is a common requirement for Kubernetes nodes rather than for Docker itself, so it matters mainly if the host will later join a cluster.

- A compatible CPU: Docker requires a 64-bit processor. Docker Engine on Linux shares the host kernel and does not need hardware virtualization support; that is needed only for Docker Desktop, which runs containers inside a virtual machine.

- Internet connection: Docker requires an internet connection to pull images from Docker Hub and download updates.

- Up-to-date software dependencies: Make sure your system packages are up to date to avoid compatibility issues during installation.
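Some of these requirements (a 64-bit system, kernel 3.10 or later, at least 1GB of RAM) can be checked from the terminal before installing. The following is a rough sketch, not an official Docker check. The function names are illustrative, and the 900 MB memory threshold is an assumption that allows for `MemTotal` being slightly below the installed RAM.

```shell
#!/usr/bin/env bash
# Sketch: check a few Docker prerequisites from the terminal.
# Kernel 3.10 and ~1 GB RAM follow the article; function names are illustrative.

# Return 0 if version $1 is at least $2, comparing major.minor only.
# Expects both arguments to contain at least "major.minor".
version_ge() {
    local have_major have_minor want_major want_minor
    have_major=${1%%.*}
    have_minor=${1#*.}                  # drop "major."
    have_minor=${have_minor%%[!0-9]*}   # keep leading digits only
    want_major=${2%%.*}
    want_minor=${2#*.}
    want_minor=${want_minor%%[!0-9]*}
    if [ "$have_major" -ne "$want_major" ]; then
        [ "$have_major" -gt "$want_major" ]
    else
        [ "$have_minor" -ge "$want_minor" ]
    fi
}

# Return 0 if the machine type (default: uname -m) is a common 64-bit one.
is_64bit_arch() {
    case "${1:-$(uname -m)}" in
        x86_64|aarch64|arm64|ppc64le|s390x|riscv64) return 0 ;;
        *) return 1 ;;
    esac
}

# Print total memory in MB, read from a meminfo-style file.
mem_total_mb() {
    awk '/^MemTotal:/ { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}

# Run all checks against the current machine and report the result.
check_prerequisites() {
    local status=0
    is_64bit_arch || { echo "FAIL: $(uname -m) is not a known 64-bit architecture"; status=1; }
    version_ge "$(uname -r)" 3.10 || { echo "FAIL: kernel $(uname -r) is older than 3.10"; status=1; }
    # 900 MB threshold is an assumption: MemTotal is usually a little below installed RAM.
    [ "$(mem_total_mb)" -ge 900 ] || { echo "FAIL: less than about 1 GB of RAM"; status=1; }
    [ "$status" -eq 0 ] && echo "OK: basic prerequisites met"
    return "$status"
}
```

After loading the functions into a shell, run `check_prerequisites` to get a report for the current machine.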

How is Docker installed on Linux?

Step 1: Update System Packages

The commands below apply to Ubuntu and other apt-based distributions. To make sure you’re using the most recent package versions, start by updating the system packages. Run the following command in your terminal:

- sudo apt-get update

Step 2: Install Required Dependencies

Next, install the packages needed to fetch Docker’s key and add its repository over HTTPS:

- sudo apt-get install apt-transport-https ca-certificates curl software-properties-common

Step 3: Add Docker’s GPG Key

You must add Docker’s GPG key in order to confirm the legitimacy of Docker packages. Note that apt-key is deprecated on recent Debian and Ubuntu releases, so check Docker’s current installation guide if the command is unavailable:

- curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

Step 4: Add Docker’s Repository

The next step is to add Docker’s repository to your system:

- sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

Step 5: Install Docker

Update your package list once more after adding the repository, and then install Docker:

- sudo apt-get update

- sudo apt-get install docker-ce

Step 6: Verify the Installation

To verify that Docker has been successfully installed, run:

- sudo docker --version

This shows the version of Docker installed on your computer. In short, installing Docker on Linux means updating the package list, installing the required dependencies, adding Docker’s GPG key and repository, installing docker-ce, and confirming the installed version.
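Checking the version only confirms that the client binary is present. The following sketch also checks whether the Docker daemon responds, using `docker info`, which contacts the daemon. The function names `have_command` and `verify_docker` are illustrative, and the optional argument for the binary name exists only so the function can be exercised without Docker installed.

```shell
#!/usr/bin/env bash
# Sketch: confirm that the docker CLI is installed and the daemon answers.
# Function names are illustrative, not part of Docker.

# Return 0 if the named command is on PATH.
have_command() {
    command -v "$1" >/dev/null 2>&1
}

# Report the installation state; the binary name can be overridden for testing.
verify_docker() {
    local docker_bin="${1:-docker}"
    if ! have_command "$docker_bin"; then
        echo "docker CLI not found on PATH"
        return 1
    fi
    # 'docker --version' only needs the client binary.
    "$docker_bin" --version
    # 'docker info' contacts the daemon, so it fails if the service is not
    # running or the caller lacks permission (hence sudo, as in the article).
    if sudo "$docker_bin" info >/dev/null 2>&1; then
        echo "docker daemon is reachable"
    else
        echo "docker CLI found, but the daemon did not respond"
        return 2
    fi
}
```

For an end-to-end check, `sudo docker run --rm hello-world` pulls Docker’s small official test image, runs it once, and removes the container afterwards.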

Building a Container in Docker for Linux

After installing Docker successfully, it’s time to build your first container!

Step 1: Pull an Image

Docker creates containers from images. Docker Hub is a registry of pre-built Docker images from which you can pull an image. Let’s take the official Ubuntu image, for instance:

- sudo docker pull ubuntu

Step 2: Create and Run a Container

Once the image has been pulled, you can create and launch a container from it:

- sudo docker run -it ubuntu

This launches a new container and opens a terminal session inside it. You can now execute commands inside the container just as you would on a standard Linux computer.

Step 3: List Running Containers

Use the following command to view every container currently running on your system:

- sudo docker ps

Step 4: Stop and Remove a Container

When you are finished with a container, stop it and then remove it, replacing CONTAINER_ID with the ID or name shown by sudo docker ps:

- sudo docker stop CONTAINER_ID

- sudo docker rm CONTAINER_ID

In short, to create and manage a Docker container, you first pull an image from Docker Hub, such as the official Ubuntu image, using sudo docker pull ubuntu. Once the image is downloaded, you can create and run a container interactively with sudo docker run -it ubuntu, which opens a terminal session inside the container for executing commands. To see all active containers on your system, use sudo docker ps. When you are finished with a container, stop it with sudo docker stop and remove it with sudo docker rm.
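The same pull, run, list, stop, and remove cycle can be scripted. The sketch below gives the container a name so that no ID has to be copied by hand, and runs it in the background with `-d` because the interactive `-it` form would block a script. The `DOCKER` variable, the function name, the container name `demo`, and the `sleep 300` placeholder command are all illustrative choices rather than anything Docker prescribes.

```shell
#!/usr/bin/env bash
# Sketch: the pull / run / list / stop / remove cycle as one function.
# DOCKER, the function name, and the default name "demo" are illustrative.
DOCKER="${DOCKER:-sudo docker}"

container_lifecycle() {
    local image="${1:-ubuntu}" name="${2:-demo}"
    # Download the image from Docker Hub.
    $DOCKER pull "$image" || return 1
    # -d runs the container in the background; 'sleep 300' keeps it alive
    # long enough to be listed and stopped.
    $DOCKER run -d --name "$name" "$image" sleep 300 || return 1
    # Show only the container started above.
    $DOCKER ps --filter "name=$name"
    # Stop the container, then delete it.
    $DOCKER stop "$name"
    $DOCKER rm "$name"
}
```

Setting `DOCKER=echo` before calling `container_lifecycle` prints the commands instead of executing them, which is a quick way to review what would run.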


Conclusion

Docker on Linux leverages kernel features such as namespaces and control groups (cgroups) to provide lightweight, isolated execution environments. This leads to faster deployment cycles and lower resource overhead than virtual machines typically incur. Linux-based systems are a natural fit for Docker because containers run natively on the host kernel without a hypervisor layer. For Linux admins and DevOps teams, Docker’s integration with orchestration tools like Kubernetes and CI/CD systems like Jenkins allows container deployment, scaling, and management to be automated. This combination simplifies infrastructure management, accelerates development pipelines, and improves system reliability. Docker also works across different Linux distributions (such as Ubuntu, CentOS, and Debian), making it a versatile choice for teams with diverse system environments. Whether you are building microservices, maintaining legacy applications, or developing new cloud-native applications, Docker’s consistency helps software behave the same way across all stages, from local development to production.