- Introduction to Azure Data Factory

- Key Features of Azure Data Factory

- How Azure Data Factory Works

- Data Integration and ETL Pipelines in Azure Data Factory

- Azure Data Factory vs Traditional ETL Tools

- Security and Compliance in Azure Data Factory

- Pricing Model and Cost Considerations

- Connecting Data Sources with Azure Data Factory

- Monitoring and Debugging in Azure Data Factory

- Best Practices for Using Azure Data Factory

- Limitations and Challenges in Azure Data Factory

- Getting Started with Azure Data Factory (Step-by-Step Guide)

- Conclusion

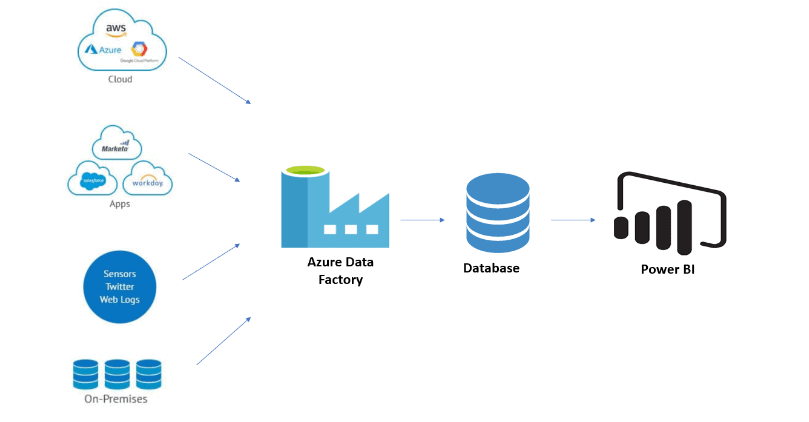

Introduction to Azure Data Factory

Azure Data Factory (ADF) is a cloud-based data integration service provided by Microsoft Azure. It enables organizations to create, schedule, and orchestrate ETL (Extract, Transform, Load) workflows. ADF allows users to move and transform data from various sources to Azure data services like Azure Data Lake, Azure SQL Database, and more. With a visual interface and broad integration capabilities, it empowers developers and data engineers to build scalable data pipelines that support both on-premises and cloud-based data sources. In the modern data landscape, organizations handle large volumes of data spread across different environments (on-premises, cloud, and hybrid). Azure Data Factory provides the tools to integrate and process data from disparate sources, transforming and loading it into desired destinations with minimal effort.

Key Features of Azure Data Factory

- Data Integration and Orchestration: Azure Data Factory allows seamless data integration from a wide range of sources (on-premises, cloud, SaaS, etc.) into a centralized data repository for processing and analysis.

- ETL and ELT Pipelines: ADF supports Extract, Transform, Load (ETL) and Extract, Load, Transform (ELT) workflows, enabling users to build complex data pipelines that can handle large volumes of data.

- Data Movement and Transformation: The service supports data movement between on-premises and cloud storage and transformation using activities like data flows, stored procedures, and external transformations.

- Scalability: ADF is designed to scale automatically based on the volume of data being processed. It supports parallel execution and scaling compute resources to handle high-demand workloads.

- Built-in Data Connectors: Azure Data Factory provides over 90 pre-built connectors for common data sources, including SQL and NoSQL databases, file-based systems, APIs, and cloud storage, which simplifies integration with diverse systems.

- Monitoring and Management: ADF provides a comprehensive monitoring and management interface for tracking pipeline performance, viewing logs, and receiving notifications for errors or performance bottlenecks.

- Integration with Azure Services: ADF can be easily integrated with other Azure services such as Azure Machine Learning, Azure Databricks, Azure Synapse Analytics, and Azure SQL Database to enhance its capabilities for data processing and analytics.

- Serverless Data Flow: Azure Data Factory supports serverless data flows, which can be run without worrying about managing infrastructure, allowing users to focus purely on data transformations.

- Secure Data Movement: ADF encrypts data both in transit and at rest, protecting data privacy during integration and transformation.

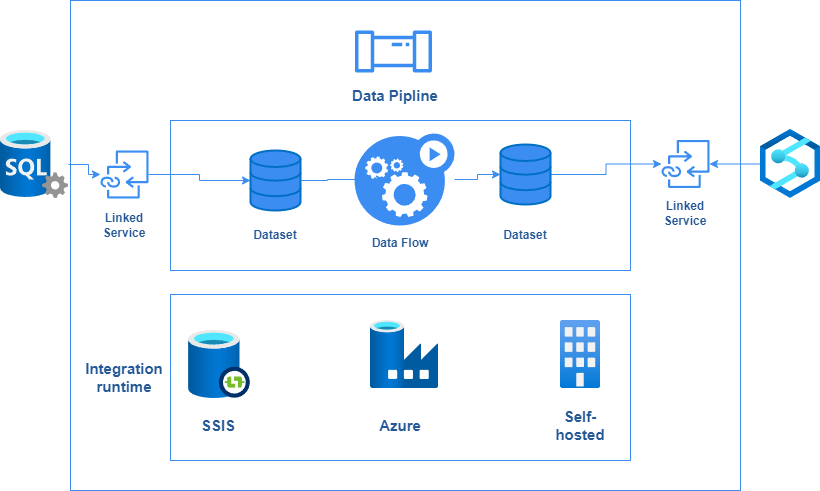

How Azure Data Factory Works

Azure Data Factory (ADF) builds and orchestrates data pipelines that define how data flows between sources, destinations, and transformation activities. A pipeline comprises one or more data movement and transformation activities. For data movement, ADF transfers data between on-premises and cloud-based data stores, using linked services to connect to each data source.

For transformation, ADF offers data flows for complex transformations and can invoke external services such as Azure Databricks or Azure HDInsight. Transformations can also run within Azure SQL Database or via Azure Functions. ADF orchestrates these workflows across different systems through activities such as copying data, transforming it, and executing stored procedures. Pipeline control features include scheduling, error handling, retry logic, and logging.

Once a pipeline is defined, users can monitor its progress through the Azure portal, which shows real-time status updates on data movements and transformations. Pipelines can be triggered on a time schedule, in response to events, or when data changes, so processing runs automatically at the right time. This flexibility in handling complex data workflows makes Azure Data Factory a vital tool for modern data engineering.
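To make the pipeline idea concrete, here is a minimal sketch of a pipeline definition built as a Python dictionary and serialized to JSON, the format ADF uses to store pipelines. The dataset names (`SalesCsv`, `SalesTable`), the activity name, and the chosen source and sink types are assumptions for illustration; a real definition depends on the connectors and datasets in your factory.

```python
import json


def build_copy_pipeline(name, source_dataset, sink_dataset, retries=3):
    """Return a pipeline definition with a single Copy activity.

    The dataset names are hypothetical and must refer to datasets
    that already exist in the factory.
    """
    copy_activity = {
        "name": "CopySourceToSink",  # hypothetical activity name
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        # Source and sink types depend on the connectors in use; these two
        # are assumed here for a delimited-text-to-SQL copy.
        "typeProperties": {
            "source": {"type": "DelimitedTextSource"},
            "sink": {"type": "AzureSqlSink"},
        },
        # Retry logic is declared per activity.
        "policy": {"retry": retries, "retryIntervalInSeconds": 60},
    }
    return {"name": name, "properties": {"activities": [copy_activity]}}


pipeline = build_copy_pipeline("CopySalesData", "SalesCsv", "SalesTable")
print(json.dumps(pipeline, indent=2))
```

The printed JSON has the same overall shape as the code view of a pipeline in the authoring UI: a name, a list of activities, and per-activity settings such as the retry policy.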

Data Integration and ETL Pipelines in Azure Data Factory

Azure Data Factory is primarily used to integrate data across different environments and build ETL pipelines. It helps with:

- ETL (Extract, Transform, Load): This process extracts data from various sources (databases, files, cloud services), transforms it using data flows or external services, and loads the data into target destinations such as Azure Data Lake, Azure SQL Database, or Azure Synapse Analytics.

- ELT (Extract, Load, Transform): In ELT pipelines, data is loaded into the target storage first, and transformations are performed afterward, usually within services like Azure Databricks or Azure Synapse Analytics.

ADF offers several transformation activities within the pipeline, such as mapping data flows (for complex transformations), running stored procedures, and invoking Azure services (e.g., Azure HDInsight or Azure Machine Learning).
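The difference between ETL and ELT is only the order of the steps, which a toy example can show. The sketch below uses plain Python lists in place of real data stores; the sample rows and function names are hypothetical and do not call any ADF API.

```python
def extract():
    # Hypothetical raw rows, standing in for a source system.
    return [{"name": " Ada ", "amount": "10"}, {"name": "Grace", "amount": "32"}]


def transform(rows):
    # Trim names and cast amounts to integers.
    return [{"name": r["name"].strip(), "amount": int(r["amount"])} for r in rows]


def run_etl(target):
    # ETL: transform inside the pipeline, then load the cleaned rows.
    target.extend(transform(extract()))


def run_elt(target):
    # ELT: load the raw rows first, then transform them inside the target.
    target.extend(extract())
    target[:] = transform(target)


etl_target, elt_target = [], []
run_etl(etl_target)
run_elt(elt_target)
print(etl_target == elt_target)
```

Both targets end up with the same cleaned rows. What changes is where the transformation runs: in the pipeline for ETL, or in the destination system for ELT.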

Azure Data Factory vs Traditional ETL Tools

Azure Data Factory offers several advantages over traditional ETL tools. As a fully managed cloud service, ADF is cloud-native and scalable, automatically adjusting resources as needed without the complexity of server management or infrastructure scaling. Unlike traditional on-premises ETL tools, ADF supports hybrid integration, enabling seamless connectivity between both on-premises and cloud-based data stores. This makes it ideal for modern data architectures that require data integration from multiple, diverse sources. ADF also provides serverless data flows, eliminating the need for dedicated infrastructure to run transformations, unlike traditional ETL tools, which often require manually managed servers. Furthermore, ADF offers flexibility in data transformation, allowing users to define custom transformation logic using built-in transformations or external tools like Azure Databricks, HDInsight, or Azure SQL. The deep integration with the Azure ecosystem further enhances its capabilities, offering seamless connectivity with services like Azure Machine Learning, Azure Databricks, and Azure Synapse Analytics. This integration not only improves the flexibility of data workflows but also empowers organizations to leverage advanced analytics, machine learning, and big data processing without leaving the Azure platform. Additionally, ADF’s robust monitoring and management features ensure smooth operation, enabling users to keep track of pipeline performance and troubleshoot effectively. These advantages position Azure Data Factory as a superior tool for data integration, transformation, and orchestration.

Security and Compliance in Azure Data Factory

Security and compliance are essential aspects of working with sensitive data in any cloud-based service, and Azure Data Factory offers several features to ensure both:

- Data Encryption: All data moving through Azure Data Factory is encrypted in transit and at rest. ADF uses Azure Storage Service Encryption (SSE) for data at rest and TLS encryption for data in transit.

- Identity and Access Management: ADF integrates with Azure Active Directory (AAD, now Microsoft Entra ID) for role-based access control (RBAC), ensuring that only authorized users can access specific data or pipeline operations.

- Private Endpoints: Azure Data Factory supports private endpoints for secure communication between services, so that traffic to supported data stores stays on the Azure network.

- Compliance Certifications: Azure Data Factory complies with regulatory and industry standards such as GDPR, HIPAA, SOC 1, SOC 2, and ISO 27001, ensuring that data handling meets required compliance protocols.

- Network Security: You can implement network security using Virtual Networks (VNet) and private links, ensuring that data traffic is secured and does not traverse the public internet.

Pricing Model and Cost Considerations

Azure Data Factory pricing is based on several components that reflect the usage and scale of the data integration processes. Pipeline orchestration is charged by the number of activity runs, and monitoring by the number of run records retrieved. Data movement is charged for the time and compute (Data Integration Units) that copy activities consume, and network egress charges can apply when data leaves a region. For data flows, pricing is tied to the compute used for execution, measured in vCore-hours. If external services such as Azure Databricks or Azure SQL Database perform the transformations, those services bill their own compute separately.

To optimize costs, users can leverage Azure's cost management tools to track spending, size compute resources appropriately to avoid over-provisioning, and take advantage of auto-scaling where applicable. Practices such as minimizing unnecessary data movement, using batch processing, and optimizing pipeline designs can further reduce costs. Azure also offers different pricing options that allow for flexibility and cost control based on specific needs and usage patterns. By carefully managing resources and monitoring usage, organizations can get the most value from Azure Data Factory while keeping their cloud costs under control.
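The cost components above can be combined into a rough estimate. The sketch below assumes three meters (activity runs, data movement hours, and data flow compute hours) and leaves out external services. The rates are placeholders rather than real Azure prices, and all names are hypothetical; check the current pricing page before relying on any figure.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    # Placeholder prices, NOT real Azure rates; replace with current figures.
    per_1000_activity_runs: float = 1.0
    per_data_movement_hour: float = 0.25
    per_data_flow_compute_hour: float = 0.30


def estimate_monthly_cost(activity_runs, data_movement_hours,
                          data_flow_compute_hours, rates=None):
    """Return a per-component cost breakdown plus the total."""
    rates = rates or Rates()
    breakdown = {
        "orchestration": activity_runs / 1000 * rates.per_1000_activity_runs,
        "data_movement": data_movement_hours * rates.per_data_movement_hour,
        "data_flows": data_flow_compute_hours * rates.per_data_flow_compute_hour,
    }
    breakdown["total"] = sum(breakdown.values())
    return {component: round(cost, 2) for component, cost in breakdown.items()}


print(estimate_monthly_cost(activity_runs=30000,
                            data_movement_hours=100,
                            data_flow_compute_hours=200))
```

Keeping the breakdown per component makes it easier to see which part of a workload drives spending, for example whether data flow compute outweighs orchestration.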

Connecting Data Sources with Azure Data Factory

Azure Data Factory supports integration with a wide range of data sources. Common connections include:

- Azure Blob Storage and Data Lake: To store large volumes of data.

- SQL Databases: Including Azure SQL Database, SQL Server, and MySQL.

- Cloud Platforms: Amazon S3, Google Cloud Storage, and other cloud-based systems.

- On-Premises Data: Through Self-hosted Integration Runtime, ADF can connect to on-premises systems.

You can also use pre-built connectors to connect to SaaS data sources like Salesforce, Dynamics 365, and Google Analytics.
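Connections like these are described by linked service definitions. As a minimal sketch, the functions below build two such definitions as Python dictionaries: one for Azure Blob Storage and one for an on-premises SQL Server reached through a Self-hosted Integration Runtime. The service names, runtime name, and connection strings are placeholders, and real definitions usually reference secrets rather than embedding them.

```python
import json


def blob_linked_service(name, connection_string):
    """Linked service definition for Azure Blob Storage."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


def on_prem_sql_linked_service(name, connection_string, runtime_name):
    """Linked service for SQL Server reached via a self-hosted runtime."""
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {"connectionString": connection_string},
            # connectVia routes the connection through the named runtime.
            "connectVia": {
                "referenceName": runtime_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


# All values below are placeholders for illustration.
blob = blob_linked_service("BlobStore", "<storage-connection-string>")
sql = on_prem_sql_linked_service("OnPremSql", "<sql-connection-string>", "SelfHostedIR")
print(json.dumps([blob, sql], indent=2))
```

The only structural difference between the two is the `connectVia` block, which tells ADF to reach the store through the self-hosted runtime instead of the default Azure-hosted one.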

Monitoring and Debugging in Azure Data Factory

Azure Data Factory offers several tools to monitor the health and performance of pipelines. The pipeline monitoring interface within the Azure portal shows the status of each pipeline run, such as succeeded, failed, in progress, or cancelled. ADF also provides debugging capabilities that let users follow pipeline and data flow execution step by step to identify and resolve issues quickly. Alerts can be configured to notify users when pipelines fail or when thresholds such as execution time or resource usage are exceeded.

Activity logs capture detailed information about pipeline activities, including execution times, data throughput, and other relevant metrics, which helps users understand performance and troubleshoot anomalies. Azure Data Factory also integrates with Azure Monitor for advanced analytics, custom alerts, and detailed reporting. Continuous monitoring and performance tuning help keep data workflows efficient and cost-effective.
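The kind of check an alert rule performs can be sketched in a few lines. The example below summarizes a list of pipeline run records by status and flags runs that failed or exceeded a duration threshold. The record fields (`pipeline`, `status`, `duration_s`) and the sample data are hypothetical and do not reflect the exact shape of ADF or Azure Monitor output.

```python
from collections import Counter


def summarize_runs(runs, max_duration_s):
    """Count runs per status and list failed and slow pipelines."""
    status_counts = Counter(run["status"] for run in runs)
    failed = [run["pipeline"] for run in runs if run["status"] == "Failed"]
    slow = [run["pipeline"] for run in runs if run["duration_s"] > max_duration_s]
    return {"status_counts": dict(status_counts), "failed": failed, "slow": slow}


# Hypothetical run records for illustration.
sample_runs = [
    {"pipeline": "CopySalesData", "status": "Succeeded", "duration_s": 120},
    {"pipeline": "LoadInventory", "status": "Failed", "duration_s": 45},
    {"pipeline": "BuildReports", "status": "Succeeded", "duration_s": 1900},
]

print(summarize_runs(sample_runs, max_duration_s=900))
```

A summary like this separates two kinds of problems: runs that failed outright and runs that succeeded but took longer than expected, which often points to a performance or cost issue.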

Best Practices for Using Azure Data Factory

- Modularize Pipelines: Break down complex data pipelines into smaller, reusable components (e.g., datasets, linked services, and activities).

- Use Parameterization: Leverage parameters in ADF to make your pipelines dynamic and reusable across different environments and scenarios.

- Optimize Data Flow: When using data flows, optimize transformations and partitioning to improve performance and minimize costs.

- Monitor Regularly: Set up regular monitoring and alerting to manage pipeline performance and resolve issues quickly and proactively.
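Parameterization in particular is easier to see in a definition. The sketch below declares a pipeline parameter and passes it to a dataset through an expression, then supplies different values per environment. The parameter, dataset, and folder names are hypothetical, and the source and sink types are assumed for illustration.

```python
import json


def parameterized_pipeline(name):
    """Pipeline whose source folder is supplied at run time."""
    return {
        "name": name,
        "properties": {
            # Declared once; each run can override the default value.
            "parameters": {
                "sourceFolder": {"type": "String", "defaultValue": "incoming"},
            },
            "activities": [{
                "name": "CopyFromFolder",
                "type": "Copy",
                "inputs": [{
                    "referenceName": "SourceFiles",  # hypothetical dataset
                    "type": "DatasetReference",
                    # The expression is evaluated by ADF at run time.
                    "parameters": {
                        "folder": {
                            "value": "@pipeline().parameters.sourceFolder",
                            "type": "Expression",
                        },
                    },
                }],
                "outputs": [{"referenceName": "StagingTable", "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                },
            }],
        },
    }


# Hypothetical per-environment values passed when a run is started.
RUN_PARAMETERS = {
    "dev": {"sourceFolder": "dev/incoming"},
    "prod": {"sourceFolder": "prod/incoming"},
}

print(json.dumps(parameterized_pipeline("CopyByFolder"), indent=2))
```

With this layout, one pipeline definition serves every environment, and only the small `RUN_PARAMETERS` mapping changes between them.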

Limitations and Challenges in Azure Data Factory

Azure Data Factory offers powerful transformation capabilities, but it has some limitations when compared to fully featured data transformation tools like Azure Databricks or SQL-based ETL processes. The native transformation features in ADF may not be as flexible or comprehensive as those provided by specialized tools. Additionally, setting up Azure Data Factory in hybrid environments, where on-premises systems are integrated, can be complex and may require the installation and configuration of the Self-hosted Integration Runtime, adding to the overall setup time. For large-scale data operations, complex data flows with multiple transformation steps can lead to performance issues, especially when scaling to handle vast datasets. This can impact both execution times and resource usage, requiring careful optimization and resource management. Moreover, the learning curve associated with data flow development and orchestration in ADF can pose challenges for teams new to cloud-based ETL processes. Finally, troubleshooting issues in complex data pipelines can be time-consuming without proper monitoring and debugging strategies in place.

Getting Started with Azure Data Factory (Step-by-Step Guide)

Create an Azure Data Factory Instance:

- Log into the Azure portal and select Create a Resource.

- Search for Azure Data Factory, then click Create and fill in the necessary details like resource group, region, and name.

Create a Pipeline:

- After creating the instance, navigate to the Author tab and click Create Pipeline.

- Add activities like Copy Data, Execute Data Flow, or Run Stored Procedure.

Configure Linked Services:

- Set up Linked Services to connect to your data sources (e.g., Azure Blob Storage, SQL Database).

Publish and Trigger Pipelines:

- Once the pipeline is configured, click Publish to save the changes.

- Set up triggers or schedules to run the pipeline automatically.
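For the scheduling step, a trigger is itself a small definition that points at a pipeline. The sketch below builds an hourly schedule trigger as a Python dictionary. The trigger name, pipeline name, and start time are placeholders, and the pipeline must already be published for the trigger to run it.

```python
import json


def hourly_trigger(name, pipeline_name, start_time):
    """Schedule trigger that starts the given pipeline once per hour."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Hour",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": "UTC",
                },
            },
            # A trigger can start one or more pipelines.
            "pipelines": [{
                "pipelineReference": {
                    "referenceName": pipeline_name,
                    "type": "PipelineReference",
                },
                "parameters": {},
            }],
        },
    }


# Placeholder names and start time for illustration.
trigger = hourly_trigger("HourlyRun", "CopySalesData", "2024-01-01T00:00:00Z")
print(json.dumps(trigger, indent=2))
```

Changing `frequency` and `interval` adjusts the schedule, for example `"Day"` with an interval of 1 for a daily run.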

Conclusion

Azure Data Factory is a powerful tool for integrating, transforming, and orchestrating data across cloud and on-premises environments. It enables data movement and transformation at scale, making it easier to process large volumes of data from various sources, and its ability to connect to both on-premises and cloud-based data stores keeps data integration flexible. Its monitoring and logging capabilities provide visibility into data pipelines, helping teams quickly identify and resolve issues. Organizations can also rely on its scalability to handle fluctuating data loads while maintaining cost efficiency. By following best practices and addressing the challenges described above, organizations can optimize their data workflows and drive better analytics and business intelligence outcomes.