- Introduction to Kubernetes

- Importance of Kubernetes in Container Orchestration

- Kubernetes Architecture Overview

- Installing Kubernetes (Minikube & K8s Cluster)

- Understanding Pods, Nodes, and Deployments

- Kubernetes Services and Networking

- Kubernetes Storage and Persistent Volumes

- Scaling Applications in Kubernetes

- Managing ConfigMaps and Secrets

- Monitoring and Logging in Kubernetes

- Kubernetes Security Best Practices

- Real-World Kubernetes Use Cases

- Conclusion

Introduction to Kubernetes

In the rapidly evolving world of containerized applications, Kubernetes has emerged as the leading platform for container orchestration. Originally developed by Google, Kubernetes is an open-source system that automates the deployment, scaling, and management of containerized applications. It allows developers and system administrators to manage complex applications while supporting scalability, availability, and fault tolerance, which makes it a common foundation for cloud-native architectures. This article explores the importance of Kubernetes in container orchestration, provides an overview of the Kubernetes architecture, and delves into its key concepts and features. We will also cover the installation process, explain core components like Pods and Nodes, and discuss best practices for security, scaling, and monitoring in Kubernetes.

Importance of Kubernetes in Container Orchestration

Before Kubernetes, managing containers at scale was challenging and largely manual. Containers, such as those provided by Docker, offer a lightweight method for packaging applications and their dependencies. However, once these containers needed to be deployed in large numbers or across different environments, managing them by hand became cumbersome. Kubernetes solves this problem by providing an automated orchestration layer for managing containers.

- Automated Deployment and Scaling: Kubernetes handles application deployment and scaling, ensuring they run efficiently across multiple containers, clusters, and environments.

- Self-Healing: Kubernetes can automatically restart failed containers, reschedule them on healthy nodes, and manage service discovery and load balancing.

- High Availability: Kubernetes provides built-in redundancy and failover mechanisms, which ensure applications remain available even when failures occur in individual components.

- Resource Efficiency: Kubernetes optimizes resource usage by scheduling containers based on available resources, reducing wasted capacity, and maximizing performance.

- Infrastructure Agnostic: Kubernetes runs on the major cloud providers (AWS, Azure, GCP) and on on-premises infrastructure, making it a versatile tool for managing hybrid or multi-cloud environments.

Key Benefits of Kubernetes:

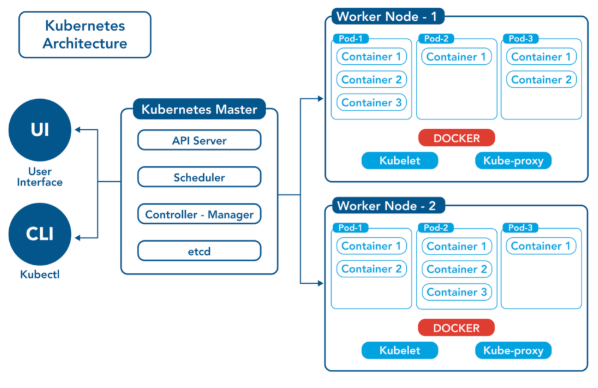

Kubernetes Architecture Overview

Kubernetes follows a control plane and worker node architecture. The control plane (historically called the master) manages the overall system and handles tasks like scheduling, scaling, and updates, while the worker nodes run the containers.

The control plane is responsible for the global state of the cluster. It manages task scheduling, monitors the cluster’s state, and makes global decisions about the cluster (e.g., scaling and rolling updates). Its components are:

- Kubernetes API Server (kube-apiserver): Acts as the front end for the Kubernetes control plane. It exposes the Kubernetes API and processes RESTful requests.

- etcd: A distributed key-value store that stores the configuration data, cluster state, and metadata. It is the source of truth for the Kubernetes cluster.

- Controller Manager: Runs the controllers responsible for maintaining the desired state of the cluster, such as the replication controller and the node controller.

- Scheduler: Assigns workloads (Pods) to available nodes in the cluster based on various factors, such as resource availability and node affinity.

Worker nodes are responsible for running the actual workloads (containers) and include the following components:

- Kubelet: An agent that ensures the containers are running as expected and reports back to the Kubernetes control plane.

- Container Runtime: The software responsible for running containers, such as Docker or containerd.

- Kube-proxy: Maintains network rules for Pod communication and load-balances Service traffic between Pods.
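The idea of controllers maintaining a desired state can be pictured as a reconciliation loop: compare what should exist with what does exist, then act on the difference. The following is a toy sketch in Python, not Kubernetes code; the function name `reconcile` and the pod naming scheme are hypothetical and chosen only for illustration.

```python
def reconcile(desired_replicas: int, running: list[str]) -> list[str]:
    """Return the actions a toy controller would take to reach the desired count."""
    actions: list[str] = []
    diff = desired_replicas - len(running)
    if diff > 0:
        # Too few pods: create the missing ones (hypothetical naming scheme).
        actions += [f"create pod-{len(running) + i}" for i in range(diff)]
    elif diff < 0:
        # Too many pods: delete the surplus, taken from the end of the list.
        actions += [f"delete {name}" for name in running[diff:]]
    return actions


# One pod is running but three are desired, so two must be created.
print(reconcile(3, ["pod-0"]))
# Three pods are running but one is desired, so two must be deleted.
print(reconcile(1, ["pod-0", "pod-1", "pod-2"]))
```

A real controller runs this kind of comparison continuously against the state stored behind the API server, which is why a cluster converges back to its declared configuration after a failure.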

Installing Kubernetes (Minikube & K8s Cluster)

Kubernetes can be installed in different environments depending on the use case. Minikube is often used for local development and testing, while managed Kubernetes services like Amazon EKS, Google GKE, or Azure AKS can be used for production deployments.

- Install dependencies: Ensure you have a container or virtual machine driver, such as Docker or VirtualBox, installed on your system.

- Download Minikube: Install Minikube using a package manager or manually download it from the official Minikube website.

- Start Minikube: Once installed, run the command minikube start to create a local Kubernetes cluster.

- Kubeadm: A tool that helps set up a Kubernetes cluster by automating tasks such as initializing the control plane node, joining the worker nodes, and preparing the cluster for a networking add-on.

- Cloud Managed Services: AWS (EKS), Google Cloud (GKE), and Azure (AKS) provide managed Kubernetes services that handle cluster creation, scaling, and management.

Installing Minikube:

Installing Kubernetes Cluster:

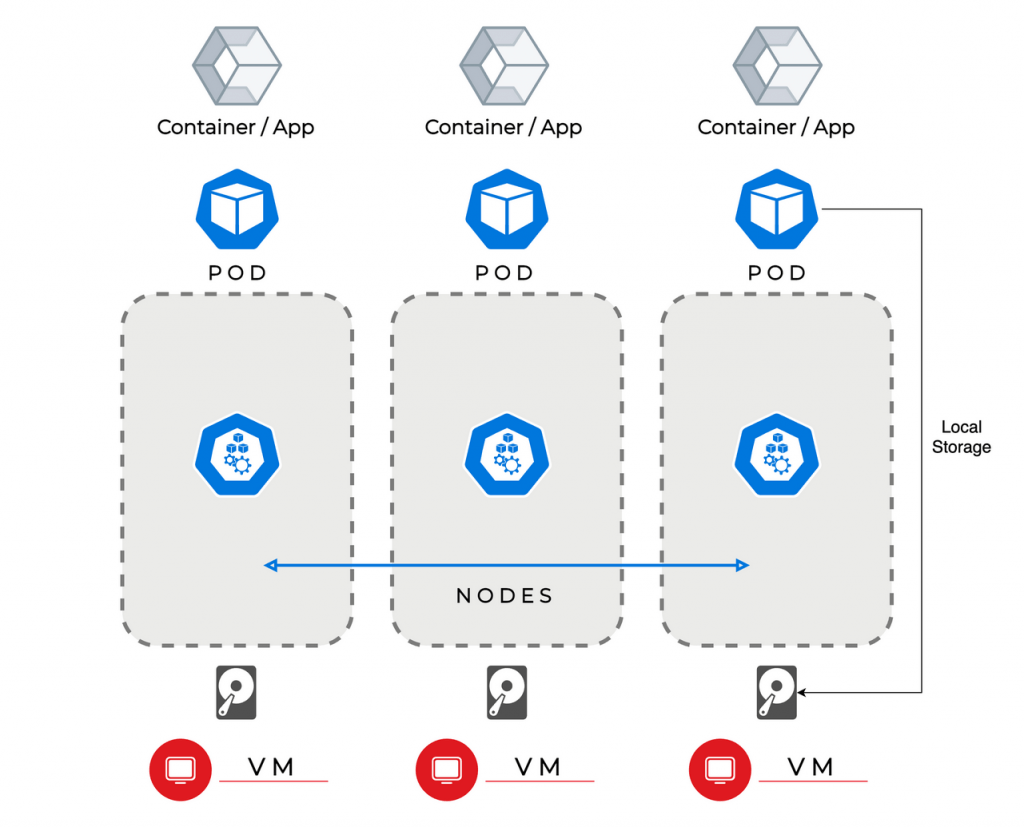

Understanding Pods, Nodes, and Deployments

- Pods: A Pod is a group of one or more containers that share the same network namespace, storage volumes, and configuration. Pods are used to run applications, and containers within the same Pod can communicate with each other over localhost. Pods can be deployed as single-container or multi-container units. Pods are ephemeral, meaning they can be created and destroyed automatically by Kubernetes as workloads and cluster conditions change.

- Nodes: A Node is a physical or virtual machine in the Kubernetes cluster that runs the services required to run Pods, such as the kubelet and the container runtime. A Kubernetes cluster typically has one or more worker nodes. Each node runs several Pods, and the number of Pods that can run on a node depends on its available resources. The Kubernetes control plane manages nodes and schedules Pods to run on them based on those resources.

- Deployments: A Deployment is a higher-level abstraction in Kubernetes for managing application deployments. It defines how Pods should be created, updated, and scaled. Deployments provide features like rolling updates, which allow updating an application with little or no downtime. A Deployment also keeps the requested number of Pod replicas running and replaces Pods that fail, which supports high availability.
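To make the relationship between a Deployment and its Pods concrete, here is a minimal sketch that builds a Deployment manifest as a Python dictionary and prints it as JSON, a format kubectl accepts alongside YAML. The helper `deployment_manifest`, the application name `web`, and the image `nginx:1.25` are assumptions chosen for illustration, not values from this article.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest (illustrative helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Number of Pod replicas the Deployment should keep running.
            "replicas": replicas,
            # The selector must match the labels on the Pod template below.
            "selector": {"matchLabels": labels},
            # RollingUpdate replaces Pods gradually instead of all at once.
            "strategy": {"type": "RollingUpdate"},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and passing it to kubectl apply -f would, under these assumptions, ask the cluster to keep three replicas of the Pod template running.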

Kubernetes Services and Networking

Kubernetes provides a range of networking concepts and services to facilitate communication between Pods and external clients. A Service in Kubernetes defines a logical set of Pods and provides a stable endpoint, such as an IP address and DNS name, for accessing them. This abstraction load-balances requests across the matching Pods, so clients do not need to track individual Pod addresses. There are several types of Services in Kubernetes: ClusterIP, the default Service type, is accessible only within the cluster; NodePort exposes the Service on a static port across all nodes in the cluster, allowing external access through any node’s IP at the specified port; and LoadBalancer integrates with cloud providers to provision an external load balancer, enabling access from outside the cluster. In addition, Ingress, which is a separate API object rather than a Service type, manages HTTP and HTTPS routing to Services, acting as a reverse proxy that routes external traffic to internal Services based on the URL path or domain. Together, these objects give Pods and external clients stable ways to reach each other as Pods come and go.
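As an illustration of how a Service selects Pods and maps ports, the sketch below builds a Service manifest as a Python dictionary. The helper `service_manifest`, the label `app: web`, and the port numbers are hypothetical; the helper only accepts the three Service types discussed above.

```python
import json

# The Service types covered in this section.
SERVICE_TYPES = {"ClusterIP", "NodePort", "LoadBalancer"}


def service_manifest(name: str, app: str, port: int, target_port: int,
                     service_type: str = "ClusterIP") -> dict:
    """Build a minimal v1 Service manifest (illustrative helper)."""
    if service_type not in SERVICE_TYPES:
        raise ValueError(f"unsupported service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            # Traffic is routed to Pods carrying this label.
            "selector": {"app": app},
            # 'port' is exposed by the Service; 'targetPort' is the container port.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


service = service_manifest("web", app="web", port=80, target_port=8080)
print(json.dumps(service, indent=2))
```

Passing `service_type="NodePort"` or `service_type="LoadBalancer"` changes only the `type` field; the selector and port mapping stay the same.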

Kubernetes Storage and Persistent Volumes

In Kubernetes, Pods are ephemeral, meaning that data stored inside a Pod will be lost if it is deleted. Kubernetes uses Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) to manage persistent storage.

- Persistent Volumes (PV): A storage resource in the cluster, abstracting physical storage like disks, NFS, or cloud storage services.

- Persistent Volume Claims (PVC): A request for storage by a user or application. PVCs allow Pods to claim and use PVs.

- Storage Classes: A StorageClass describes a type of storage, such as SSD, NFS, or cloud storage, and allows Kubernetes to provision PVs dynamically when a PVC requests that class.
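The relationship between a claim and a storage class can be sketched as a PersistentVolumeClaim manifest built in Python. The helper `pvc_manifest`, the claim name `data`, and the class name `standard` are assumptions for illustration; the storage classes available in a real cluster depend on how it was set up.

```python
import json


def pvc_manifest(name: str, size: str, storage_class: str) -> dict:
    """Build a minimal v1 PersistentVolumeClaim manifest (illustrative helper)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume can be mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            # Names the StorageClass used for dynamic provisioning (assumed name).
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


claim = pvc_manifest("data", size="1Gi", storage_class="standard")
print(json.dumps(claim, indent=2))
```

A Pod would then reference the claim by name in its volumes section, so the data outlives any individual Pod.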

Scaling Applications in Kubernetes

Kubernetes makes scaling applications easy through manual horizontal scaling and autoscaling, which help applications remain responsive and performant under varying loads.

- Horizontal Pod Autoscaler (HPA): Automatically scales the number of Pods based on CPU or memory usage.

- Manual Scaling: Developers can manually increase or decrease the number of Pods or instances for an application using the kubectl scale command.
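The core calculation behind the Horizontal Pod Autoscaler is a ratio: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below shows that arithmetic in Python. It deliberately ignores the tolerance band and stabilization behavior of the real autoscaler, and the function name and the default bounds are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Simplified HPA calculation: ceil(current * observed / target), then clamp."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result within the configured replica bounds.
    return max(min_replicas, min(max_replicas, raw))


# Four Pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))
# Four Pods averaging 30% CPU against a 60% target.
print(desired_replicas(4, current_metric=30, target_metric=60))
```

In the first call the workload is running hotter than the target, so the result is higher than the current count; in the second it is running cooler, so the result is lower.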

Managing ConfigMaps and Secrets

Kubernetes allows developers to separate configuration data from application code using ConfigMaps and Secrets, which helps with security and maintainability. ConfigMaps are used to store non-sensitive data such as environment variables, configuration files, or command-line arguments. These can be consumed by Pods either as environment variables or by mounting them as files, allowing configuration to be managed separately from the application code. In contrast, Secrets are designed to store sensitive information like passwords, API keys, and certificates. Secret values are base64-encoded, which is an encoding rather than encryption, so they should be protected with access controls and, where possible, encryption at rest. Keeping configuration and sensitive information out of the application code makes complex systems easier to manage securely.
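Because Secret values are only base64-encoded, anyone who can read the Secret object can recover the original value. The sketch below demonstrates this with the Python standard library. The helper `secret_manifest`, the Secret name `db-credentials`, and the password value are hypothetical.

```python
import base64


def secret_manifest(name: str, values: dict[str, str]) -> dict:
    """Build a minimal v1 Secret manifest with base64-encoded values."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


secret = secret_manifest("db-credentials", {"password": "s3cr3t"})
stored = secret["data"]["password"]

# Decoding requires no key: base64 is reversible by anyone who can read it.
decoded = base64.b64decode(stored).decode("utf-8")
print(stored, "->", decoded)
```

This is why restricting who can read Secrets matters as much as using them in the first place.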

Monitoring and Logging in Kubernetes

Effective monitoring and logging are essential to keeping a Kubernetes cluster running smoothly and to troubleshooting issues when they arise. Prometheus is a popular open-source monitoring solution that integrates well with Kubernetes, collecting metrics and tracking system health. Grafana is often used alongside Prometheus to create visual dashboards, providing a clear view of the cluster’s health and performance. For logging, Kubernetes can be paired with centralized logging solutions like the ELK Stack (Elasticsearch, Logstash, Kibana) or Fluentd, which aggregate logs from all containers and nodes in the cluster, making it easier to analyze and identify issues. With these tools, administrators can maintain the stability and performance of their Kubernetes environments and resolve problems more quickly.

Kubernetes Security Best Practices

Security is a critical aspect of Kubernetes, and implementing the following best practices helps protect your cluster from vulnerabilities:

- Use RBAC (Role-Based Access Control): Control who can access the Kubernetes API and what actions they can perform.

- Network Policies: Restrict communication between Pods using network policies, ensuring that only authorized traffic is allowed.

- Pod Security Standards: Enforce security standards for Pods, such as restricting privileged containers or ensuring that containers run as non-root users. PodSecurityPolicy, the original mechanism for this, was removed in Kubernetes 1.25 in favor of Pod Security Admission.

- Secrets Management: Use Kubernetes Secrets to manage sensitive data and limit access following the principle of least privilege.
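As one example of least privilege with RBAC, the sketch below builds a Role that allows only read operations on Pods in a single namespace, plus a RoleBinding that grants it to one user. The helper `read_only_pod_access`, the role name `pod-reader`, the binding name `read-pods`, and the user `jane` are all hypothetical.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def read_only_pod_access(namespace: str, user: str) -> list[dict]:
    """Build a Role and RoleBinding granting read-only access to Pods."""
    role = {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": "pod-reader"},
        "rules": [{
            "apiGroups": [""],  # "" denotes the core API group
            "resources": ["pods"],
            "verbs": ["get", "watch", "list"],  # read-only verbs
        }],
    }
    binding = {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": "read-pods"},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_API_GROUP}],
        "roleRef": {"kind": "Role", "name": "pod-reader", "apiGroup": RBAC_API_GROUP},
    }
    return [role, binding]


role, binding = read_only_pod_access("default", "jane")
print(json.dumps([role, binding], indent=2))
```

The Role contains no write verbs such as create or delete, so under these assumptions the user could inspect Pods in the namespace but not change them.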

Real-World Kubernetes Use Cases

- Microservices Architectures: Kubernetes is a popular choice for managing microservices architectures due to its ability to scale, monitor, and manage distributed services effectively.

- CI/CD Pipelines: Many organizations use Kubernetes as part of their continuous integration and deployment workflows, ensuring that new features and updates are deployed seamlessly.

- Multi-Cloud Deployments: Kubernetes allows businesses to deploy and manage applications across multiple cloud platforms, ensuring high availability and fault tolerance.

Conclusion

Kubernetes has changed how we deploy and manage applications in the cloud. With its container orchestration, scalability, and management features, Kubernetes has become the industry standard for building and deploying cloud-native applications. Whether you are working with microservices architectures, handling large-scale workloads, or improving your CI/CD pipelines, Kubernetes provides the flexibility and tools needed to meet modern application demands. Understanding its key concepts allows developers and operators to use it to its full potential.