- Overview of AWS Batch

- How AWS Batch Works

- Advantages of AWS Batch

- Key Components of AWS Batch

- Setting Up AWS Batch

- Job Definitions and Queues

- Compute Environments in AWS Batch

- Use Cases of AWS Batch

- Monitoring and Logging in AWS Batch

- AWS Batch Pricing

- Comparison with Other Batch Processing Services

- AWS Batch Best Practices

- Conclusion

Overview of AWS Batch

AWS Batch is a fully managed service from Amazon Web Services (AWS) that lets you run batch processing workloads of any size. Batch processing means executing jobs, typically computational ones, in the background without direct human intervention. Such jobs are common in high-performance computing (HPC), scientific simulations, financial modeling, image processing, data analysis, and machine learning model training.

Managing batch workloads can be complex: it involves coordinating compute resources, scheduling jobs, scaling infrastructure, and allocating resources. AWS Batch takes over these tasks by automating provisioning, scaling, and job execution. Whether you have a few jobs or many, AWS Batch provides a convenient and effective platform for batch processing.

This article explains how AWS Batch works and covers its key components, benefits, and use cases. It walks through setting up AWS Batch, configuring job definitions and queues, and managing compute environments, and it also covers monitoring, pricing, and best practices for optimizing AWS Batch usage.

How AWS Batch Works

AWS Batch enables you to execute batch computing workloads without having to manage the underlying infrastructure. The service provisions and manages compute resources automatically based on your specified requirements. Below is an overview of how AWS Batch works:

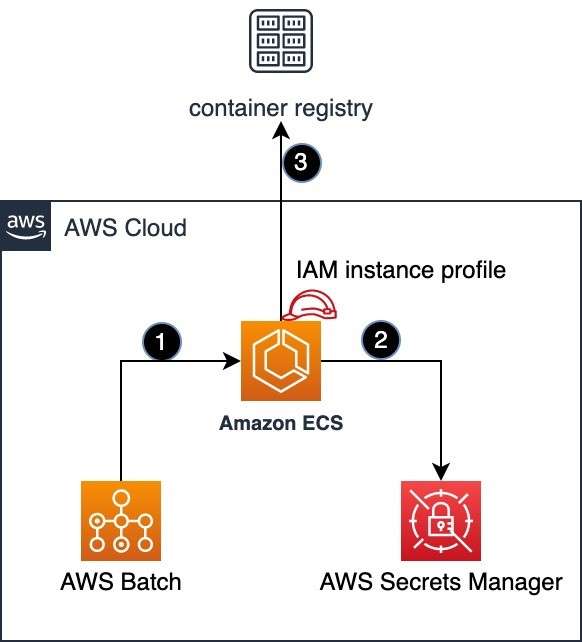

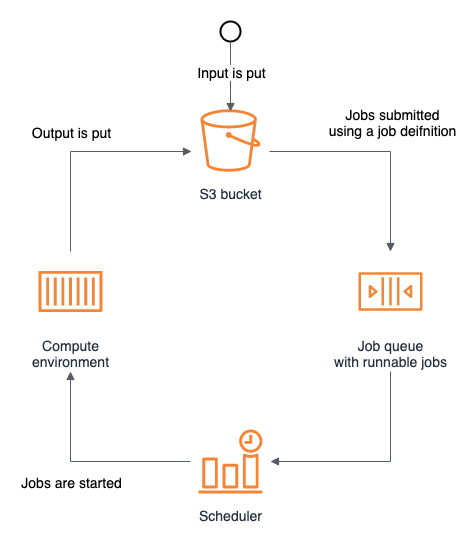

- Job Definition and Job Queues: Job Definitions specify the characteristics of your batch jobs, including the Docker image to run, the compute resources needed, the environment variables, and any job dependencies. Job Queues are employed to schedule and control job execution. Jobs are placed in a queue, and AWS Batch chooses the right computing resources based on priority and resource needs.

- Compute Environments: Compute Environments are the pools of resources AWS Batch uses to run jobs. AWS Batch provides two types of environments. In a managed environment, AWS Batch provisions and scales EC2 instances automatically based on the submitted jobs. In an unmanaged environment, you use your own EC2 instances and configure them to your requirements.

- Job Scheduling and Execution: AWS Batch uses a job scheduler to allocate compute resources efficiently. The scheduler assigns jobs to suitable resources depending on priority and available capacity, and the service scales up or down with demand to make efficient use of resources.

- Monitoring and Logging: AWS Batch provides real-time monitoring and logging by integrating with Amazon CloudWatch. This helps in tracking job status, resource utilization, and failures. Logs can be used for troubleshooting and optimizing job performance.

- Job Dependencies: AWS Batch supports job dependencies, which let you schedule jobs to run sequentially. For example, a job can wait for another job to complete before it starts. This is helpful for workflows that process tasks in sequence.
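The job dependency idea above can be sketched in Python. This is a minimal illustration, not a definitive implementation: it only builds dictionaries shaped like the keyword arguments of the AWS Batch SubmitJob request (`jobName`, `jobQueue`, `jobDefinition`, `dependsOn`) and makes no AWS calls. The helper `build_submit_request`, the queue and definition names, and the job ID are all hypothetical placeholders.

```python
from typing import Any, Dict, List, Optional


def build_submit_request(
    job_name: str,
    job_queue: str,
    job_definition: str,
    depends_on_job_ids: Optional[List[str]] = None,
) -> Dict[str, Any]:
    """Build keyword arguments shaped like an AWS Batch SubmitJob request."""
    request: Dict[str, Any] = {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
    }
    if depends_on_job_ids:
        # Each entry names a job that must complete before this one starts.
        request["dependsOn"] = [{"jobId": job_id} for job_id in depends_on_job_ids]
    return request


# Hypothetical two-stage workflow: "transform" waits for "extract".
extract = build_submit_request("extract", "example-queue", "example-etl-def")

# In a real run the job ID would come from the SubmitJob response;
# here it is a placeholder value chosen for illustration.
extract_job_id = "example-extract-job-id"

transform = build_submit_request(
    "transform", "example-queue", "example-etl-def", [extract_job_id]
)
```

With an SDK such as boto3 installed and credentials configured, each dictionary could be passed on as `client.submit_job(**request)`; that step is left out here so the sketch runs without AWS access.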

Advantages of AWS Batch

AWS Batch offers a number of advantages to organizations executing high-scale batch processing workloads:

- AWS Batch manages the infrastructure for you. You don’t need to provision servers, scale instances, or operate job queues yourself.

- One of the biggest advantages of AWS Batch is that it scales resources automatically according to demand. Whether you process a small batch of jobs or scale to thousands, AWS Batch dynamically provisions and releases compute resources accordingly.

- AWS Batch can lower the cost of batch workloads by running them on EC2 Spot Instances. Spot Instances let you use idle EC2 capacity at a lower price, which makes them a cost-effective option for batch processing.

- AWS Batch supports several compute options, including a range of EC2 instance types and AWS Fargate, which lets you run containers without managing the underlying infrastructure.

- AWS Batch supports high-performance computing jobs, such as simulations, computational science calculations, and data processing, by making dedicated computing capacities, such as GPU instances, available for use in computationally intensive jobs.

- AWS Batch plays nicely with other AWS services, such as S3 for input and output data storage, CloudWatch for monitoring, and IAM for access control, so it is easy to integrate into your existing AWS infrastructure.

Key Components of AWS Batch

AWS Batch is built on several key components that work together to manage and execute batch jobs:

- Job Definition: Specifies how a job must be run. It sets parameters such as the Docker image, environment variables, required resources (vCPUs, memory), and the number of retries on failure. For security, you can also specify the job’s IAM role.

- Job Queues: Hold jobs waiting to be run. Each queue has a priority, and jobs in higher-priority queues are run before jobs in lower-priority queues. You can have multiple job queues, for example one per job type or priority level.

- Compute Environments: AWS Batch provides Managed and Unmanaged Compute Environments in which to run your jobs. In a Managed Compute Environment, AWS Batch provisions the necessary EC2 instances automatically based on your jobs’ requirements. In an Unmanaged Compute Environment, you provide the EC2 instances that AWS Batch uses to run jobs.

- Compute Resources: Containerized jobs can run on EC2 instances, EC2 Spot Instances, or AWS Fargate. AWS Batch scales these resources automatically based on job requirements.

- Job Scheduler: Determines where and when to execute jobs. It optimizes job placement based on job requirements, available resources, and job priority.
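To make the job definition and job queue components more concrete, here is a minimal Python sketch. It only builds dictionaries shaped like the AWS Batch RegisterJobDefinition and CreateJobQueue requests and makes no AWS calls. The helper names, image URI, role ARN, and resource names are hypothetical placeholders, not values from this article.

```python
from typing import Any, Dict, List


def job_definition_request(
    name: str,
    image: str,
    vcpus: int,
    memory_mib: int,
    environment: Dict[str, str],
    attempts: int,
    job_role_arn: str,
) -> Dict[str, Any]:
    """Build keyword arguments shaped like a RegisterJobDefinition request."""
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": {
            "image": image,
            # Resource values are passed as strings in the request.
            "resourceRequirements": [
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
            "environment": [
                {"name": key, "value": value} for key, value in environment.items()
            ],
            "jobRoleArn": job_role_arn,
        },
        # Number of times a failed job is attempted in total.
        "retryStrategy": {"attempts": attempts},
    }


def job_queue_request(
    name: str, priority: int, compute_environments: List[str]
) -> Dict[str, Any]:
    """Build keyword arguments shaped like a CreateJobQueue request."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,
        # Compute environments are tried in ascending "order".
        "computeEnvironmentOrder": [
            {"order": index, "computeEnvironment": env}
            for index, env in enumerate(compute_environments, start=1)
        ],
    }


# Hypothetical example values for illustration only.
definition = job_definition_request(
    name="example-etl-def",
    image="example.registry/etl:latest",
    vcpus=2,
    memory_mib=4096,
    environment={"STAGE": "test"},
    attempts=3,
    job_role_arn="arn:aws:iam::123456789012:role/example-batch-job-role",
)
queue = job_queue_request("example-high-priority", 10, ["example-compute-env"])
```

Keeping the request construction in plain functions makes the settings easy to review and unit test before they are handed to an SDK client.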

Setting Up AWS Batch

Setting up AWS Batch involves several steps to configure the service for your processing needs:

Step 1: Create a Compute Environment. Choose either a Managed Compute Environment (where AWS Batch allocates resources for you) or an Unmanaged Compute Environment (where you supply your own instances).

Step 2: Create a Job Queue. Create one or more job queues that reflect the priority levels of your jobs. Jobs are placed into these queues, and the AWS Batch scheduler selects compute resources based on queue priority and job requirements.

Step 3: Define Job Definitions. Specify the job properties, such as the Docker image, the type and amount of compute resources required, and any job-specific parameters. You can also specify retry policies and, for security, IAM roles.

Step 4: Submit Jobs. After your environment is configured, you can submit jobs to the queues for processing. AWS Batch takes care of scheduling and resource provisioning.

Step 5: Monitor and Log Jobs. Use Amazon CloudWatch to track job execution and view logs. CloudWatch offers visibility into job performance, resource usage, and potential problems.

Job Definitions and Queues

Job Definitions specify the resource and execution needs for batch jobs. Important aspects of a job definition are:

- The Docker image to use for the containerized job.

- Resource needs such as vCPUs and memory.

- Job-specific environment variables.

- Retry behavior in the event of job failure.

- IAM roles that grant the job the permissions it requires.

- Reusability: you can submit many jobs from the same job definition.

Job Queues:

A Job Queue is where jobs wait for execution. You can set up several queues to handle different types of workloads. Every queue has a priority, and AWS Batch processes jobs in higher-priority queues before jobs in lower-priority queues.

Compute Environments in AWS Batch

A Compute Environment in AWS Batch is the compute capacity used to execute jobs. AWS Batch lets you choose between:

Managed Compute Environment: AWS Batch automatically provisions and manages the compute resources used for job execution.

Unmanaged Compute Environment: You supply and manage your own EC2 instances, which AWS Batch uses to execute the jobs.

Use Cases of AWS Batch

AWS Batch suits many workloads that require large-scale batch processing, including:

- High-Performance Computing (HPC): Scientific simulations, weather forecasting, seismic processing, and bioinformatics tend to demand powerful, scalable computing resources. AWS Batch accommodates these workloads by launching specialized EC2 instances, such as GPU instances.

- Data Analytics: AWS Batch is well suited to data transformation, ETL (Extract, Transform, Load), and large-scale analytics jobs such as log processing.

- Machine Learning: AWS Batch can be used to train machine learning models at scale, including training jobs that demand distributed compute resources and GPU acceleration.

- Image and Video Processing: Video transcoding, image processing, and rendering jobs can be handled efficiently with AWS Batch.

Monitoring and Logging in AWS Batch

AWS Batch integrates with Amazon CloudWatch for job monitoring and logging. You can monitor:

- Job status (e.g., running, completed, failed).

- Resource usage (e.g., vCPUs and memory).

- Job-generated logs.

CloudWatch also offers metrics for monitoring job performance and diagnosing bottlenecks or errors.

AWS Batch Pricing

AWS Batch pricing is based on the compute resources used for job processing. The most important pricing factors are:

- EC2 Instance Pricing: Fees for the EC2 instances, including Spot Instances, that run your jobs.

- AWS Fargate Pricing: If you use Fargate for serverless compute, you are charged for the container resources your jobs use.

- Storage and Data Transfer: Data storage (e.g., in Amazon S3) and data transfer incur charges.

Comparison with Other Batch Processing Services

AWS Batch differs from other batch processing offerings such as Google Cloud Dataflow or Azure Batch in several respects, including its tight integration with other AWS services, cost savings through Spot Instances, and its container-based job model.

AWS Batch Best Practices

To get the most out of AWS Batch:

- Take Advantage of EC2 Spot Instances: Use Spot Instances to save costs when running large-scale batch workloads.

- Monitor and Tune Jobs: Check CloudWatch metrics regularly to diagnose resource bottlenecks.

- Leverage Job Dependencies: Link related jobs with job dependencies to ensure that they execute in the right order.

- Test Job Definitions: Run your job definitions on a small subset of data before using them for large workloads.
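One practice this article recommends is running batch workloads on EC2 Spot Instances. The following is a minimal sketch of what a managed, Spot-backed compute environment request might look like. It only builds a dictionary shaped like the AWS Batch CreateComputeEnvironment request and makes no AWS calls. The helper name, subnet, security group, and instance profile values are hypothetical, and the allocation strategy shown is one possible choice rather than a recommendation from the source.

```python
from typing import Any, Dict, List


def spot_compute_environment_request(
    name: str,
    max_vcpus: int,
    subnets: List[str],
    security_group_ids: List[str],
    instance_role: str,
) -> Dict[str, Any]:
    """Build keyword arguments shaped like a CreateComputeEnvironment request."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",  # AWS Batch provisions and scales the instances
        "state": "ENABLED",
        "computeResources": {
            "type": "SPOT",  # run jobs on Spot capacity
            "allocationStrategy": "SPOT_CAPACITY_OPTIMIZED",
            "minvCpus": 0,  # allow scaling down to zero when no jobs are queued
            "maxvCpus": max_vcpus,  # upper bound on capacity, which also caps spend
            "instanceTypes": ["optimal"],  # let AWS Batch choose instance sizes
            "subnets": subnets,
            "securityGroupIds": security_group_ids,
            "instanceRole": instance_role,
        },
    }


# Hypothetical placeholder identifiers for illustration only.
spot_env = spot_compute_environment_request(
    name="example-spot-env",
    max_vcpus=64,
    subnets=["subnet-00000000"],
    security_group_ids=["sg-00000000"],
    instance_role="example-ecs-instance-profile",
)
```

Because Spot capacity can be reclaimed, a setup like this is usually paired with a retry strategy in the job definition so that interrupted jobs are attempted again.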

Conclusion

AWS Batch is a powerful, fully managed service that simplifies batch processing workloads by automating job scheduling, resource provisioning, and scaling. It supports a wide range of use cases, including high-performance computing, data analytics, machine learning, and image processing. With flexible compute environments, cost-effective options like EC2 Spot Instances, and seamless integration with other AWS services, AWS Batch provides an efficient and scalable solution for organizations of all sizes. By following best practices such as leveraging Spot Instances, using job dependencies, and monitoring performance with CloudWatch, users can maximize efficiency and cost savings. Whether you are handling a few jobs or scaling up to thousands, AWS Batch offers a reliable and optimized batch processing experience.