VMware vCenter Server is the main control center of your vSphere environment. Whether you install it on a Windows or Linux operating system, the following best practices can help you keep it secure:

- To help keep VMware secure, make sure your vCenter Server systems use static IP addresses and host names. Each IP address must have a valid internal DNS registration, including reverse name resolution.

- By default, vCenter Server changes the password for the vpxuser account automatically every 30 days. Change this interval if necessary to comply with your security policy.

- If you are running vCenter Server on Windows, make sure the remote desktop host configuration is set to the highest level of encryption.

- Make sure that the operating system is up to date on security patches.

- Install an antivirus solution and keep it up to date.

- Make sure that the time source is configured to sync with a time server or a time server pool, in order to ensure proper certificate validation.

- Do not allow users to log in directly to the vCenter Server host machine.
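The DNS requirement in the first item above (a forward record plus matching reverse resolution) can be spot-checked with a short script. This is only a sketch using Python's standard `socket` module, not VMware tooling. The function name `check_forward_reverse` and its injectable resolver parameters are hypothetical, chosen so the logic can be exercised without a live DNS server.

```python
import socket
from typing import Callable, List, Tuple


def check_forward_reverse(
    hostname: str,
    expected_ip: str,
    forward: Callable[[str], str] = socket.gethostbyname,
    reverse: Callable[[str], Tuple[str, List[str], List[str]]] = socket.gethostbyaddr,
) -> List[str]:
    """Return a list of problems; an empty list means both lookups agree."""
    problems: List[str] = []

    # Forward lookup: the host name should resolve to the static IP address.
    try:
        resolved_ip = forward(hostname)
    except OSError as exc:
        return [f"forward lookup failed for {hostname}: {exc}"]
    if resolved_ip != expected_ip:
        problems.append(
            f"{hostname} resolves to {resolved_ip}, expected {expected_ip}"
        )

    # Reverse lookup: the IP address should map back to the same host name.
    try:
        primary, aliases, _ = reverse(expected_ip)
    except OSError as exc:
        problems.append(f"reverse lookup failed for {expected_ip}: {exc}")
        return problems
    names = {name.lower().rstrip(".") for name in [primary, *aliases]}
    if hostname.lower().rstrip(".") not in names:
        problems.append(
            f"{expected_ip} maps back to {primary}, not {hostname}"
        )
    return problems
```

Called with only a host name and an address, for example `check_forward_reverse("vcenter.example.local", "10.0.0.5")` (both values are placeholders), the function uses the system resolver.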

To secure your ESXi hypervisor, implement the following best practices:

- Add each ESXi host to the Microsoft Active Directory domain, so you can use AD accounts to log in and manage each host’s settings.

- Configure all ESXi hosts to synchronize time with the central NTP servers.

- Enable lockdown mode on all ESXi hosts. That way, you can choose whether to enable the direct console user interface (DCUI) and whether users can log in directly to the host or only via the vCenter Server.

- Configure remote logging for your ESXi hosts so you have a centralized store of ESXi logs for a long-term audit record.

- Keep ESXi hosts patched to mitigate vulnerabilities. Attacks often try to exploit known vulnerabilities to gain access to an ESXi host.

- Keep secure shell (SSH) disabled (this is the default setting).

- Specify how many failed login attempts can be made before the account is locked out.

- ESXi version 6.5 and later supports UEFI secure boot at each level of the boot stack. Use this feature to protect against malicious configuration changes within the OS bootloader.
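To make the account lockout item above concrete, here is a minimal model of how a failed-login threshold and an unlock interval interact. It is a conceptual sketch, not ESXi's implementation: the class name `LockoutPolicy`, its fields, and the example values are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class LockoutPolicy:
    max_failures: int    # failed attempts allowed before the account locks
    unlock_seconds: int  # how long the account stays locked
    _failures: Dict[str, int] = field(default_factory=dict, init=False, repr=False)
    _locked_until: Dict[str, float] = field(default_factory=dict, init=False, repr=False)

    def is_locked(self, user: str, now: float) -> bool:
        """True while the user's lockout window has not yet elapsed."""
        return now < self._locked_until.get(user, 0.0)

    def record_attempt(self, user: str, success: bool, now: float) -> bool:
        """Return True if the login is accepted, False if it is rejected."""
        if self.is_locked(user, now):
            return False  # even a correct password is refused while locked
        if success:
            self._failures[user] = 0
            return True
        count = self._failures.get(user, 0) + 1
        self._failures[user] = count
        if count >= self.max_failures:
            # Threshold reached: start the lockout window and reset the counter.
            self._locked_until[user] = now + self.unlock_seconds
            self._failures[user] = 0
        return False
```

With a hypothetical `LockoutPolicy(max_failures=3, unlock_seconds=900)`, the third consecutive failure locks the account and further attempts are refused for 900 seconds. A lower threshold slows password guessing, but it also makes it easier for an attacker to lock out legitimate administrators.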

VMware maintains a hardware compatibility list for each version of its vSphere platform. Before purchasing or attempting to use any hardware configuration, first make sure that all of the components are supported. You also need to make sure that your hardware meets the minimum configuration necessary for proper installation and operation.

When possible, use newer hardware with hardware-assist features

Recent processors from both AMD and Intel include features that allow the hardware to assist VMware in its virtualization efforts. Hardware assistance comes in two forms: CPU virtualization and memory management virtualization.

Run your system through a burn-in/stress test

When building or buying a new system for use as a server, it’s always a good idea to run the system through a thorough stress test or burn-in period. Various software packages allow you to do this, and many are available as live CDs that you can boot to put the system through its paces. This helps to weed out faulty components and ensures a solid platform when you switch the system to production.

Choose appropriate back-end storage given the application

Many diverse systems exist for back-end storage, and your choice of technology can have a tremendous impact on the overall performance of your system. Disk I/O remains one of the major bottlenecks in computer hardware and has been one of the slowest areas to improve.

The choice of back-end storage devices and configurations depends on the type of applications you will be running. Commodity SATA drives in the server itself, for instance, will not suffice for high-speed writing to disk of large data sets produced by scientific equipment. If you need high-speed or high-volume storage, you may want to consider more robust storage options such as iSCSI or NFS. Your storage must be able to handle the required volume and read/write times for your applications to function smoothly, while also accommodating the overhead of the host operating system and the vSphere system itself.

Use server-class network interface cards

Not all network interface cards are created equal; built-in adapters tend to support only the needs of run-of-the-mill users. For enterprise-grade servers you’ll want to use server-class NICs that support checksum offloading, TCP segmentation offloading, 64-bit DMA addresses, multiple scatter-gather elements per frame, and jumbo frames. These features allow vSphere to make use of its built-in advanced networking support.

Optimize your servers’ BIOS settings

Motherboard manufacturers ship their products with the BIOS set to work across a wide variety of configurations, so it is not optimized for your particular hardware. Always update your BIOS to the latest version, then enable the hardware assists that vSphere will use, such as Hyper-Threading, “Turbo Mode”, VT-x, AMD-V, EPT, and RVI. Disable power-saving modes as well, because they have adverse effects on server performance.

Zoning considerations

- Each host must have at least 1 path to each InfiniBox node, with 2 HBAs. The total number of paths should not exceed 12.

- Avoid zoning the hosts to unnecessary storage arrays or volumes, in order to shorten the HBA rescan times.

- Straight after enabling FC connectivity between VMware hosts and InfiniBox, install Host PowerTools for VMware by deploying the OVF template from http://repo.infinidat.com/#host-power-tools-for-vmware

- It is recommended to register the cluster or datacenter via Host PowerTools and not manually, to avoid human mistakes and to register only those HBAs actually connected to InfiniBox.

- Make sure that you run “prepare host / cluster” to work with InfiniBox, since it changes important configuration settings and optimises performance, sometimes with a gain of over 50%.

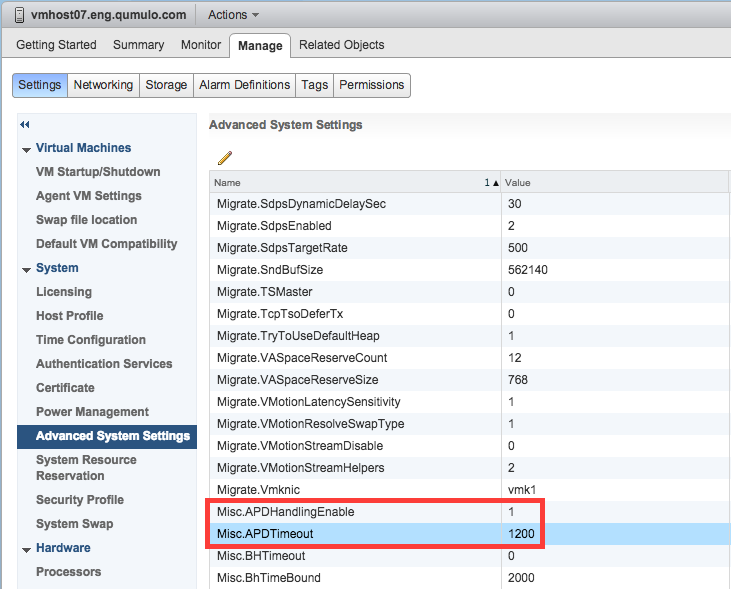
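The path-count rule from the zoning list above lends itself to a quick sanity check. The sketch below is only one reading of that guideline: it assumes "with 2 HBAs" means a host's paths should be spread over at least two distinct HBAs, and the function name `check_zoning` and the HBA and node names in the usage note are hypothetical.

```python
from typing import Iterable, List, Set, Tuple

MAX_PATHS = 12     # upper bound on total paths per host, from the guideline
REQUIRED_HBAS = 2  # assumed meaning of "with 2 HBAs" in the guideline


def check_zoning(paths: Iterable[Tuple[str, str]], nodes: Set[str]) -> List[str]:
    """Validate one host's paths, given as (hba_name, node_name) pairs."""
    path_list = list(paths)
    problems: List[str] = []

    # Every storage node must be reachable over at least one path.
    reached = {node for _, node in path_list}
    for node in sorted(nodes - reached):
        problems.append(f"no path to node {node}")

    # The paths should be spread over the expected number of HBAs.
    hbas = {hba for hba, _ in path_list}
    if len(hbas) < REQUIRED_HBAS:
        problems.append(f"only {len(hbas)} HBA(s) in use, expected {REQUIRED_HBAS}")

    # Too many paths lengthen rescans without adding useful redundancy.
    if len(path_list) > MAX_PATHS:
        problems.append(f"{len(path_list)} paths exceed the limit of {MAX_PATHS}")
    return problems
```

For example, two HBAs (`vmhba1`, `vmhba2`) each zoned to three nodes give six paths and an empty problem list, while a host with a single HBA zoned to only two of the three nodes is reported twice: once for the unreachable node and once for the missing second HBA.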

At Qumulo headquarters, we have applied a configuration setting on our internal VMware vSphere 6 hosts that allows them to better handle Qumulo cluster upgrades and reboots.

A storage device is considered to be in an All Paths Down (APD) state when it remains unreachable for a specified length of time. The default on vSphere 6 is 140 seconds, which is typically not long enough to survive a reboot of the host. If the host marks the datastore as APD, further I/O will cause read-only file systems in Linux guests, and has the potential to blue-screen Windows systems.

To help avoid these types of issues during an upgrade, we recommend increasing the APD timeout values:

- From Hosts and Clusters, select the Host

- Click the Manage tab > Settings

- Select Advanced System Settings

- Ensure Misc.APDHandlingEnable is set to 1

- Change Misc.APDTimeout to the value you’d like in seconds. Note that the default value is 140

We use a setting of 1200, which gives the hosts twenty minutes to retry accessing physical storage. Note that it is best to keep VMware Tools up to date on your guest operating systems to ensure the latest SCSI timeouts are applied as well.
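The arithmetic behind these values is simple, but it helps to see it written down. The sketch below turns a retry window in minutes into the two advanced settings named in the steps above and checks whether a given storage outage would fit inside the window. The helper names `apd_settings` and `survives_outage` are hypothetical, and nothing here talks to a host; the resulting values still have to be applied through the vSphere client as described.

```python
from typing import Dict

DEFAULT_APD_TIMEOUT_S = 140  # vSphere 6 default, as stated above


def apd_settings(retry_minutes: int) -> Dict[str, int]:
    """Build the advanced-setting values for a retry window given in minutes."""
    if retry_minutes <= 0:
        raise ValueError("retry window must be a positive number of minutes")
    return {
        "Misc.APDHandlingEnable": 1,            # keep APD handling switched on
        "Misc.APDTimeout": retry_minutes * 60,  # the setting is in seconds
    }


def survives_outage(timeout_seconds: int, outage_seconds: int) -> bool:
    """True if storage comes back before the APD timeout expires."""
    return outage_seconds < timeout_seconds
```

A twenty-minute window, `apd_settings(20)`, yields `Misc.APDTimeout` = 1200. A storage reboot that takes, say, ten minutes (600 seconds, an illustrative figure) fits within that window but not within the 140-second default.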

You should now be able to configure your VMware vSphere hosts to better handle cluster upgrades and reboots.

There are several ways to provide back-end storage for the virtual machines that run in your VMware cloud. You can store them locally on internal storage or on direct-attached storage (DAS). Another way is to connect your ESXi servers to a central back-end storage network, using block protocols such as FC and iSCSI or a file protocol such as NFS. Network-attached storage (NAS) over NFS is a solid, mature, highly available, high-performing foundation for virtualization environments.

Over the last couple of years I have helped several customers make sure that their virtualized environment is stable and high performing. Often when there are (performance) problems, people tend to focus on a specific part of the infrastructure, such as network or storage. Always look at it as a complete chain, from the user working with an application to the location where the data and/or application is stored.

This blog post gives an overview of deployment considerations and best practices for running Network Attached Storage in your VMware environment. I hope it provides some guidance and resolves some issues I often see at customers. You too can have a high-performing, stable NFS storage foundation for your VMware virtualized environment!

Highlights

- Make sure your VMware environment is updated and runs on patch 5 or newer if you have ESXi 5.5 and on Update 1a if you run with ESXi 6.0

- Run with portfast network ports on the network switches if you have STP enabled.

- Also check your network settings on the ESXi side, the switches used in between and of course on the storage side.

- If you use NFS version 3 and NFS version 4.1, do not mix them on the same volumes/data shares.

- In a mixed VMware environment it is best to run NFS version 3 everywhere.

- Separate the backend storage NFS network from any client traffic.

- Check your current design to confirm that all paths used are redundant, so that high availability is guaranteed for your clients.

- Within your naming convention, use only ASCII characters for your NFS network topology to prevent unpredictable failures.

- Always refer to your storage-array vendor’s best practices for guidelines on running NFS optimally in your environment.

- Check and adjust the default security, because NFS version 3 is insecure by default.

- Configure the advanced settings NFS.MaxVolumes, Net.TcpipHeapSize and Net.TcpipHeapMax.
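Two of the highlights above, not mixing NFS versions on the same share and sticking to ASCII names, are easy to check mechanically once you have an inventory of mounts. The following is a minimal sketch under stated assumptions: the `NfsMount` record and the `lint_mounts` function are hypothetical, and the inventory would have to be gathered from your hosts by other means.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Set, Tuple


class NfsMount(NamedTuple):
    datastore: str  # datastore name as shown on the host
    server: str     # NFS server name or address
    export: str     # exported path on the server
    version: str    # "3" or "4.1"


def lint_mounts(mounts: Iterable[NfsMount]) -> List[str]:
    """Report non-ASCII names and shares mounted with more than one NFS version."""
    problems: List[str] = []
    versions: Dict[Tuple[str, str], Set[str]] = defaultdict(set)

    for mount in mounts:
        versions[(mount.server, mount.export)].add(mount.version)
        for label, value in (
            ("datastore", mount.datastore),
            ("server", mount.server),
            ("export", mount.export),
        ):
            if not value.isascii():
                problems.append(f"non-ASCII {label} name: {value!r}")

    # The same share must not be mounted with both NFS 3 and NFS 4.1.
    for (server, export), seen in sorted(versions.items()):
        if len(seen) > 1:
            found = ", ".join(sorted(seen))
            problems.append(f"{server}:{export} is mounted with mixed NFS versions: {found}")
    return problems
```

Running this across the mounts of every host in a cluster, rather than per host, catches the case where one host mounts a share with version 3 and another mounts the same share with version 4.1.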

- Requires VMware vStorage APIs for Data Protection (VADP); VADP is included with all licensed vSphere editions: Standard, Enterprise, and Enterprise Plus

- Provides image-based agentless protection of guest virtual machines (VMs)

- Quick and easy to configure, no need to add VMs as individual sources:

  - Configure vCenter server as a source if managing multiple ESX servers in a cluster

  - Use ESX servers as a source if standalone or not managed by vCenter

- Automatically detects new and removed VMs

- Provides granular file/directory recovery via VMDK Browsing

- Preferred method of protection for quick disaster recovery and to meet stringent recovery time objectives

- Use LiveBoot to instantly spin up a VM on an ESX server

- Use Cloud LiveBoot to spin up a VM in Barracuda Cloud Storage

- Quicker and easier method of data restoration, including:

  - Restore to any ESX server

  - Choose destination datastore

  - Overwrite or rename the existing VM

  - Download individual virtual disks

- Uses VMware’s Changed Block Tracking (CBT) for incremental forever backups

- Host-based VM backups do not include the following, because VMware cannot snapshot them:

  - Physical Raw Disk Mapping (RDM) devices

  - Independent disks

- Barracuda LiveBrowse does not support Windows Dynamic Disk partitions; to restore files and folders from a VMDK, back up these disks using the Barracuda Backup Agent

- You cannot exclude individual files or directories from backup

- Can cause an increased storage footprint due to the image-based backup method; better suited to short-term retention

- Protect Microsoft applications running on VMs using the Barracuda Backup Agent as separate sources in conjunction with host-based backups, including:

  - Microsoft Exchange Server: only the databases need to be protected using the Barracuda Backup Agent. If you are using host-based VM backup in conjunction with Agent backup, you do not need to include the File System and System State with the Agent backup.

  - Microsoft SQL Server: only the databases need to be protected using the Barracuda Backup Agent. If you are using host-based VM backup in conjunction with Agent backup, you do not need to include the File System and System State with the Agent backup.

  - Microsoft Active Directory: the databases and System State must be protected using the Barracuda Backup Agent.
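The "incremental forever" idea mentioned in the list above can be illustrated with a toy model: take one full copy, then store only the blocks that changed since the previous backup. This sketch is conceptual and uses no VMware or Barracuda API. The real CBT feature records changed blocks as writes happen, whereas the hypothetical `changed_blocks` helper here compares two in-memory block lists to stand in for that change set, and it assumes the disk only ever grows.

```python
from typing import Dict, List, Set


def changed_blocks(previous: List[bytes], current: List[bytes]) -> Set[int]:
    """Indexes of blocks that are new or differ from the previous backup."""
    return {
        index
        for index, block in enumerate(current)
        if index >= len(previous) or previous[index] != block
    }


def incremental_backup(previous: List[bytes], current: List[bytes]) -> Dict[int, bytes]:
    """Store only the changed blocks, keyed by block index."""
    return {index: current[index] for index in sorted(changed_blocks(previous, current))}


def restore(full: List[bytes], increments: List[Dict[int, bytes]]) -> List[bytes]:
    """Rebuild the latest disk image from one full backup plus all increments."""
    disk = list(full)
    for increment in increments:
        for index in sorted(increment):
            if index < len(disk):
                disk[index] = increment[index]  # overwrite a changed block
            else:
                disk.append(increment[index])   # block added as the disk grew
    return disk
```

Because each increment holds only changed blocks, daily backups stay small, but a restore needs the original full backup and every increment since, applied in order.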