- Introduction to AWS S3 CLI

- Setting Up AWS CLI for S3 Operations

- Managing Buckets with AWS S3 CLI

- Basic AWS S3 CLI Commands

- Working with Objects in S3 Using CLI

- S3 Bucket Policies and Permissions via CLI

- Troubleshooting S3 CLI Commands

- Advanced S3 CLI Features

- Security Best Practices for S3 CLI Usage

- Conclusion

Introduction to AWS S3 CLI

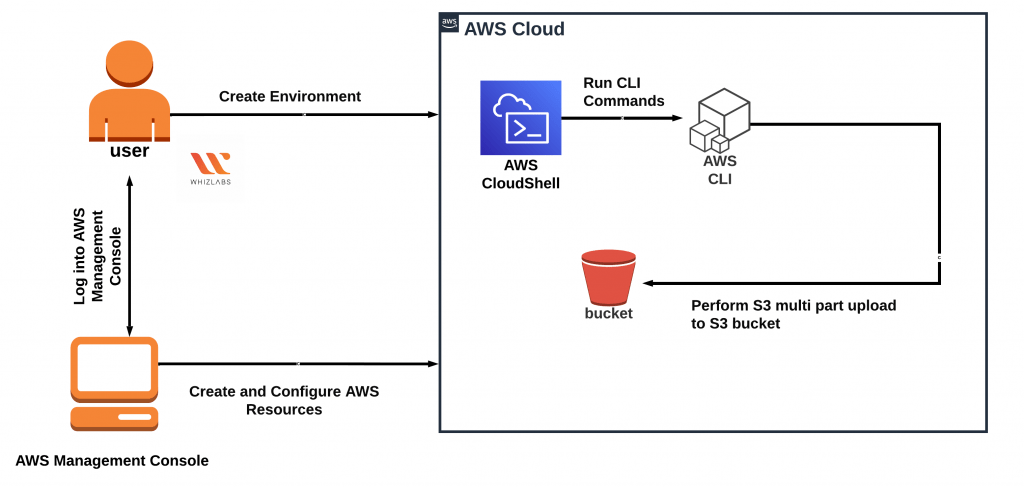

The AWS S3 CLI is a command-line tool for working with Amazon Simple Storage Service (S3) directly from a terminal. It is part of the broader AWS Command Line Interface (CLI) and provides a scriptable way to manage S3 resources such as buckets, objects, and access permissions. With it, you can upload and download files, sync large datasets, configure access controls, and automate backups without opening the AWS Management Console. Amazon S3 itself is a highly scalable, durable, and secure object storage service. The S3 commands follow a straightforward syntax, which makes them easy to integrate into scripts and cloud workflows. For anyone who prefers the terminal or needs to manage many resources programmatically, the AWS S3 CLI is an invaluable tool.


Setting Up AWS CLI for S3 Operations

Before you can use the AWS S3 CLI, you need to install the AWS CLI and configure it with your AWS credentials. The setup process involves several simple steps to ensure that your local machine or server can securely communicate with AWS services, including S3.

Step 1: Install the AWS CLI

- Windows: Download the AWS CLI installer from the official AWS website and run the installer.

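- Linux (Debian/Ubuntu): Install AWS CLI with apt:

- # sudo apt install awscli

- Alternatively, install with pip if you have Python installed:

- # pip install awscli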

- macOS: Install AWS CLI with Homebrew:

- # brew install awscli

- # aws configure

- AWS Access Key ID: Obtain this from the IAM section of your AWS Console.

- AWS Secret Access Key: This is generated along with your Access Key.

- Default Region Name: Specify the AWS region (e.g., us-west-2).

- Default Output Format: Choose the format in which you want the CLI to output results (e.g., JSON).

- # aws s3 ls

- List Buckets: To list all the S3 buckets in your AWS account:

- # aws s3 ls

- Create a New Bucket: To create a new bucket in a specified AWS region (bucket names must be globally unique):

- # aws s3 mb s3://my-new-bucket-name --region us-west-2

- List Objects in a Bucket: To list the contents of a specific bucket:

- # aws s3 ls s3://my-bucket-name/

- Upload Files to S3: To upload a file to your S3 bucket:

- # aws s3 cp localfile.txt s3://my-bucket-name/

- Download Files from S3: To download a file from an S3 bucket to your local system:

- # aws s3 cp s3://my-bucket-name/myfile.txt ./localfile.txt

- Sync Local Folder to S3: To sync a local directory with an S3 bucket, ensuring that only changed or new files are uploaded:

- # aws s3 sync ./localdir s3://my-bucket-name/

- Remove Files from S3: To delete a file from S3:

- # aws s3 rm s3://my-bucket-name/myfile.txt

- Delete a Bucket: To delete an empty S3 bucket:

- # aws s3 rb s3://my-bucket-name

- Upload Files to S3: To upload a file from your local system to an S3 bucket:

- # aws s3 cp localfile.txt s3://my-bucket-name/

- Download Files from S3: To download a file from S3 to your local system:

- # aws s3 cp s3://my-bucket-name/myfile.txt ./localfile.txt

- Sync Files Between Local and S3: To sync a local directory to an S3 bucket, use the sync command:

- # aws s3 sync ./localdir s3://my-bucket-name/

- Delete Files from S3: To remove a file from S3:

- # aws s3 rm s3://my-bucket-name/myfile.txt

- Copy Files Within S3: To copy a file from one S3 bucket to another:

- # aws s3 cp s3://source-bucket-name/myfile.txt s3://destination-bucket-name/myfile.txt

- Check IAM Permissions: If you receive an “Access Denied” error, make sure your IAM role or user has the appropriate S3 permissions to perform the desired operation. This can include permissions like s3:PutObject, s3:GetObject, and s3:ListBucket.

- Verify Bucket Name and Region: Ensure that the bucket name is correct. Bucket names are globally unique. Also, verify that you are operating in the correct region by using the --region flag if necessary.

- Check for Typos: A common issue with CLI commands is a simple typo in the bucket name or file path. Always double-check the spelling of your S3 bucket names and paths.

- Review Command Output: If you run into an error, the CLI will often provide detailed feedback. Review the error message for hints on what went wrong. For example, a permissions error usually names the operation that was denied, which tells you which action to add to your IAM policy.

- Check Network Connectivity: Ensure that your network connection is stable and that you can reach AWS services. If you’re behind a proxy or firewall, you may need to configure the CLI to work with it.

- Encrypting Objects in S3: Enforcing encryption protects data at rest. You can apply Server-Side Encryption (SSE) using keys managed by S3 (SSE-S3) or keys held in AWS KMS (SSE-KMS).

- Enabling MFA-Delete for Extra Protection: Multi-factor authentication (MFA) Delete prevents unauthorized deletions by requiring an additional authentication factor.

- Using IAM Roles and Policies: Instead of storing long-lived credentials locally, use IAM roles that follow the principle of least privilege when running AWS CLI commands.


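Linux: Install with your package manager, for example sudo apt install awscli on Debian or Ubuntu.

Alternatively, you can install with pip install awscli if you have Python installed.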

Step 2: Configure AWS CLI

Once the AWS CLI is installed, you must configure it with your AWS account credentials. To do this, run aws configure.

You will be prompted for your AWS Access Key ID, AWS Secret Access Key, default region name, and default output format.

Step 3: Verify Installation

To verify that the CLI is set up properly, you can run aws s3 ls.

This command should list the S3 buckets in your account. If you see an error message like “Access Denied,” check your IAM permissions and ensure that your credentials are correct.
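For reference, aws configure saves the four answers in two plain-text files, by default ~/.aws/config and ~/.aws/credentials. The sketch below recreates that layout in a scratch directory so nothing real is overwritten. The demo_dir variable and the placeholder key values are assumptions made for illustration.

```shell
# Scratch directory so this sketch never touches a real ~/.aws directory.
demo_dir=$(mktemp -d)

# Counterpart of ~/.aws/config: default region and output format.
cat > "$demo_dir/config" <<'EOF'
[default]
region = us-west-2
output = json
EOF

# Counterpart of ~/.aws/credentials: placeholders, not real keys.
cat > "$demo_dir/credentials" <<'EOF'
[default]
aws_access_key_id = YOUR_ACCESS_KEY_ID
aws_secret_access_key = YOUR_SECRET_ACCESS_KEY
EOF

# Keep the credentials file readable only by its owner.
chmod 600 "$demo_dir/credentials"

# Show what was written.
cat "$demo_dir/config"
```

Pointing the AWS_CONFIG_FILE and AWS_SHARED_CREDENTIALS_FILE environment variables at files like these makes the CLI read them instead of the defaults.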

Managing Buckets with AWS S3 CLI

Managing S3 buckets through the CLI is straightforward: you can create, list, delete, and configure buckets with a handful of commands. To create a new bucket, use aws s3 mb s3://my-new-bucket. To list all buckets in your AWS account, use aws s3 ls. To delete a bucket, make sure it is empty first and then run aws s3 rb s3://my-bucket-name. To change access control settings, such as making a bucket public, use aws s3api put-bucket-acl --bucket my-bucket-name --acl public-read; note that this call is rejected while ACLs are disabled or Block Public Access is turned on for the bucket, which is the default for newly created buckets. To keep multiple versions of objects, enable bucket versioning with aws s3api put-bucket-versioning --bucket my-bucket-name --versioning-configuration Status=Enabled. You can also configure lifecycle policies with aws s3api put-bucket-lifecycle-configuration to automate tasks such as expiring objects or transitioning them to different storage classes. To copy files to and from a bucket, use aws s3 cp, for example aws s3 cp myfile.txt s3://my-bucket-name/.

To sync directories, use aws s3 sync to synchronize a local directory with an S3 bucket or vice versa. To record the requests made against a bucket, enable server access logging with aws s3api put-bucket-logging. Finally, you can set up cross-region replication, which automatically copies objects between S3 buckets in different AWS regions, with aws s3api put-bucket-replication. These additional commands further extend what you can manage and automate with AWS S3.
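As a rough sketch of the logging step, put-bucket-logging takes a small JSON document that names the bucket receiving the logs. The target bucket my-logs-bucket and the prefix access-logs/ are placeholders chosen for this example.

```shell
# Write a minimal logging configuration.
# my-logs-bucket and access-logs/ are hypothetical names.
cat > logging-config.json <<'EOF'
{
  "LoggingEnabled": {
    "TargetBucket": "my-logs-bucket",
    "TargetPrefix": "access-logs/"
  }
}
EOF

# To apply it (needs valid credentials and existing buckets):
#   aws s3api put-bucket-logging \
#     --bucket my-bucket-name \
#     --bucket-logging-status file://logging-config.json
```

The target bucket has to exist already and must allow the S3 logging service to write to it, otherwise the request is refused.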


Basic AWS S3 CLI Commands

The AWS S3 CLI offers several commands to help you manage S3 resources efficiently. The most commonly used are aws s3 ls (list buckets and objects), aws s3 mb (make a bucket), aws s3 cp (copy files), aws s3 sync (synchronize directories), aws s3 rm (remove objects), and aws s3 rb (remove a bucket).

Working with Objects in S3 Using CLI

Once you have your buckets set up, you will often need to manage the objects (files) within them. This includes uploading, downloading, deleting, and organizing objects to ensure efficient storage and retrieval. You may also need to apply access control policies to determine who can view or modify the objects. Versioning can be enabled to keep different versions of the same file, which adds a layer of protection against accidental deletions or overwrites. Monitoring the usage of your objects regularly also helps to control costs and protect the integrity of your stored data. The AWS S3 CLI covers these tasks with aws s3 cp for uploads, downloads, and bucket-to-bucket copies, aws s3 sync for keeping directories in step, and aws s3 rm for deletions.
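When a sync touches many objects, it can help to preview it first. The sketch below wraps aws s3 sync with --dryrun, which prints the planned transfers without performing them. The function name preview_sync and the AWS_BIN variable are hypothetical helpers for this example, and the *.tmp exclusion is only an illustration.

```shell
# AWS_BIN is a hypothetical override hook for this sketch;
# it defaults to the real AWS CLI binary.
AWS_BIN=${AWS_BIN:-aws}

# Preview what a sync from $1 to $2 would transfer.
preview_sync() {
  src=$1
  dest=$2
  # --dryrun shows the operations without executing them;
  # --exclude skips files that match the pattern.
  "$AWS_BIN" s3 sync "$src" "$dest" --dryrun --exclude "*.tmp"
}

# Example call (needs valid credentials and an existing bucket):
#   preview_sync ./localdir s3://my-bucket-name/
```

Once the preview looks right, running the same aws s3 sync command without --dryrun performs the transfer.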

S3 Bucket Policies and Permissions via CLI

To control access to your S3 buckets and objects, AWS provides bucket policies, ACLs (Access Control Lists), and IAM policies. To set a bucket policy, create a JSON policy file (policy.json) and run aws s3api put-bucket-policy --bucket my-bucket-name --policy file://policy.json. To view the policy attached to a bucket, use aws s3api get-bucket-policy --bucket my-bucket-name. You can apply an ACL to a bucket with aws s3api put-bucket-acl --bucket my-bucket-name --acl private, and to an individual object with aws s3api put-object-acl --bucket my-bucket-name --key myfile.txt --acl public-read. To track access requests, create a logs bucket and enable logging with aws s3api put-bucket-logging --bucket my-bucket-name --bucket-logging-status file://logging-config.json.

You can also set up Cross-Origin Resource Sharing (CORS) so that web pages served from other domains can request objects in your bucket: create a CORS configuration file (cors.json) and run aws s3api put-bucket-cors --bucket my-bucket-name --cors-configuration file://cors.json. To manage permissions for users, attach IAM policies that define granular access to S3 resources, using aws iam put-user-policy for specific users or aws iam put-group-policy for groups. For more fine-grained control, add conditions to your bucket policies, such as restricting access by IP address or time of day. To enforce encryption at rest for all objects stored in a bucket, use aws s3api put-bucket-encryption. Lastly, review and audit your bucket and object access controls regularly to confirm they still follow security best practices.
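To make the policy file concrete, here is a minimal sketch of a policy.json that allows object reads only from one IP range, using the aws:SourceIp condition key. The statement ID, the bucket name, and the 203.0.113.0/24 range (a documentation-only address block) are placeholders, not recommendations.

```shell
# Write an illustrative bucket policy with an IP-based condition.
# Sid, bucket name, and CIDR range are hypothetical values.
cat > policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowReadFromExampleRange",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::my-bucket-name/*",
      "Condition": {
        "IpAddress": { "aws:SourceIp": "203.0.113.0/24" }
      }
    }
  ]
}
EOF

# To apply it (needs valid credentials and an existing bucket):
#   aws s3api put-bucket-policy \
#     --bucket my-bucket-name \
#     --policy file://policy.json
```

A real policy would normally name specific principals and be reviewed against the bucket's Block Public Access settings before it is applied.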


Troubleshooting S3 CLI Commands

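When using the AWS S3 CLI, you may encounter errors related to permissions, connectivity, or syntax. The usual checks are IAM permissions, the bucket name and region, typos in names and paths, the CLI's own error output, and network connectivity.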
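One class of errors can be ruled out before any request is sent: a malformed bucket name. The function below is a rough sketch that checks only the basic naming rules (3 to 63 characters, lowercase letters, digits, dots, and hyphens, starting and ending with a letter or digit). S3 enforces further rules that it does not cover, and the name is_plausible_bucket_name is made up for this example.

```shell
# Return 0 if the name passes the basic S3 naming rules, 1 otherwise.
# This is a partial check, not a full validation.
is_plausible_bucket_name() {
  name=$1
  # First and last character: lowercase letter or digit.
  # Middle (1 to 61 characters): lowercase letters, digits, dots, hyphens.
  printf '%s\n' "$name" | grep -Eq '^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$'
}

# Example: report the result for a name used in this article.
if is_plausible_bucket_name "my-bucket-name"; then
  echo "name looks plausible"
else
  echo "name breaks a basic naming rule"
fi
```

A check like this catches uppercase letters and underscores early, which are easy to miss when a name is typed by hand.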

Advanced S3 CLI Features

Beyond basic operations, the AWS CLI offers advanced S3 functionality for automating large-scale data storage. One such feature is S3 Batch Operations, which performs an action on many objects in a single job, making it suited to large datasets where issuing individual commands would be inefficient. Batch jobs can copy objects, tag them, or update their access control. For example, you can create a job that tags objects using the aws s3control create-job command. To control costs, you can use object lifecycle policies to move objects to Amazon S3 Glacier for long-term archival storage; these policies are configured with the aws s3api put-bucket-lifecycle-configuration command. You can also set up event notifications to react in real time when objects are created, removed, or otherwise changed. With these features, AWS S3 becomes a flexible and scalable solution for managing vast amounts of data.
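A lifecycle policy of the kind described above might look like the following sketch, which moves objects under one prefix to Glacier after 30 days and deletes them after a year. The rule ID, the logs/ prefix, and both day counts are illustrative assumptions.

```shell
# Write an illustrative lifecycle configuration.
# Rule ID, prefix, and day counts are hypothetical values.
cat > lifecycle.json <<'EOF'
{
  "Rules": [
    {
      "ID": "archive-then-expire-logs",
      "Status": "Enabled",
      "Filter": { "Prefix": "logs/" },
      "Transitions": [
        { "Days": 30, "StorageClass": "GLACIER" }
      ],
      "Expiration": { "Days": 365 }
    }
  ]
}
EOF

# To apply it (needs valid credentials and an existing bucket):
#   aws s3api put-bucket-lifecycle-configuration \
#     --bucket my-bucket-name \
#     --lifecycle-configuration file://lifecycle.json
```

Applying a new lifecycle configuration replaces the one already on the bucket, so existing rules need to be included in the file if they should stay in force.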


Security Best Practices for S3 CLI Usage

Securing AWS S3 resources is critical to preventing unauthorized access and data breaches. The CLI enables efficient security management through encryption, access control, and monitoring.
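As one example on the encryption side, default bucket encryption is set with a short JSON document passed to put-bucket-encryption. The sketch below uses SSE-S3 (the AES256 algorithm); the file name encryption.json is an arbitrary choice for this example.

```shell
# Write a default-encryption configuration that uses SSE-S3.
# For SSE-KMS, the algorithm value would be "aws:kms" instead.
cat > encryption.json <<'EOF'
{
  "Rules": [
    {
      "ApplyServerSideEncryptionByDefault": {
        "SSEAlgorithm": "AES256"
      }
    }
  ]
}
EOF

# To apply it (needs valid credentials and an existing bucket):
#   aws s3api put-bucket-encryption \
#     --bucket my-bucket-name \
#     --server-side-encryption-configuration file://encryption.json
```

Running aws s3api get-bucket-encryption --bucket my-bucket-name afterwards shows the configuration that is in effect.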

Conclusion

The AWS S3 CLI is an essential tool for developers, system administrators, and DevOps engineers who manage cloud storage resources. With it, you can carry out a wide range of operations, from basic file transfers to advanced automation and security configuration. Because it is scriptable, it integrates well with CI/CD pipelines, backup solutions, and large-scale data processing workflows. Beyond simple file management, the CLI gives you control over bucket policies, access control lists (ACLs), and versioning, providing fine-grained control over data security and lifecycle management. Advanced features such as batch processing, encryption, and lifecycle policies help organizations optimize storage costs while maintaining compliance and data integrity.