- Introduction to Docker

- Docker Architecture

- Docker Images and Containers

- Docker vs Virtual Machines

- Creating Docker Containers

- Docker Use Cases

- Docker Best Practices

- Conclusion

Introduction to Docker

Docker is an open-source platform for building, shipping, and running applications in lightweight, portable containers. It lets developers package an application and its dependencies into a container that runs consistently across development, testing, and production environments, so the application behaves the same way regardless of where it is deployed. Docker containers are isolated and fast to launch, which makes it easier to manage applications, scale resources, and support CI/CD (Continuous Integration/Continuous Deployment) processes. By reducing compatibility issues between environments, Docker improves development efficiency, shortens deployment time, and minimizes system conflicts. It also suits microservices architecture, allowing developers to break applications into smaller, manageable services that communicate with each other. With Docker Compose for multi-container applications and Docker Swarm for orchestration, Docker supports containerized application deployment on both cloud and on-premises infrastructure. Images built with Docker also run on Kubernetes, which provides container management, automated scaling, and fault tolerance for enterprise-grade applications.

Docker Architecture

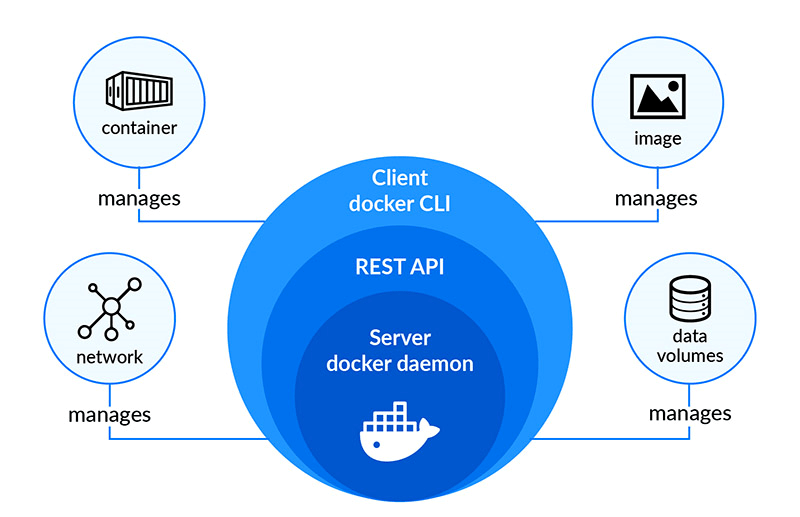

Docker’s architecture is designed to provide a consistent and lightweight environment for running applications. Here’s an overview of its components:

-

Docker Engine:

- Docker Daemon (dockerd): The background service that manages containers, images, networks, and volumes. Complete Guide to Docker and AWS ECS listens for Docker API requests and handles container operations like building, running, and managing images.

- Docker CLI (Command Line Interface): The command-line tool that allows users to interact with the Docker daemon. It enables developers to run commands such as docker build, docker run, and docker ps to manage containers and images.

- Docker API: The REST API that the Docker CLI uses to interact with the Docker daemon. Docker Images:

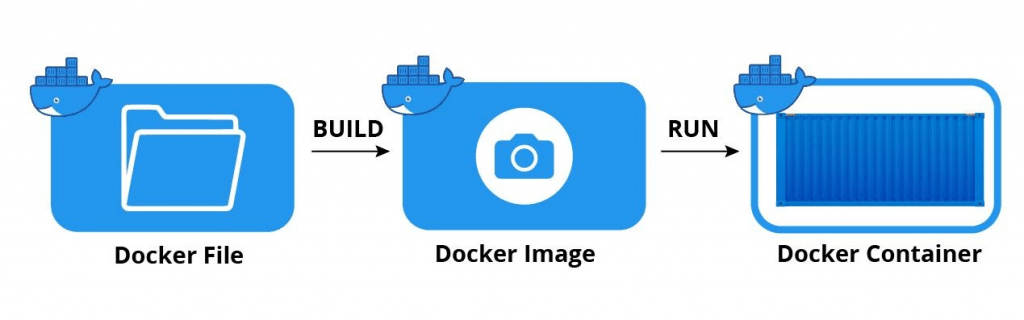

- A Docker image is a read-only template used to create containers. It includes the application code, libraries, dependencies, and runtime. Images are built from a Dockerfile, which defines the steps to build the image.

The Docker Engine is the core component of Docker, consisting of:

-

Docker Containers:

- Containers are lightweight, portable execution environments created from Docker images. Containers run as isolated processes and share the same kernel as the host system, making them more efficient than virtual machines. Docker Registry:

- A Docker registry is a centralized repository for storing Docker images. The most common registry is Docker Containers on AWS, but users can also set up private registries. Registries allow users to share and distribute images. Docker Volumes:

- Volumes are used for persistent storage in Docker. While containers are ephemeral and can be deleted, volumes allow data to persist between container restarts and are independent of the container lifecycle.
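To make the client/daemon split concrete, the sketch below asks the daemon for the same information two ways: through the CLI and directly through the Engine's REST API. It assumes a Linux host where the daemon listens on the default Unix socket /var/run/docker.sock and that curl is installed; the helper name docker_api is made up for this example.

```shell
#!/bin/sh
# Default socket path on Linux; override DOCKER_SOCKET if your setup differs (assumption).
DOCKER_SOCKET="${DOCKER_SOCKET:-/var/run/docker.sock}"

# Hypothetical helper: send a GET request to the Docker Engine API over the Unix socket.
docker_api() {
  curl --silent --unix-socket "$DOCKER_SOCKET" "http://localhost$1"
}

if [ -S "$DOCKER_SOCKET" ] && docker info >/dev/null 2>&1; then
  # Via the CLI: the client turns this into an API request to dockerd.
  docker version --format '{{.Server.Version}}'
  # Via the API directly: the same version information, returned as JSON.
  docker_api /version
  # Roughly what `docker ps` asks for: the list of running containers.
  docker_api /containers/json
else
  echo "Docker daemon not reachable at $DOCKER_SOCKET; skipping API calls."
fi
```

The guard around the calls keeps the script harmless on machines without a running daemon.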

Docker Images and Containers

Docker images serve as blueprints for applications, containing everything needed to run them: application code, libraries, system tools, and configuration. Images are built from a Dockerfile, which defines the step-by-step instructions for creating them; they are read-only and act as templates for launching containers. An image consists of multiple layers, with each layer representing a change, such as installing a package or copying files. Base images, like Alpine or Ubuntu, provide the foundational layer for building customized images. Images are usually tagged to represent different versions, such as myapp:v1 or myapp:latest, which makes version management easier. A Docker container, on the other hand, is a running instance of a Docker image, executing an application in an isolated environment. Containers ensure portability, allowing applications to run consistently across environments, from development to production. Containers are isolated from each other and from the host system, with separate filesystems, networking, and process trees. By default, containers are ephemeral: data written inside a container is lost when the container is removed unless persistent storage is configured using volumes. While containers are typically stateless and designed to process workloads without retaining data, they can be made stateful by connecting to external databases or persistent storage solutions.
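The difference between a container's own writable layer and a volume can be checked with a short experiment. This is a sketch, assuming a running Docker daemon and access to the public alpine image; the names scratch-demo and appdata-demo and the file paths are arbitrary choices for illustration.

```shell
#!/bin/sh
VOLUME_NAME="appdata-demo"   # hypothetical volume name used only in this example

if docker info >/dev/null 2>&1; then
  # Show the read-only layers that make up the image.
  docker pull alpine
  docker image history alpine

  # 1. Write a file into a container's own writable layer, then remove the container.
  docker run --name scratch-demo alpine sh -c 'echo hello > /tmp/msg.txt'
  docker rm scratch-demo     # the writable layer, and msg.txt with it, is gone

  # 2. Write the same content into a named volume instead.
  docker volume create "$VOLUME_NAME"
  docker run --rm -v "$VOLUME_NAME":/data alpine sh -c 'echo hello > /data/msg.txt'

  # 3. A brand-new container can still read it, because the volume outlives containers.
  SAVED=$(docker run --rm -v "$VOLUME_NAME":/data alpine cat /data/msg.txt)
  echo "read back from volume: $SAVED"

  # Clean up the demo volume.
  docker volume rm "$VOLUME_NAME"
else
  echo "Docker daemon not reachable; skipping demo."
fi
```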

Docker vs Virtual Machines

While both Docker containers and virtual machines (VMs) enable isolation and resource allocation, they differ significantly in their architecture and use cases.

| Aspect | Docker Containers | Virtual Machines |
|---|---|---|
| Isolation Level | Isolate at the application level (shared OS kernel) | Isolate at the hardware level (separate OS) |
| Resource Overhead | Lightweight, minimal overhead | Heavyweight, requires more resources (OS, kernel) |
| Startup Time | Fast, typically seconds | Slower, takes minutes to start |
| Portability | Highly portable across environments | Less portable due to dependencies on OS |
| Use Cases | Ideal for microservices, CI/CD, development | Ideal for running full OS instances and legacy apps |
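
One way to observe the shared-kernel row of the table is to compare kernel versions. The sketch below assumes a Linux host with a running Docker daemon and access to the public alpine image. On Docker Desktop for macOS or Windows, containers run inside a Linux VM, so the two values will differ there.

```shell
#!/bin/sh
# Kernel release reported by the host.
HOST_KERNEL=$(uname -r)
echo "host kernel:      $HOST_KERNEL"

if docker info >/dev/null 2>&1; then
  # Kernel release seen from inside a container. A container has no guest
  # kernel of its own, so on a Linux host this is the host's kernel.
  CONTAINER_KERNEL=$(docker run --rm alpine uname -r)
  echo "container kernel: $CONTAINER_KERNEL"
else
  echo "Docker daemon not reachable; skipping container check."
fi
```

A virtual machine running the same command would report whatever kernel its guest OS ships, independent of the host.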

Creating Docker Containers

To create and manage Docker containers, you follow a few basic steps:

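Create a Dockerfile:

A Dockerfile is a text file that contains a series of instructions to build a Docker image. Instructions that change the filesystem, such as RUN, COPY, and ADD, each create a new layer in the image.

Example Dockerfile:

    # Start from the official Ubuntu base image
    FROM ubuntu:latest
    # Install Python 3 (-y answers the install prompt automatically)
    RUN apt-get update && apt-get install -y python3
    # Copy the build context into /app inside the image
    COPY . /app
    # Default command executed when a container starts
    CMD ["python3", "/app/app.py"]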

Build the Image:

Use the docker build command to create an image from the Dockerfile. The trailing dot sets the build context to the current directory:

    docker build -t myapp .

Run the Container:

Use the docker run command to start a container from the built image:

    docker run -d --name myapp-container myapp

This starts the container in detached mode (-d) with the name myapp-container.

Managing Containers:

To list running containers (add -a to include stopped ones):

    docker ps

To stop a running container:

    docker stop myapp-container

To remove a stopped container:

    docker rm myapp-container
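
Putting the steps together, the following script is a minimal end-to-end sketch. It assumes a running Docker daemon and network access to pull the base image. The article does not show app.py, so the one-line script here is a placeholder invented for the example.

```shell
#!/bin/sh
# Work in a throwaway directory so the build context contains only the demo files.
WORKDIR=$(mktemp -d)
cd "$WORKDIR" || exit 1

# Placeholder application (assumption: not part of the original walkthrough).
cat > app.py <<'EOF'
print("hello from the container")
EOF

# The Dockerfile from the walkthrough above.
cat > Dockerfile <<'EOF'
FROM ubuntu:latest
RUN apt-get update && apt-get install -y python3
COPY . /app
CMD ["python3", "/app/app.py"]
EOF

if docker info >/dev/null 2>&1; then
  docker build -t myapp .                      # build the image from the current directory
  docker run -d --name myapp-container myapp   # start a container in the background
  docker wait myapp-container                  # block until the script inside exits
  docker logs myapp-container                  # show what the application printed
  docker rm myapp-container                    # remove the stopped container
else
  echo "Docker daemon not reachable; files written to $WORKDIR"
fi
```

Because the placeholder application exits immediately, the script uses docker wait and docker logs rather than docker stop; a long-running service would be stopped first, as described above.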

Docker Use Cases

Docker supports a range of use cases across development, testing, and deployment. It is particularly well suited to microservices architecture, where each microservice runs in its own container, giving isolation, scalability, and simpler management for distributed applications. This modular approach allows teams to develop, deploy, and scale individual services independently. In Continuous Integration/Continuous Deployment (CI/CD), Docker provides consistent, isolated environments for building, testing, and deploying applications. Developers can use Docker containers in CI/CD pipelines so that applications behave the same way at every stage, from development to production. Docker also simplifies development and testing by letting developers create isolated environments for trying out new features or maintaining several versions of an application side by side. Because a container carries its own runtime environment, most compatibility issues between development machines and deployment servers disappear. For cloud and hybrid deployments, Docker’s portability eases migration between cloud providers and on-premises infrastructure, and orchestration tools like Kubernetes manage containerized applications at scale. Finally, Docker supports legacy application modernization: containerizing an older application lets it run on modern infrastructure without extensive code modifications, which helps organizations extend the lifespan of legacy software while adopting cloud-native technologies.
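
As an illustration of the microservices use case, a Docker Compose file can describe several cooperating containers in one place. The sketch below is hypothetical: the service names web and cache, the project name compose-demo, the port mapping, and the choice of the nginx and redis images are assumptions made for the example. It also assumes the Compose v2 plugin (docker compose) is installed.

```shell
#!/bin/sh
COMPOSE_DIR=$(mktemp -d)
COMPOSE_FILE="$COMPOSE_DIR/compose.yaml"

# Two independent services, each running in its own container.
cat > "$COMPOSE_FILE" <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"      # host port 8080 -> container port 80
    depends_on:
      - cache          # start the cache service before web
  cache:
    image: redis:alpine
EOF

if docker compose version >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  docker compose -p compose-demo -f "$COMPOSE_FILE" up -d   # start both services
  docker compose -p compose-demo -f "$COMPOSE_FILE" ps      # list their containers
  docker compose -p compose-demo -f "$COMPOSE_FILE" down    # stop and remove them
else
  echo "Docker Compose not available; file written to $COMPOSE_FILE"
fi
```

Each service can be rebuilt, scaled, or replaced without touching the other, which is the independence the paragraph above describes.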

Docker Best Practices

- Use Official Images: When possible, use official or trusted base images (e.g., ubuntu, node, nginx) from Docker Hub. Official images are curated and regularly updated, usually in cooperation with the upstream project maintainers.

- Keep Dockerfiles Lean: Reduce the size of your Docker images by minimizing the number of layers and the size of the base image. Use multi-stage builds to keep build-time dependencies out of production images.

- Use .dockerignore: Just as .gitignore works for Git, .dockerignore prevents unnecessary files (like local configuration or build files) from being included in the Docker image.

- Label Your Images: Use labels to provide metadata about your Docker images (e.g., version, description, maintainer). This makes it easier to track and manage images.

- Limit Container Privileges: Avoid running containers with unnecessary privileges. Run containers with the least privileged access required for them to function.

- Use Volumes for Persistent Data: Store persistent data outside of containers using Docker volumes. This ensures that data is not lost when a container is removed or recreated.

- Monitor Containers: Use monitoring tools like Prometheus, Grafana, or Docker’s built-in metrics to track the performance of containers and identify potential issues.

- Security Best Practices: Use trusted base images and keep them up to date. Scan images for vulnerabilities before deployment. Avoid running containers as root unless absolutely necessary.
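
Several of these practices can be combined in one build. The script below is a sketch under assumptions: the tiny Go program, the golang:1.22 and alpine:3.20 base images, the label values, the image name hello-demo, and the user name app are all placeholders chosen for illustration. It writes a .dockerignore file and a multi-stage Dockerfile that labels the image and switches to a non-root user, then builds and runs the image if a Docker daemon is reachable.

```shell
#!/bin/sh
BUILD_DIR=$(mktemp -d)
cd "$BUILD_DIR" || exit 1

# Placeholder program to compile (hypothetical).
cat > main.go <<'EOF'
package main

import "fmt"

func main() { fmt.Println("hello") }
EOF

# Keep local clutter and secrets out of the build context.
cat > .dockerignore <<'EOF'
.git
*.log
.env
EOF

cat > Dockerfile <<'EOF'
# Stage 1: full toolchain, used only to compile
FROM golang:1.22 AS build
WORKDIR /src
COPY main.go .
RUN CGO_ENABLED=0 go build -o /out/hello main.go

# Stage 2: small runtime image that receives only the binary
FROM alpine:3.20
LABEL org.opencontainers.image.title="hello-demo" \
      org.opencontainers.image.version="0.1.0"
RUN adduser -D app
USER app
COPY --from=build /out/hello /usr/local/bin/hello
CMD ["hello"]
EOF

if docker info >/dev/null 2>&1; then
  docker build -t hello-demo .
  # Run with a read-only root filesystem and all Linux capabilities dropped.
  docker run --rm --read-only --cap-drop ALL hello-demo
  # One-shot resource snapshot of any running containers.
  docker stats --no-stream
else
  echo "Docker daemon not reachable; files written to $BUILD_DIR"
fi
```

The final image contains the compiled binary but not the Go toolchain, which is the point of the multi-stage build.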

Conclusion

Docker containers have changed application development and deployment by providing a lightweight, portable, and consistent environment across platforms. By enabling microservices architecture, streamlining CI/CD pipelines, and simplifying development and testing, Docker supports efficiency, scalability, and faster software delivery. Docker’s portability makes it well suited to cloud and hybrid deployments, easing application migration and management. Docker also plays an important role in modernizing legacy applications, enabling businesses to move to modern infrastructure without extensive rework. With its efficient resource utilization, process isolation, and integration with orchestration tools like Kubernetes, Docker has become an essential tool in modern DevOps and cloud computing. As containerization continues to evolve, Docker is likely to remain a key technology for building, deploying, and managing scalable applications.